Author: Denis Avetisyan

New research reveals software engineers prioritize practical functionality over emotional intelligence when working with AI collaborators.

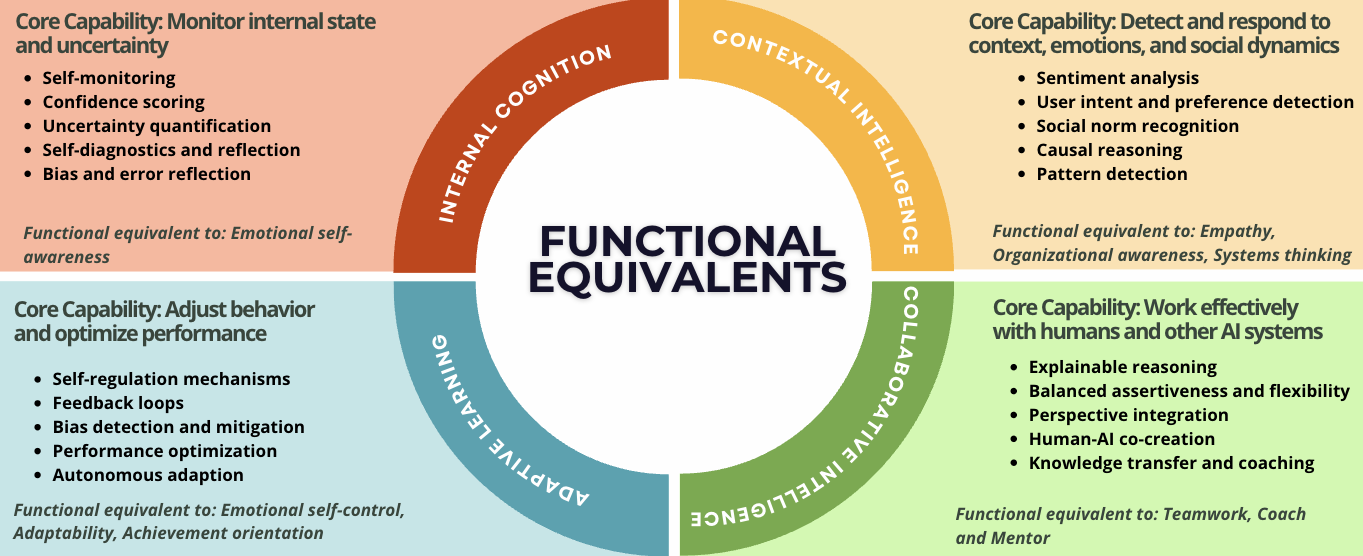

This review demonstrates that engineers seek functional equivalents of socio-emotional intelligence in AI, focusing on technical capabilities that achieve similar collaborative outcomes in software development.

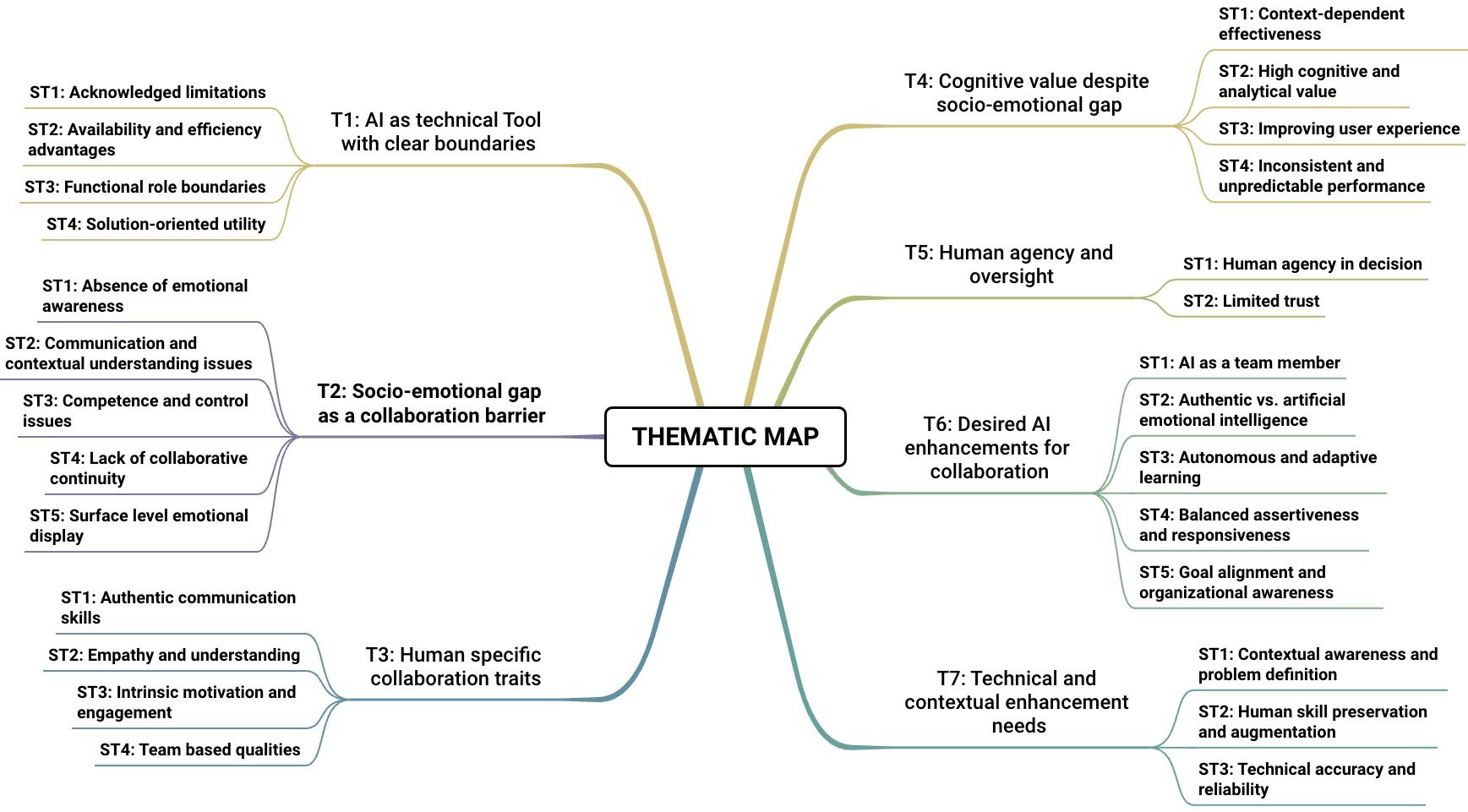

While effective teamwork hinges on socio-emotional intelligence, its relevance in human-AI collaboration remains unclear, particularly as generative AI increasingly supports software engineering tasks. This study, ‘Bridging the Socio-Emotional Gap: The Functional Dimension of Human-AI Collaboration for Software Engineering’, investigates how software practitioners perceive this gap and what capabilities AI requires for truly effective collaboration. Findings reveal that practitioners prioritize functional equivalents – technical skills like contextual intelligence and adaptive learning – over replicating human socio-emotional traits in AI teammates, viewing these as crucial for achieving comparable collaborative outcomes. Does this suggest a shift towards redefining collaboration not as emotional resonance, but as functional alignment between human and artificial intelligence?

The Architecture of Collaboration: Bridging the Human-AI Divide

Truly effective teamwork relies on a constant, often subconscious, exchange of information in which individuals anticipate each other’s needs and adjust their actions accordingly; replicating this dynamic with artificial intelligence, however, presents significant hurdles. Unlike human teammates, who draw on shared experience, emotional intelligence, and common ground to predict intentions, current AI systems typically operate on explicitly programmed parameters and data analysis. Closing this gap requires a shift from simply assigning tasks to building AI that can infer collaborative goals, understand implicit cues, and proactively offer assistance. That level of contextual awareness demands not just more computational power but a fundamentally different approach to designing intelligent systems for shared endeavors.

Conventional software development has historically centered on completing specific tasks, often neglecting the subtleties of genuine collaborative interaction. This emphasis on functionality, while effective for standalone applications, is a significant hurdle to building synergistic human-AI partnerships. Current practice frequently treats AI as a tool that executes instructions rather than as a partner able to understand intent, anticipate needs, or adapt to the fluid dynamics of a team. The result is a gap between technical capability and collaboration: AI can perform tasks but lacks the nuanced understanding needed to integrate seamlessly into human workflows, which can lead to inefficiencies and keep it from realizing its potential as a collaborative agent.

True human-AI collaboration transcends mere automation; impactful partnerships demand artificial intelligence capable of discerning context and responding to evolving situations. Current AI systems frequently excel at pre-defined tasks, but struggle when faced with ambiguity or unexpected changes in workflow. To unlock the full potential of these technologies, researchers are focusing on imbuing AI with the ability to model team dynamics, anticipate the needs of human colleagues, and adjust its behavior accordingly – essentially moving beyond task execution to become a truly adaptive and supportive team member. This necessitates advancements in areas like common-ground reasoning, shared intentionality, and the ability to infer unstated goals, fostering a synergistic relationship where the combined capabilities of humans and AI surpass those of either entity alone.

The Foundation of Team Performance: Socio-Emotional Intelligence

While technical expertise remains crucial, high-performing teams increasingly rely on socio-emotional intelligence (SEI) as a key differentiator. SEI encompasses the ability to perceive, understand, manage, and utilize emotions, both in oneself and in others. Research indicates a strong correlation between collective SEI and improved team outcomes, including enhanced communication, increased trust, and greater adaptability to challenges. Teams with high SEI demonstrate superior problem-solving capabilities, exhibit more constructive conflict resolution, and foster a more positive and productive work environment. Consequently, organizations are prioritizing the development of SEI alongside traditional skill sets to maximize team effectiveness and overall organizational performance.

The Emotional and Social Competency Inventory (ESCI) is a validated assessment tool used to measure emotional intelligence in the workplace. It evaluates 12 competencies grouped into four clusters: self-awareness, self-management, social awareness, and relationship management. Each competency is assessed through items describing specific, observable behaviors, giving a granular picture of strengths and areas for development. The ESCI uses a 360-degree process, gathering ratings from supervisors, peers, subordinates, and the individual, to build a comprehensive profile. Scores are norm-referenced, which lets organizations benchmark individual and team competencies against a large comparison database and look for correlations between ESCI scores and key performance indicators.
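The two mechanics mentioned above, pooling 360-degree ratings and norm-referenced scoring, can be illustrated with a small sketch. This is not the ESCI's actual scoring procedure, whose norms and item weights are proprietary; the rater groups, the 1-5 scale, the `others` aggregate, and the norm sample below are all hypothetical, and the percentile rank is a generic formula (ties counted as half).

```python
from bisect import bisect_left, bisect_right
from statistics import mean


def aggregate_360(ratings: dict[str, list[float]]) -> dict[str, float]:
    """Average ratings per rater group and add an 'others' score that excludes self."""
    summary = {group: mean(scores) for group, scores in ratings.items() if scores}
    others = [s for group, scores in ratings.items() if group != "self" for s in scores]
    if others:
        summary["others"] = mean(others)
    return summary


def percentile_rank(score: float, norm_sample: list[float]) -> float:
    """Share of a norm sample scoring below `score` (ties count half), as a percentage."""
    if not norm_sample:
        raise ValueError("norm_sample must not be empty")
    ordered = sorted(norm_sample)
    below = bisect_left(ordered, score)
    ties = bisect_right(ordered, score) - below
    return 100.0 * (below + 0.5 * ties) / len(ordered)


# Hypothetical ratings for one competency on a 1-5 scale (not real ESCI data).
ratings = {"self": [4.0], "supervisor": [3.5], "peer": [3.0, 4.0], "subordinate": [4.5]}
# Hypothetical norm sample of 'others' scores for the same competency.
norm = [2.5, 3.0, 3.25, 3.5, 3.75, 4.0, 4.25, 4.5]

summary = aggregate_360(ratings)
print(summary["others"], percentile_rank(summary["others"], norm))
```

Here the pooled rating from others is 3.75, which sits at the 56.25th percentile of the hypothetical norm sample, while the self-rating of 4.0 is higher: the kind of self-other gap a 360-degree report is designed to surface.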

Socio-emotional competencies demonstrably influence key performance indicators within teams. Research indicates a strong correlation between high scores in areas like empathy, self-awareness, and social skills – as measured by frameworks like the ESCI – and improved team outcomes. Specifically, teams with members possessing strong socio-emotional intelligence exhibit enhanced innovation rates, measured by the number of successfully implemented novel ideas, and greater resilience in the face of challenges, indicated by faster recovery from setbacks and reduced attrition rates. These competencies facilitate more effective conflict resolution, improved communication, and increased trust, all of which are critical for collaborative success and directly impact organizational performance metrics.

Functional Equivalence: A Pragmatic Approach to AI Collaboration

Functional equivalence in AI team collaboration prioritizes results comparable to those of human teamwork rather than attempting to replicate the complexities of human emotion. This approach centers on identifying the effects of emotional intelligence, such as proactive assistance, conflict resolution, and efficient information sharing, and implementing those functions through algorithms and data analysis. The goal is not to create AI that feels empathy, but to develop systems whose behaviors lead to similar positive outcomes in team performance, such as higher productivity, better decision-making, and stronger team cohesion. This is accomplished by focusing on observable collaborative behaviors and translating them into quantifiable metrics and actionable AI functions.

AI systems are being developed with adaptive learning capabilities to interpret collaborative patterns within teams. These systems utilize data analysis of communication channels – including text, audio, and project management tools – to identify individual roles, interaction frequencies, and potential roadblocks. By establishing a baseline of normal team function, the AI can then anticipate needs such as resource allocation, task prioritization, or conflict resolution. Proactive support manifests as automated suggestions, information delivery, or the facilitation of communication, effectively mirroring aspects of emotional intelligence like empathy and support without requiring genuine emotional understanding. This approach allows AI to contribute to team cohesion and productivity by addressing issues before they escalate, based on observed behavioral data.
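As a rough illustration of "establishing a baseline of normal team function" and acting on deviations, the sketch below summarizes each pair of teammates' past weekly interaction counts by mean and standard deviation, then flags pairs whose current count drops far below that baseline (a possible roadblock worth a nudge). The team members, the counts, the choice of weekly message counts as the signal, and the z-score threshold of -2.0 are all hypothetical; the paper does not prescribe this method.

```python
from statistics import mean, stdev

Pair = tuple[str, str]


def build_baseline(history: dict[Pair, list[int]]) -> dict[Pair, tuple[float, float]]:
    """Mean and sample standard deviation of past weekly interaction counts per pair."""
    return {
        pair: (mean(counts), stdev(counts))
        for pair, counts in history.items()
        if len(counts) >= 2  # stdev needs at least two observations
    }


def flag_drops(
    baseline: dict[Pair, tuple[float, float]],
    current: dict[Pair, int],
    z_threshold: float = -2.0,
) -> list[Pair]:
    """Return pairs whose current count falls unusually far below their baseline."""
    flagged = []
    for pair, (mu, sigma) in baseline.items():
        observed = current.get(pair, 0)  # no recorded interaction counts as zero
        if sigma == 0:
            # Perfectly stable history: any drop at all is a deviation.
            if observed < mu:
                flagged.append(pair)
            continue
        if (observed - mu) / sigma <= z_threshold:
            flagged.append(pair)
    return sorted(flagged)


# Hypothetical weekly message counts between teammates.
history = {
    ("ana", "ben"): [20, 22, 18, 21],
    ("ana", "chen"): [5, 6, 4, 5],
    ("ben", "chen"): [10, 10, 10, 10],
}
current = {("ana", "ben"): 19, ("ana", "chen"): 0, ("ben", "chen"): 10}

print(flag_drops(build_baseline(history), current))
```

Only `("ana", "chen")` is flagged: their exchange fell from about five messages a week to none, whereas the small dip between Ana and Ben stays within normal variation. A real system would need richer signals than message volume, but the baseline-then-deviation structure is the same.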

The successful integration of AI into collaborative teams necessitates transparent reasoning and explainable AI (XAI) to foster trust and acceptance among human members. XAI facilitates comprehension of the AI’s decision-making processes, moving beyond “black box” functionality to reveal the data and logic driving its contributions. This transparency is achieved through techniques like feature importance ranking, rule extraction, and the provision of supporting evidence for AI-generated suggestions or actions. Without understanding why an AI system proposes a solution or makes a recommendation, team members are less likely to adopt its input, potentially leading to underutilization of the AI’s capabilities and hindering overall team performance. Furthermore, clear explanations are essential for identifying and correcting potential biases or errors in the AI’s reasoning, ensuring accountability and maintaining the integrity of the collaborative process.
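Feature importance ranking, one of the XAI techniques named above, is easiest to see on a linear model, where each feature's contribution to a suggestion is exactly its weight times its value. The sketch below scores a hypothetical "ask this engineer to review this pull request" suggestion and returns the contributions ranked by absolute effect as supporting evidence. The model, feature names, and weights are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    score: float
    # (feature name, weight * value) pairs, largest absolute effect first
    contributions: list[tuple[str, float]]


def explain_linear(
    weights: dict[str, float], features: dict[str, float], bias: float = 0.0
) -> Explanation:
    """Score a suggestion with a linear model and rank each feature's contribution."""
    contributions = [(name, w * features.get(name, 0.0)) for name, w in weights.items()]
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    score = bias + sum(value for _, value in contributions)
    return Explanation(score=score, contributions=contributions)


# Hypothetical model for "should this engineer review this pull request?"
weights = {"files_touched_before": 0.8, "open_reviews": -0.5, "same_team": 0.3}
features = {"files_touched_before": 3, "open_reviews": 4, "same_team": 1}

result = explain_linear(weights, features)
print(f"score = {result.score:.2f}")
for name, value in result.contributions:
    print(f"  {name:>22}: {value:+.2f}")
```

The ranking puts `files_touched_before` first (+2.40) and `open_reviews` second (-2.00), so a teammate can see that prior familiarity with the code argues for the suggestion while the reviewer's current load argues against it. This exact decomposition holds only for linear models; for nonlinear models, methods such as permutation importance or SHAP approximate it.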

The Architecture of Trust: Fostering Synergy in Human-AI Teams

Establishing confidence in artificial intelligence extends far beyond simply achieving correct answers; it hinges on a foundation of predictable behavior, lucid explanations, and proven dependability. While technical accuracy is undoubtedly important, users require assurance that an AI system will consistently perform as expected, offering insights into how conclusions are reached, not just what those conclusions are. This demand for transparency stems from a fundamental need to understand the reasoning behind AI-driven decisions, fostering a sense of control and allowing for appropriate calibration of trust. Demonstrable reliability, evidenced through consistent performance across varied inputs and conditions, solidifies this trust, transforming AI from a potentially opaque tool into a predictable and dependable partner.

The perception of artificial intelligence as an inscrutable ‘black box’ hinders its integration into collaborative environments; however, prioritizing explainability and transparency offers a pathway towards establishing trust and fostering synergy. When the reasoning behind an AI’s decisions is readily understandable, users are better equipped to assess its reliability and effectively integrate its outputs into their own workflows. This shift moves AI from being a mere tool to a trusted collaborator, enabling teams to leverage its capabilities with confidence and achieve outcomes exceeding those possible through individual effort. By illuminating the ‘how’ and ‘why’ behind its actions, AI can inspire not just acceptance, but genuine partnership, ultimately amplifying human potential and driving innovation.

The integration of artificial intelligence into collaborative workflows necessitates a fundamental reimagining of its role – not as a substitute for human expertise, but as a powerful extension of it. Recent research highlights this shift, identifying a framework of ‘functional equivalents’ for socio-emotional intelligence within AI systems. This work demonstrates that software practitioners, those building these systems, prioritize capabilities in AI that mirror the collaborative benefits of human traits like empathy and nuanced communication. Rather than replicating these traits directly, the focus is on achieving equivalent outcomes – fostering trust, managing conflict, and ensuring shared understanding within a team. This suggests a future where humans and AI don’t simply coexist, but synergistically amplify each other’s strengths, with AI handling complex data processing and humans providing critical thinking, creativity, and ethical oversight.

The research highlights a pragmatic approach to human-AI collaboration, revealing that software engineers value demonstrable functionality over simulated emotional responses. This aligns with Marvin Minsky’s observation that “The more we understand about intelligence, the more we realize how little we know.” The study demonstrates that engineers seek ‘functional equivalents’ – AI capabilities mirroring collaborative benefits, not replicating human socio-emotional intelligence. This isn’t a dismissal of the importance of human connection, but rather a recognition that in the context of software engineering, a reliable, technically proficient collaborator is prioritized. The focus shifts from being human-like to acting effectively, a testament to prioritizing system behavior over superficial resemblance. Good architecture is invisible until it breaks, and only then is the true cost of decisions visible.

Beyond Mimicry

The pursuit of socio-emotional intelligence in artificial intelligence, as this work suggests, may be fundamentally misdirected. The emphasis on functional equivalents (technical capabilities that produce the outcomes of good collaboration) reveals a pragmatic prioritization. It raises the question: what are these systems actually optimizing for? Not necessarily seamless human-computer rapport, but efficient task completion. This is not to dismiss the value of user experience, but to suggest that the ‘soft skills’ of AI are, at their core, another layer of optimized functionality.

Future research should move beyond attempting to replicate human interaction and focus instead on delineating the minimal set of behaviors that elicit desired responses in a collaborative setting. Simplicity is not minimalism, but the discipline of distinguishing the essential from the accidental. A deeper understanding of contextual intelligence (how AI adapts to the nuances of a specific task and engineer) will prove more valuable than broad attempts at emotional mimicry.

Ultimately, the success of human-AI collaboration in software engineering, and beyond, hinges not on creating artificial people, but on designing systems that understand and respond to the underlying structure of effective teamwork. The goal is not to build a colleague, but to build a tool that understands the task, the team, and how to facilitate progress.

Original article: https://arxiv.org/pdf/2601.19387.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 04:58