Author: Denis Avetisyan

New research reveals that the benefits of using visual models in software requirements inspection aren’t universal, and depend on individual cognitive strengths.

![Figure 1: Cognitive Tasks. The study illuminates how cognitive tasks, encompassing processes like planning, reasoning, and learning, are not isolated modules but rather interconnected functions that dynamically interact to facilitate complex problem-solving.](https://arxiv.org/html/2601.16009v1/images/OSPAN.png)

The effectiveness of UML diagrams versus textual representations is significantly influenced by the interplay between working memory, mental rotation abilities, and cognitive load during requirements inspection.

Despite widespread adoption of visual representations in software engineering, it remains unclear whether diagrams consistently enhance requirements inspection accuracy. This research, ‘The Role of Cognitive Abilities in Requirements Inspection: Comparing UML and Textual Representations’, investigates how cognitive abilities, specifically working memory and mental rotation, influence the effectiveness of UML sequence diagrams presented alongside textual requirements. The study reveals a complex interplay between representation type and cognitive skills: individuals with high working memory and mental rotation capacities do not universally benefit from UML support, showing reduced violation detection but improved justification accuracy. Does this suggest that multi-modal information requires nuanced cognitive processing, and how can we better tailor requirements representations to individual cognitive profiles?

The Illusion of Rigor: Why Requirements Inspection Fails

The pursuit of robust software fundamentally relies on the early detection of flaws, yet conventional requirements inspection frequently suffers from inconsistencies and a degree of subjectivity. While intended to rigorously examine specifications for ambiguity, completeness, and correctness, these inspections are often influenced by individual inspector biases and varying interpretations of the requirements themselves. This lack of standardized evaluation can lead to critical defects slipping through the process, ultimately increasing development costs and potentially compromising the final product. Consequently, organizations are continually seeking methods to enhance the reliability and objectivity of requirements inspection, moving beyond purely manual reviews toward more structured and quantifiable approaches.

The efficacy of requirements inspection, a cornerstone of software quality assurance, is increasingly challenged by the inherent limits of human cognition when confronted with escalating complexity. Modern requirements specifications are no longer simple statements; they are dense, interconnected documents often incorporating multiple viewpoints, intricate business rules, and evolving user stories. The resulting cognitive load, meaning the total mental effort required to process information, can overwhelm inspectors, leading to missed defects, superficial reviews, and inconsistent evaluations. Inspectors struggle to maintain focus, accurately assess all potential implications, and effectively prioritize issues within these complex documents, diminishing the return on investment for this crucial quality control practice. Consequently, even skilled professionals can overlook critical flaws, highlighting the need for improved inspection techniques and potentially automated support tools to augment human capabilities.

The Cognitive Bottleneck: What’s Really Limiting Inspection Quality

Effective requirements inspection is linked to an inspector’s cognitive capacities, with both working memory (WM) capacity and mental rotation ability playing a role. WM capacity determines how much information an inspector can actively hold and manipulate during review, which affects their ability to track dependencies and identify inconsistencies within requirements. Mental rotation ability, a component of spatial reasoning, lets inspectors mentally manipulate and compare different representations of the requirements, which may help them detect logical flaws or ambiguous phrasing that would otherwise be missed. These cognitive skills are not uniformly distributed, and variations in WM capacity and mental rotation ability are associated with differences in inspection performance. As the results reported later show, however, higher capacity does not translate into better defect detection under every condition.

Inspector performance in requirements analysis is directly constrained by the finite capacity of working memory (WM) and the cognitive effort required for mental manipulation of requirements data. While sufficient WM capacity and spatial reasoning skills are necessary to process complex requirements and identify inconsistencies, exceeding these cognitive resources leads to cognitive overload. This overload manifests as reduced attention, increased error rates, and impaired ability to detect defects. The complexity of the requirements document, the number of conditions within each requirement, and the volume of requirements being inspected all contribute to the cognitive load experienced by inspectors, ultimately impacting the effectiveness of the inspection process.

Reading Techniques: A False Sense of Control

Requirement inspection relies on a spectrum of reading techniques employed by inspectors to process and understand documented needs. These techniques range from highly structured, systematic approaches – involving pre-defined steps and checklists – to more flexible, ad-hoc reading methods where inspection is driven by the inspector’s experience and intuition. The selection of a particular technique is influenced by factors such as the complexity of the requirements, time constraints, and the inspector’s individual preferences. While systematic approaches aim for comprehensive coverage, ad-hoc reading can be effective in identifying obvious defects or inconsistencies, but may not guarantee thoroughness. Both approaches represent valid strategies, with their effectiveness influenced by the skill and experience of the inspector.

Cognitive load during requirements inspection is affected by presentation format. Purely textual requirements are generally thought to demand more cognitive resources during review, potentially increasing the likelihood of overlooked defects. Adding visual aids, specifically UML sequence diagrams, is expected to reduce this burden by providing a more readily digestible representation of system interactions, letting inspectors focus on logical consistency and completeness rather than struggling with complex textual descriptions. As the results reported later show, that expected benefit does not hold for every inspector.

Issue detection accuracy and the quality of supporting justifications were quantitatively assessed to measure the effectiveness of inspection techniques. Analysis of all observations yielded a mean F1-score of 0.464 for issue detection, indicating moderate performance in identifying requirements defects. Concurrently, issue justification accuracy demonstrated a mean value of 4, based on a defined scale, which represents a high level of quality in the rationale provided for identified issues. These metrics provide a data-driven basis for evaluating and comparing the efficacy of different reading techniques during requirements inspection.
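For readers unfamiliar with the metric, the F1-score is the harmonic mean of precision and recall. The sketch below shows how it could be computed for a single inspector, assuming issues are represented as sets of requirement identifiers. The function name `f1_score` and the identifiers such as `R2` are hypothetical and chosen for illustration; the study's actual scoring procedure is not described here.

```python
def f1_score(reported, actual):
    """Harmonic mean of precision and recall for one inspection.

    reported: identifiers the inspector flagged as issues (assumed format)
    actual:   identifiers that are issues according to the answer key
    """
    reported, actual = set(reported), set(actual)
    true_positives = len(reported & actual)
    if true_positives == 0:
        # No correct detections: precision or recall is zero, so F1 is zero.
        return 0.0
    precision = true_positives / len(reported)  # share of flags that were right
    recall = true_positives / len(actual)       # share of real issues found
    return 2 * precision * recall / (precision + recall)


# Illustrative (made-up) inspection: 4 flags raised, 2 of the 3 real issues found.
score = f1_score({"R2", "R5", "R7", "R9"}, {"R2", "R5", "R8"})
print(round(score, 3))
```

In this made-up example precision is 0.5 and recall is about 0.667, so the score lands a little above the reported study mean. Because F1 penalizes both false alarms and misses, an inspector who flags everything does not score well.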

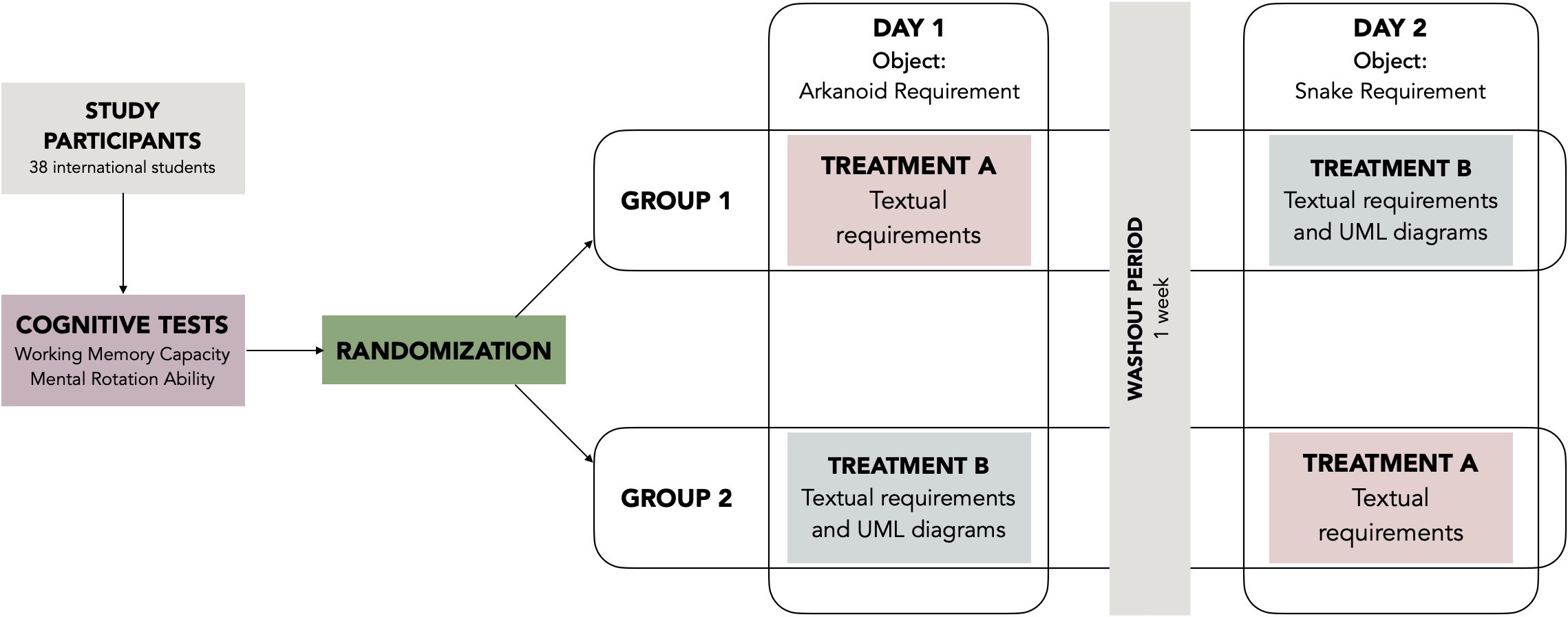

The Illusion of Control: Why Rigor Doesn’t Guarantee Results

The study employed a crossover experimental design to evaluate the effectiveness of the requirements inspection methods. This approach ensured each participant experienced all inspection conditions, minimizing the influence of pre-existing differences in cognitive skills, such as working memory and mental rotation abilities, on the results. By systematically rotating participants through each method, researchers could isolate the impact of the inspection technique itself, rather than attributing observed differences to variations in individual cognitive capacity. This control strengthens the validity of the comparison, giving a more accurate picture of which methods yield the most reliable issue detection and justification during the requirements phase of software development.
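The rotation described above can be sketched as a counterbalanced assignment. This is a minimal illustration, assuming two treatments and two orderings; the labels `text_only` and `text_plus_uml`, the function name `assign_crossover`, and the seed are hypothetical and not taken from the study.

```python
import random

# Hypothetical treatment labels; the study's own naming may differ.
TREATMENTS = ("text_only", "text_plus_uml")


def assign_crossover(participants, seed=0):
    """Give every participant both treatments, alternating the order.

    Shuffling first keeps the order assignment independent of how the
    participant list happened to be sorted.
    """
    sequences = [TREATMENTS, TREATMENTS[::-1]]  # AB and BA orderings
    shuffled = list(participants)
    random.Random(seed).shuffle(shuffled)
    return {p: sequences[i % 2] for i, p in enumerate(shuffled)}


plan = assign_crossover(["p1", "p2", "p3", "p4"], seed=1)
for participant, order in sorted(plan.items()):
    print(participant, "->", " then ".join(order))
```

Because each person serves as their own control, between-person differences cancel out of the treatment comparison, and alternating the order guards against learning or fatigue favoring whichever condition comes second.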

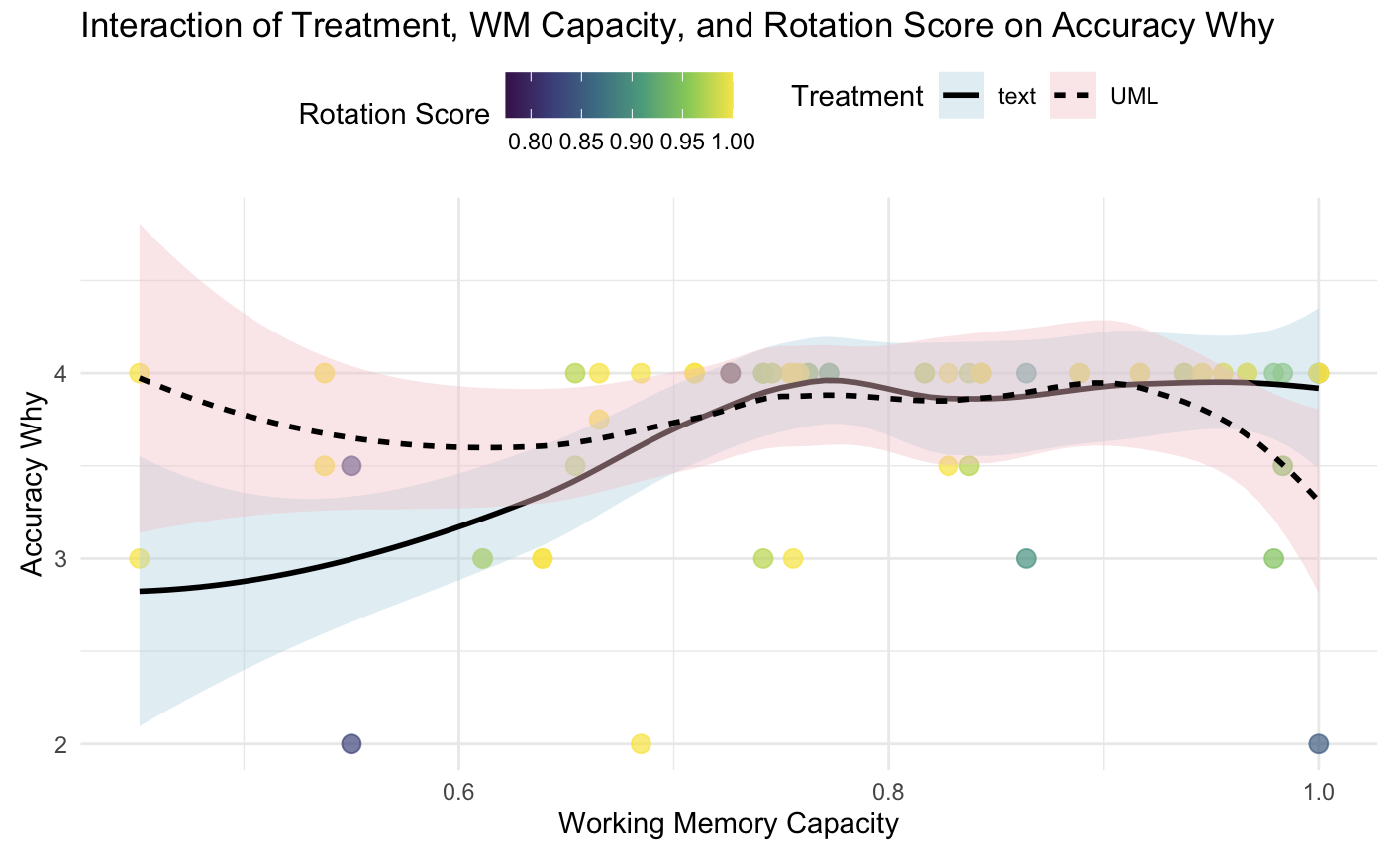

A carefully designed experiment revealed a complex interplay between inspection treatment, working memory capacity, and mental rotation ability, significantly impacting both issue detection and the quality of explanations provided. Statistical analysis demonstrated a three-way interaction: individuals with high cognitive abilities, when using UML diagrams during inspection, actually exhibited decreased accuracy in identifying defects (as measured by F1-score, p < 0.05). However, this same group simultaneously demonstrated improved accuracy in justifying their inspection decisions, providing more reasoned explanations for both identified issues and non-issues (Accuracy Why, p < 0.001). This suggests that while advanced cognitive skills coupled with UML may not enhance a reviewer’s ability to find all flaws, they do bolster their capacity to articulate a clear and logical rationale for their assessments, a crucial aspect of effective requirements validation.
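To make the idea of a three-way interaction concrete, the sketch below computes a simple descriptive contrast over a 2×2×2 grid of cell means. It assumes participants are split into low and high groups on each ability. All numbers are invented for illustration and are not the study's data, and this contrast is not the statistical model the authors used; the names `treatment_effect` and `three_way_contrast` are hypothetical.

```python
from statistics import mean

# Invented F1 scores keyed by (treatment, WM group, MR group). Illustration only.
SCORES = {
    ("text", "low", "low"): [0.40, 0.44],
    ("uml", "low", "low"): [0.46, 0.50],
    ("text", "low", "high"): [0.44, 0.48],
    ("uml", "low", "high"): [0.50, 0.54],
    ("text", "high", "low"): [0.46, 0.50],
    ("uml", "high", "low"): [0.52, 0.56],
    ("text", "high", "high"): [0.52, 0.56],
    ("uml", "high", "high"): [0.44, 0.48],
}


def treatment_effect(scores, wm, mr):
    """Mean F1 with UML minus mean F1 with text only, for one ability cell."""
    return mean(scores[("uml", wm, mr)]) - mean(scores[("text", wm, mr)])


def three_way_contrast(scores):
    """How much the MR-dependence of the UML effect changes with WM."""
    high_wm = treatment_effect(scores, "high", "high") - treatment_effect(scores, "high", "low")
    low_wm = treatment_effect(scores, "low", "high") - treatment_effect(scores, "low", "low")
    return high_wm - low_wm


print(round(three_way_contrast(SCORES), 2))
```

With these made-up numbers the UML effect is positive in three cells and negative only where both abilities are high, so the contrast is nonzero (about -0.14). A value near zero would mean the effect of UML depends on each ability separately at most, not on their combination.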

The interplay between cognitive skills, inspection techniques, and issue detection has direct implications for optimizing requirements inspection processes. The findings suggest that while UML diagrams can improve inspectors’ ability to justify identified issues, they paradoxically decrease the accuracy of detecting those issues, particularly for individuals with high working memory capacity and mental rotation skills. Consequently, best practices could focus on strategically allocating tasks; individuals with strong cognitive abilities might be better suited to verifying the rationale behind proposed fixes than to initial defect identification. By tailoring inspection approaches to account for both human cognitive factors and methodological strengths, organizations may be able to improve software quality and reduce the costly rework associated with defects discovered late in the development lifecycle.

The study meticulously details how supposedly ‘superior’ representations – those UML diagrams – don’t automatically translate to better requirements inspection. It seems the human mind, when presented with complexity, doesn’t scale linearly. High cognitive ability, rather than being a universal benefit, can actually increase the likelihood of overload. As Blaise Pascal observed, “The eloquence of angels is no more than the echo of our own thoughts.” This research echoes that sentiment; even the most sophisticated tools are limited by the capacity of those wielding them. Production, predictably, will always find a way to break elegant theories, and in this case, that ‘way’ is cognitive limitations.

What Comes Next?

This investigation into the cognitive quirks of requirements inspection provides a useful, if predictable, lesson: throwing diagrams at a problem doesn’t magically solve it. The interplay between working memory, mental rotation, and the sheer volume of detail in UML (or any formal notation, really) suggests a limit to how much cognitive horsepower actually improves outcomes. High-ability individuals, apparently, are just as capable of drowning in information as anyone else. It’s almost comforting; if a system crashes consistently, at least it’s predictable.

Future work will undoubtedly attempt to ‘optimize’ diagram design, or develop ‘AI-assisted’ inspection tools. One anticipates a flurry of papers on ‘cognitive load balancing’ and ‘just-in-time information delivery.’ The field will chase increasingly granular metrics of ‘inspectability,’ conveniently ignoring the fact that requirements, by their nature, are ambiguous and prone to interpretation. It’s a beautiful cycle of optimization, followed by inevitable failure.

Perhaps the more fruitful avenue isn’t better tools, but better acceptance of the inherent messiness of software development. After all, the code isn’t written for the machine; it’s a series of notes left for digital archaeologists. One suspects, though, that’s a lesson few are willing to learn. The pursuit of elegant solutions will continue, even as production finds new and creative ways to break them.

Original article: https://arxiv.org/pdf/2601.16009.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-25 16:42