Author: Denis Avetisyan

Researchers propose a new layer of typed functions, dubbed ‘web verbs’, to enable more reliable and efficient interactions between web agents and online services.

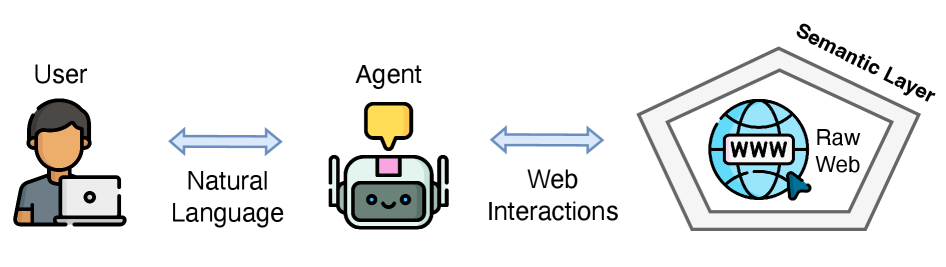

This paper introduces a semantic layer of action abstractions for composing reliable tasks on the agentic web, moving beyond brittle GUI automation.

While the web increasingly hosts software agents acting on users' behalf, current approaches rely on brittle, low-level interactions such as clicks and keystrokes. This paper, ‘Web Verbs: Typed Abstractions for Reliable Task Composition on the Agentic Web’, proposes an alternative: a web-scale layer of typed, semantically documented functions, called ‘Web Verbs’, that abstract web actions into stable, composable units. By unifying API-based and browser-based paradigms, these verbs enable large language models to synthesize reliable, auditable workflows with explicit control and data flow. Could this standardized action layer unlock a truly programmable web, fostering greater efficiency and trust in agentic systems?

The Inherent Fragility of Superficial Web Interaction

Contemporary methods for enabling software agents to interact with the web frequently depend on web scraping and UI automation, approaches that are prone to failure. These systems operate by mimicking human user actions (clicking buttons, filling forms, and parsing visual layouts), so even a minor website redesign can render them inoperable. The resulting fragility demands constant monitoring and re-engineering, creating a significant maintenance burden and limiting the scalability of agentic systems. Relying on presentation-level interactions, rather than on the underlying meaning of web content, produces a brittle architecture that even innocuous site updates can break, hindering the development of autonomous and reliable web agents.

These techniques treat websites as visual interfaces devoid of inherent meaning, which limits an agent's capacity to understand what a page contains or does. Without semantic comprehension (the ability to discern what a button does, not just where it is), agents struggle to adapt to variations in design or content presentation, require constant retraining and maintenance, and fail to generalize across different websites or even different sections of the same site. A scalable solution therefore requires a shift toward semantic understanding, in which agents interpret the intent of web functionality: what a feature does, not just where it is. Such a system would let agents reliably access web services regardless of presentation and carry their skills across diverse online platforms, enabling a more resilient agentic web.

Deconstructing Web Functionality: The Power of Typed Interfaces

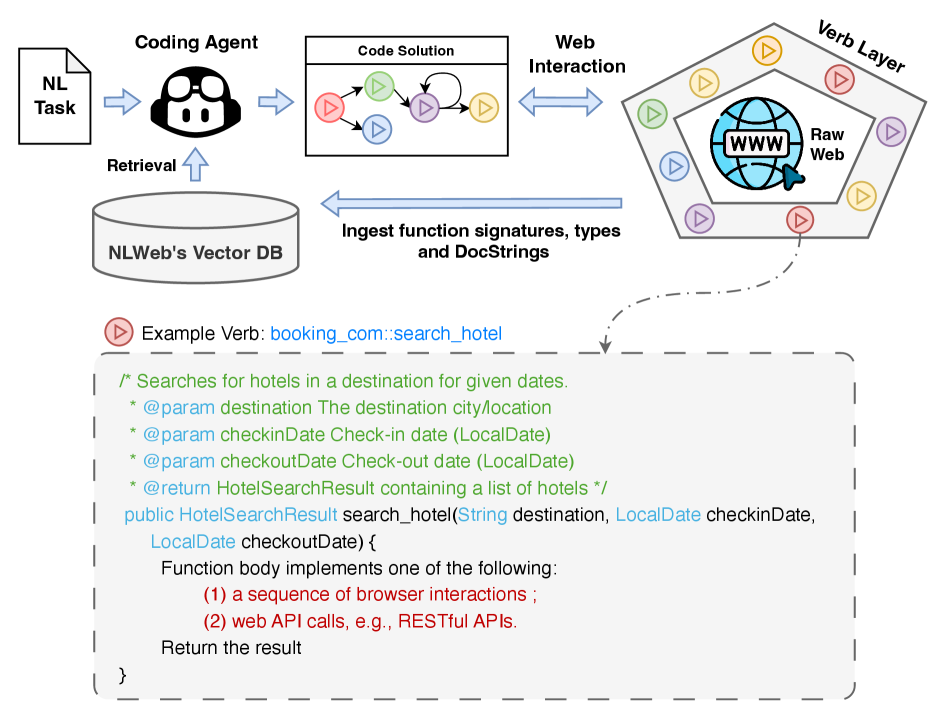

Web Verbs establish a typed interface to web functionality by decoupling actions from specific user-interface interactions and implementation details. Software agents and applications invoke a verb programmatically, supplying parameters of declared types and receiving a result of a declared type. Rather than having the agent simulate clicks and form submissions step by step, the caller invokes the verb by name, and the verb's implementation performs the underlying work, whether by calling a service API or by running a scripted browser sequence. This typed approach supports automated workflows that execute consistently and predictably across UI changes or site restructuring, because UI details live inside the verb's implementation rather than in the agent's plan. The result is a stable, machine-readable way to interact with web resources.
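To make "typed interface" concrete, here is a minimal sketch of a verb as a named, documented function with a declared input and output type, checked at the call boundary. It is not the paper's implementation: the `WebVerb` wrapper, the field names on `GetDirectionInput`/`GetDirectionOutput`, and the stub implementation are hypothetical; only the verb name `get_direction` comes from the article's figure.

```python
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

I = TypeVar("I")  # verb input type
O = TypeVar("O")  # verb output type


@dataclass(frozen=True)
class GetDirectionInput:  # hypothetical input schema
    origin: str
    destination: str
    mode: str = "driving"


@dataclass(frozen=True)
class GetDirectionOutput:  # hypothetical output schema
    distance_km: float
    duration_min: float
    steps: tuple[str, ...]


@dataclass(frozen=True)
class WebVerb(Generic[I, O]):
    """A named, documented action with a declared input and output type."""
    name: str
    description: str
    input_type: type
    output_type: type
    impl: Callable[[I], O]  # may call an API or drive a browser

    def __call__(self, args: I) -> O:
        # Reject ill-typed calls before any web interaction happens.
        if not isinstance(args, self.input_type):
            raise TypeError(f"{self.name} expects {self.input_type.__name__}")
        result = self.impl(args)
        # Reject ill-typed results so callers can rely on the declared shape.
        if not isinstance(result, self.output_type):
            raise TypeError(f"{self.name} returned {type(result).__name__}")
        return result


def _stub_directions(args: GetDirectionInput) -> GetDirectionOutput:
    # Stand-in for the real implementation; returns fixed data.
    return GetDirectionOutput(
        distance_km=12.5,
        duration_min=18.0,
        steps=(f"Start at {args.origin}", f"Arrive at {args.destination}"),
    )


get_direction = WebVerb(
    name="get_direction",
    description="Return a route between an origin and a destination.",
    input_type=GetDirectionInput,
    output_type=GetDirectionOutput,
    impl=_stub_directions,
)

route = get_direction(GetDirectionInput("Central Station", "City Museum"))
print(route.duration_min, route.steps[-1])
```

The caller never sees how `impl` reaches the web; swapping a browser script for an API call changes only `impl`, not the verb's signature.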

The functionality of Web Verbs is reinforced by adherence to existing web standards, most notably Schema.org vocabulary, which provides a structured data framework for describing actions and their associated parameters. This standardization allows for machine-readable descriptions of web functionalities, improving discoverability by search engines and agents. Furthermore, the use of Stable Public Locators (SPLs) – persistent, resolvable identifiers – facilitates reliable access to these verbs and their definitions, even as underlying implementations change. SPLs ensure interoperability by providing a consistent point of reference, decoupling the verb’s semantic meaning from specific URLs or application code, and enabling consistent invocation across different platforms and agents.
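As an illustration of a machine-readable verb description, the sketch below emits Schema.org-style JSON-LD for a search verb. `SearchAction`, `EntryPoint`/`urlTemplate`, `PropertyValueSpecification`, and the `<property>-input` annotation convention are Schema.org vocabulary; the `describe_verb` helper, the example.com URLs, and using the `@id` as a stable locator are assumptions for illustration, not the paper's SPL format.

```python
import json
from dataclasses import MISSING, dataclass, fields


@dataclass(frozen=True)
class SearchInput:
    query: str  # maps to the Schema.org SearchAction "query" property


def describe_verb(action_type: str, name: str, locator: str,
                  url_template: str, input_type: type) -> dict:
    """Build a Schema.org-style JSON-LD description of a verb (illustrative)."""
    doc = {
        "@context": "https://schema.org",
        "@type": action_type,
        "@id": locator,  # hypothetical stable locator for the verb
        "name": name,
        "target": {"@type": "EntryPoint", "urlTemplate": url_template},
    }
    # Schema.org annotates action inputs as "<property>-input" entries.
    for f in fields(input_type):
        required = f.default is MISSING and f.default_factory is MISSING
        doc[f"{f.name}-input"] = {
            "@type": "PropertyValueSpecification",
            "valueName": f.name,
            "valueRequired": required,
        }
    return doc


verb_doc = describe_verb(
    action_type="SearchAction",
    name="search",
    locator="https://example.com/verbs/search",
    url_template="https://example.com/search?q={query}",
    input_type=SearchInput,
)
print(json.dumps(verb_doc, indent=2))
```

Deriving the description from the same dataclass that types the verb keeps the published schema and the executable signature from drifting apart.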

The Semantic Layer functions as an intermediary between agentic requests and the execution of Web Verbs, enhancing both retrieval accuracy and grounding. It achieves this by providing a structured representation of Web Verb functionality using standardized vocabularies and ontologies, such as those found in Schema.org. This structured data allows agents to more effectively query for relevant verbs based on intent rather than specific UI pathways. Grounding is improved because the Semantic Layer links abstract requests to concrete, executable actions, resolving ambiguity and ensuring the agent correctly understands the desired operation. Consequently, the Semantic Layer minimizes the need for complex natural language understanding and reduces errors in translating requests into actionable web interactions.
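Retrieving a verb by intent rather than by UI pathway can be sketched as ranking documented verbs against a request. This toy version uses plain word overlap, a deliberately simple stand-in for whatever retrieval the semantic layer actually uses (embeddings or an LLM ranker would be typical); the catalog entries other than `get_direction` are hypothetical.

```python
import re
from dataclasses import dataclass

STOP_WORDS = {"a", "an", "and", "at", "by", "for", "from", "of", "or", "the", "to"}


@dataclass(frozen=True)
class VerbDoc:
    name: str
    description: str


CATALOG = [
    VerbDoc("get_direction", "Get driving or walking directions between an origin and a destination."),
    VerbDoc("search_restaurants", "Search restaurants near a location by cuisine and price."),
    VerbDoc("book_table", "Reserve a table at a restaurant for a given time and party size."),
]


def tokens(text: str) -> set[str]:
    # Lowercase words, minus very common function words.
    return set(re.findall(r"[a-z]+", text.lower())) - STOP_WORDS


def retrieve(intent: str, catalog: list[VerbDoc], k: int = 1) -> list[str]:
    """Rank verbs by the fraction of intent words found in their documentation."""
    query = tokens(intent)

    def score(verb: VerbDoc) -> float:
        doc_words = tokens(verb.name.replace("_", " ") + " " + verb.description)
        return len(query & doc_words) / max(len(query), 1)

    ranked = sorted(catalog, key=score, reverse=True)
    # Drop verbs with no overlap so unrelated requests return nothing.
    return [v.name for v in ranked[:k] if score(v) > 0]


print(retrieve("reserve a table for four", CATALOG))
```

Returning an empty list for unmatched intents, instead of the "least bad" verb, is one way to keep grounding honest: the agent is told no verb fits rather than being handed a wrong one.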

Orchestrating Intelligent Action: From Verbs to Executable Workflows

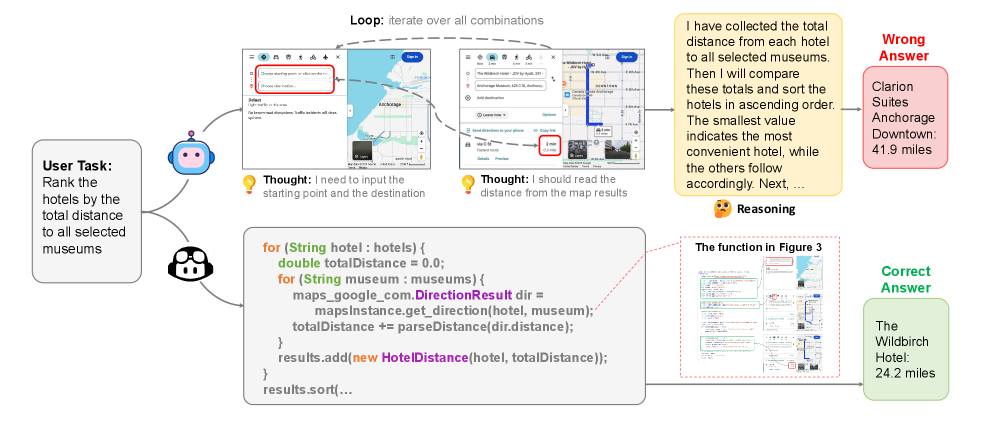

Code synthesis, the automatic generation of executable code from a high-level specification, is how an agentic system turns a sequence of Web Verbs (individual actions such as searching or submitting a form) into a functional workflow. The synthesized code fixes the execution order of the verbs, passes data between them, and handles errors and retries. Automating the construction of these workflows lets agents perform complex, multi-step tasks without hand-written code for each sequence, which improves adaptability and scalability. The resulting code typically combines control-flow logic, data transformation, and sequences of calls specific to the targeted web services.

Agent interaction with Web Verbs is primarily facilitated through two distinct modalities: API Agents and Browser Agents. API Agents operate by directly invoking server-side APIs, enabling programmatic control and data exchange without rendering a user interface. This approach is typically employed for tasks requiring high efficiency and direct data manipulation. Conversely, Browser Agents automate interactions within a graphical web browser, simulating user actions such as clicking, typing, and form submission. This modality is suitable for tasks involving websites lacking robust APIs or requiring interaction with dynamic content rendered within the browser. Both agent types utilize Web Verbs as the foundational units of action, but differ in their execution environment and interaction paradigm.
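The point that both modalities share Web Verbs as their unit of action can be sketched as one verb signature with two interchangeable backends. The `get_weather` verb, the `/weather` endpoint, and the page-text format are hypothetical; the HTTP client and the browser reader are injected as plain functions so the sketch runs without network access, whereas a real browser backend would drive a browser (for example with Playwright).

```python
from dataclasses import dataclass
from typing import Callable, Protocol
from urllib.parse import urlencode


@dataclass(frozen=True)
class Weather:
    city: str
    temp_c: float


class WeatherBackend(Protocol):
    def fetch(self, city: str) -> Weather: ...


class ApiBackend:
    """API-agent style: call a JSON endpoint and map the payload to the output type."""

    def __init__(self, get_json: Callable[[str], dict]):
        self._get_json = get_json  # injected HTTP client (hypothetical endpoint)

    def fetch(self, city: str) -> Weather:
        payload = self._get_json("/weather?" + urlencode({"city": city}))
        return Weather(city=city, temp_c=float(payload["temp_c"]))


class BrowserBackend:
    """Browser-agent style: read the rendered page and parse the visible text."""

    def __init__(self, read_page_text: Callable[[str], str]):
        # In practice this would navigate a real browser and read the page.
        self._read_page_text = read_page_text

    def fetch(self, city: str) -> Weather:
        text = self._read_page_text(city)  # e.g. "21.5 °C"
        return Weather(city=city, temp_c=float(text.split()[0]))


def get_weather(city: str, backend: WeatherBackend) -> Weather:
    """The verb's signature is identical no matter which backend executes it."""
    return backend.fetch(city)


api = ApiBackend(lambda path: {"temp_c": "21.5"})
browser = BrowserBackend(lambda city: "21.5 °C")
print(get_weather("Oslo", api) == get_weather("Oslo", browser))
```

Workflows written against `get_weather` are unaffected if a site later gains an API and the backend is swapped.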

Reliable operation of Web Verb-based agents requires explicit preconditions and postconditions for each verb. Preconditions specify the state that must hold before a verb can execute successfully; postconditions define the state guaranteed to hold after successful completion. The agent can check preconditions before invocation and validate postconditions afterwards to confirm correct operation, which makes its behavior predictable. Formally specified conditions also support automated verification, debugging, and the composition of complex, multi-step workflows, since each step can be checked against its contract. Without clearly defined preconditions and postconditions, agent actions become unpredictable and difficult to integrate into larger automated processes.
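A minimal way to attach such contracts is a decorator that checks a precondition before the verb runs and a postcondition after it returns. This is a sketch, not the paper's mechanism: the `contract` decorator, the `Cart` state model, and the `add_to_cart` verb are all hypothetical.

```python
from dataclasses import dataclass, field
from functools import wraps
from typing import Callable


class ContractViolation(Exception):
    pass


def contract(pre: Callable[..., bool], post: Callable[..., bool]):
    """Wrap a verb so its pre/postconditions are checked on every call."""
    def decorate(fn):
        @wraps(fn)
        def wrapper(state, *args):
            if not pre(state, *args):
                raise ContractViolation(f"precondition failed for {fn.__name__}")
            result = fn(state, *args)
            if not post(state, result, *args):
                raise ContractViolation(f"postcondition failed for {fn.__name__}")
            return result
        return wrapper
    return decorate


@dataclass
class Cart:  # hypothetical session state
    logged_in: bool = False
    items: list[str] = field(default_factory=list)


@contract(
    pre=lambda cart, item: cart.logged_in,  # must be logged in
    post=lambda cart, result, item: item in cart.items and result == len(cart.items),
)
def add_to_cart(cart: Cart, item: str) -> int:
    cart.items.append(item)
    return len(cart.items)


cart = Cart(logged_in=True)
print(add_to_cart(cart, "book"))
```

A failed precondition stops the verb before it touches any state, so a workflow can report the violation instead of acting on a half-finished step.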

![The get_direction verb is implemented as a sequence of Playwright actions, leveraging structured inputs and outputs for reliable direction retrieval.](https://arxiv.org/html/2602.17245v1/x3.png)

Quantifying the Advancement: Reliability, Efficiency, and Verifiable Reasoning

The paper's evaluation reports a significant improvement in web-automation reliability with Web Verbs. Traditional agents are disrupted by frequently changing UI elements; Web Verbs decouple actions from those brittle components and ground them in stable, semantic definitions. On a demanding benchmark of 100 diverse web tasks, this approach achieved a 100% success rate, in stark contrast to baseline agents, which consistently failed to complete the same tasks. This consistency points toward more dependable and predictable web automation, even in dynamic online environments.

Web Verbs also improve task-completion efficiency. Conventional agents need extensive, iterative reasoning to navigate complex user interfaces, whereas Web Verbs use a structured composition that maps intentions directly to actions, reducing the number of reasoning cycles required. On the benchmark, this yielded speedups of 2.7 to 8.3 times over baseline agents on the same web tasks. Beyond raw speed, the result reflects a more direct and reliable way of interacting with web applications.

Another key advantage of Web Verbs is verifiable reasoning, which conventional agents lack. Because workflows are expressed through explicit programming abstractions, their logic is transparent and auditable, which makes debugging and validation more robust. Baseline agents, by contrast, operate as ‘black boxes’, and they failed to complete the demanding 100-task benchmark. Being able to inspect and correct the reasoning pathways of Web Verb workflows improves reliability and enables systematic refinement, supporting predictable, trustworthy performance across diverse web tasks.
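One concrete form auditability can take is an execution trace: every verb call is recorded with its inputs and output, so a reviewer can replay exactly what the workflow did. The `Tracer` class and the flight verbs below are hypothetical stubs that illustrate the idea; they are not taken from the paper.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any, Callable


@dataclass
class TraceEntry:
    step: int
    verb: str
    inputs: dict[str, Any]
    output: Any


@dataclass
class Tracer:
    entries: list[TraceEntry] = field(default_factory=list)

    def call(self, verb: Callable[..., Any], **inputs: Any) -> Any:
        # Run the verb, then record what went in and what came out.
        output = verb(**inputs)
        self.entries.append(TraceEntry(len(self.entries) + 1, verb.__name__, inputs, output))
        return output

    def to_jsonl(self) -> str:
        # One JSON object per line: easy to diff, grep, or archive.
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self.entries)


# Hypothetical verbs (stubs).
def search_flights(origin: str, destination: str) -> list[str]:
    return ["LH123", "AF456"]


def get_price(flight: str) -> float:
    return {"LH123": 310.0, "AF456": 275.0}[flight]


tracer = Tracer()
flights = tracer.call(search_flights, origin="BER", destination="CDG")
prices = {f: tracer.call(get_price, flight=f) for f in flights}
print(tracer.to_jsonl())
```

Since inputs are passed as keyword arguments, the trace records parameter names as well as values, which makes each step readable without the source code at hand.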

The pursuit of a standardized ‘web verb’ layer, as detailed in the paper, resonates deeply with a commitment to foundational correctness. Donald Knuth once stated, “Premature optimization is the root of all evil.” This sentiment underscores the importance of establishing a robust and verifiably correct semantic layer – the ‘web verbs’ – before focusing on performance gains. The paper’s focus on typed abstractions isn’t merely about building more efficient web agents; it’s about ensuring their actions are mathematically consistent and predictable, moving beyond the inherent fragility of relying on GUI-based interactions. A system built on such principles, though perhaps initially slower to develop, gains an enduring reliability that surpasses superficial optimizations.

What Lies Ahead?

The proposition of ‘web verbs’ elegantly sidesteps the inherent messiness of simulating human interaction with the web. However, a standardized interface, however mathematically pleasing, does not automatically guarantee a robust system. The true challenge resides not in defining the verbs, but in formally verifying their composition. A web verb that functions correctly in isolation is a triviality; it is the harmonious interaction of many, under varying conditions, that demands rigorous analysis. Current approaches to agentic systems often prioritize expediency over provability, a regrettable tendency that this work attempts to correct, but cannot, alone, resolve.

A persistent limitation lies in the inescapable ambiguity of natural language. While web verbs offer a structured representation of actions, the translation of human intent into a sequence of these verbs remains a fundamentally imprecise process. The semantic layer, as proposed, must contend with the inherent plasticity of meaning. Future research should focus not merely on expanding the verb vocabulary, but on developing a formal logic capable of resolving semantic conflicts and ensuring the deterministic execution of agentic tasks.

Ultimately, the success of this approach will be measured not by the number of websites it can interface with, but by the degree to which it allows for the provable correctness of web-based automation. A system built on elegant abstractions, yet lacking a foundation of mathematical certainty, is merely a sophisticated illusion. The pursuit of true elegance, in this domain, demands nothing less.

Original article: https://arxiv.org/pdf/2602.17245.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-22 16:30