Author: Denis Avetisyan

A new wave of artificial intelligence systems is moving beyond simple question-and-answer interactions to proactively pursue complex goals.

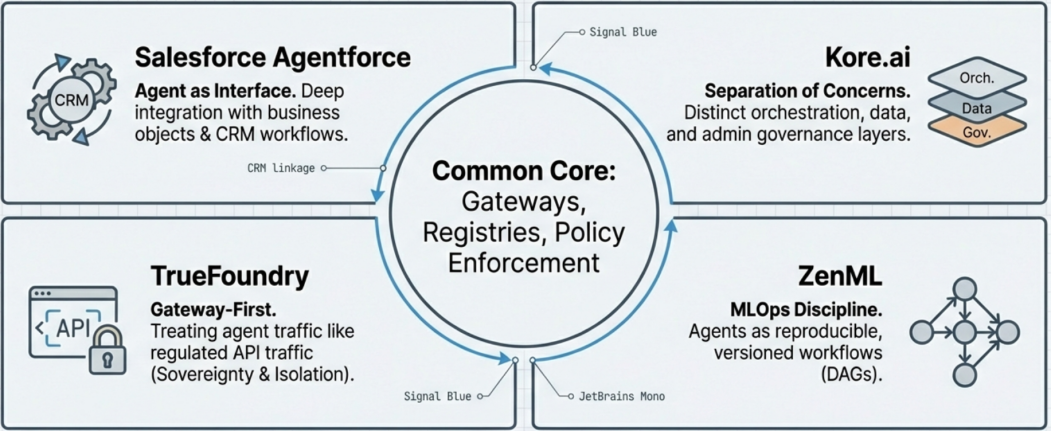

This review analyzes the evolving software architectures that underpin agentic AI, focusing on the critical importance of governance, observability, and composability in multi-agent systems.

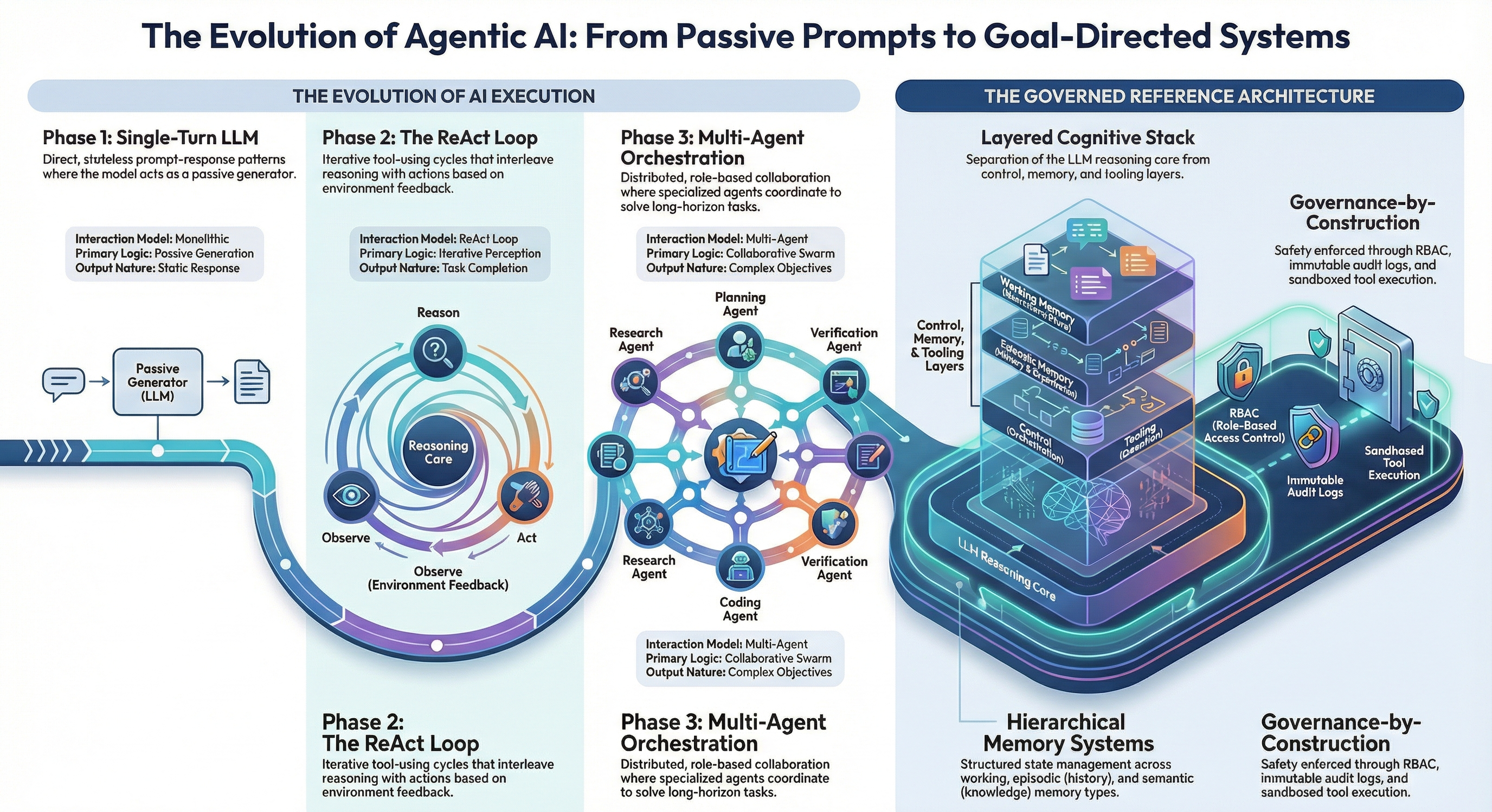

While large language models demonstrate impressive generative capabilities, realizing truly autonomous systems requires a fundamental shift beyond simple prompt-response interactions. This paper, ‘From Prompt-Response to Goal-Directed Systems: The Evolution of Agentic AI Software Architecture’, analyzes this transition by connecting established intelligent agent theories with contemporary LLM-centric approaches, ultimately proposing a reference architecture for production-grade agents. The study identifies a convergence towards standardized agent loops and governance mechanisms essential for scalable autonomy, alongside a critical taxonomy of multi-agent topologies and their associated risks. As agentic AI matures, will standardized protocols and layered governance structures prove sufficient to address the persistent challenges of verifiability, interoperability, and safe deployment?

Beyond Reactive Systems: The Rise of Intentional Intelligence

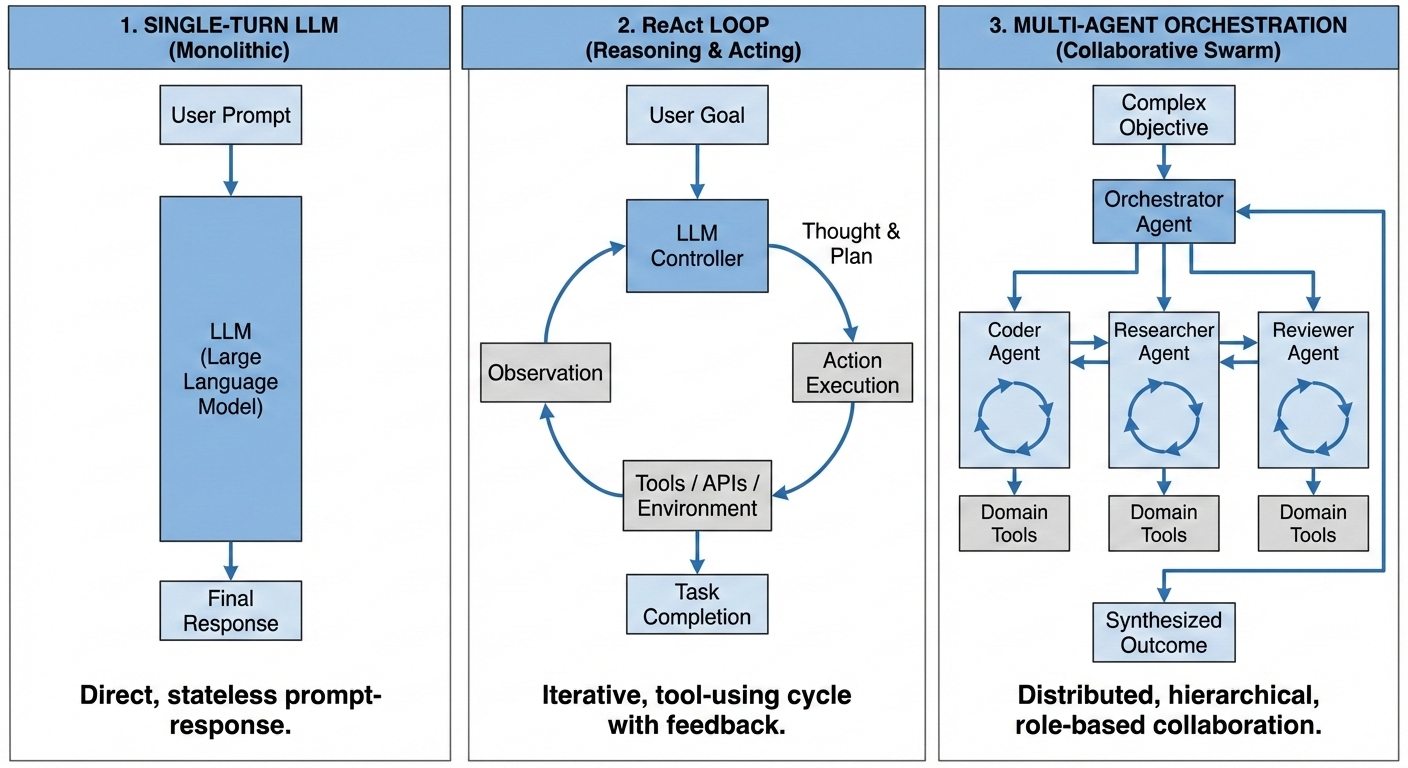

Conventional generative artificial intelligence functions as a ‘stateless’ system, meaning each interaction is treated in isolation, devoid of memory from prior exchanges. This prompt-response methodology, while capable of impressive feats of text or image creation, fundamentally restricts its applicability to complex, real-world tasks. Without the ability to retain information or learn from previous actions, these systems struggle with scenarios demanding sustained reasoning, planning, or adaptation – imagine a chatbot unable to recall earlier parts of a conversation, or a robotic assistant requiring constant re-instruction. This limitation necessitates a departure from simply reacting to inputs towards building systems that can maintain internal state, allowing for more nuanced, persistent, and ultimately, useful intelligence.

The current trajectory of artificial intelligence research is moving beyond simple input-output models, giving rise to Agentic AI systems. Unlike traditional generative AI, which responds to each prompt in isolation, these emerging systems are designed with persistent state: a form of memory that lets them retain information across interactions. This foundational characteristic, coupled with the capacity for autonomous action, enables Agentic AI to pursue goals independently, plan multi-step tasks, and adapt to changing circumstances without constant human intervention. Instead of merely reacting, these agents proactively navigate environments and draw on past experience to inform future decisions, exhibiting goal-directed behavior that stateless systems cannot. This paradigm shift promises to unlock applications far exceeding the capabilities of stateless systems, moving AI from a tool for completion to a partner in problem-solving.

The progression toward truly autonomous AI demands a departure from simple prompt-response models and a fundamental rethinking of system architecture. The Closed-Loop Control Architecture addresses this need by establishing a continuous cycle of observation, planning, and action. Unlike traditional systems, an agent built on this foundation doesn’t simply react to input; it actively perceives its environment, formulates goals based on internal state and external feedback, and executes actions designed to achieve those goals. This iterative process allows the agent to learn and refine its behavior over time, adapting to changing circumstances and improving its performance without explicit reprogramming. Crucially, the architecture incorporates mechanisms for self-evaluation and error correction, enabling the agent to identify and address shortcomings in its plans or execution, thus paving the way for robust and resilient artificial intelligence.
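The observe, plan, act cycle described above can be sketched in a few lines. This is a toy illustration, not the paper's reference architecture: `CounterEnvironment` and `ClosedLoopAgent` are hypothetical names, the "environment" is a single integer, and the "planner" is a hand-written rule standing in for a model call.

```python
from dataclasses import dataclass, field


@dataclass
class CounterEnvironment:
    """Toy environment (assumption): a single integer the agent can nudge."""
    value: int = 0

    def observe(self) -> int:
        return self.value

    def apply(self, action: int) -> None:
        self.value += action


@dataclass
class ClosedLoopAgent:
    goal: int
    history: list = field(default_factory=list)  # persistent state across steps

    def plan(self, observation: int) -> int:
        # Move one step toward the goal; 0 means "nothing left to do".
        if observation < self.goal:
            return 1
        if observation > self.goal:
            return -1
        return 0

    def run(self, env: CounterEnvironment, max_steps: int = 20) -> int:
        for _ in range(max_steps):
            observation = env.observe()      # observe
            action = self.plan(observation)  # plan
            if action == 0:                  # self-evaluation: goal reached
                break
            env.apply(action)                # act
            self.history.append((observation, action))
        return env.observe()


env = CounterEnvironment(value=0)
agent = ClosedLoopAgent(goal=3)
final_value = agent.run(env)
print(final_value, len(agent.history))  # prints "3 3"
```

The `max_steps` bound is a deliberate design choice: a loop that feeds its own output back as input needs an explicit stopping condition besides "goal reached".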

Perception and Planning: The Foundations of Autonomous Action

Effective agency in autonomous systems requires continuous perception of the environment; however, the time required for a complete perception cycle introduces latency. This latency is particularly pronounced when utilizing Vector Databases for similarity searches, a common component in perception pipelines. Vector Databases, while enabling efficient semantic retrieval, necessitate time for vector embedding generation, index traversal, and similarity scoring. Consequently, the delay inherent in querying these databases directly impacts the responsiveness of the agent. Minimizing this latency is crucial, as prolonged perception cycles can lead to stale information and impaired decision-making, ultimately hindering the agent’s ability to react effectively to dynamic environments.
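To make the latency point concrete, the sketch below times a brute-force similarity search over a tiny in-memory index. It is an assumption-laden stand-in: real vector databases use approximate indexes rather than a full scan, the vectors here are hand-written rather than produced by an embedding model, and `top_k` and `timed` are hypothetical helper names.

```python
import math
import time


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, index, k=2):
    """Brute-force scan: score every stored vector, keep the k best."""
    scored = sorted(index.items(), key=lambda item: cosine(query, item[1]), reverse=True)
    return [doc_id for doc_id, _ in scored[:k]]


def timed(fn, *args, **kwargs):
    """Return (result, elapsed_seconds) so each perception stage can be measured."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


index = {"door": [1.0, 0.0], "window": [0.9, 0.1], "engine": [0.0, 1.0]}
hits, seconds = timed(top_k, [1.0, 0.0], index)
print(hits)  # ['door', 'window']
```

Wrapping embedding generation, index lookup, and scoring in separate `timed` calls is one simple way to see which stage dominates the perception cycle.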

Effective management of memory resources within agent architectures necessitates the implementation of ‘Context Window Budgeting’ and ‘Key-Value Cache Policies’. Context Window Budgeting involves dynamically allocating the limited context window – the amount of recent information accessible to the agent – to prioritize the most relevant data based on task demands and information entropy. This prevents information overload and maintains processing speed. Complementing this, Key-Value Cache Policies utilize techniques such as Least Recently Used (LRU) or Least Frequently Used (LFU) to store and retrieve frequently accessed data in a high-speed cache, minimizing latency associated with repeated vector database queries. These policies operate by storing embeddings paired with their corresponding data as key-value pairs, allowing for rapid recall of information crucial for ongoing perception and planning cycles. Proper configuration of both budgeting and caching mechanisms is critical for optimizing performance, especially when dealing with large datasets and complex reasoning tasks.
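A minimal sketch of both ideas follows, under stated assumptions: token cost is approximated by whitespace word count, relevance scores are supplied by the caller, and `budget_context` and `LRUCache` are illustrative names rather than anything defined in the paper.

```python
from collections import OrderedDict


def budget_context(snippets, max_tokens):
    """Greedily keep the highest-scoring snippets that fit in the budget.

    `snippets` is a list of (text, relevance_score) pairs. Token cost is
    approximated by word count, which is an assumption of this sketch.
    """
    kept, used = [], 0
    for text, _score in sorted(snippets, key=lambda s: s[1], reverse=True):
        cost = len(text.split())
        if used + cost <= max_tokens:
            kept.append(text)
            used += cost
    return kept, used


class LRUCache:
    """Least Recently Used cache for retrieval results."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        self._items[key] = value
        self._items.move_to_end(key)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict least recently used


snippets = [
    ("user prefers metric units", 0.9),
    ("weather was discussed at length yesterday", 0.2),
    ("current task is route planning", 0.8),
]
kept, used = budget_context(snippets, max_tokens=10)

cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now the most recently used entry
cache.put("c", 3)  # evicts "b"
```

With a budget of 10 words, the two highest-scoring snippets fit (9 words) and the low-relevance one is dropped.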

Effective planning within this architectural framework utilizes a dual approach, combining the strengths of Neural Design and Symbolic Design. Neural Design facilitates high-level task decomposition, enabling the system to break down complex goals into manageable sub-tasks through learned representations and pattern recognition. Complementing this, Symbolic Design introduces deterministic constraints and logical rules, ensuring that plans adhere to predefined boundaries and requirements. This combination allows for flexible, adaptable planning, leveraging the generalization capabilities of neural networks, while maintaining reliability and predictability through explicitly defined symbolic constraints, ultimately optimizing for both innovation and adherence to system limitations.
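One way to picture the split is a proposer followed by a rule checker. In the sketch below, `propose_plan` is a hard-coded stand-in for a neural planner (in practice, a model call), and the step names, `FORBIDDEN_ACTIONS`, and `MUST_PRECEDE` rules are all invented for illustration.

```python
def propose_plan(goal):
    """Stand-in for a neural planner (assumption); returns fixed steps."""
    return ["fetch_data", "delete_backup", "train_model", "publish_report"]


# Symbolic layer: explicit, deterministic rules (hypothetical examples).
FORBIDDEN_ACTIONS = {"delete_backup"}
MUST_PRECEDE = [("fetch_data", "train_model"), ("train_model", "publish_report")]


def validate_plan(plan):
    """Return a list of rule violations; an empty list means the plan passes."""
    violations = [f"forbidden: {step}" for step in plan if step in FORBIDDEN_ACTIONS]
    for first, second in MUST_PRECEDE:
        if first in plan and second in plan and plan.index(first) > plan.index(second):
            violations.append(f"order: {first} must precede {second}")
    return violations


def constrained_plan(goal):
    plan = propose_plan(goal)
    # Simple repair strategy: drop forbidden steps, then re-check ordering.
    repaired = [step for step in plan if step not in FORBIDDEN_ACTIONS]
    remaining = validate_plan(repaired)
    if remaining:
        raise ValueError(f"plan rejected: {remaining}")
    return repaired


raw_violations = validate_plan(propose_plan("ship the model"))
safe_plan = constrained_plan("ship the model")
```

The checker never needs to understand how the plan was produced, which is what keeps the symbolic side predictable.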

Orchestrating Intelligence: Multi-Agent Topologies for Complex Tasks

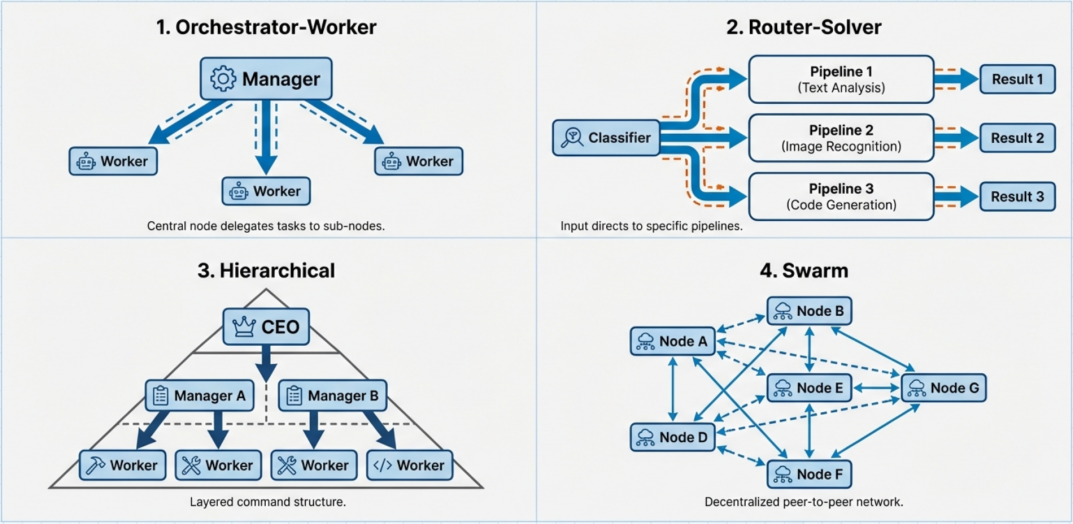

Multi-agent systems utilize varied topologies to effectively decompose complex tasks. The ‘Orchestrator-Worker’ model designates a central agent responsible for task assignment and monitoring, distributing subtasks to multiple worker agents. Conversely, the ‘Router-Solver’ topology employs a routing agent to direct specific task components to specialized solver agents based on expertise; this is particularly effective when tasks require diverse skillsets. These topologies are not mutually exclusive and can be combined to address nuanced requirements; for example, a router might assign tasks to orchestrators which then delegate to workers. Selection depends on factors like task complexity, the need for centralized control, and the degree of specialization within the agent population.
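The two topologies differ mainly in who decides what. A rough sketch, with plain functions standing in for agents (`summarize`, `word_count`, `route`, and `orchestrate` are hypothetical names, and splitting on blank lines is an arbitrary decomposition rule):

```python
def summarize(text):
    """Toy 'agent': return the first sentence."""
    return text.split(".")[0].strip()


def word_count(text):
    """Toy 'agent': count whitespace-separated words."""
    return len(text.split())


# Router-Solver: the router picks exactly one specialist per request.
SOLVERS = {"summarize": summarize, "count": word_count}


def route(task_type, payload):
    solver = SOLVERS.get(task_type)
    if solver is None:
        raise KeyError(f"no solver registered for {task_type!r}")
    return solver(payload)


# Orchestrator-Worker: the orchestrator splits the task, fans out, and collects.
def orchestrate(document, worker):
    subtasks = [part for part in document.split("\n\n") if part.strip()]
    results = [worker(sub) for sub in subtasks]
    return {"subtasks": len(subtasks), "results": results}


document = "First point. More detail.\n\nSecond point. Even more."
report = orchestrate(document, summarize)
count = route("count", "a b c")
```

Combining them, as the text suggests, would amount to registering `orchestrate` itself as one of the router's solvers.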

Hierarchical command structures in multi-agent systems establish a tiered control system, typically featuring a central authority delegating tasks to subordinate agents; this allows for complex task decomposition and efficient resource allocation, but introduces a single point of failure. Conversely, swarm architectures prioritize decentralized coordination, where agents operate autonomously and communicate locally, relying on emergent behavior to achieve collective goals. This approach enhances robustness and scalability, as the failure of individual agents does not necessarily compromise the system’s overall function. The choice between these architectures depends on the specific application requirements, balancing the need for centralized control with the benefits of distributed resilience and adaptability.

The ReAct paradigm, combining reasoning and acting, enables agents to iteratively observe, reason about the current state, and then act upon it, forming a closed-loop control system. This can be complemented by the Tree of Thoughts (ToT) approach, which generalizes chain-of-thought reasoning by allowing the agent to explore multiple reasoning paths – or “thoughts” – at each step, evaluating them to select the most promising course of action. ToT facilitates more complex problem-solving by moving beyond a single linear chain of reasoning and considering a wider range of potential solutions before committing to an action, improving adaptability in dynamic environments and enhancing performance on tasks requiring planning and sequential decision-making.
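The search pattern behind Tree of Thoughts can be shown on a toy problem: expand several candidate continuations, score them, and keep only the best few. In this sketch the "thoughts" are digits appended to a partial solution and the evaluator is a hand-written heuristic; in a real system both would be model calls. `expand`, `score`, and `tree_of_thoughts` are illustrative names.

```python
def expand(state):
    """Generate candidate next 'thoughts' from a partial solution (a tuple of digits)."""
    return [state + (digit,) for digit in (1, 2, 3)]


def score(state, target):
    """Evaluator: partial sums closer to the target score higher; overshooting is penalized."""
    total = sum(state)
    return -abs(target - total) if total <= target else -100


def tree_of_thoughts(target, beam_width=2, max_depth=5):
    frontier = [()]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in expand(state)]
        candidates.sort(key=lambda s: score(s, target), reverse=True)
        frontier = candidates[:beam_width]  # prune to the most promising paths
        if sum(frontier[0]) == target:
            return frontier[0]
    return None


solution = tree_of_thoughts(target=7)
```

Setting `beam_width=1` collapses the search to a single greedy chain, which is the behavior ToT is meant to improve on.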

Securing the Agentic Ecosystem: Infrastructure and Governance

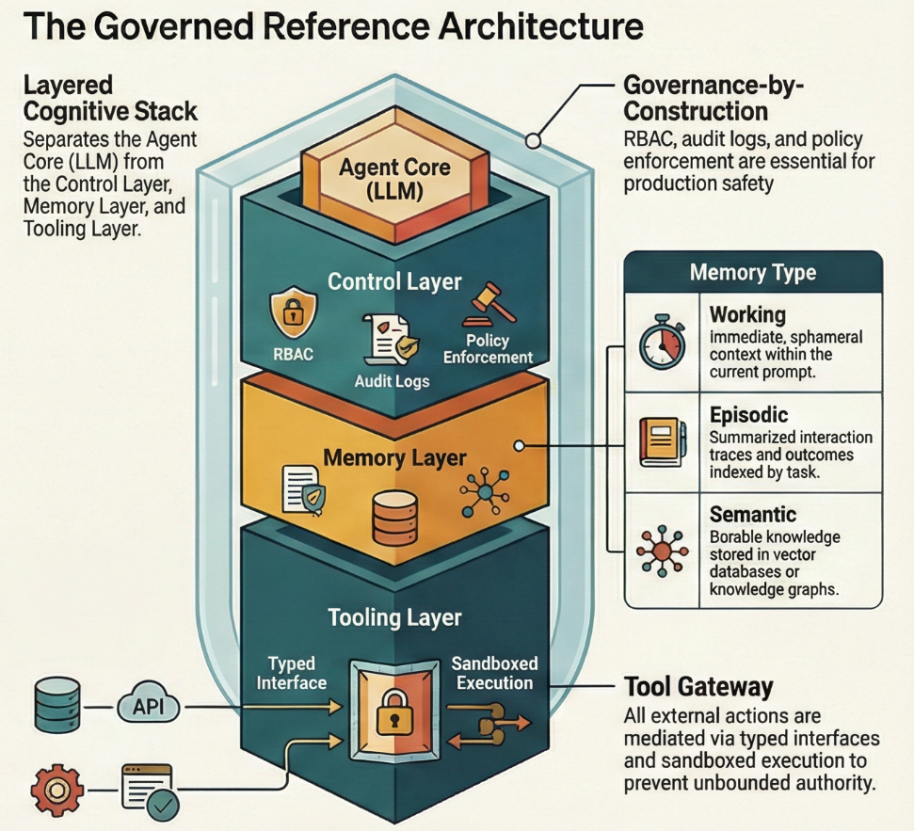

An API Gateway functions as a single entry point for all requests directed to agentic systems, enabling centralized management of critical security and operational functions. Specifically, it handles authentication (verifying the identity of the requesting agent) and authorization (determining access privileges). Routing capabilities within the gateway direct requests to the appropriate backend services or agents. Furthermore, rate-limiting features prevent abuse and ensure system stability by restricting the number of requests permitted within a given timeframe. By consolidating these functions, the API Gateway simplifies security implementation, improves observability, and enhances the overall resilience of the agentic ecosystem.
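A stripped-down gateway might look like the following. It covers authentication, routing, and rate limiting only; authorization is omitted here. The status codes mirror HTTP conventions, the token-bucket algorithm is one common rate-limiting choice rather than one the paper prescribes, and the `Gateway` and `TokenBucket` classes, the API key, and the `/echo` route are all hypothetical.

```python
import time


class TokenBucket:
    """Rate limiter: each request spends one token; tokens refill over time."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    def __init__(self, api_keys, routes, capacity=2, refill_per_second=1.0,
                 clock=time.monotonic):
        self.api_keys = api_keys  # api_key -> caller id
        self.routes = routes      # path -> handler(caller, body)
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.buckets = {}         # caller id -> TokenBucket

    def handle(self, api_key, path, body):
        caller = self.api_keys.get(api_key)
        if caller is None:
            return 401, "unknown API key"      # authentication
        handler = self.routes.get(path)
        if handler is None:
            return 404, "no such route"        # routing
        bucket = self.buckets.get(caller)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, self.clock)
            self.buckets[caller] = bucket
        if not bucket.allow():
            return 429, "rate limit exceeded"  # rate limiting
        return 200, handler(caller, body)


# A frozen clock keeps the example deterministic: no tokens are ever refilled.
gateway = Gateway(
    api_keys={"key-123": "agent-a"},
    routes={"/echo": lambda caller, body: f"{caller}: {body}"},
    clock=lambda: 0.0,
)
responses = [gateway.handle("key-123", "/echo", "hi") for _ in range(3)]
statuses = [status for status, _ in responses]
```

With a bucket capacity of 2 and no refill, the third request from the same caller is rejected.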

Tool Registries function as a centralized inventory and management system for the tools accessible within an agentic ecosystem. These registries maintain metadata regarding each tool, including its name, description, input parameters, authentication requirements, and endpoint details. This allows agents to dynamically discover and utilize available tools without requiring pre-configuration or hardcoded dependencies. Furthermore, a Tool Registry facilitates administrative control over tool access and usage, enabling features such as versioning, deprecation, and the implementation of usage quotas. The registry’s standardized interface streamlines integration with diverse agent frameworks and promotes interoperability between different tools within the system.
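The registry's core responsibilities (metadata, discovery, versioning, deprecation) fit in a small class. This is a sketch under assumptions: `ToolSpec` and `ToolRegistry` are invented names, the parameter "schema" is just a dict of names to type labels, and authentication and quotas are left out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolSpec:
    name: str
    version: str
    description: str
    parameters: dict  # parameter name -> type label (informal schema)
    handler: Callable
    deprecated: bool = False


class ToolRegistry:
    def __init__(self):
        self._tools = {}  # (name, version) -> ToolSpec

    def register(self, spec):
        self._tools[(spec.name, spec.version)] = spec

    def deprecate(self, name, version):
        self._tools[(name, version)].deprecated = True

    def discover(self, keyword=""):
        """List non-deprecated tools whose description mentions the keyword."""
        matches = (
            spec for spec in self._tools.values()
            if not spec.deprecated and keyword.lower() in spec.description.lower()
        )
        return sorted(matches, key=lambda spec: (spec.name, spec.version))

    def call(self, name, version, **kwargs):
        spec = self._tools[(name, version)]
        if spec.deprecated:
            raise RuntimeError(f"{name} {version} is deprecated")
        missing = set(spec.parameters) - set(kwargs)
        if missing:
            raise TypeError(f"missing parameters: {sorted(missing)}")
        return spec.handler(**kwargs)


registry = ToolRegistry()
registry.register(ToolSpec("add", "1.0", "Add two integers",
                           {"a": "int", "b": "int"}, lambda a, b: a + b))
registry.register(ToolSpec("add", "2.0", "Add two numbers",
                           {"a": "float", "b": "float"}, lambda a, b: a + b))
registry.deprecate("add", "1.0")
available = [(spec.name, spec.version) for spec in registry.discover("add")]
result = registry.call("add", "2.0", a=2, b=3)
```

Keying on `(name, version)` lets an old version be deprecated without breaking agents that have already moved to the new one.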

Role-Based Access Control (RBAC) is a critical security component within agentic ecosystems, functioning by assigning permissions based on defined roles rather than individual user identities. This approach simplifies administration and enhances security by limiting access to resources based on job function; for example, an agent configured for data analysis would only receive permissions to access data repositories, while an agent responsible for system configuration would have privileges related to infrastructure management. RBAC implementations typically define roles, associate users or agents with those roles, and then link roles to specific permissions on resources. This granular control minimizes the potential impact of compromised agents or unauthorized access attempts, and supports compliance requirements by providing a clear audit trail of access privileges.
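The role, agent, and permission mapping described above reduces to two lookups. The role names, agent names, and resources below are made up for illustration, and a production system would persist the audit log rather than keep it in a list.

```python
# role -> set of (resource, action) permissions (hypothetical examples)
ROLE_PERMISSIONS = {
    "data_analyst": {("dataset", "read")},
    "sys_admin": {("config", "read"), ("config", "write")},
}

# agent -> set of roles
AGENT_ROLES = {
    "analysis-agent": {"data_analyst"},
    "ops-agent": {"sys_admin"},
}

audit_log = []  # every decision is recorded, allowed or not


def is_allowed(agent, resource, action):
    roles = AGENT_ROLES.get(agent, set())
    allowed = any(
        (resource, action) in ROLE_PERMISSIONS.get(role, set()) for role in roles
    )
    audit_log.append((agent, resource, action, allowed))
    return allowed


can_read = is_allowed("analysis-agent", "dataset", "read")
can_write_config = is_allowed("analysis-agent", "config", "write")
unknown_agent = is_allowed("rogue-agent", "dataset", "read")
```

Unknown agents and unknown roles both fall through to an empty permission set, so the default is deny.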

The Future of Intelligence: Agentic Enterprises and Observability

The concept of the Agentic Enterprise represents a fundamental shift in how artificial intelligence is perceived and utilized within organizations. Rather than viewing AI as simply automating tasks, this framework positions it as an orchestrating interface – a dynamic layer that translates human intention into concrete computational action. This means AI doesn’t just execute pre-programmed instructions; it actively mediates between goals and their realization, intelligently selecting and coordinating various tools and resources. This reframing envisions a future where AI empowers human agency, augmenting capabilities and enabling complex problem-solving through seamless interaction and intelligent task delegation, ultimately fostering a more responsive and adaptive enterprise.

As agentic systems (AI designed to act on behalf of users) become increasingly complex, the need for robust observability tools is paramount. These aren’t simply logging systems; they represent a comprehensive approach to understanding the internal state and decision-making processes of these autonomous entities. Frameworks like LangChain are proving instrumental, offering capabilities to trace requests across multiple agents and tools, analyze reasoning chains, and pinpoint the source of errors or unexpected behaviors. Without such tools, debugging agentic systems becomes exceptionally difficult, hindering both development and trust. Comprehensive observability allows developers to effectively monitor performance, identify bottlenecks, and ensure these AI-driven enterprises operate reliably and predictably, ultimately fostering responsible innovation in the field.
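The core of request tracing is recording nested spans with their parent, duration, and any error. The sketch below is framework-independent and does not reproduce the API of LangChain or any other tool; `Tracer` and its span fields are assumptions chosen for illustration.

```python
import time
from contextlib import contextmanager


class Tracer:
    def __init__(self):
        self.spans = []   # finished spans, in completion order
        self._stack = []  # names of currently open spans

    @contextmanager
    def span(self, name, **attributes):
        parent = self._stack[-1] if self._stack else None
        self._stack.append(name)
        start = time.perf_counter()
        error = None
        try:
            yield
        except Exception as exc:
            error = repr(exc)  # record the failure, then let it propagate
            raise
        finally:
            self._stack.pop()
            self.spans.append({
                "name": name,
                "parent": parent,
                "attributes": attributes,
                "duration_s": time.perf_counter() - start,
                "error": error,
            })


tracer = Tracer()
with tracer.span("agent_run", goal="answer question"):
    with tracer.span("tool_call", tool="search"):
        pass  # a real agent would invoke the tool here

span_names = [span["name"] for span in tracer.spans]
```

Inner spans finish first, so `tool_call` is recorded before `agent_run`; the `parent` field is what lets a viewer rebuild the call tree.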

The advent of truly autonomous applications hinges on effective communication between large language models and the tools they utilize, a challenge addressed by the Model Context Protocol (MCP). This protocol establishes a standardized framework allowing models to dynamically discover available tools and seamlessly interact with them, eliminating the need for pre-programmed integrations. Rather than being limited to a fixed skillset, an agent leveraging MCP can intelligently assess its environment, identify necessary functions, and execute them through the appropriate tools – whether that involves data analysis, web searching, or controlling physical devices. This dynamic capability moves beyond simple task completion towards genuine agency, where systems can adapt to unforeseen circumstances and independently pursue complex goals, fostering a new era of intelligent automation and problem-solving.
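As a rough illustration of dynamic discovery, the sketch below builds the two messages an MCP client would typically send. It assumes, based on the published MCP specification as best understood here, that messages are JSON-RPC 2.0 and that discovery and invocation use methods named `tools/list` and `tools/call`; check the exact shapes against the specification before relying on them. The `get_weather` tool, its arguments, and the sample server response are invented.

```python
import json
from itertools import count

_request_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def find_tool(list_result, keyword):
    """Pick the first advertised tool whose description mentions the keyword."""
    for tool in list_result.get("tools", []):
        if keyword.lower() in tool.get("description", "").lower():
            return tool["name"]
    return None


# Step 1: ask the server which tools exist.
list_request = jsonrpc_request("tools/list")

# Hypothetical server reply, hard-coded for this sketch.
list_result = {
    "tools": [
        {"name": "get_weather", "description": "Current weather for a city",
         "inputSchema": {"type": "object", "properties": {"city": {"type": "string"}}}},
    ]
}

# Step 2: choose a tool at runtime and call it by name.
tool_name = find_tool(list_result, "weather")
call_request = jsonrpc_request(
    "tools/call", {"name": tool_name, "arguments": {"city": "Paris"}}
)
wire_message = json.dumps(call_request)
```

The agent never hard-codes `get_weather`; it learns the name from the discovery response, which is the property the paragraph above describes.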

The progression detailed within this analysis of agentic AI architecture underscores a fundamental principle of effective systems: the necessity of focused intention. The early iterations, reliant on simple prompt-response, lacked the directedness achieved through goal-directed architectures. This shift isn’t merely a technical refinement, but a reduction toward essential function. As Blaise Pascal observed, “The eloquence of the body is in the muscles.” Similarly, the power of these systems isn’t in the complexity of their prompts, but in the deliberate construction of architectures (with governance, observability, and composability) that channel potential toward clearly defined goals. The pursuit of such focused intention represents an elegant simplification, extracting maximum capability from minimal components.

What Lies Ahead?

The progression from prompt-response to goal-directed systems reveals a predictable pattern. Complexity accrues. The initial elegance of a single query yielding an answer gives way to architectures demanding governance, observability, and, inevitably, constraint. The pursuit of agency, it seems, necessitates a corresponding architecture of control. This is not progress, precisely. It is merely a shifting of the problem.

Current limitations reside not in the capacity of large language models, but in the scaffolding surrounding them. Tool use, while potent, introduces new failure modes – brittle integrations, emergent unintended consequences, and the perennial challenge of verification. Composability, lauded as a strength, requires standardization, which is anathema to rapid innovation. The tension is inherent.

Future work must address the fundamental question: how does one build a system that knows when it doesn’t know? Observability, beyond mere logging, demands introspection. Governance requires not just rules, but mechanisms for graceful degradation. Clarity is the minimum viable kindness. The goal is not more features, but fewer moving parts. Perhaps the most significant advancements will come not from adding intelligence, but from subtracting it.

Original article: https://arxiv.org/pdf/2602.10479.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-12 15:46