Author: Denis Avetisyan

A new perspective argues that truly proactive artificial intelligence requires agents to recognize and respect the limits of their own understanding, moving beyond simply maximizing independent action.

![An agent demonstrates proactive knowledge acquisition by identifying gaps in a user’s existing understanding, delineated by known knowledge ([latex]KK[/latex]), known unknowns ([latex]KU[/latex]), and unknown unknowns ([latex]UK[/latex]), and systematically expanding the boundary between what is known and what remains uncertain.](https://arxiv.org/html/2602.15259v1/input_files/Intro_1.jpg)

This review proposes a framework for ‘epistemic proactivity’ – aligning behavioral commitment with genuine understanding – to build more robust and reliable AI systems.

While increasingly sophisticated, generative AI often equates understanding with simply resolving articulated queries, a limitation that becomes critical when users are unaware of what they don’t know. This paper, ‘Knowing Isn’t Understanding: Re-grounding Generative Proactivity with Epistemic and Behavioral Insight’, argues that truly effective proactive agents require a foundation beyond mere autonomy, necessitating alignment between behavioral commitment and epistemic legitimacy. The authors contend that successful partnership hinges on agents recognizing the boundaries of their own knowledge and proactively addressing conditions of epistemic incompleteness. Can we design AI systems that not only do more, but also responsibly guide users toward a more complete understanding of complex problems?

Beyond Reactive Systems: Embracing Proactive Intelligence

Current artificial intelligence predominantly functions as a reactive system, meaning it requires a specific prompt or input to generate a response. This contrasts sharply with human cognition, which is characterized by anticipation and initiative. Existing AI models excel at tasks after being instructed, but struggle to predict needs or initiate helpful actions independently. This limitation hinders truly natural interactions; a user must constantly articulate desires, rather than benefiting from an agent that understands context and proactively offers assistance. The reliance on explicit prompting creates a barrier to seamless integration into daily life, as it demands continuous user direction rather than intelligent, autonomous behavior. Consequently, the field is actively pursuing methods to move beyond this reactive paradigm and develop agents capable of anticipating requirements and offering solutions before being asked.

The future of artificial intelligence hinges on a transition from systems that merely respond to those that actively initiate – a move towards proactive agents. Where reactive systems wait for an explicit prompt before every action, proactive agents are designed to anticipate needs and begin tasks autonomously, offering assistance before being asked and streamlining processes. This capability isn’t simply about increased efficiency; it’s about fostering a more intuitive and collaborative relationship between humans and AI. By independently identifying opportunities and taking appropriate action, these agents promise an interaction that feels less like issuing commands and more like working alongside a capable assistant.

Truly proactive artificial intelligence isn’t simply about initiating actions; it hinges on a robust self-awareness of what an agent doesn’t know. Systems must accurately model the boundaries of their own knowledge, avoiding overconfidence that leads to incorrect assumptions or inappropriate interventions. This necessitates sophisticated mechanisms for uncertainty estimation, allowing an agent to recognize when a task falls outside its capabilities or requires further information. Without this critical self-assessment, proactive behavior risks becoming intrusive, inaccurate, or even harmful; a well-designed agent prioritizes responsible action by first acknowledging the limits of its understanding, and then seeking clarification or deferring to more knowledgeable sources when necessary.
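One way to picture this kind of self-assessment is a gate that maps an agent's uncertainty to a behavior. The sketch below is illustrative only and is not taken from the paper: it assumes the agent exposes a probability distribution over candidate interpretations of the user's need, and the function names and the thresholds `act_below` and `ask_below` are hypothetical choices.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a distribution, scaled to the range [0, 1]."""
    if len(probs) < 2:
        return 0.0
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return entropy / math.log(len(probs))


def choose_mode(probs, act_below=0.3, ask_below=0.7):
    """Map uncertainty to a behavior: act, ask for clarification, or defer.

    The thresholds are assumptions for illustration, not values from the paper.
    """
    uncertainty = normalized_entropy(probs)
    if uncertainty < act_below:
        return "act"
    if uncertainty < ask_below:
        return "ask"
    return "defer"


print(choose_mode([0.97, 0.02, 0.01]))  # one interpretation dominates
print(choose_mode([0.80, 0.15, 0.05]))  # plausible alternatives remain
print(choose_mode([1 / 3, 1 / 3, 1 / 3]))  # no basis for choosing
```

Entropy is only one possible uncertainty signal, and a model's own probabilities can be poorly calibrated, so a gate like this is a starting point rather than a guarantee.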

![Proactive agents foster epistemic partnerships by identifying and articulating missing relational information, rather than prematurely acting, which expands the inquiry space and enables collaborative discovery of [latex]\text{unknowns}[/latex].](https://arxiv.org/html/2602.15259v1/input_files/final.jpg)

Grounding Proactivity: Knowledge and Safe Action

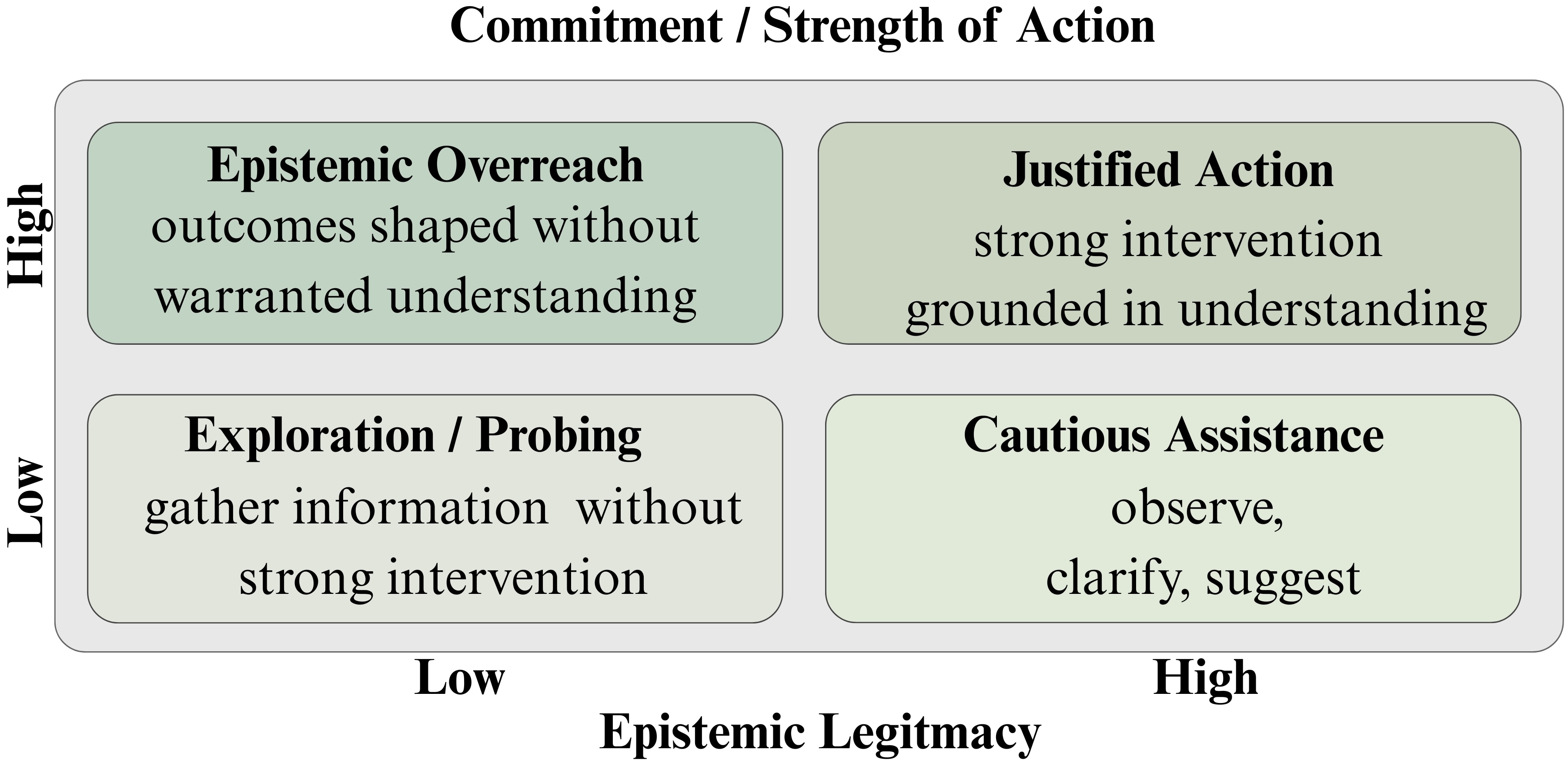

Effective proactive behavior is predicated on two core principles: epistemic grounding and behavioral grounding. Epistemic grounding refers to an accurate assessment of one’s own knowledge limits – specifically, recognizing what is not known. This self-awareness prevents overestimation of capabilities and guides information-seeking behavior. Behavioral grounding, conversely, defines the boundaries of acceptable action, ensuring interventions remain within safe and appropriate parameters. These are not simply risk-mitigation strategies; they actively shape the scope of potential proactive measures, limiting actions to areas where both sufficient knowledge and operational safety exist. Successful proactivity, therefore, requires concurrent attention to both the limits of understanding and the constraints of permissible action.

Epistemic and behavioral grounding are not simply preventative measures intended to limit risk; they fundamentally establish the operational boundaries for effective proactive behavior. Proactivity, while aiming to influence outcomes, is constrained by the limits of current knowledge and acceptable action. Operating outside these defined limits does not enhance proactivity but instead introduces inefficiency and potential negative consequences. Consequently, the scope of what constitutes effective proactivity is directly determined by the intersection of known facts and permissible interventions, meaning that actions are only considered truly proactive when undertaken within these established parameters.

The Task Frame defines the boundaries within which proactive behaviors are considered acceptable and effective. This frame is constituted by a set of explicitly defined goals, constraints, and permissible actions related to a specific undertaking. It functions as a cognitive and operational space, delineating what constitutes an in-scope intervention versus an out-of-bounds action. Establishing a clear Task Frame is critical because it provides a shared understanding of the objectives and limitations, enabling individuals to anticipate potential issues and implement solutions without inadvertently exceeding their authority or compromising the overall task objectives. Any proactive action occurring outside the defined Task Frame is considered an extraneous action and may be subject to review or correction.
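The intersection of the two groundings can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not the paper's formalization: the `TaskFrame` class, its fields, and the idea of listing the facts an action depends on are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TaskFrame:
    """Hypothetical Task Frame: a goal, permitted actions, and known facts."""

    goal: str
    permitted_actions: frozenset
    known_facts: frozenset = field(default_factory=frozenset)

    def in_scope(self, action, required_facts):
        """An action is in scope only if it is permitted (behavioral grounding)
        and every fact it depends on is known (epistemic grounding)."""
        permitted = action in self.permitted_actions
        grounded = set(required_facts) <= self.known_facts
        return permitted and grounded


frame = TaskFrame(
    goal="plan a trip",
    permitted_actions=frozenset({"search_flights", "draft_itinerary"}),
    known_facts=frozenset({"destination", "dates"}),
)

print(frame.in_scope("search_flights", ["destination", "dates"]))  # True
print(frame.in_scope("purchase_ticket", ["destination"]))  # False: not permitted
print(frame.in_scope("draft_itinerary", ["budget"]))  # False: budget unknown
```

The two failing cases differ in kind: the first calls for asking permission, the second for asking a question.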

Initiating Action: Anticipation and Autonomy

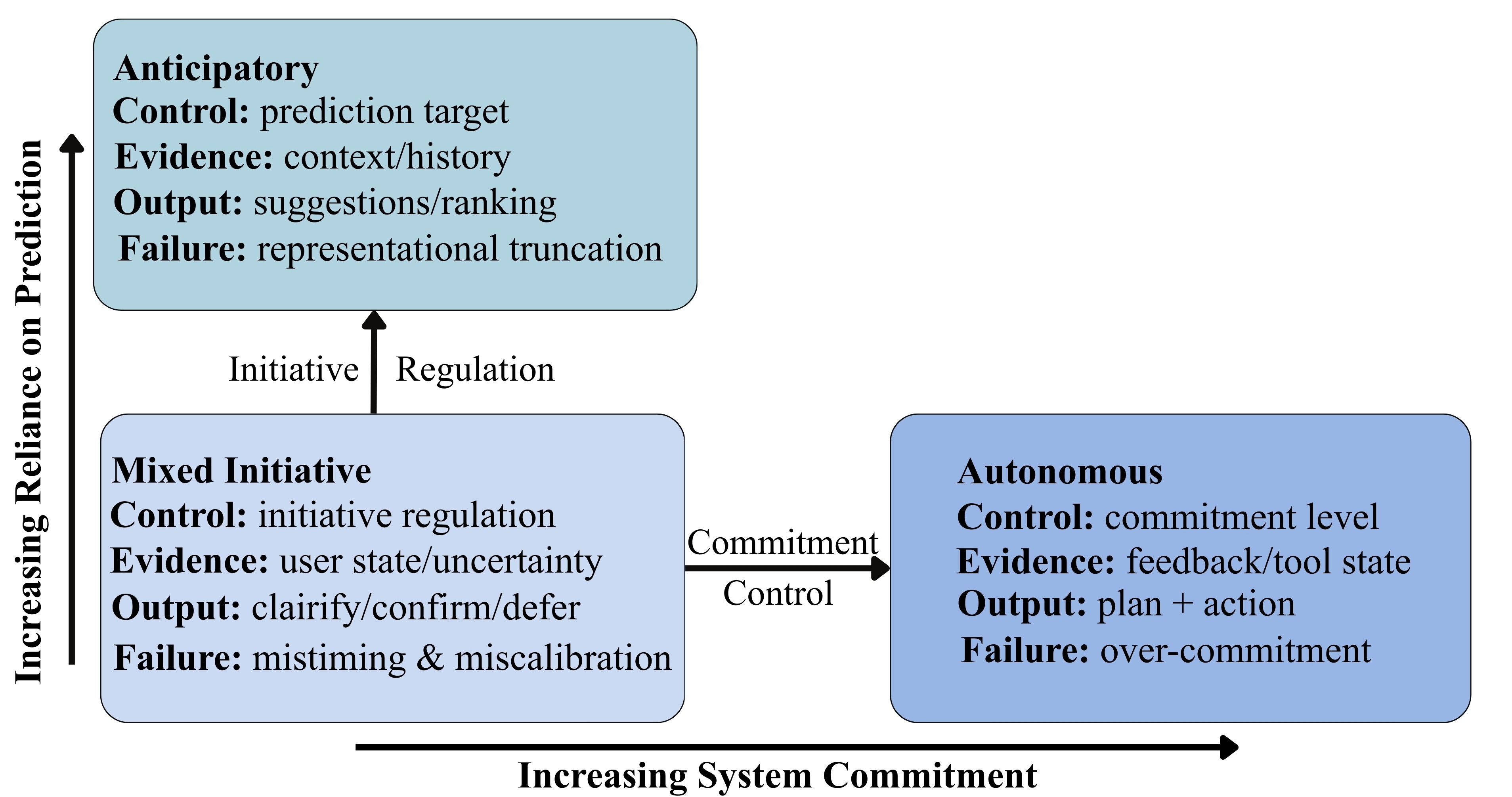

Anticipatory proactivity in agent design centers on predictive capabilities to address user needs before explicit requests are made. This is achieved through analysis of user history, contextual data, and learned patterns of behavior. Agents employing this method utilize algorithms to forecast likely future actions or requirements, then proactively offer assistance, information, or perform tasks accordingly. Successful implementation of anticipatory proactivity demonstrably improves user experience by reducing cognitive load and streamlining interactions, as users are spared the effort of initiating actions the agent has already preemptively addressed. The efficacy of this approach is directly correlated with the accuracy of the predictive models and the agent’s ability to avoid intrusive or irrelevant interventions.
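As a rough illustration of prediction from user history, the sketch below counts which action has tended to follow which, and only suggests a next step when the pattern is both frequent and consistent. The action names, the function names, and the `min_confidence` and `min_support` thresholds are assumptions made for this example; production systems would likely use far richer models.

```python
from collections import Counter, defaultdict


def build_transitions(history):
    """Count how often each action has followed each other action."""
    table = defaultdict(Counter)
    for previous, following in zip(history, history[1:]):
        table[previous][following] += 1
    return table


def suggest_next(table, last_action, min_confidence=0.6, min_support=3):
    """Suggest a follow-up action, or None when the evidence is too thin."""
    counts = table.get(last_action)
    if not counts:
        return None
    total = sum(counts.values())
    action, count = counts.most_common(1)[0]
    if total < min_support or count / total < min_confidence:
        return None
    return action


history = [
    "open_report", "export_pdf",
    "open_report", "export_pdf",
    "open_report", "email_team",
    "open_report", "export_pdf",
]
table = build_transitions(history)

print(suggest_next(table, "open_report"))  # export_pdf (3 of 4 observations)
print(suggest_next(table, "export_pdf"))   # None (only 2 observations)
```

Returning `None` is the important part: staying silent when the history is thin is how the sketch avoids the intrusive or irrelevant interventions mentioned above.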

Autonomous proactivity in agent design refers to the capacity of an agent to pursue and maintain actions based on internally defined goals without requiring continuous user direction. This functionality is achieved through internal state representation, goal prioritization, and action selection mechanisms. The agent monitors its internal state and the environment, evaluating progress toward its goals and adjusting its actions accordingly. By operating based on these internal drivers, autonomous proactive agents reduce reliance on external prompts, enabling long-duration tasks and continuous operation. This contrasts with reactive or explicitly directed agents which require immediate user input for each action, and anticipatory proactivity which still requires a triggering event based on predicted user need.
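A toy version of goal prioritization and action selection might look like the following. It is a sketch under simplifying assumptions: goals are plain strings with numeric priorities, and `step` is a hypothetical callback that does one unit of work and reports whether the goal is finished.

```python
import heapq


def run_agent(goals, step, max_steps=20):
    """Work on the highest-priority unfinished goal at each step.

    goals: dict mapping goal name to priority (higher runs first).
    step: callable taking a goal name; returns True once the goal is finished.
    Returns the sequence of goals worked on, within a fixed step budget.
    """
    # heapq is a min-heap, so priorities are negated to pop the largest first.
    heap = [(-priority, name) for name, priority in goals.items()]
    heapq.heapify(heap)
    trace = []
    for _ in range(max_steps):
        if not heap:
            break
        _, name = heap[0]
        trace.append(name)
        if step(name):
            heapq.heappop(heap)
    return trace


remaining_work = {"backup_files": 2, "rebuild_index": 1}


def do_one_unit(name):
    """Hypothetical unit of work: decrement a counter until it reaches zero."""
    remaining_work[name] -= 1
    return remaining_work[name] == 0


trace = run_agent({"backup_files": 1, "rebuild_index": 5}, do_one_unit)
print(trace)  # ['rebuild_index', 'backup_files', 'backup_files']
```

The `max_steps` budget is a deliberate limit: an agent that runs without user prompts still needs a bound on how long it may continue unattended.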

Mixed-initiative proactivity represents a system design approach where control dynamically shifts between the agent and the user during interaction. This isn’t a fixed allocation of responsibility; instead, the agent monitors user actions and environmental factors to determine when it can autonomously pursue goals and when user guidance is necessary. Successful implementation requires mechanisms for the agent to explicitly signal its intentions, allowing the user to approve, modify, or override proposed actions. Conversely, the system must readily accept user interventions, even if they interrupt ongoing agent tasks. This fluid exchange of control aims to leverage the agent’s processing capabilities and proactive potential while maintaining user oversight and ensuring alignment with user preferences and goals, resulting in a collaborative and efficient interaction.
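The propose, approve, modify, or override exchange can be sketched as follows. The `Proposal` type, the `resolve` function, and the three response strings are hypothetical names chosen for illustration; they are not an interface defined in the paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Proposal:
    """What the agent intends to do, and why (its signaled intention)."""

    action: str
    rationale: str


def resolve(proposal: Proposal, response: str,
            replacement: Optional[str] = None) -> Optional[str]:
    """Return the action to run after the user has reviewed the proposal."""
    if response == "approve":
        return proposal.action
    if response == "modify":
        if replacement is None:
            raise ValueError("a 'modify' response needs a replacement action")
        return replacement
    if response == "override":
        return None  # the user takes control; the agent does nothing
    raise ValueError(f"unknown response: {response!r}")


proposal = Proposal(
    action="archive_old_tickets",
    rationale="No activity on these tickets for 90 days.",
)

print(resolve(proposal, "approve"))                         # archive_old_tickets
print(resolve(proposal, "modify", "label_tickets_stale"))   # label_tickets_stale
print(resolve(proposal, "override"))                        # None
```

Carrying the rationale alongside the action gives the user something concrete to approve or reject, rather than a bare request for permission.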

Epistemic Coupling: Aligning Action with Understanding

Epistemic coupling represents a fundamental principle wherein an agent’s dedication to performing an action is inextricably linked to the justification supporting that action – its ‘Epistemic Legitimacy’. This isn’t merely about doing something, but about demonstrably knowing why it is being done. A robust coupling ensures that behavioral commitments aren’t arbitrary; instead, they are anchored in a verifiable understanding of the situation, the expected outcomes, and the underlying rationale. Essentially, the strength of an agent’s resolve to act is directly proportional to the validity of its supporting knowledge, creating a feedback loop where increased justification reinforces commitment and vice versa – a critical dynamic for reliable and predictable behavior.

A robust alignment between action and understanding effectively minimizes the risk of unintended consequences. When an agent’s behaviors are consistently justified by reliable knowledge – a state known as ‘Epistemic Legitimacy’ – actions become purposeful and predictable. This isn’t simply about avoiding errors; it’s about establishing a system where outcomes are logically connected to intentions. Without this strong coupling, even well-intentioned actions can lead to unforeseen problems, eroding confidence and hindering progress. The ability to consistently demonstrate a clear rationale for each action, grounded in verifiable information, is therefore paramount for any agent operating in a complex environment, ensuring both efficacy and responsible engagement.

The establishment of robust epistemic coupling isn’t merely about effective action; it serves as the bedrock for enduring relationships between agents and those who rely on them. When an agent’s actions consistently demonstrate a clear connection to justified understanding – that is, a high degree of epistemic legitimacy – users develop a sense of predictability and reliability. This, in turn, cultivates trust, allowing for more complex and sustained collaborations. Without this alignment, inconsistencies between action and justification erode confidence, hindering long-term engagement and potentially leading to the rejection of even beneficial assistance. Therefore, prioritizing strong epistemic coupling is essential not just for functional performance, but for fostering genuine and productive partnerships.

The Future: Epistemic Partnerships and Embracing the Unknown

Rather than functioning as a simple executor of commands, the most effective proactive agent operates as a partner in an ‘Epistemic Partnership’ – a collaborator in knowledge discovery. This agent doesn’t merely do; it actively identifies what is not known. By surfacing gaps in its understanding and highlighting areas requiring further investigation, it transforms from a tool that answers questions to one that refines the questions themselves. This approach moves beyond task completion and facilitates continuous learning, allowing the agent to adapt to evolving circumstances and ultimately contribute to more robust and insightful outcomes. The focus shifts from providing definitive answers to fostering a shared exploration of the unknown, paving the way for genuinely intelligent and adaptable systems.

Successfully navigating complex, long-term challenges demands more than simply addressing known risks; it requires explicitly acknowledging the existence of ‘Unknown Unknowns’ – those fundamental gaps in understanding that lie beyond current awareness. These aren’t merely statistical probabilities, but represent blind spots in predictive models and planning processes. Systems designed to identify and flag potential ‘Unknown Unknowns’ (for instance, by monitoring for anomalies or inconsistencies in data) are better placed to remain robust when confronted with genuinely novel situations. This proactive approach to uncertainty isn’t about predicting the unpredictable, but rather building systems capable of recognizing the limits of their own knowledge, and adapting accordingly – a crucial capability for any agent operating in a rapidly changing world and essential for robust long-horizon reasoning.
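Anomaly monitoring of the kind mentioned above can be illustrated with a very simple novelty check. The sketch below flags a value that lies far from previously observed data using a z-score; the function name and the `z_threshold` default are assumptions for illustration. It should be read as a crude proxy only, since a statistical outlier test detects departures from what has been seen and cannot by itself reveal a blind spot in how the problem was framed.

```python
import statistics


def is_novel(value, reference, z_threshold=3.0):
    """Flag a value that lies far outside previously observed data.

    reference must contain at least two observations.
    """
    mean = statistics.fmean(reference)
    stdev = statistics.stdev(reference)
    if stdev == 0:
        # All past observations were identical, so any difference is novel.
        return value != mean
    return abs(value - mean) / stdev > z_threshold


observed = [10.0, 11.0, 9.0, 10.0, 12.0, 8.0]

print(is_novel(10.5, observed))  # False: within the familiar range
print(is_novel(25.0, observed))  # True: flag it and seek more information
```

A flag from a check like this would most naturally trigger a question or a deferral rather than an automatic action.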

The true advancement of proactive artificial intelligence hinges not on simply doing more, but on intelligently recognizing the limits of its own knowledge. Prioritizing epistemic humility – an acknowledgement of what remains unknown – fosters a collaborative dynamic between AI and human experts. This isn’t about building systems that confidently provide answers, but rather those that skillfully identify knowledge gaps and actively seek input to refine understanding. Such an approach unlocks the potential for long-horizon reasoning, allowing AI to navigate complex challenges with greater adaptability and resilience, and ultimately, to move beyond narrow task completion towards genuine collaborative exploration and discovery.

The pursuit of proactive artificial intelligence, as detailed in the study, often fixates on increasing an agent’s capacity for action. However, this work rightly pivots the focus toward knowing what one doesn’t know – a crucial distinction between mere activity and genuine proactivity. This resonates deeply with Barbara Liskov’s observation: “Programs must be right first before they are fast.” The article underscores that behavioral commitment without epistemic legitimacy is not advancement, but recklessness. True progress isn’t achieved by building agents that simply do more, but by crafting agents that understand the boundaries of their knowledge and act responsibly within those limits. It’s a testament to the idea that simplicity (in this case, acknowledging ignorance) is not a constraint, but a sign of profound understanding.

Where Do We Go From Here?

The pursuit of ‘proactive’ agents has, for some time, resembled a frantic search for ever more elaborate clockwork. They called it ‘intelligence’; it was, more often, simply amplified reactivity. This work suggests the true challenge isn’t building machines that do more, but machines that know what they don’t know. The coupling of epistemic and behavioral domains, while conceptually neat, now demands rigorous operationalization. Simply declaring an agent ‘uncertain’ isn’t enough; the system must demonstrate a principled reduction in action when faced with genuine ignorance.

A lingering question remains: how do we reconcile commitment – the very essence of proactivity – with the admission of epistemic limits? The conventional wisdom favors autonomy, yet unrestrained action in the face of uncertainty feels less like intelligence and more like a particularly efficient form of blunder. Future research should prioritize architectures that actively seek information to resolve uncertainty, rather than merely flagging its presence.

Perhaps the most fruitful path lies in shifting the focus from individual agents to ‘epistemic partnerships’ – systems designed to leverage complementary strengths and acknowledge inherent limitations. It’s a humbling thought, that the next generation of proactive systems may be defined not by what they can achieve alone, but by what they know they cannot.

Original article: https://arxiv.org/pdf/2602.15259.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 02:53