Author: Denis Avetisyan

A new framework is emerging to enable AI agents to collaboratively tackle complex tasks with verifiable trust and adaptive coordination.

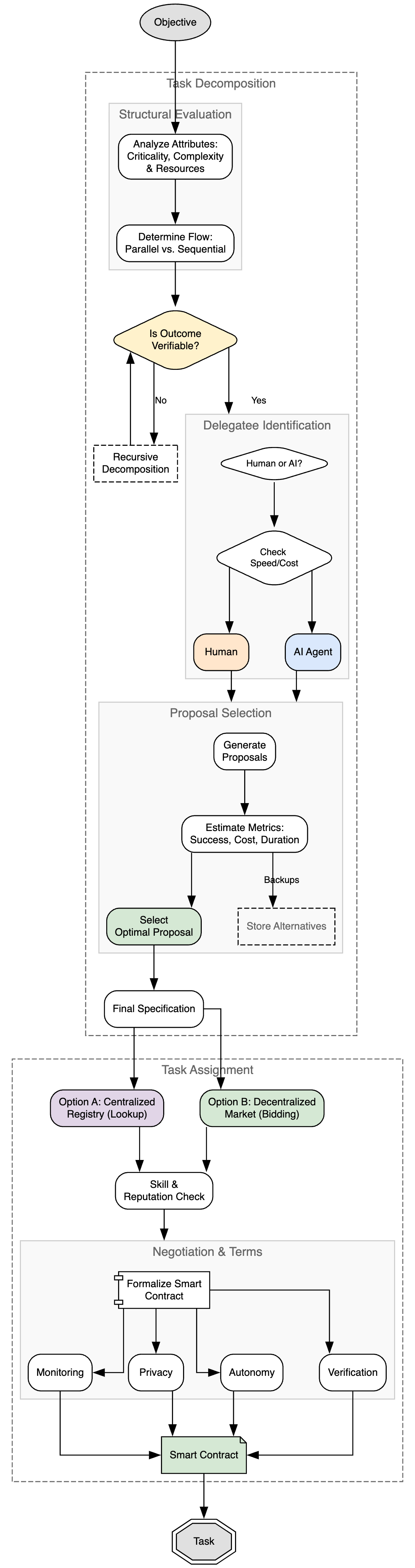

This review details a system for intelligent delegation, focusing on task decomposition, agentic safety, and reliable multi-agent coordination.

Despite advances in artificial intelligence, reliably tackling complex, real-world tasks demands more than individual agent capability. This paper introduces a framework for ‘Intelligent AI Delegation’, proposing an adaptive system for decomposing problems and distributing work between AI agents and humans, built on principles of verifiable trust and clear accountability. The core of this approach lies in dynamically allocating tasks while establishing robust mechanisms for role definition, intent clarity, and failure mitigation within multi-agent networks. Could such a system unlock the full potential of agentic systems and pave the way for safer, more effective collaboration between humans and AI?

The Fragility of Centralized Trust

Historically, the assignment of tasks has depended on placing trust in a central authority – a manager, a system administrator, or a single coordinating agent. This centralized model, while seemingly efficient, introduces critical vulnerabilities; the entire process collapses if that central point fails, is compromised, or acts maliciously. Consider a supply chain reliant on a single verification server – a disruption there halts production. Or a smart home controlled by a lone hub – a security breach grants access to everything. This inherent single point of failure extends to increasingly complex systems, where the concentration of trust creates a tempting target and limits scalability. The reliance on a single entity for delegation not only hinders resilience but also restricts adaptability, as the system struggles to respond effectively to changing circumstances or unforeseen events.

Contemporary automated systems often falter when deployed in intricate, decentralized settings due to fundamental challenges in ensuring verifiable actions, clear accountability, and dynamic adaptability. Existing architectures typically lack the granular transparency needed to rigorously confirm that delegated tasks were executed correctly, making it difficult to pinpoint responsibility when errors occur. Moreover, these systems are frequently rigid, struggling to adjust to unforeseen circumstances or shifting priorities within a complex environment, a significant limitation when dealing with real-world scenarios characterized by constant change. This inability to provide demonstrable proof of performance, coupled with a lack of responsive flexibility, ultimately restricts the reliable implementation of autonomous agents and collaborative robotic networks across varied and unpredictable landscapes.

The advancement of artificial intelligence and the vision of interconnected multi-agent systems are significantly constrained by current limitations in delegating tasks effectively. Without reliable mechanisms for assigning responsibility and verifying completion, these systems struggle to operate autonomously in complex scenarios. A robust delegation framework isn’t simply about issuing commands; it requires ensuring agents can confidently rely on each other, trace actions back to their origin for accountability, and adapt to unforeseen circumstances without centralized control. This deficiency impacts scalability, as managing trust becomes harder with each added agent (the number of possible pairwise trust relationships grows quadratically with the number of agents), and ultimately limits the potential for these systems to tackle intricate, real-world problems requiring distributed collaboration and independent decision-making. Consequently, progress in areas like robotic swarms, decentralized finance, and autonomous supply chains remains hampered until these foundational delegation challenges are addressed.

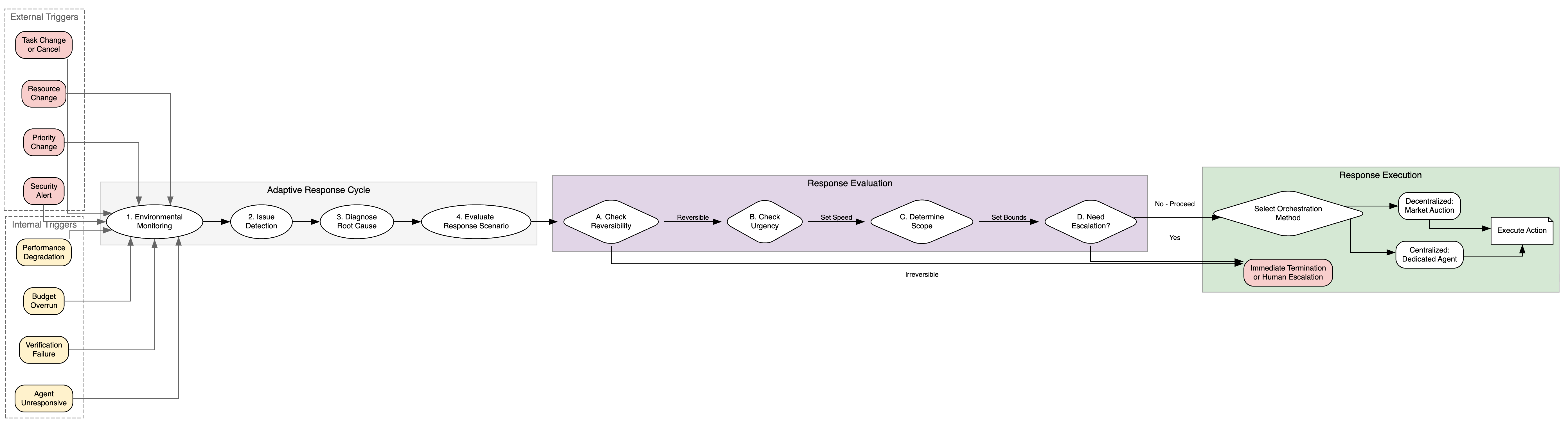

Architecting for Resilience: Intelligent Delegation

Intelligent Delegation moves beyond static task assignment by incorporating ongoing verification procedures and dynamic adjustment capabilities. Traditional delegation typically involves a one-time authorization and subsequent execution without further oversight. In contrast, Intelligent Delegation employs continuous monitoring of task progress and results against predefined criteria. This allows the system to detect deviations from expected behavior, trigger corrective actions, or re-allocate resources as needed. Runtime adaptation further enables the framework to respond to changing environmental conditions or unforeseen circumstances, ensuring tasks are completed successfully even in dynamic or uncertain environments. This proactive approach contrasts with reactive error handling and enhances the overall robustness and reliability of the delegated processes.
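
The monitor-and-reallocate loop described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the agent model (a factory that yields numeric checkpoints), the `supervise` function, and the `flaky` and `steady` agents are all hypothetical names introduced here.

```python
from typing import Callable, Iterator, List, Sequence, Tuple

# Hypothetical agent model: calling the factory starts the task and yields
# intermediate checkpoints; the last checkpoint is treated as the result.
AgentFactory = Callable[[], Iterator[float]]


def supervise(agents: Sequence[Tuple[str, AgentFactory]],
              within_bounds: Callable[[float], bool]) -> Tuple[float, List[str]]:
    """Check every checkpoint; on a deviation, hand the task to the next agent."""
    log: List[str] = []
    for name, start in agents:
        last = None
        deviated = False
        for checkpoint in start():
            if not within_bounds(checkpoint):
                log.append(f"{name}: deviation at {checkpoint}, reallocating")
                deviated = True
                break  # stop this agent as soon as it leaves the expected range
            last = checkpoint
        if not deviated and last is not None:
            log.append(f"{name}: completed")
            return last, log
    raise RuntimeError("no agent completed the task within bounds")


def flaky() -> Iterator[float]:
    yield from (1.0, 2.0, 999.0)  # third checkpoint violates the criterion


def steady() -> Iterator[float]:
    yield from (1.0, 2.0, 3.0)


result, log = supervise([("flaky", flaky), ("steady", steady)], lambda x: x < 100)
```

Here the first agent is stopped at its out-of-range checkpoint and the task is handed to the second, so the deviation is caught during execution rather than after the final result is delivered.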

Contract-First Decomposition is a core principle of the Intelligent Delegation framework, mandating the formal specification of task inputs, outputs, and expected behavior prior to any execution. This process involves defining a verifiable contract – typically expressed as a set of assertions or pre/post conditions – that the delegated agent must adhere to. By establishing these constraints upfront, the system can validate the agent’s actions against the defined contract during runtime, enabling early detection of deviations or malicious behavior. This preemptive verification step is crucial for ensuring task integrity and security, as it allows for intervention or termination of the task before any damage can occur, and provides a clear basis for auditing and accountability.
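
As a rough sketch of what such a contract might look like in code, the example below wraps a delegated call in precondition and postcondition checks. The names `TaskContract`, `execute_under_contract`, and `sort_contract` are assumptions made for illustration; the paper does not prescribe this interface.

```python
from dataclasses import dataclass
from typing import Any, Callable


class ContractViolation(Exception):
    """Raised when a task's input or output breaks its contract."""


@dataclass(frozen=True)
class TaskContract:
    name: str
    precondition: Callable[[Any], bool]        # checked on the input
    postcondition: Callable[[Any, Any], bool]  # checked on (input, output)


def execute_under_contract(contract: TaskContract,
                           agent: Callable[[Any], Any],
                           task_input: Any) -> Any:
    # Reject bad input before the agent runs at all.
    if not contract.precondition(task_input):
        raise ContractViolation(f"{contract.name}: input rejected before execution")
    output = agent(task_input)
    # Validate the result before it is handed back to the delegator.
    if not contract.postcondition(task_input, output):
        raise ContractViolation(f"{contract.name}: output violates postcondition")
    return output


# Hypothetical contract: the delegate must return the input integers in sorted order.
sort_contract = TaskContract(
    name="sort-integers",
    precondition=lambda xs: isinstance(xs, list) and all(isinstance(x, int) for x in xs),
    postcondition=lambda xs, ys: ys == sorted(xs),
)

checked = execute_under_contract(sort_contract, sorted, [3, 1, 2])
```

An agent that returns its input unchanged would fail the postcondition for `[3, 1, 2]` and raise `ContractViolation`, which gives the delegator a concrete, auditable reason for rejecting the work.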

Delegation Capability Tokens are cryptographically secured credentials that precisely define the permissions granted to an agent for a specific task. These tokens operate on the principle of least privilege, limiting an agent’s access only to the resources and actions absolutely necessary for completion. Implementation involves associating each token with a digitally signed policy outlining permissible actions, resource access, and execution timeframes. Should an agent be compromised, the damage radius is contained by the restricted scope of the token; unauthorized actions outside the defined policy will be rejected by the system. Token revocation mechanisms are also integral, enabling immediate disabling of compromised credentials and preventing further malicious activity.
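
A scoped, expiring, revocable token of this kind could look roughly like the sketch below. It is a simplification under stated assumptions: an HMAC with a shared issuer key stands in for the digital signature the text describes (a real deployment would more likely use an asymmetric signature scheme), and `issue_token`, `authorize`, `SECRET`, and `REVOKED` are hypothetical names.

```python
import hashlib
import hmac
import json
import time

SECRET = b"demo-issuer-key"  # assumption: issuer and verifier share this key
REVOKED = set()              # ids of tokens that have been revoked


def _sign(policy: dict) -> str:
    payload = json.dumps(policy, sort_keys=True).encode()
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def issue_token(token_id, agent, actions, resources, ttl_seconds, now=None):
    """Bind an agent to an explicit list of actions, resources, and an expiry."""
    now = time.time() if now is None else now
    policy = {
        "id": token_id,
        "agent": agent,
        "actions": sorted(actions),
        "resources": sorted(resources),
        "expires": now + ttl_seconds,
    }
    return {"policy": policy, "signature": _sign(policy)}


def authorize(token, action, resource, now=None) -> bool:
    """Allow a request only if the token is authentic, live, and covers it."""
    now = time.time() if now is None else now
    policy = token["policy"]
    if not hmac.compare_digest(_sign(policy), token["signature"]):
        return False  # policy was altered after issuance
    if policy["id"] in REVOKED or now >= policy["expires"]:
        return False  # revoked or expired
    return action in policy["actions"] and resource in policy["resources"]


token = issue_token("t1", "agent-7", ["read"], ["invoices/2026"], 60, now=1000.0)
```

With this token, `authorize(token, "read", "invoices/2026", now=1010.0)` succeeds, while a `"write"` request, a request after the expiry time, or any request after `REVOKED.add("t1")` is refused.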

Anchoring Trust: Security and Accountability in Practice

A Trusted Execution Environment (TEE) is a secure area of a main processor that is designed to execute code and process data in isolation from the rest of the system. This isolation is achieved through hardware-based security features, creating a secure enclave intended to resist software attacks even when the operating system is compromised. TEEs build on technologies like ARM TrustZone and Intel SGX to provide confidentiality and integrity for sensitive operations such as cryptographic key storage, digital rights management, and secure authentication. The enclave operates independently from the rich execution environment, protecting critical assets from unauthorized access or modification during task execution and offering stronger protection than software-based isolation alone.

Liability firebreaks, implemented with escrow mechanisms, mitigate financial risk in delegated tasks by segmenting responsibility and establishing predefined financial limitations at each stage of execution. These firebreaks function by requiring funds to be held in escrow, released incrementally upon verified completion of task segments, and reverting to the originator if pre-defined service level agreements are not met. This process creates a clear chain of accountability, as each delegate is financially responsible only for the portion of the task they execute, and protects the originator from losses due to non-performance or malicious activity further down the delegation chain. The escrow acts as collateral, ensuring that financial recourse is available without requiring protracted legal proceedings.
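
The incremental release-or-revert behavior can be illustrated with a small ledger. This is a sketch under assumptions: the `Escrow` class, its field names, and the segment names are hypothetical, amounts are integer cents, and the verification step itself is reduced to a boolean supplied by the caller.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Escrow:
    held: Dict[str, int]             # segment name -> amount locked, in cents
    paid_to_delegate: int = 0
    refunded_to_originator: int = 0

    def settle(self, segment: str, verified: bool) -> int:
        """Release one segment's funds to the delegate, or return them."""
        # pop() raises KeyError for an unknown or already settled segment,
        # so no segment can be paid out twice.
        amount = self.held.pop(segment)
        if verified:
            self.paid_to_delegate += amount
        else:
            self.refunded_to_originator += amount
        return amount


escrow = Escrow(held={"collect": 4000, "analyze": 6000})
escrow.settle("collect", verified=True)    # segment met its agreed criteria
escrow.settle("analyze", verified=False)   # segment failed, funds revert
```

Because each segment carries its own locked amount, a failure in one segment puts at most that segment's funds at stake, which is the firebreak property the paragraph describes.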

Real-time monitoring is achieved through the implementation of Server-Sent Events (SSE), enabling continuous updates on task execution status without requiring the client to repeatedly poll for changes. This allows for dynamic adjustments to task parameters or, if necessary, intervention to prevent failures or mitigate risks. Concurrently, Zero-Knowledge Proofs (ZKPs) are utilized to cryptographically verify that a delegated task has been completed correctly, without revealing any underlying sensitive data used in the computation. ZKPs provide assurance of outcome validity while preserving data privacy, as the verifier only confirms the proof’s correctness and not the data itself. This combination of real-time monitoring and privacy-preserving verification enhances both operational control and data security within delegated task execution.
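
To make the monitoring side concrete, the sketch below formats and parses task-status updates in the Server-Sent Events wire format (`event:` and `data:` fields, with a blank line ending each event). It handles only a subset of the format, and the event names and payload fields (`progress`, `completed`, `task`, `done`) are assumptions chosen for illustration. The zero-knowledge proof step is not shown.

```python
import json
from typing import Dict, Iterable, Iterator


def format_sse(event: str, payload: dict) -> str:
    """Serialize one status update as an SSE event."""
    return f"event: {event}\ndata: {json.dumps(payload)}\n\n"


def parse_sse(lines: Iterable[str]) -> Iterator[Dict[str, str]]:
    """Parse a subset of the SSE format: event and data fields, comments."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            if data:  # a blank line dispatches the event, if it carries data
                yield {"event": event, "data": "\n".join(data)}
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is dropped
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


stream = (
    format_sse("progress", {"task": "t1", "done": 0.5})
    + ": keep-alive\n\n"
    + format_sse("completed", {"task": "t1"})
)
events = list(parse_sse(stream.splitlines()))
```

The delegator reads events as they arrive over a single long-lived response, so it can react to a stalled or failing task without polling.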

The Human Element: Guarding Against Automation Bias

Truly effective oversight transcends mere monitoring of automated systems; it demands proactive ‘Human Oversight’ rooted in critical thinking. This isn’t about catching errors after they occur, but rather establishing a framework where humans intelligently anticipate potential issues and evaluate automated suggestions with informed skepticism. Such oversight necessitates cultivating cognitive skills – questioning assumptions, considering alternative explanations, and understanding the limitations of the technology itself. By actively engaging in this process, operators move beyond passively accepting outputs to becoming discerning collaborators with the automation, ultimately ensuring a more reliable and trustworthy outcome than simple surveillance could provide. This approach prioritizes understanding why a system suggests a particular course of action, not just that it does, fostering a resilient partnership between human intellect and artificial capability.

Cognitive friction, as a design principle, actively combats automation bias by deliberately introducing small challenges or pauses in automated processes. Rather than seamless efficiency, systems incorporating cognitive friction require users to engage with information, confirm decisions, or briefly consider alternative outcomes before an action is finalized. This isn’t about making systems more difficult, but rather about increasing attentiveness and preventing mindless acceptance of machine outputs. By forcing a moment of conscious thought, even a fleeting one, the approach encourages individuals to apply their own judgment and expertise, thereby reducing the risk of errors stemming from over-trust in algorithms or automated suggestions. The intention is to create a collaborative dynamic between humans and machines, where automation augments, but does not replace, critical thinking.
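
One way such friction might be wired into an approval step is sketched below. Everything here is hypothetical: the `Suggestion` type, the risk score and how it is computed, and the thresholds are assumptions made for illustration rather than anything specified by the framework.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    action: str
    risk: float  # 0.0 (routine) to 1.0 (severe); the scoring method is assumed


def finalize(suggestion: Suggestion,
             approved: bool,
             rationale: Optional[str] = None,
             friction_threshold: float = 0.5,
             min_rationale_words: int = 5) -> str:
    """Routine actions pass on approval; risky ones also need a written reason."""
    if not approved:
        return "rejected"
    if suggestion.risk >= friction_threshold:
        words = (rationale or "").split()
        if len(words) < min_rationale_words:
            return "needs-rationale"  # a one-click approval is not enough here
    return "executed"


routine = finalize(Suggestion("archive old logs", risk=0.1), approved=True)
risky = finalize(Suggestion("delete customer records", risk=0.9), approved=True)
```

Low-risk suggestions go through on a simple approval, while the high-risk one is held until the reviewer writes a short justification, which forces the brief pause for judgment that the paragraph describes.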

A truly resilient automated system relies not only on careful oversight, but also on a secure and nuanced authorization process; token formats such as Macaroons and Biscuits offer precisely this capability. These cryptographic techniques move beyond simple permission grants, instead allowing for the delegation of limited, revocable access rights: the holder of a token can narrow its scope before passing it on, but cannot widen it. This granular control minimizes the potential damage from compromised credentials or malicious actors, as automated systems operate only within clearly defined boundaries. Furthermore, these authorization methods support verifiable claims, ensuring that delegated tasks are executed with appropriate context and accountability, thereby building trust in the overall system and bolstering its ability to withstand both technical failures and adversarial attacks. The result is a delegation process that isn’t merely efficient, but demonstrably safe and reliable.
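
The core idea behind Macaroon-style attenuation is a chain of HMACs: each added restriction (a caveat) is folded into the signature, so a holder can add caveats but cannot remove them. The sketch below is a simplified illustration with first-party caveats only; it is not the API of any Macaroon or Biscuit library, and the function names, key, and caveat strings are hypothetical.

```python
import hashlib
import hmac
from typing import Callable, List, Tuple

Macaroon = Tuple[str, List[str], bytes]  # (identifier, caveats, signature)


def _mac(key: bytes, msg: bytes) -> bytes:
    return hmac.new(key, msg, hashlib.sha256).digest()


def mint(root_key: bytes, identifier: str) -> Macaroon:
    """Only the issuer, who knows root_key, can create a fresh token."""
    return identifier, [], _mac(root_key, identifier.encode())


def attenuate(macaroon: Macaroon, caveat: str) -> Macaroon:
    """Any holder can add a restriction; the old signature becomes the HMAC key."""
    identifier, caveats, sig = macaroon
    return identifier, caveats + [caveat], _mac(sig, caveat.encode())


def verify(root_key: bytes, macaroon: Macaroon,
           caveat_holds: Callable[[str], bool]) -> bool:
    """Recompute the HMAC chain, then require every caveat to hold."""
    identifier, caveats, sig = macaroon
    expected = _mac(root_key, identifier.encode())
    for caveat in caveats:
        expected = _mac(expected, caveat.encode())
    return hmac.compare_digest(expected, sig) and all(caveat_holds(c) for c in caveats)


root = b"service-root-key"
token = mint(root, "task-42")
token = attenuate(token, "action = read")
token = attenuate(token, "agent = sub-agent-3")

request_context = {"action = read", "agent = sub-agent-3"}
allowed = verify(root, token, request_context.__contains__)
```

Dropping a caveat from the list without knowing the earlier signature makes the recomputed chain diverge from the presented signature, so verification fails; this is what lets an agent hand a narrower token to a sub-agent without contacting the issuer.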

The pursuit of intelligent delegation, as detailed in this framework, echoes a fundamental truth about complex systems: their longevity isn’t guaranteed by initial perfection, but by graceful adaptation. The paper posits a system built on verifiable trust and adaptive coordination, recognizing that static designs will inevitably falter. This mirrors G. H. Hardy’s observation: “A mathematician, like a painter or a poet, is a maker of patterns.” Just as a mathematician refines patterns over time, so too must AI systems continuously adjust to maintain integrity and function within a dynamic environment. The proposed framework, with its emphasis on accountability, seeks to build a resilient pattern, one capable of withstanding the inevitable decay inherent in all systems, ultimately extending its functional lifespan.

What Lies Ahead?

The pursuit of intelligent delegation, as outlined in this work, inevitably encounters the limitations inherent in any complex system. The framework presented isn’t a solution, but rather a carefully constructed scaffolding. The true challenge isn’t achieving delegation itself, but understanding how these agentic networks age: how their capacity for verifiable trust erodes, and how adaptive coordination shifts over time. Systems learn to age gracefully, and the long-term resilience of such frameworks will depend not on preventing decay, but on anticipating it.

A significant unresolved aspect lies in the quantification of ‘accountability’ within multi-agent systems. While the framework proposes mechanisms for tracing task decomposition, assigning responsibility in scenarios involving emergent behavior, where the collective action deviates from individual agent intent, remains a difficult problem. The field may find it more fruitful to study the patterns of failure, rather than striving for absolute prevention.

Perhaps the most subtle, and ultimately important, direction for future research lies in accepting that some problems are best observed, not solved. Sometimes observing the process, the nuanced dance of adaptation and decay, is better than trying to speed it up. The goal shouldn’t be to build perpetually optimizing systems, but to develop the tools to understand their natural evolution.

Original article: https://arxiv.org/pdf/2602.11865.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-13 20:39