Author: Denis Avetisyan

A new review examines the evolving relationship between people and artificial intelligence, exploring how to build collaborative decision-making systems that move beyond simple assistance.

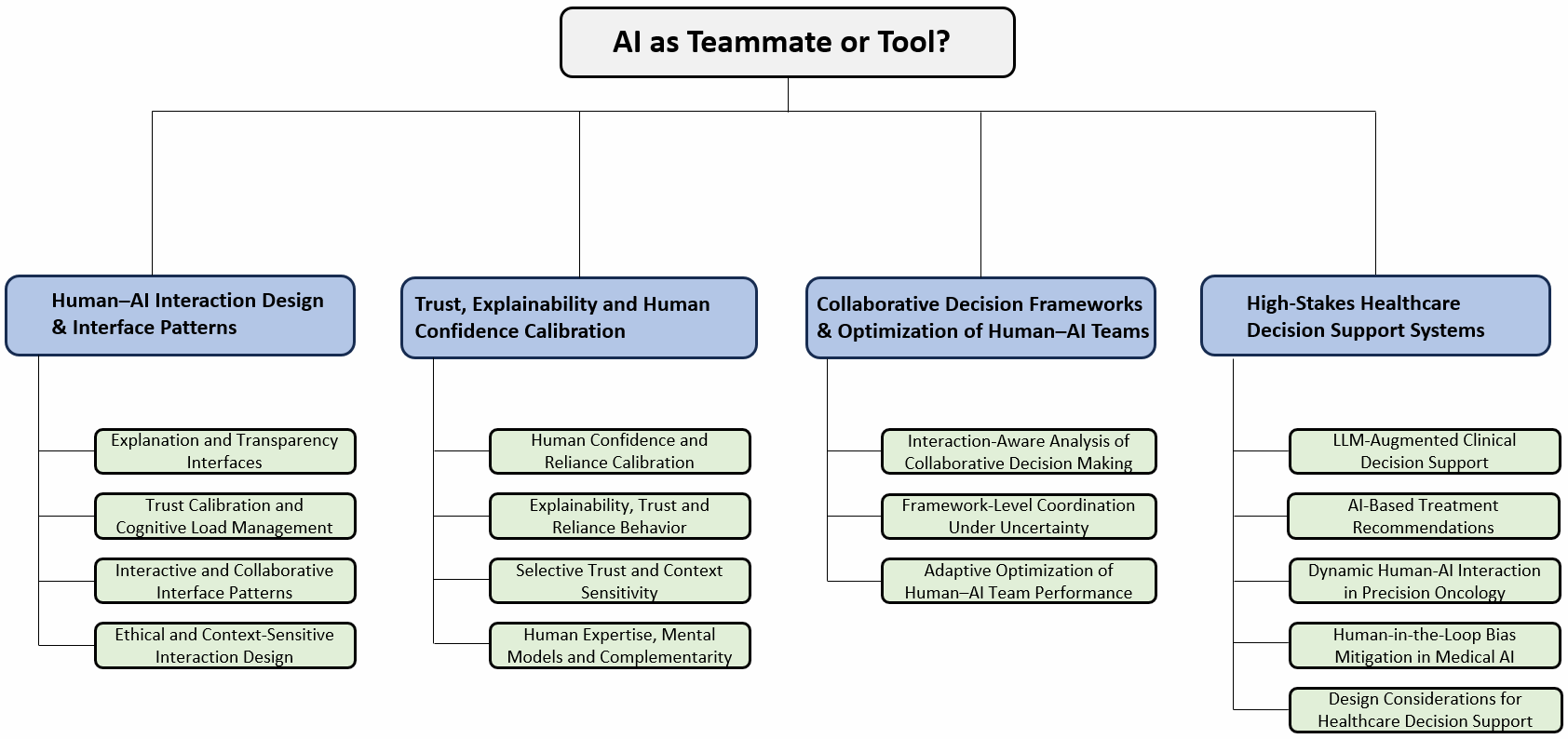

This paper surveys recent advances in Human-AI Interaction and decision support, with a focus on trust calibration, explainability, and interface design for high-stakes applications like healthcare.

Despite advances in artificial intelligence, realizing its full potential hinges on defining its role: a simple tool or a collaborative teammate. This is the central question addressed in ‘AI as Teammate or Tool? A Review of Human-AI Interaction in Decision Support’, which synthesizes the Human-AI Interaction (HAI) literature to reveal that static interfaces and miscalibrated trust currently limit AI efficacy in decision support. The review demonstrates that transitioning toward truly collaborative AI requires adaptive, context-aware interactions that foster shared mental models and dynamic negotiation of authority, which is particularly vital in domains like healthcare. Can we design interfaces that move beyond explainability to cultivate genuine partnership between humans and AI, unlocking more effective and trustworthy decision-making?

The Illusion of Seamless Intelligence

Modern healthcare presents challenges exceeding the capacity of singular intelligence, be it human or artificial. Diagnostic accuracy, treatment planning, and even preventative care now involve navigating vast datasets, considering intricate patient histories, and accounting for a rapidly expanding body of medical literature. While artificial intelligence excels at identifying patterns within these datasets, it often lacks the nuanced judgment and contextual understanding crucial for personalized care. Conversely, human clinicians, though adept at holistic assessment, are susceptible to cognitive biases and limited in how much data they can process. Consequently, optimal outcomes increasingly depend on a collaborative approach, where AI augments, but does not replace, human expertise, offering a powerful synergy to address the complexities inherent in contemporary medical decision-making.

The most impactful decisions often reside at the intersection of nuanced judgment and exhaustive data analysis, a space where neither human intellect nor artificial intelligence functions optimally in isolation. Human expertise excels at holistic assessment, contextual understanding, and ethical considerations – skills difficult to replicate algorithmically. Conversely, AI systems demonstrate unparalleled capacity for processing vast datasets and identifying subtle correlations, and they are not subject to the fatigue and cognitive biases that affect human judgment, although they can carry biases of their own. Consequently, effective decision-making increasingly relies on a synergistic partnership, leveraging the strengths of both. This isn’t about replacing human judgment with automated systems, but rather augmenting it; AI serves as a powerful analytical tool, providing insights that inform, but do not dictate, human choices, ultimately leading to more robust and well-considered outcomes.

The mere implementation of artificial intelligence systems does not guarantee improved outcomes; substantial gains arise from the quality of human-AI collaboration. Research indicates that optimal performance isn’t achieved by simply automating tasks, but by designing interfaces and workflows that leverage complementary strengths – human intuition, critical thinking, and ethical judgment combined with AI’s speed, data processing capabilities, and pattern recognition. This synergistic approach requires careful consideration of how information is presented, how decisions are made, and how trust is established between the human operator and the AI system. Ultimately, the focus must shift from building increasingly intelligent machines to cultivating intelligent interactions, enabling humans and AI to function as a unified, more effective decision-making unit.

The pursuit of truly effective decision-making increasingly centers on hybrid intelligence frameworks, moving beyond simply using artificial intelligence to strategically integrating it with human cognitive strengths. These frameworks aren’t about replacing human judgment, but rather about augmenting it; AI excels at processing vast datasets and identifying patterns, while humans provide crucial context, ethical considerations, and nuanced understanding. Successful hybrid systems prioritize optimized collaboration, distributing tasks based on comparative advantage – AI handling computationally intensive analysis and humans focusing on interpretation, innovation, and handling unforeseen circumstances. The design of these frameworks centers on seamless information exchange, intuitive interfaces, and methods for calibrating trust between human and machine, ultimately fostering a synergistic partnership that surpasses the capabilities of either entity alone.

The Trust Equation: A Necessary Calibration

Uncritical acceptance of AI recommendations introduces risk, as current systems are not infallible and can generate incorrect or suboptimal suggestions. Conversely, complete disregard for AI output prevents realization of potential benefits, including increased efficiency and access to data-driven insights. This is particularly relevant given the documented presence of biases and limitations within many AI models; while AI can augment human capabilities, it requires human oversight to mitigate errors and ensure appropriate application. Therefore, a balanced approach, one that acknowledges both the strengths and weaknesses of AI, is necessary to maximize collaborative performance.

Trust calibration, the process of aligning human confidence with the demonstrated reliability of an artificial intelligence system, is essential for maximizing collaborative performance. Misalignment – either over-reliance on an unreliable AI or unjustified dismissal of a capable one – directly impacts outcome quality and efficiency. Optimal performance is achieved when human operators accurately assess the AI’s capabilities in a given context and adjust their level of dependence accordingly; this requires quantifiable metrics of AI performance, transparent communication of system uncertainty, and mechanisms for users to provide feedback and refine their assessment of the AI’s trustworthiness over time. Failure to calibrate trust leads to either automation bias, where human operators uncritically accept AI suggestions, or disengagement, resulting in underutilization of potentially valuable AI assistance.

Accurate trust calibration relies on the assessment of multiple factors. AI confidence scores, representing the system’s own assessment of its prediction accuracy, provide a crucial data point. However, these must be weighted against human expertise; individuals with greater domain knowledge should apply more critical evaluation. Critically, a static assessment is insufficient; longitudinal evaluation of system performance – tracking accuracy rates over time and across diverse datasets – is necessary to establish a reliable baseline and identify potential drift in AI reliability. Combining these elements allows for a dynamic adjustment of human reliance, maximizing the benefits of AI assistance while mitigating risks associated with over- or under-trust.
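One way to make the comparison between stated confidence and demonstrated reliability concrete is expected calibration error (ECE), a standard metric that the text above does not itself name. The sketch below is a minimal illustration under stated assumptions: confidence scores lie in [0, 1], each prediction has a known correct/incorrect outcome, and the function names (`expected_calibration_error`, `ece_by_window`) are hypothetical rather than taken from the review.

```python
from typing import List, Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Weighted mean gap between stated confidence and observed accuracy."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if len(confidences) == 0:
        raise ValueError("need at least one prediction")

    # Group predictions into equal-width confidence bins.
    bins: List[list] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / total) * abs(mean_conf - accuracy)
    return ece


def ece_by_window(
    confidences: Sequence[float],
    correct: Sequence[bool],
    window: int,
    n_bins: int = 10,
) -> List[float]:
    """ECE over consecutive chunks of predictions, as a crude drift check."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        expected_calibration_error(
            confidences[i:i + window], correct[i:i + window], n_bins
        )
        for i in range(0, len(confidences), window)
    ]
```

A value near zero means the system's confidence tracks its accuracy; a rising sequence from `ece_by_window` would be one possible signal of the reliability drift described above. The bin count and window size are illustrative choices, not recommendations from the review.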

Humans exhibit selective trust as a cognitive mechanism wherein reliance on information, including AI recommendations, varies based on perceived situational factors and prior experience. This isn’t indicative of irrationality, but rather a rational adaptation to varying levels of uncertainty; individuals will generally defer to AI when confidence is high and the task is complex, but increase scrutiny and potentially override suggestions when confidence is low or the situation demands specific human judgment. Supporting this natural response requires systems that transparently communicate AI confidence levels and allow for easy human override, as well as interfaces designed to facilitate appropriate monitoring of AI performance in different contexts. Ignoring or suppressing selective trust can lead to both complacency when AI is incorrect and unnecessary resistance when it is accurate, hindering collaborative performance.
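The selective-trust pattern described above can be sketched as a simple routing rule. This is an illustrative assumption, not a mechanism from the review: the `Recommendation` fields, the `Action` values, and both thresholds are hypothetical, and a real system would tune them against measured reliability.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SUGGEST_ACCEPT = "suggest_accept"  # show the recommendation; human may still override
    REVIEW = "review"                  # show it with a prompt for closer scrutiny
    HUMAN_DECIDES = "human_decides"    # show it as background only; human decides


@dataclass(frozen=True)
class Recommendation:
    label: str
    confidence: float                   # system's own confidence, assumed in [0, 1]
    needs_human_judgment: bool = False  # flagged by context, e.g. an ethical trade-off


def route(
    rec: Recommendation,
    accept_at: float = 0.9,  # hypothetical threshold
    review_at: float = 0.6,  # hypothetical threshold
) -> Action:
    """Map a recommendation to the level of human scrutiny it should receive."""
    if not 0.0 <= rec.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    # Low confidence or a judgment-heavy situation keeps the human in charge.
    if rec.needs_human_judgment or rec.confidence < review_at:
        return Action.HUMAN_DECIDES
    if rec.confidence >= accept_at:
        return Action.SUGGEST_ACCEPT
    return Action.REVIEW
```

Note that even the highest tier only suggests acceptance: override stays available in every branch, which matches the requirement above that the human can always step in.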

Making the Invisible Visible: A Necessary Transparency

Transparency in AI systems is critical because users require insight into the reasoning behind automated recommendations to assess reliability and potential biases. Without understanding why an AI arrived at a specific conclusion, users are less likely to trust or effectively utilize the system’s output, particularly in high-stakes domains such as healthcare, finance, and legal decision-making. This need for rationale extends beyond simple accuracy; users must be able to evaluate whether the AI’s logic aligns with their own understanding of the situation and relevant contextual factors. A lack of transparency hinders debugging, accountability, and the identification of unintended consequences, ultimately limiting the widespread adoption and responsible implementation of AI technologies.

Explainable AI (XAI) techniques are critical for establishing user confidence in artificial intelligence systems. These techniques encompass a range of methods, notably visual explanation patterns which highlight the features driving a model’s decision, and increasingly, explanations generated by Large Language Models (LLMs). LLM-based explanations translate complex algorithmic outputs into natural language, detailing the reasoning process in a human-understandable format. The implementation of XAI is not merely about disclosing information; it directly addresses the need for accountability and reliability, enabling users to validate AI recommendations and identify potential biases or errors, thus fostering appropriate levels of trust.

Large Language Models (LLMs) are increasingly utilized to translate the decision-making processes of complex AI systems into natural language explanations. Unlike traditional methods requiring hand-engineered rules or simplified proxies, LLMs can analyze the internal states and feature importance within a model – including those based on neural networks – and generate coherent, contextualized rationales. This capability extends beyond simply identifying influential inputs; LLMs can articulate the reasoning behind a prediction, effectively summarizing the model’s logic in a human-readable format. Furthermore, LLMs can tailor explanations to specific audiences, adjusting the level of technical detail and complexity as needed, and can provide counterfactual explanations detailing how input changes would alter the output.

Effective explanations derived from AI systems directly impact the efficiency of human-AI collaboration by minimizing cognitive load. When presented with AI outputs accompanied by clear rationales, human team members require less mental effort to understand, validate, and ultimately act upon the information. This reduction in cognitive load translates to faster decision-making, improved accuracy, and increased trust in the AI system. Furthermore, well-structured explanations enable humans to identify potential errors or biases in the AI’s reasoning, fostering a more critical and informed assessment of the AI’s recommendations and promoting appropriate oversight within the collaborative workflow.

Adaptive Assistance: The Illusion of Seamless Integration

Adaptive assistance systems represent a shift from static support to dynamically adjusted collaboration, observing user actions and task complexity to provide precisely timed interventions. These systems don’t simply offer help; they learn to anticipate needs, delivering assistance only when requested or when performance dips below a defined threshold. This nuanced approach is crucial for fostering effective human-AI partnerships, as overbearing assistance can undermine user agency and trust, while insufficient support leaves individuals struggling. By tailoring the level and type of support, these systems enhance collaborative performance, allowing humans and AI to complement each other’s strengths and mitigate weaknesses, ultimately leading to more efficient and effective outcomes in complex tasks.
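The trigger described above (assist on request, or when performance dips below a threshold) might look like the following minimal sketch. The class name `AssistanceTrigger`, the use of a rolling success rate as the performance signal, and the default threshold and window are all assumptions made for illustration.

```python
from collections import deque
from typing import Deque, Optional


class AssistanceTrigger:
    """Decide when to offer help, based on recent task outcomes."""

    def __init__(self, threshold: float = 0.7, window: int = 5) -> None:
        if window < 1:
            raise ValueError("window must be at least 1")
        self.threshold = threshold
        # Keep only the most recent `window` outcomes.
        self._outcomes: Deque[bool] = deque(maxlen=window)

    def record(self, success: bool) -> None:
        """Log whether the user's latest step succeeded."""
        self._outcomes.append(success)

    def rolling_success_rate(self) -> Optional[float]:
        """Share of recent steps that succeeded, or None with no data yet."""
        if not self._outcomes:
            return None
        return sum(self._outcomes) / len(self._outcomes)

    def should_assist(self, requested: bool = False) -> bool:
        """Assist on explicit request, or when recent performance dips."""
        if requested:
            return True
        # Wait for a full window so a single early slip does not trigger help.
        if len(self._outcomes) < self._outcomes.maxlen:
            return False
        return self.rolling_success_rate() < self.threshold
```

Waiting for a full window before intervening is one way to avoid the overbearing assistance the paragraph warns about; a shorter window reacts faster but interrupts more often.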

Effective adaptive assistance hinges on a delicate balance between support and autonomy, fostering what researchers term “trust calibration.” Systems designed with this principle in mind don’t simply offer help, but intelligently gauge a user’s current capacity and provide assistance only when demonstrably needed. This avoids both over-reliance – where users become passive recipients of solutions – and under-assistance, which can lead to frustration and diminished performance. By empowering users to maintain control over the process and confidently execute tasks, these systems build a positive feedback loop; successful completion reinforces trust in the AI, while retained agency ensures the user remains an active participant. This careful orchestration of support ultimately cultivates a more robust and productive human-AI collaboration, maximizing the benefits of both intelligence sources.

The integration of adaptive assistance principles holds immense promise for transforming clinical decision support, especially within the complex field of precision oncology. Current systems often present a static deluge of information, potentially overwhelming clinicians; however, intelligently tailored assistance can dynamically adjust to a physician’s workflow and expertise. By monitoring real-time data – such as the physician’s diagnostic reasoning or the patient’s genomic profile – these systems can proactively offer relevant insights, flag potential anomalies, and suggest personalized treatment strategies. This isn’t about replacing clinical judgment, but rather augmenting it with continuously calibrated support, fostering trust and ultimately leading to more informed, efficient, and effective cancer care. The potential extends beyond diagnosis to encompass treatment planning, monitoring, and even patient communication, heralding a new era of human-AI collaboration in healthcare.

A synthesis of 24 recent studies – spanning 2023 to 2025 and focused on Human-AI Interaction – reveals consistent gains in collaborative task performance. These investigations demonstrate that thoughtfully designed AI assistance leads to variable, yet measurable, improvements in both accuracy and task completion time. Crucially, researchers are moving beyond subjective assessments of collaboration, with emerging frameworks like A²C – encompassing Awareness, Assistance, and Control – allowing for the quantification of ‘CoEx Success Rate’ – a metric representing effective human-AI teamwork. This data-driven approach promises to refine the development of adaptive assistance systems and rigorously evaluate their impact on complex tasks.

The pursuit of seamless Human-AI interaction, as detailed in the review, feels less like building intelligent assistants and more like meticulously constructing elaborate failure modes. The paper advocates for AI as a ‘teammate,’ implying a level of trust and collaboration. Yet, the history of technology suggests that even the most carefully calibrated systems will eventually be pushed to their limits, revealing unexpected vulnerabilities. As Barbara Liskov observed, “Programs must be right first before they are fast.” This rings true; the focus on collaborative decision-making frameworks and explainable AI feels less about achieving perfect solutions and more about understanding how things will inevitably break down. The bug tracker, inevitably, awaits.

What’s Next?

The pursuit of ‘teammate’ AI in decision support is, predictably, revealing that humans are remarkably good at finding edge cases. The literature suggests a focus on explainability and trust calibration, which feels less like solving problems and more like building increasingly complex dashboards to monitor inevitable failures. Tests, after all, are a form of faith, not certainty. The current emphasis on interface design assumes a static user, a convenient fiction that production will swiftly dismantle.

Future work will likely involve wrestling with the question of who calibrates the trust. Is it the AI developer, the clinician, or the patient facing a diagnosis? Each option introduces a new layer of potential bias, and the assumption of a ‘correct’ calibration feels dangerously optimistic. The field should perhaps spend less time striving for perfect collaboration and more time building systems resilient enough to absorb predictable human error, both in using the AI and in misinterpreting its outputs.

Ultimately, the goal isn’t to create an AI that feels like a teammate, but one that degrades gracefully when inevitably misused. The real metric of success won’t be elegant algorithms or intuitive interfaces, but the number of Mondays it survives without deleting production data, or worse.

Original article: https://arxiv.org/pdf/2602.15865.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 14:24