Author: Denis Avetisyan

New research explores how perceptions of authorship are shifting as generative AI tools become increasingly integrated into programming education.

A focus on ‘process-oriented attribution’, which means demonstrating thoughtful engagement with AI, may be more effective than simply disclosing its use.

The increasing prevalence of generative AI in computing education challenges established notions of authorship and academic integrity. This study, ‘Exploring Emerging Norms of AI Disclosure in Programming Education’, investigates student perceptions of ownership and appropriate attribution when utilizing AI assistance in programming contexts. Findings reveal that judgments of authorship are primarily determined by the degree of AI autonomy and the extent of subsequent human refinement, suggesting a shift towards valuing demonstrated engagement with AI-generated content over simple acknowledgement of its use. Will adopting ‘process-oriented attribution’ effectively foster responsible AI integration and critical thinking skills in future programmers?

The Inevitable Shift: AI and the Erosion of Skill

The integration of generative artificial intelligence into education is fundamentally reshaping how students acquire and demonstrate knowledge. While offering exciting possibilities for personalized learning and access to information, these tools also present considerable challenges to established pedagogical practices. Current research indicates a potential trade-off: the ease with which AI can complete tasks may inadvertently diminish the development of crucial skills such as critical thinking, problem-solving, and creative expression. The rapid evolution of these technologies necessitates a proactive approach to curriculum design and assessment, focusing not simply on what students know, but on how they learn and apply their understanding in a world increasingly augmented by artificial intelligence. The effective harnessing of AI’s potential requires careful consideration of its impact on student agency and the cultivation of lifelong learning capabilities.

The increasing prevalence of generative AI tools in education raises significant concerns about the development of crucial self-regulated learning (SRL) skills. These tools, while offering convenience, inherently automate tasks traditionally requiring active cognitive effort, potentially diminishing a student’s need to plan, monitor, and evaluate their own learning. When AI readily provides answers or completes assignments, the opportunity for students to engage in the deliberate practice and reflective thinking central to SRL is curtailed. This reduction in cognitive demand may lead to a dependence on external solutions, hindering the development of internal metacognitive strategies and ultimately impacting a student’s ability to learn independently and effectively beyond the immediate support of the technology. The challenge lies not in the tools themselves, but in mitigating the risk of passively receiving information instead of actively constructing knowledge.

The increasing integration of artificial intelligence into learning environments carries a significant, yet often overlooked, risk to students’ metacognitive abilities. As AI tools automate tasks previously requiring critical thinking and problem-solving, opportunities for students to actively monitor, evaluate, and adjust their own learning strategies diminish. This reliance on externally generated solutions can create a “cognitive offloading” effect, where students become less aware of their knowledge gaps and less capable of independently assessing the validity of information. Consequently, the development of crucial self-reflective skills – the ability to understand how one learns – is potentially hindered, leaving students less equipped to navigate complex challenges and become lifelong, self-directed learners. The concern isn’t simply that AI provides answers, but that it preempts the very cognitive processes necessary for genuine understanding and intellectual growth.

Attribution: A Band-Aid on a Broken System

Explicit attribution, in the context of academic work utilizing artificial intelligence, necessitates clear documentation of both AI-generated content and all subsequent human modifications or contributions. This practice is fundamental to upholding academic integrity by distinguishing between original student thought and AI output. Specifically, students should identify which portions of their work were created by AI, detailing the prompts used and the specific AI tools employed. Furthermore, any editing, analysis, or synthesis performed by the student on the AI-generated material must also be explicitly indicated. Failure to provide this level of transparency can constitute plagiarism or misrepresentation of authorship, undermining the assessment of genuine student learning and critical thinking skills.
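A minimal Python sketch can make this documentation requirement concrete. The class name `AttributionRecord`, its fields, and the note format are hypothetical illustrations, not a schema from the study or from any institution. They capture the four items the paragraph lists: the tool, the prompts, the AI-generated portions, and the student's own changes.

```python
from dataclasses import dataclass, field


@dataclass
class AttributionRecord:
    """Hypothetical record of AI use for one assignment (illustrative schema only)."""
    tool: str                                                  # AI tool that was used
    prompts: list[str] = field(default_factory=list)           # prompts sent to the tool
    ai_generated: list[str] = field(default_factory=list)      # parts produced by the AI
    student_changes: list[str] = field(default_factory=list)   # student edits, analysis, synthesis

    def render(self) -> str:
        """Return a plain-text disclosure note listing every recorded item."""
        lines = [f"AI tool: {self.tool}"]
        lines += [f"Prompt: {p}" for p in self.prompts]
        lines += [f"AI-generated: {part}" for part in self.ai_generated]
        lines += [f"Student change: {c}" for c in self.student_changes]
        return "\n".join(lines)


record = AttributionRecord(
    tool="ExampleAssistant",  # hypothetical tool name
    prompts=["Write a function that parses CSV rows into dicts"],
    ai_generated=["parse_rows() initial version"],
    student_changes=["Added handling for quoted commas", "Wrote unit tests"],
)
print(record.render())
```

Keeping the AI-generated parts and the student changes in separate lists mirrors the paragraph's point that both sides of the collaboration need to be visible.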

Process-Oriented Attribution represents a move beyond simply noting that AI tools were used, and instead prioritizes demonstrating how a student thoughtfully interacted with and critically evaluated AI-generated content. This framework assesses the student’s iterative process – including prompting strategies, content selection, revision choices, and justification of modifications – as evidence of learning and ownership. Evaluation focuses on the cognitive labor applied to refine AI output, rather than solely on the final product, acknowledging that meaningful engagement with AI necessitates active participation and critical thinking throughout the workflow. Documentation of this process – through drafts, annotations, or reflective statements – becomes central to establishing attribution and demonstrating academic integrity.
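The iterative workflow described above can be sketched as a simple process log. This is a hypothetical illustration and not the study's instrument. The step categories (`prompt`, `selection`, `revision`) and the rule that every revision should carry a justification are assumptions chosen to mirror the paragraph's emphasis on documented revision choices.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    kind: str                            # "prompt", "selection", or "revision" (hypothetical categories)
    description: str                     # what the student did at this step
    justification: Optional[str] = None  # why the student did it, if recorded


def unjustified_revisions(steps: list[Step]) -> list[Step]:
    """Return revision steps that lack a non-blank written justification."""
    return [s for s in steps if s.kind == "revision" and not (s.justification or "").strip()]


def summarize(steps: list[Step]) -> dict[str, int]:
    """Count steps by kind, giving a rough view of how iterative the process was."""
    counts: dict[str, int] = {}
    for s in steps:
        counts[s.kind] = counts.get(s.kind, 0) + 1
    return counts


log = [
    Step("prompt", "Asked for a recursive tree traversal"),
    Step("selection", "Kept the traversal, discarded the suggested class wrapper",
         "The wrapper added nothing the assignment needed"),
    Step("revision", "Rewrote recursion as an explicit stack", "Avoids recursion depth limits"),
    Step("revision", "Renamed variables"),
]
print(summarize(log))                   # {'prompt': 1, 'selection': 1, 'revision': 2}
print(len(unjustified_revisions(log)))  # 1
```

An instructor could use the flagged steps as prompts for a short reflective statement rather than as an automatic penalty.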

Functional modification, defined as substantive alterations to AI-generated content, demonstrates a student’s cognitive investment beyond simple prompt engineering or content acceptance. This involves not merely editing for grammar or style, but restructuring arguments, integrating external sources, adding novel analysis, or significantly changing the scope and focus of the original AI output. Under this framework, the degree of modification is treated as evidence of learning: minimal changes suggest passive use, while extensive revisions indicate active engagement with the material and a deeper understanding of the subject matter. Assessing the extent and nature of these functional modifications provides an indicator of student ownership and the development of critical thinking skills, shifting the focus from detecting AI use to evaluating demonstrated learning outcomes.
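One crude way to quantify "extent of modification" is a token-level diff between the AI draft and the final submission. The function below is a hypothetical metric, not one proposed by the study. It uses Python's standard `difflib.SequenceMatcher` on whitespace-separated tokens.

```python
from difflib import SequenceMatcher


def modification_share(ai_draft: str, final: str) -> float:
    """Hypothetical metric: share of tokens changed between AI draft and final submission.

    0.0 means the submission is token-for-token identical to the draft;
    1.0 means no tokens match at all. Tokens are split on whitespace.
    """
    a, b = ai_draft.split(), final.split()
    # ratio() is 2*M / (len(a) + len(b)), where M is the number of matched tokens
    return 1.0 - SequenceMatcher(None, a, b, autojunk=False).ratio()


draft = "def add(a, b): return a + b"
final = "def add(a: int, b: int) -> int: return a + b"
print(round(modification_share(draft, final), 2))  # 0.44
```

This is purely a textual proxy. It cannot tell a substantive restructuring from cosmetic renaming, which is exactly the distinction the paragraph draws, so at most it could supplement an instructor's judgment of functional change.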

The proposed attribution framework moves beyond simple acknowledgement of AI usage to prioritize demonstrating a student’s cognitive investment in the work. This necessitates evaluating the extent to which a student actively refines and adapts AI-generated content, rather than merely presenting it. Attribution, therefore, becomes a measure of the student’s process – the specific modifications, critical analysis, and creative contributions applied to the AI’s output – and a validation of their learning as evidenced by these adaptations. The focus shifts from whether AI was used to how the student engaged with and transformed the AI’s contributions into their own work.

Unpacking the Perception: A Study in Futility

A Factorial Vignette Study was employed to systematically assess student perceptions of AI assistance in academic work. This experimental design presented participants with hypothetical academic scenarios, or vignettes, systematically varying two key factors: the level of AI assistance provided and the amount of subsequent human effort applied post-AI generation. By manipulating these factors, researchers were able to isolate and quantify their independent and combined effects on student judgements of authorship and ownership. Each vignette presented a completed academic task, and participants were asked to attribute the work to either the student, the AI, or a combination of both, allowing for a nuanced understanding of how different levels of AI involvement and human refinement influence perceptions of responsibility for the final product.

The factorial vignette study employed a 2×2 design, systematically varying AI Assistance Level (low vs. high) and Human Post-AI Effort (low vs. high). Vignettes were constructed to present participants with scenarios depicting academic work completed with different combinations of these two factors. Low AI Assistance involved AI generating only an initial draft or outline, while high AI Assistance represented a nearly complete assignment. Similarly, low Human Post-AI Effort indicated minimal editing or revision after AI completion, and high Human Post-AI Effort signified substantial rewriting and refinement. This manipulation allowed for the isolation and assessment of the individual and combined effects of both factors on student perceptions of authorship and ownership of the final product.
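The 2×2 structure can be enumerated directly: each combination of the two factors is one experimental condition. The sketch below also shows a reproducible per-participant presentation order. That assumes a within-subjects presentation, in which every participant rates all four vignettes; this is a hypothetical choice for illustration, since the summary above does not state how conditions were assigned. The helper name `vignette_order` is likewise illustrative.

```python
import itertools
import random

AI_ASSISTANCE = ("low", "high")  # low: AI drafts an outline; high: AI produces a near-complete assignment
HUMAN_EFFORT = ("low", "high")   # low: minimal editing; high: substantial rewriting

# The 2x2 factorial design: every combination of the two factors is one condition.
CONDITIONS = list(itertools.product(AI_ASSISTANCE, HUMAN_EFFORT))


def vignette_order(participant_id: int, seed: int = 0) -> list[tuple[str, str]]:
    """Return all four conditions in a reproducible random order for one participant.

    Assumes (hypothetically) a within-subjects presentation, where each
    participant rates every condition, and shuffles the order per participant.
    """
    rng = random.Random(seed * 100_003 + participant_id)
    order = CONDITIONS.copy()
    rng.shuffle(order)
    return order


print(CONDITIONS)  # [('low', 'low'), ('low', 'high'), ('high', 'low'), ('high', 'high')]
```

Randomizing the order per participant is a common way to keep order effects from being confounded with the conditions themselves.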

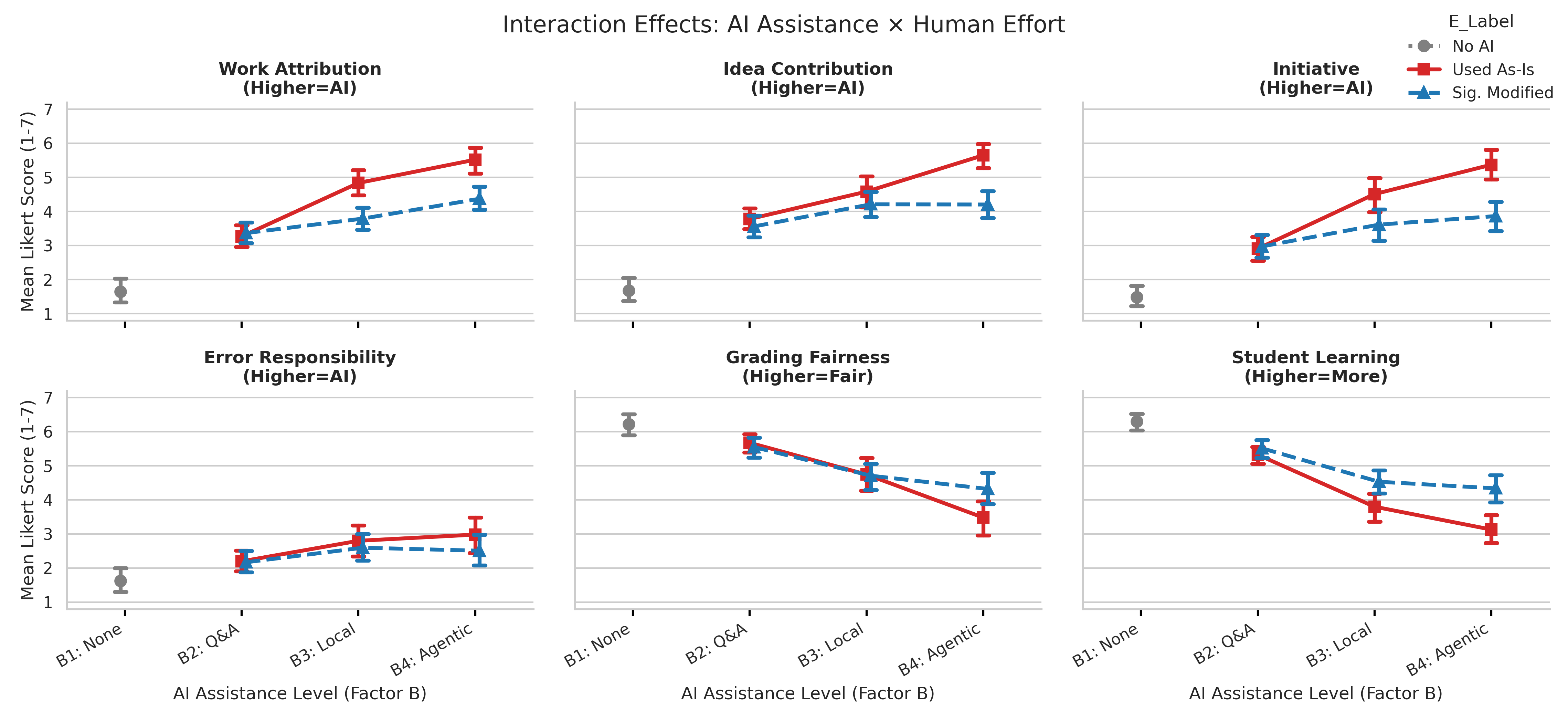

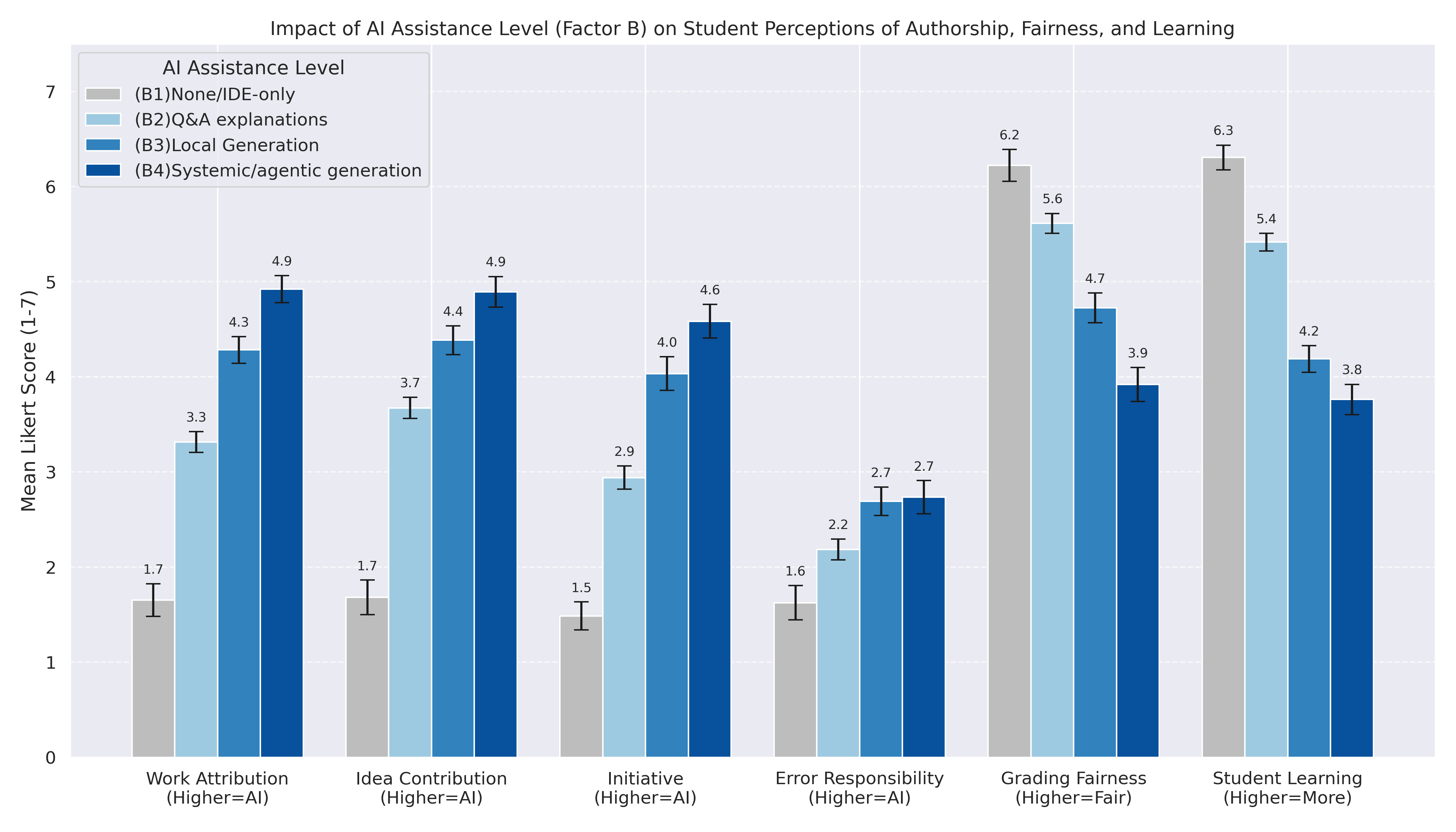

Student perceptions of authorship are significantly influenced by both the degree of AI assistance and the extent of subsequent human modification. Analysis of the Factorial Vignette Study data indicates a substantial proportion of variance in authorship attribution is accounted for by the AI Assistance Level ($\eta_p^2 = 0.16$), demonstrating that students readily associate increased AI contribution with decreased student authorship. Specifically, scenarios involving higher levels of AI-generated content were consistently perceived as reflecting less of the student’s own work, even when coupled with varying degrees of human post-AI effort. This finding highlights the sensitivity of authorship perception to the initial level of AI involvement in the creation of academic work.

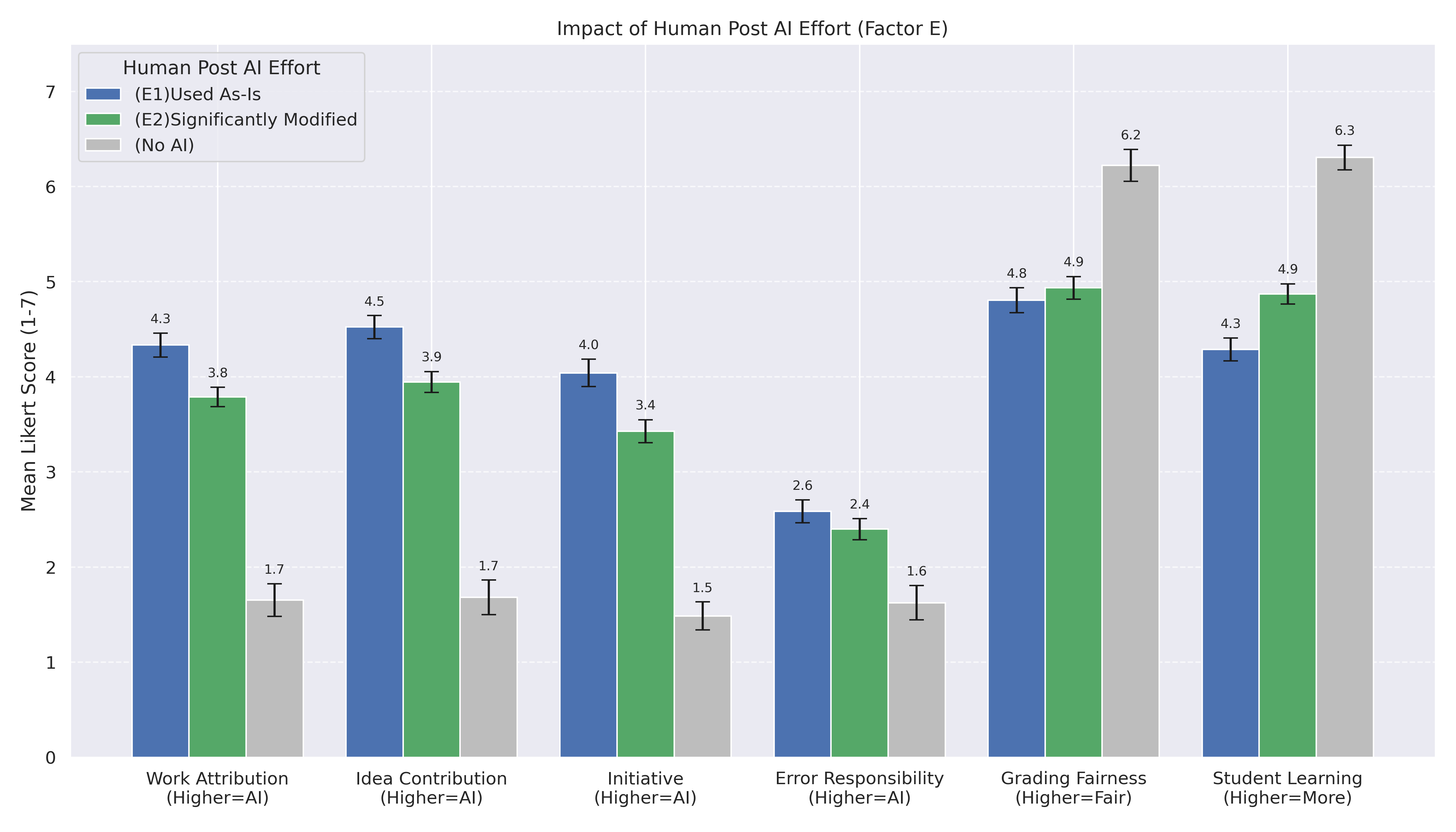

Statistical analysis revealed that while the degree of Human Post-AI Effort did influence perceptions of authorship ($\eta_p^2 = 0.04$), the level of AI Assistance was the dominant factor in determining authorship attribution, with an effect size four times as large ($\eta_p^2 = 0.16$). In other words, how much AI was used to generate the content in the first place had a considerably larger effect on how students perceived ownership of the final product than the amount of subsequent human editing or refinement.
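Partial eta squared for an effect is $\eta_p^2 = SS_{\text{effect}} / (SS_{\text{effect}} + SS_{\text{error}})$. It is the share of variance an effect explains once the other effects in the model are set aside. The sketch below computes it for a balanced two-way between-subjects design with equal cell sizes. That is a simplifying assumption: the study's actual model, for example a repeated-measures analysis, may differ. The ratings are toy numbers chosen so the arithmetic is easy to check. They are not study data.

```python
from statistics import fmean


def partial_eta_squared_2x2(cells: dict[tuple[int, int], list[float]]) -> dict[str, float]:
    """Partial eta squared for a balanced two-way (2x2) between-subjects design.

    `cells` maps (level_of_A, level_of_B) -> ratings, with each level coded 0 or 1,
    and every cell must contain the same number of ratings.
    """
    n = len(cells[(0, 0)])
    if any(len(v) != n for v in cells.values()):
        raise ValueError("design must be balanced")
    m = {k: fmean(v) for k, v in cells.items()}               # cell means
    grand = fmean(m.values())                                 # grand mean (valid because balanced)
    a = {i: (m[(i, 0)] + m[(i, 1)]) / 2 for i in (0, 1)}      # marginal means of factor A
    b = {j: (m[(0, j)] + m[(1, j)]) / 2 for j in (0, 1)}      # marginal means of factor B

    ss_a = 2 * n * sum((a[i] - grand) ** 2 for i in (0, 1))
    ss_b = 2 * n * sum((b[j] - grand) ** 2 for j in (0, 1))
    ss_ab = n * sum((m[(i, j)] - a[i] - b[j] + grand) ** 2 for i in (0, 1) for j in (0, 1))
    ss_err = sum((y - m[k]) ** 2 for k, v in cells.items() for y in v)

    def eta(ss: float) -> float:
        return ss / (ss + ss_err) if ss + ss_err else 0.0

    return {"A": eta(ss_a), "B": eta(ss_b), "AxB": eta(ss_ab)}


# Toy authorship ratings. A = AI assistance (0 low, 1 high); B = human effort (0 low, 1 high).
toy = {(0, 0): [5, 7], (0, 1): [6, 8], (1, 0): [2, 4], (1, 1): [4, 6]}
print({k: round(v, 2) for k, v in partial_eta_squared_2x2(toy).items()})
# {'A': 0.61, 'B': 0.36, 'AxB': 0.06}
```

Because each effect's denominator includes only that effect and the error term, partial eta squared values for different effects need not sum to 1.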

The study findings indicate that student perceptions of authorship are also linked to the degree of human involvement following AI assistance. Active engagement, specifically substantial human post-AI effort, significantly increases the attribution of authorship to the student. This suggests that simply using AI is insufficient to establish ownership of work; instead, iterative refinement and modification by the student are critical. Substantial revision therefore appears to reduce the perception of academic dishonesty or a lack of original thought, reinforcing the need for pedagogical approaches that emphasize critical evaluation and substantive revision of AI-generated content rather than passive acceptance.

The Inevitable Consequences: Grading, Disclosure, and a Failing System

Student evaluations of grading fairness are intrinsically linked to their beliefs about authorship and contribution. Research indicates a direct correlation between perceived effort and expected grades; students anticipate higher marks for work they feel they actively shaped, even when utilizing tools like artificial intelligence. This suggests that simply completing an assignment isn’t enough; students need to perceive their own meaningful involvement for a grade to feel justified. Consequently, educators must consider not only the final product but also the demonstrable process, recognizing that a student’s sense of ownership over their work is a critical component of their evaluation of fairness and, ultimately, their learning experience.

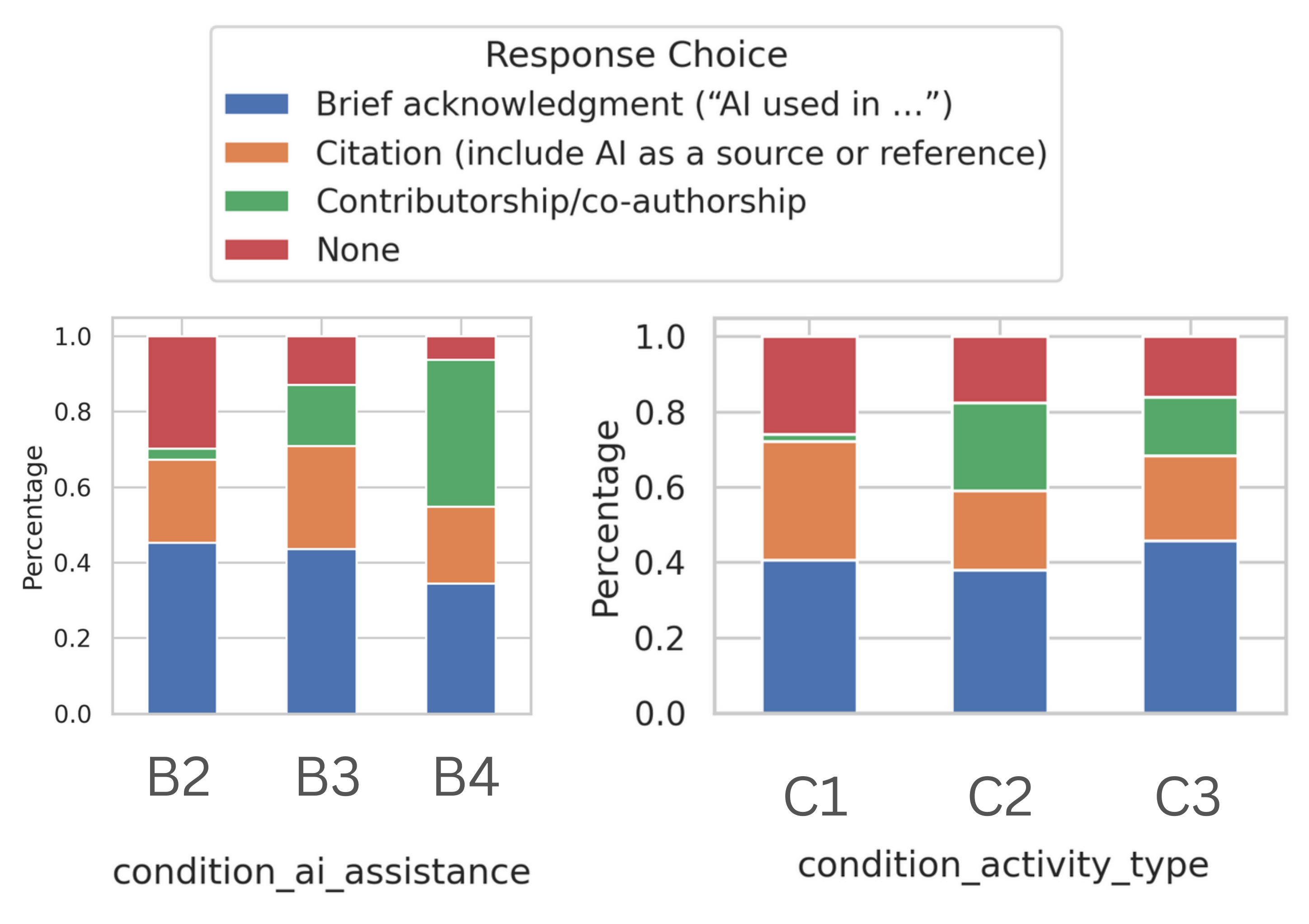

Student choices regarding the disclosure of AI assistance are linked to the extent of that assistance and the nature of the academic task. Statistical analysis reveals a significant association between the level of AI involvement and whether a student opts to disclose its use ($\chi^2 = 202.40$, $p < .001$). The type of activity, be it creative writing, problem-solving, or research, also significantly influences disclosure preferences ($\chi^2 = 21.24$, $p = .002$). These findings suggest that students are not uniformly inclined to disclose AI use; rather, their decisions vary with both the depth of AI’s contribution and the specific demands of the assignment.
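A chi-square test of independence compares observed counts in a contingency table with the counts expected if the two variables were unrelated. Each expected count is (row total × column total) / grand total. The sketch below computes Pearson's statistic and its degrees of freedom. The counts are hypothetical, not the study's data.

```python
def chi_square_independence(table: list[list[float]]) -> tuple[float, int]:
    """Pearson chi-square statistic and degrees of freedom for an r x c contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n  # count expected under independence
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


# Hypothetical counts: rows = AI assistance level (low, high),
# columns = disclosure choice (did not disclose, disclosed).
counts = [[30, 10],
          [12, 28]]
stat, dof = chi_square_independence(counts)
print(round(stat, 2), dof)  # 16.24 1
```

To obtain a p-value, the statistic is compared against a chi-square distribution with `dof` degrees of freedom, for example via `scipy.stats.chi2.sf(stat, dof)`. Note that `scipy.stats.chi2_contingency` applies Yates' continuity correction by default for 2×2 tables, so its statistic would differ slightly from this uncorrected one.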

Statistical analysis reveals a compelling relationship between students’ sense of fairness in grading and their support for stringent AI disclosure rules. A negative regression coefficient of -0.38, statistically significant at the p < .001 level, indicates that as students perceive grading to be fairer, the demand for strict policies requiring detailed disclosure of AI use diminishes. This suggests that when students believe assessments accurately reflect their understanding and effort, they are less concerned with policing the boundaries of AI assistance. The findings imply that establishing clear, equitable grading practices may be a proactive strategy for mitigating anxieties surrounding AI in education and fostering a more trusting learning environment, potentially reducing the perceived need for overly restrictive disclosure protocols.
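A negative regression coefficient means that the predicted outcome falls as the predictor rises. The sketch below shows that interpretation with a single-predictor least-squares slope on hypothetical Likert-style data. The study's −0.38 may come from a model with additional predictors or standardized variables, so this is a simplified stand-in, not a reconstruction of its analysis.

```python
from statistics import fmean


def ols_slope(x: list[float], y: list[float]) -> float:
    """Ordinary least-squares slope of y on x for a single predictor."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return sxy / sxx


# Hypothetical 1-5 scores: perceived grading fairness (x) and
# support for strict AI disclosure rules (y).
fairness = [1, 2, 3, 4, 5]
support = [4.5, 4.0, 3.5, 3.0, 2.5]
print(ols_slope(fairness, support))  # -0.5
```

Here each additional point of perceived fairness predicts half a point less support for strict rules. On Python 3.10+, `statistics.linear_regression(fairness, support).slope` returns the same value.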

The study’s results underscore a crucial need for proactive communication from educators regarding the appropriate integration of artificial intelligence tools into academic work. Simply prohibiting AI use is insufficient; instead, clear guidelines defining acceptable and unacceptable applications are essential to establish a shared understanding between students and instructors. Furthermore, pedagogical approaches should evolve to reward students not for simply obtaining answers, but for demonstrating active and critical engagement with the technology – for example, by evaluating how effectively AI was used to refine arguments, synthesize information, or overcome challenges. This shift in focus acknowledges AI’s potential as a learning aid, fostering a culture where students are recognized for their thoughtful application of the tool, rather than penalized for its mere presence in their work.

The integration of artificial intelligence into education presents an opportunity to redefine academic integrity, not by restricting its use, but by cultivating a learning environment centered on transparency and the genuine demonstration of effort. Rather than framing AI as a threat to originality, educators can emphasize the value of human contribution (critical thinking, nuanced analysis, and creative synthesis), even when augmented by intelligent tools. This approach necessitates a shift in assessment strategies, prioritizing the process of learning and the demonstrable engagement with course material over solely evaluating a final product. By openly acknowledging the role of AI and rewarding students for thoughtfully integrating it into their workflow, institutions can harness its potential to deepen understanding and foster a more authentic, enriching educational experience, ensuring that academic honesty remains paramount even as technology evolves.

The study’s findings regarding ‘process-oriented attribution’ feel... predictable. It seems students, and ultimately production environments, care less about whether AI wrote something and more about whether a human demonstrably understood and refined it. This aligns perfectly with the inevitable lifecycle of any ‘revolutionary’ framework. As John Maynard Keynes observed, “The difficulty lies not so much in developing new ideas as in escaping from old ones.” The insistence on simply detecting AI use misses the point; what truly matters is demonstrating thoughtful engagement, a concept that will, undoubtedly, become tomorrow’s tech debt as new tools emerge and definitions of ‘thoughtful’ shift. The focus will always return to demonstrable understanding, not just a lack of red flags.

What’s Next?

The notion of ‘process-oriented attribution’ feels… generous. Acknowledging that students aren’t necessarily writing code anymore, but curating it, is a start. It’s a neat way to repackage the inevitable. The research suggests that demonstrating ‘thoughtful engagement’ is the new bar for academic honesty, which translates to ‘proving you didn’t just blindly copy-paste.’ If a system crashes consistently, at least it’s predictable. The next step isn’t refining attribution models, it’s bracing for the inevitable arms race between AI sophistication and plagiarism detection – a delightful cycle of escalating complexity.

One wonders if ‘responsible AI’ in education is a category error. The current focus seems to be on teaching students to document their reliance on tools, rather than fostering genuine understanding. It’s a bit like demanding scribes meticulously record the brand of quill they used. The real challenge isn’t attribution; it’s designing assignments that resist trivialization by increasingly capable models. We don’t write code – we leave notes for digital archaeologists.

Ultimately, this field will likely settle into a state of managed compromise. Expect a proliferation of ‘AI-assisted’ badges on student work, signifying little more than ‘we tried.’ The research correctly identifies the shift in focus from product to process, but glosses over the fact that the process itself is becoming increasingly opaque. The goalposts will keep moving, and the definition of ‘thoughtful engagement’ will inevitably become… flexible.

Original article: https://arxiv.org/pdf/2602.04023.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 22:23