Author: Denis Avetisyan

A new framework leverages probabilistic modeling to enable collaborative robots to better understand and respond to human intent in industrial settings.

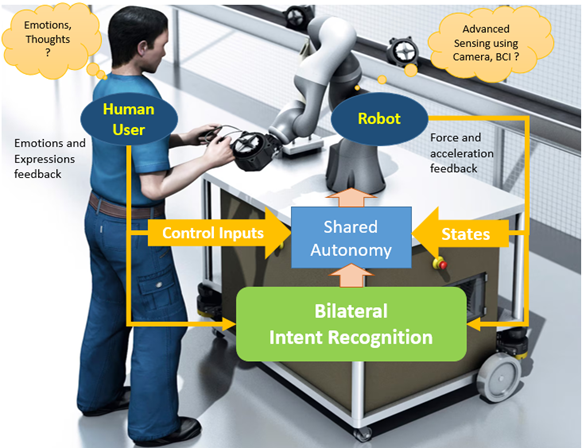

This review details a stochastic decision-making approach using Partially Observable Markov Decision Processes (POMDPs) to enhance safety and efficiency in human-robot collaboration by incorporating intent prediction and emotion recognition.

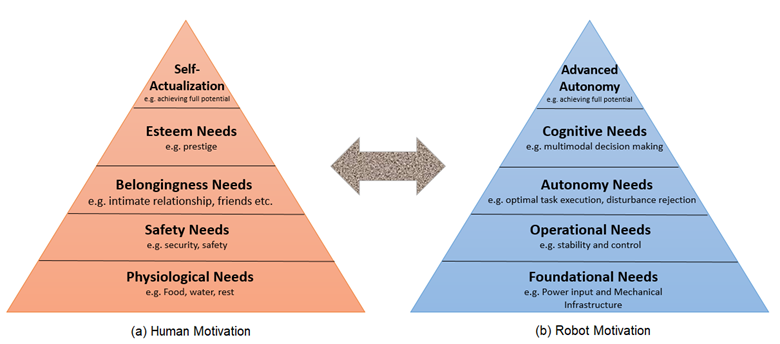

Despite advancements in collaborative robotics, anticipating human behavior remains a key challenge for truly effective and safe human-robot interaction. This is addressed in ‘Stochastic Decision-Making Framework for Human-Robot Collaboration in Industrial Applications’, which proposes a novel approach leveraging Partially Observable Markov Decision Processes (POMDPs) and stochastic modeling to predict human intent within shared workspaces. By modeling human actions and emotional states probabilistically, the framework enables cobots to adapt their behavior, enhancing both safety and efficiency. Could this anticipatory framework unlock a new generation of truly collaborative robots capable of seamless integration into complex industrial environments?

The Inevitable Echo: Anticipating Human Action

Conventional robotic systems, designed with precise pre-programmed movements, frequently encounter difficulties when operating alongside humans due to the inherent variability of human actions. This often results in inefficient task completion or, more critically, potentially unsafe scenarios where a robot’s rigid programming clashes with spontaneous human behavior. The core challenge lies in the fact that humans rarely move with the robotic predictability expected by these machines; subtle deviations in gait, unexpected changes in direction, or entirely novel actions can disrupt a robot’s carefully planned trajectory. Consequently, these systems often react after an event occurs, rather than anticipating it, leading to jerky movements, delayed responses, and a diminished capacity for seamless collaboration in dynamic, real-world environments.

Effective navigation within shared environments hinges on a system’s capacity to move beyond simply reacting to observed behaviors and instead proactively interpret the underlying intentions driving those actions. This necessitates algorithms capable of inferring goals – whether a pedestrian intends to cross a street, a colleague will reach for a shared tool, or a patient requires assistance – based on subtle cues like gaze direction, body posture, and even minute changes in velocity. Such predictive capabilities aren’t merely about anticipating what someone will do, but understanding why, allowing for preemptive adjustments that enhance safety, efficiency, and the overall quality of interaction. By modeling potential future trajectories rooted in inferred intent, systems can move from being responsive obstacles to truly collaborative partners in increasingly complex shared spaces.

Intent prediction is the algorithmic core of this shift: it forecasts what a person is likely to do next from body language, gaze direction, and contextual information. A robot that anticipates intent can adjust its own actions to prevent collisions and offer assistance at the right moment, which makes collaboration more reliable. This matters in manufacturing, healthcare, and assistive robotics, where anticipating needs and preventing errors is central to safety and efficiency. The resulting systems promise not just to share space with humans, but to genuinely work with them.

The Pattern Within: Deep Learning as Predictive Engine

Deep learning architectures such as recurrent neural networks (RNNs) and transformers are well suited to sequential data because they condition each prediction on past observations: RNNs carry a hidden state forward from step to step, while transformers attend directly over the preceding sequence. This lets the models capture temporal dependencies and non-linear relationships between observed behaviors and predicted intentions that simpler statistical methods, such as low-order Markov models or linear regression, often miss. Specifically, stacking multiple hidden layers with non-linear activation functions enables the network to learn hierarchical representations of behavior, identifying patterns and features that correlate with specific future actions. During training, backpropagation adjusts the model’s parameters to minimize the difference between predicted intentions and actual outcomes, so the network learns a mapping from behavior sequences to likely intentions.
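To make the idea of a hidden state concrete, here is a minimal sketch of an Elman-style recurrent forward pass in plain Python. It is not the architecture from the paper: the layer sizes, the random untrained weights, the two-dimensional input features, and the names `rnn_step`, `predict_intent`, and `random_params` are all illustrative assumptions.

```python
import math
import random

def rnn_step(x, h, W_xh, W_hh, b_h):
    """One recurrent update: h_new = tanh(W_xh @ x + W_hh @ h + b_h)."""
    h_new = []
    for i in range(len(h)):
        s = b_h[i]
        s += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        s += sum(W_hh[i][j] * h[j] for j in range(len(h)))
        h_new.append(math.tanh(s))
    return h_new

def softmax(z):
    """Turn raw scores into a probability distribution."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]

def predict_intent(sequence, params):
    """Feed a behavior sequence through the RNN, then score each intent."""
    W_xh, W_hh, b_h, W_hy, b_y = params
    h = [0.0] * len(b_h)  # hidden state starts empty
    for x in sequence:
        h = rnn_step(x, h, W_xh, W_hh, b_h)  # state accumulates the past
    logits = [
        b_y[k] + sum(W_hy[k][i] * h[i] for i in range(len(h)))
        for k in range(len(b_y))
    ]
    return softmax(logits)

def random_params(n_in, n_hidden, n_out, seed=0):
    """Hypothetical untrained weights, only to make the sketch runnable."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return (
        matrix(n_hidden, n_in),
        matrix(n_hidden, n_hidden),
        [0.0] * n_hidden,
        matrix(n_out, n_hidden),
        [0.0] * n_out,
    )

params = random_params(n_in=2, n_hidden=4, n_out=3)
sequence = [[0.1, 0.0], [0.2, 0.1], [0.4, 0.3]]  # assumed (dx, dy) hand motion
probs = predict_intent(sequence, params)
print(probs)  # one probability per hypothetical intent class
```

Because the weights are untrained, the output probabilities carry no meaning here; the point is only that the final prediction depends on the whole sequence through `h`.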

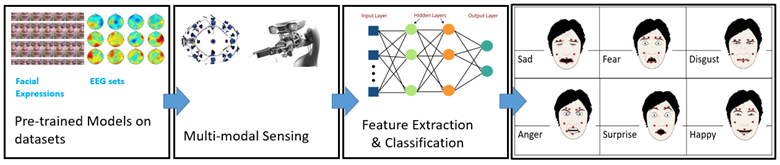

Deep learning models leverage extensive datasets of observed human actions to identify patterns and correlations indicative of future behavior. These models employ algorithms capable of processing high-dimensional data, enabling the detection of nuanced behavioral cues often imperceptible to traditional analytical methods. The process involves feature extraction from the action data, followed by iterative training to establish probabilistic relationships between observed actions and predicted outcomes. The scale of the dataset matters: larger and more varied datasets generally help the model generalize and improve predictive accuracy, because noise and individual variation in human behavior tend to average out. This data-driven approach allows the system to learn predictive indicators without explicit programming of specific behavioral rules.

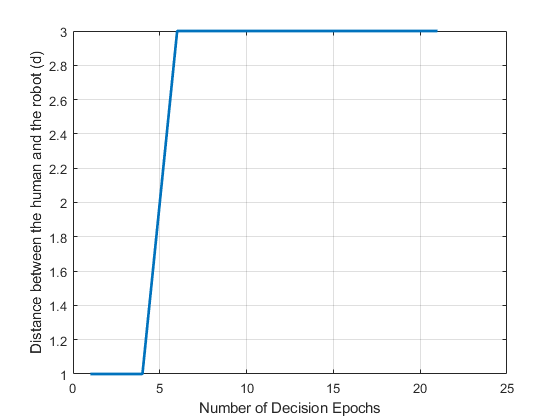

A simulation-based case study was conducted to validate the deep learning framework’s learning process, consisting of 20 epochs of iterative training and evaluation. Each epoch involved presenting the model with a dataset of simulated human actions and adjusting its internal parameters to minimize prediction error. Performance metrics, including prediction accuracy and loss function values, were recorded at the end of each epoch to track the model’s convergence and identify potential overfitting. The 20-epoch duration was selected to allow sufficient time for the model to learn the underlying patterns within the data, while also providing a practical timeframe for computational resource allocation and analysis.
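The per-epoch procedure described above can be sketched as follows. This is a hedged illustration rather than the study's code: a tiny logistic-regression classifier stands in for the deep model, the synthetic data and its labeling rule are invented, and only the 20-epoch count comes from the text. A held-out set is evaluated each epoch, since a validation loss that rises while training loss falls is the usual sign of overfitting.

```python
import math
import random

def make_data(n, seed):
    """Synthetic stand-in for simulated human actions (assumed labeling rule)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        y = 1 if x[0] + 0.5 * x[1] > 0 else 0
        data.append((x, y))
    return data

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

def evaluate(w, b, data):
    """Return (mean cross-entropy loss, accuracy) on a dataset."""
    loss, correct = 0.0, 0
    for x, y in data:
        p = min(max(predict(w, b, x), 1e-12), 1 - 1e-12)
        loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
        correct += int((p >= 0.5) == (y == 1))
    return loss / len(data), correct / len(data)

def train(train_set, val_set, epochs=20, lr=0.5):
    w, b = [0.0, 0.0], 0.0
    history = []
    for epoch in range(1, epochs + 1):
        # One pass of stochastic gradient descent over the training data.
        for x, y in train_set:
            err = predict(w, b, x) - y
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
            b -= lr * err
        # Record metrics at the end of each epoch.
        train_loss, _ = evaluate(w, b, train_set)
        val_loss, val_acc = evaluate(w, b, val_set)
        history.append({"epoch": epoch, "train_loss": train_loss,
                        "val_loss": val_loss, "val_acc": val_acc})
    return history

history = train(make_data(200, seed=0), make_data(100, seed=1))
for row in history:
    print(row["epoch"], round(row["train_loss"], 4),
          round(row["val_loss"], 4), round(row["val_acc"], 3))
```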

Extending the Gaze: Vision and Reinforcement as Predictive Layers

Vision-based intent prediction utilizes camera systems to augment traditional input data with visual information, specifically body language and gaze direction. This expands the data available to predictive models beyond solely kinematic data or explicit signals. Analyzing body pose, hand gestures, and the direction of a user’s gaze provides contextual cues regarding their intended actions. These visual inputs are processed, often through computer vision techniques such as pose estimation and object detection, to extract relevant features. These features are then integrated into the prediction model, allowing for more accurate anticipation of future behavior compared to systems relying on limited data streams.
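As a rough illustration of how visual cues become model inputs, the sketch below turns a gaze direction and a hand position into two features per candidate object. It assumes an upstream pose estimator already supplies 3D positions; the coordinates, the object names, and the scoring rule in `likely_target` are hypothetical and not taken from the paper.

```python
import math

def sub(a, b):
    """Component-wise difference of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def cosine(a, b):
    """Cosine of the angle between two vectors (0.0 if either is zero)."""
    na, nb = norm(a), norm(b)
    if na == 0 or nb == 0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

def intent_features(head_pos, gaze_dir, hand_pos, objects):
    """Per-object features: gaze alignment and hand-to-object distance."""
    feats = {}
    for name, pos in objects.items():
        feats[name] = {
            "gaze_cos": cosine(gaze_dir, sub(pos, head_pos)),
            "hand_dist": norm(sub(pos, hand_pos)),
        }
    return feats

def likely_target(feats):
    """Hypothetical heuristic: prefer objects looked at and close to the hand."""
    return max(feats, key=lambda n: feats[n]["gaze_cos"] - feats[n]["hand_dist"])

# Assumed pose-estimator output, in metres.
head_pos = (0.0, 0.0, 1.6)
gaze_dir = (1.0, 0.0, -0.5)
hand_pos = (0.3, 0.0, 1.0)
objects = {"wrench": (1.0, 0.0, 1.1), "bin": (0.0, 1.0, 1.0)}

feats = intent_features(head_pos, gaze_dir, hand_pos, objects)
target = likely_target(feats)
print(target, feats[target])
```

In a full system these features would be passed to the prediction model rather than to a hand-written score; the heuristic is only there to show how the cues point toward one object.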

Reinforcement learning (RL) enables agents to develop predictive capabilities by iteratively refining strategies through interaction with an environment. This process involves the agent taking actions, receiving reward signals based on the accuracy of its predictions, and adjusting its internal model to maximize cumulative reward. Unlike supervised learning, RL does not require pre-labeled data; instead, the agent learns through exploration and exploitation of the environment’s dynamics. The framework utilizes a reward function to quantify prediction accuracy, guiding the agent toward optimal policies for anticipating future states. This trial-and-error approach allows the agent to adapt to complex, dynamic environments and improve its predictive performance over time without explicit programming of prediction rules.
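The reward-driven loop above can be sketched in its simplest form as a one-step, bandit-style simplification of RL with a tabular value estimate and epsilon-greedy exploration. The cues, the intents, the simulated human, and the noise level are invented for illustration; the paper's actual reward function and environment are not reproduced here.

```python
import random

CUES = ["looks_at_tool", "looks_at_bin", "steps_back"]   # hypothetical observations
INTENTS = ["reach_tool", "drop_part", "leave_area"]      # hypothetical predictions
TRUE_INTENT = {
    "looks_at_tool": "reach_tool",
    "looks_at_bin": "drop_part",
    "steps_back": "leave_area",
}

def simulate_human(cue, rng, noise=0.1):
    """Simulated human: usually follows the cue, sometimes acts at random."""
    if rng.random() < noise:
        return rng.choice(INTENTS)
    return TRUE_INTENT[cue]

def train(episodes=5000, alpha=0.1, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # q[cue][intent] estimates the expected reward of predicting that intent.
    q = {c: {a: 0.0 for a in INTENTS} for c in CUES}
    for _ in range(episodes):
        cue = rng.choice(CUES)
        if rng.random() < epsilon:
            guess = rng.choice(INTENTS)            # explore
        else:
            guess = max(q[cue], key=q[cue].get)    # exploit
        # Reward is 1 for a correct prediction, 0 otherwise.
        reward = 1.0 if guess == simulate_human(cue, rng) else 0.0
        q[cue][guess] += alpha * (reward - q[cue][guess])
    return q

q = train()
policy = {c: max(q[c], key=q[c].get) for c in CUES}
print(policy)
```

No labeled dataset is supplied: the agent discovers the cue-to-intent mapping only from the reward signal, which is the property the paragraph above describes.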

The predictive framework underwent testing in a simulated environment across three distinct tasks designed to evaluate its performance in anticipating human actions. Successful completion of these tasks – including object handoff, collaborative assembly, and dynamic obstacle avoidance – validated the system’s capacity for both prediction and adaptive behavior. Quantitative metrics, collected during simulation runs, demonstrated a consistent improvement in predictive accuracy over baseline models and confirmed the framework’s ability to adjust strategies in response to varying human behaviors and environmental conditions. These results indicate the framework’s potential for application in real-world human-robot interaction scenarios.

The Distributed Echo: Federated Learning and the Collective Intent

Federated Learning shifts machine learning from centralized data repositories to a distributed network of devices or servers. Models are trained directly on decentralized data sources, such as smartphones, edge devices, or hospital databases, without transferring sensitive information to a central location. Privacy improves because raw data stays with its originating source and only model updates are shared. Communication overhead can also fall: when the updates are smaller than the underlying datasets, exchanging them requires less bandwidth than shipping the data, although training typically takes many communication rounds. The distributed architecture also opens the door to more diverse training data, since organizations are more willing to participate when data privacy is prioritized, which in turn can yield more robust and generalized models.
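A minimal sketch of this exchange, using federated averaging (FedAvg) on a toy linear model, is shown below. The two clients, their data, and the hyperparameters are assumptions chosen for illustration; the article does not specify an aggregation rule, so FedAvg is named here as a common choice rather than the framework's method.

```python
def local_update(weights, data, lr=0.5, steps=10):
    """Client-side training: gradient steps on y ~ w0 + w1 * x using local data."""
    w0, w1 = weights
    for _ in range(steps):
        g0 = sum((w0 + w1 * x - y) for x, y in data) / len(data)
        g1 = sum((w0 + w1 * x - y) * x for x, y in data) / len(data)
        w0 -= lr * g0
        w1 -= lr * g1
    return [w0, w1]

def federated_average(client_weights, client_sizes):
    """Server-side aggregation: average weights, weighted by dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

def run_rounds(clients, rounds=30):
    global_w = [0.0, 0.0]
    for _ in range(rounds):
        # Each client starts from the current global model; raw data stays local.
        updates = [local_update(global_w, data) for data in clients]
        # Only the updated weights are sent back and combined.
        global_w = federated_average(updates, [len(d) for d in clients])
    return global_w

# Two hypothetical sites observing different ranges of the same relation y = 2x + 1.
client_a = [(x, 2 * x + 1) for x in (-2.0, -1.5, -1.0, -0.5, 0.0)]
client_b = [(x, 2 * x + 1) for x in (0.0, 0.25, 0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0)]

global_w = run_rounds([client_a, client_b])
print(global_w)  # approaches intercept 1 and slope 2
```

Each round transmits two numbers per client instead of the client's data points, which is the bandwidth and privacy trade described above in miniature.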

The capacity for models to learn from geographically and organizationally diverse datasets, without necessitating data centralization, represents a significant advancement in machine learning. Traditionally, training robust models demanded the aggregation of data into a single repository, raising concerns about privacy, security, and communication bandwidth. This distributed learning paradigm bypasses these limitations by bringing the model to the data, rather than the data to the model. Consequently, applications previously hindered by data silos – such as personalized healthcare informed by patient records across multiple hospitals, or localized traffic prediction leveraging sensor data from numerous cities – become increasingly feasible. The broadened scope extends to scenarios where data sharing is legally restricted or practically challenging, ultimately fostering innovation and enabling more inclusive and effective artificial intelligence systems.

To support safe and intuitive human-robot interaction, the system maintained a belief over nine distinct states, a probability distribution that is updated as new observations arrive and used to predict likely human actions. This predictive capability was evaluated at three levels of distance control (near, medium, and far) to simulate realistic interaction scenarios. By varying the permissible distance, researchers confirmed that the system could adapt its behavior and maintain a safety margin that helps prevent collisions and foster trust. Together, the nine-state belief and graded distance control offer a robust way to anticipate human intent and keep collaboration between humans and robots smooth.
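The belief mechanism can be illustrated with the standard POMDP belief update, b'(s') ∝ O(o | s') Σ_s T(s' | s) b(s). The sketch below is built on assumptions: the article does not say what the nine states are, so they are modeled here as three hypothetical intents crossed with three hypothetical emotional states, and the transition model, observation model, and risk thresholds are invented. The distance rule is a simple threshold heuristic, not a solved POMDP policy.

```python
from itertools import product

INTENTS = ["approach", "work", "retreat"]     # hypothetical
EMOTIONS = ["calm", "neutral", "stressed"]    # hypothetical
STATES = list(product(INTENTS, EMOTIONS))     # 3 x 3 = 9 states

def transition(s, s_next, stay=0.8):
    """Assumed T(s_next | s): the human mostly stays in the same state."""
    if s == s_next:
        return stay
    return (1 - stay) / (len(STATES) - 1)

def observation(o, s, hit=0.7):
    """Assumed O(o | s): the sensor reports the true state 70% of the time."""
    if o == s:
        return hit
    return (1 - hit) / (len(STATES) - 1)

def belief_update(belief, obs):
    """Bayes filter step: predict with T, correct with O, then normalize."""
    new = {}
    for s_next in STATES:
        predicted = sum(transition(s, s_next) * belief[s] for s in STATES)
        new[s_next] = observation(obs, s_next) * predicted
    total = sum(new.values())
    return {s: p / total for s, p in new.items()}

def choose_distance(belief, near_max=0.2, far_min=0.6):
    """Heuristic: keep farther away as belief mass on risky states grows."""
    risk = sum(p for (intent, emotion), p in belief.items()
               if intent == "approach" or emotion == "stressed")
    if risk >= far_min:
        return "far"
    if risk <= near_max:
        return "near"
    return "medium"

uniform = {s: 1 / len(STATES) for s in STATES}

risky = dict(uniform)
for obs in [("approach", "stressed")] * 3:
    risky = belief_update(risky, obs)

relaxed = dict(uniform)
for obs in [("work", "calm")] * 3:
    relaxed = belief_update(relaxed, obs)

print(choose_distance(uniform), choose_distance(risky), choose_distance(relaxed))
```

With no evidence the robot holds a medium distance; repeated observations of an approaching, stressed human push it to the far setting, and repeated observations of calm work let it move near.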

The pursuit of predictable systems, even those modeled with the elegance of Partially Observable Markov Decision Processes, invariably courts illusion. This framework, striving to anticipate human intent through stochastic modeling and emotion recognition, echoes a fundamental truth: the human element introduces irreducible uncertainty. As Henri Poincaré observed, “Mathematics is the art of giving reasons, even when one has no right to do so.” The attempt to quantify and predict action, while valuable for enhancing safety in human-robot collaboration, remains a compromise frozen in time. Dependencies – the inherent unpredictability of human behavior – persist, regardless of the sophistication of the predictive model. One builds not a fortress against chaos, but a garden within it.

What’s Next?

The pursuit of predictable collaboration, as exemplified by this work, inevitably bumps against the inherent stochasticity of human action. A framework built on intent prediction, however sophisticated, merely delays the encounter with irreducible uncertainty. The system functions not as a solution, but as a beautifully complex buffer against the inevitable. It maps, with increasing fidelity, the space of possible human behaviors, but the map is not the territory – and a sufficiently motivated actor will always find a path outside the cartography.

Future iterations will undoubtedly refine the emotional state recognition and integrate more granular data streams. Yet, the fundamental challenge remains: modeling a system that evolves faster than the model itself. Stability is merely an illusion that caches well. The true progress lies not in eliminating risk, but in designing for graceful degradation: architectures that embrace, rather than resist, the emergence of the unexpected. A guarantee is just a contract with probability.

This is not a failure of prediction, but a feature of the system. Chaos isn’t failure – it’s nature’s syntax. The goal, then, isn’t to control collaboration, but to cultivate an ecosystem within which both human and machine can adapt, learn, and, occasionally, productively stumble.

Original article: https://arxiv.org/pdf/2601.14809.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-22 08:02