Author: Denis Avetisyan

A new dataset offers researchers a unique window into how artificial intelligence agents are impacting the world of software development.

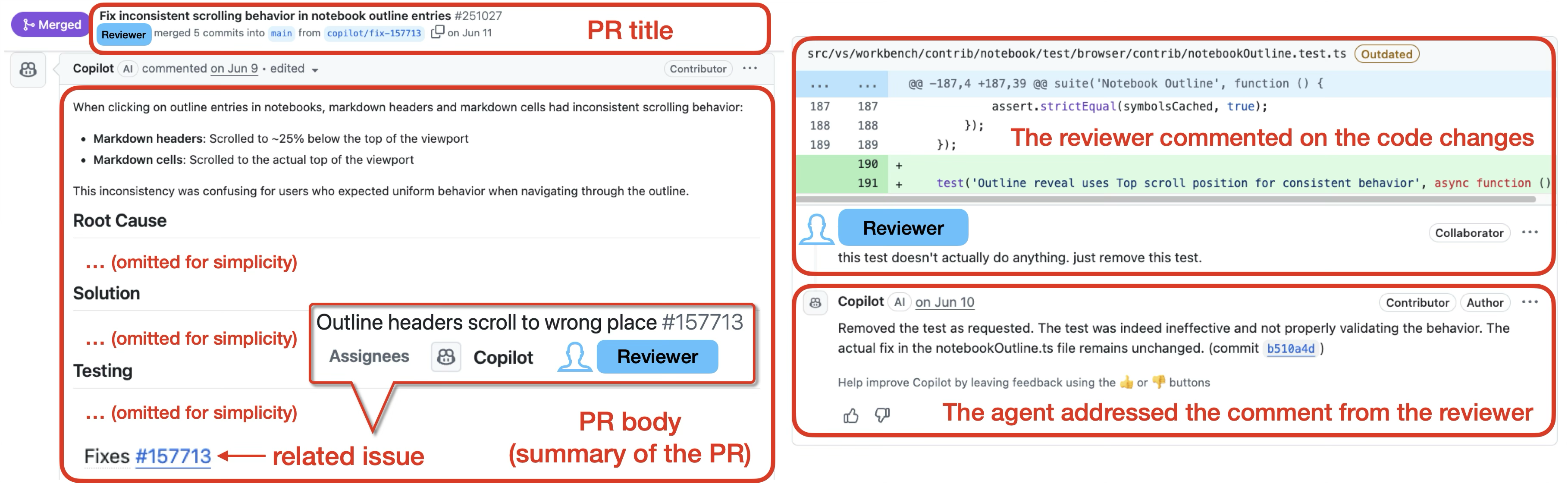

This paper introduces AIDev, a large-scale dataset of Agentic Pull Requests to investigate the adoption, quality, review, and risks of AI coding agents in real-world software engineering projects.

Despite the rapid proliferation of AI coding agents, a comprehensive understanding of their real-world impact on software development remains limited by a lack of dedicated, large-scale data. To address this gap, we introduce AIDev: Studying AI Coding Agents on GitHub, a dataset comprising 932,791 agent-authored pull requests spanning 116,211 repositories and 72,189 developers. This resource, which includes a curated subset with detailed code review information, provides a foundation for empirically investigating the adoption, quality, and collaborative dynamics of AI agents in modern software engineering. How will this data illuminate the future of human-AI collaboration and the evolving landscape of code creation?

The Evolving Landscape of Code Contribution

Modern software creation is rarely a solitary pursuit; instead, projects routinely involve distributed teams and open-source contributions, fostering a highly collaborative environment. This shift towards collective coding presents unique opportunities for artificial intelligence to integrate seamlessly into existing workflows. The increasing complexity of software systems, coupled with the demand for faster development cycles, means human developers are often overwhelmed by the sheer volume of tasks requiring attention. Consequently, AI tools are now positioned not as replacements for programmers, but as force multipliers, capable of automating repetitive tasks, identifying potential bugs, and even suggesting code improvements within these collaborative frameworks. This burgeoning interplay between human expertise and artificial intelligence promises to redefine the future of software engineering, accelerating innovation and broadening access to technological development.

The landscape of software development is undergoing a subtle yet significant transformation with the increasing prevalence of ‘Bot-generated Pull Requests’ (PRs). These aren’t simply automated formatting changes or basic tests; increasingly, AI systems are autonomously crafting and submitting code modifications directly into core software projects. This represents a departure from AI’s traditional role as a development tool – assisting human programmers – towards genuine participation in the software creation lifecycle. While currently focused on relatively simple tasks – like bug fixes or documentation updates – the rise of bot-generated PRs signals a broader trend: AI is no longer merely augmenting developers, but actively becoming a contributor, reshaping established workflows and prompting a re-evaluation of collaborative coding practices. The sheer volume of these contributions is also noteworthy, hinting at a future where AI handles a substantial portion of routine coding tasks, freeing human developers to concentrate on more complex challenges and innovative designs.

True integration of artificial intelligence into software development hinges not merely on assistance, but on the creation of autonomous ‘Coding Agents’. These agents represent a leap beyond current AI tools, demanding the capacity for independent task completion – from understanding complex project requirements and designing solutions, to writing, testing, and debugging code without constant human direction. The development of such agents necessitates advancements in areas like reasoning, planning, and generalization, allowing them to navigate the inherent ambiguity and evolving nature of real-world software projects. Ultimately, robust Coding Agents promise to unlock a new era of productivity, enabling developers to focus on higher-level design and innovation while these AI entities handle the intricacies of implementation, fundamentally reshaping the software creation process.

AIDev: A Dataset Reflecting Agentic Contribution

The AIDev dataset consists of 932,791 pull requests, termed ‘Agentic-PRs’, sourced directly from public GitHub repositories. These contributions form a substantial corpus of code modifications created by AI agents, providing a large-scale resource for analyzing the practical impact of AI in software development. The dataset’s size gives studies of agent behavior, code quality, and the kinds of tasks agents are used for enough observations to support statistically robust conclusions. Unlike synthetic benchmarks, it reflects contributions submitted to live software projects, offering insight into agent performance beyond controlled environments.

The AIDev dataset includes contributions from five coding agents: OpenAI Codex, Devin, GitHub Copilot, Cursor, and Claude Code. This allows researchers to compare the outputs and behaviors of each agent across a substantial volume of real-world code contributions. The dataset records which agent produced each pull request, which makes it possible to compute per-agent measures such as lines of code contributed, commit frequency, and the types of changes implemented. This granular tracking supports both quantitative assessment and qualitative comparison of agent performance, helping to identify strengths and weaknesses in areas like bug fixing, feature implementation, and code refactoring.
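As a rough illustration of the kind of per-agent comparison described above, the sketch below tallies pull requests and merge rates by agent. The record layout (`agent`, `merged`) and the sample rows are hypothetical and chosen only for illustration; they are not the dataset's actual schema or data.

```python
from collections import defaultdict

# Hypothetical records: field names and values are illustrative only,
# not the actual AIDev schema or contents.
SAMPLE_PRS = [
    {"agent": "OpenAI Codex", "merged": True},
    {"agent": "OpenAI Codex", "merged": False},
    {"agent": "Devin", "merged": True},
    {"agent": "GitHub Copilot", "merged": False},
]


def merge_rate_by_agent(prs):
    """Return {agent: (pr_count, merged_fraction)} for a list of PR records."""
    tallies = defaultdict(lambda: [0, 0])  # agent -> [total, merged]
    for pr in prs:
        tally = tallies[pr["agent"]]
        tally[0] += 1
        tally[1] += int(pr["merged"])
    return {
        agent: (total, merged / total)
        for agent, (total, merged) in tallies.items()
    }


if __name__ == "__main__":
    for agent, (count, rate) in sorted(merge_rate_by_agent(SAMPLE_PRS).items()):
        print(f"{agent}: {count} PRs, {rate:.0%} merged")
```

The same grouping pattern extends to other per-agent measures, such as lines changed or change type, once the corresponding fields are available.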

The AIDev dataset leverages the ‘Conventional Commits’ standard to provide structured annotation of each pull request’s purpose, facilitating analysis of agent intent. This commit message format categorizes changes based on type (e.g., fix, feat, docs), scope, and optional body/footer sections, allowing for programmatic filtering and categorization of agent contributions. The dataset encompasses 116,211 unique GitHub repositories, representing a diverse range of projects and coding styles, and enabling broad generalization of observed agent behaviors.
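To make the Conventional Commits structure concrete, here is a minimal sketch of parsing a header line of the form `type(scope): description`. It covers only the header, not the body or footer, and it is an illustration of the format rather than the procedure used to label the dataset; the function name `parse_header` is an assumption introduced here.

```python
import re

# Header shape: type, optional "(scope)", optional "!" marking a breaking
# change, then ": " and a description.
HEADER_RE = re.compile(
    r"^(?P<type>[A-Za-z]+)"
    r"(?:\((?P<scope>[^)]+)\))?"
    r"(?P<breaking>!)?"
    r": (?P<description>.+)$"
)


def parse_header(title):
    """Parse a Conventional Commits header; return None if it does not match."""
    match = HEADER_RE.match(title.strip())
    if match is None:
        return None
    return {
        "type": match.group("type").lower(),
        "scope": match.group("scope"),
        "breaking": match.group("breaking") is not None,
        "description": match.group("description"),
    }


if __name__ == "__main__":
    print(parse_header("fix(parser): handle empty input"))
    print(parse_header("Update README"))  # free-form title, not parsed
```

Titles that do not follow the convention return `None`, so a real pipeline would need a fallback (manual or model-based labeling) for free-form titles.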

Accessing and Validating Agentic Performance

The AIDev dataset is publicly available via two primary distribution channels to facilitate broad access and collaborative research. Researchers can download the complete dataset, and associated metadata, through the Hugging Face Hub, benefiting from version control and integration with popular machine learning frameworks. Alternatively, a permanent archive of the dataset is hosted on Zenodo, a CERN-managed repository providing long-term preservation and DOI-based citation. This dual availability ensures data accessibility and reproducibility for the research community investigating coding agent performance and software development workflows.

The AIDev dataset complements established benchmark suites such as SWE-bench in the quantitative evaluation of Coding Agents. SWE-bench provides a standardized set of tasks drawn from real GitHub issues, with patches scored by whether they pass the associated tests, allowing researchers to compare the capabilities of different agents on a consistent testing ground. Studying AIDev alongside such benchmarks lets researchers relate controlled measurements of agent proficiency to how agent contributions fare in real projects. Benchmark performance is commonly reported with metrics like pass@k, the probability that at least one of k attempts solves a problem.
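For reference, pass@k is often computed with the unbiased estimator introduced alongside the HumanEval benchmark: generate n samples per problem, count the c samples that pass, and estimate 1 - C(n-c, k) / C(n, k). The sketch below implements that formula; it is an illustration of the metric and is not taken from the AIDev paper.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased pass@k estimate for one problem.

    n: total samples generated
    c: number of samples that passed
    k: attempt budget being evaluated (1 <= k <= n)
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every size-k draw contains a pass.
        return 1.0
    # Probability that a random size-k subset contains no passing sample,
    # subtracted from 1.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    print(pass_at_k(n=10, c=3, k=1))
    print(pass_at_k(n=10, c=3, k=5))
```

A benchmark-level score is then the mean of this value over all problems.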

The Agentic-PRs in AIDev involve 72,189 developers. A curated subset consists of 33,596 Agentic-PRs from 2,807 GitHub repositories, each selected for having more than 100 GitHub stars, a proxy for community recognition and project maturity. This filtering aims to focus the subset on well-maintained, actively developed projects.
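The star-based selection can be sketched as a simple filter. The field name `repo`, the `repo_stars` mapping, and the sample values below are hypothetical; only the threshold of more than 100 stars comes from the description above.

```python
def curated_subset(prs, repo_stars, min_stars=100):
    """Keep PRs whose repository has strictly more than `min_stars` stars.

    prs: list of dicts with a "repo" key (hypothetical layout)
    repo_stars: mapping from repository name to star count
    """
    return [pr for pr in prs if repo_stars.get(pr["repo"], 0) > min_stars]


if __name__ == "__main__":
    # Illustrative values only.
    stars = {"org/popular": 2500, "org/borderline": 100, "org/small": 12}
    prs = [
        {"id": 1, "repo": "org/popular"},
        {"id": 2, "repo": "org/borderline"},
        {"id": 3, "repo": "org/small"},
        {"id": 4, "repo": "org/unknown"},
    ]
    print([pr["id"] for pr in curated_subset(prs, stars)])
```

Repositories with exactly 100 stars, or with no recorded star count, are excluded under this reading of "exceeding 100".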

Implications for a Collaborative Future of Code

The ‘AIDev’ dataset documents how far Coding Agents have already moved into everyday software development workflows. These AI systems are no longer limited to simple code completion; they are contributing to more complex tasks, from generating pull requests to addressing nuanced bug fixes, as the real-world ‘Agentic-PRs’ in the dataset show. This automation isn’t intended to replace human developers, but rather to augment their abilities, handling repetitive tasks and accelerating project timelines. The scale of agent activity suggests a future where developers can focus on higher-level design and innovation while AI agents manage the more granular aspects of coding, increasing productivity and making software creation more efficient.

A novel dataset centered on ‘Agentic-PRs’ – pull requests autonomously generated by AI coding agents – offers a grounded evaluation of artificial intelligence’s software development prowess. This approach moves beyond synthetic benchmarks to analyze performance on genuine coding tasks, revealing current limitations and pinpointing specific areas requiring refinement. Crucially, an accompanying analysis of regional policy interventions – specifically, restrictions on large language model (LLM) access – demonstrates a measurable impact on developer productivity, with a 6.4% reduction observed in affected areas. These findings highlight not only the immediate capabilities of AI-driven coding, but also the potential economic consequences of policies that limit access to the underlying technologies, underscoring the importance of informed policy decisions as AI integration expands.

The trajectory of software development increasingly points toward a collaborative partnership between human expertise and artificial intelligence. Future iterations of ‘coding agents’ are not envisioned as replacements for developers, but as powerful tools capable of handling repetitive tasks, automating testing procedures, and even suggesting optimized code solutions. This synergy promises to unlock new levels of productivity, allowing engineers to focus on the more nuanced and creative aspects of software design – architecture, user experience, and problem-solving. Such advancements necessitate ongoing research into areas like agentic reasoning, robust error handling, and seamless integration with existing development environments, ultimately fostering a future where complex software projects benefit from the combined strengths of both human ingenuity and artificial intelligence.

The creation of the AIDev dataset exemplifies a commitment to discerning signal from noise within the burgeoning field of AI coding agents. The project meticulously catalogs Agentic Pull Requests, acknowledging that true progress isn’t simply about adding more features or complexity, but rather about understanding the fundamental quality and impact of these agents on existing software engineering workflows. As Donald Davies observed, “The only thing that really matters is communication,” and this dataset serves as a crucial communication channel, providing researchers with the data needed to assess the efficacy and potential risks inherent in integrating AI into the collaborative process of code development. The focus on real-world PRs, rather than synthetic benchmarks, demonstrates a preference for practical insight over theoretical perfection.

What Lies Ahead?

The creation of the AIDev dataset is not, in itself, a resolution. It is, rather, a sharpening of the questions. The proliferation of agentic pull requests necessitates a reassessment of established code review practices. Current metrics, designed for human contribution, may prove inadequate, even misleading, when applied to code generated by non-human entities. The field must move beyond simply detecting change and focus on discerning improvement.

A critical, and largely unaddressed, concern is the propagation of subtle errors within these agent-driven workflows. The dataset offers a lens through which to study these ‘latent bugs’ – those accepted not through active validation, but through the absence of active rejection. Understanding the error profiles of these agents, and their susceptibility to adversarial prompting, is paramount.

Ultimately, the true test will not be the agents’ ability to produce code, but their capacity to refine it. The dataset invites research into automated assessment of code quality beyond superficial metrics, and into the development of agents capable of critically evaluating their own work – a recursive loop of improvement, mirroring, perhaps, the elusive ideal of self-correction in any complex system.

Original article: https://arxiv.org/pdf/2602.09185.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 06:49