Author: Denis Avetisyan

A new agentic system powered by vision and language models is automating complex tasks at synchrotron beamlines, accelerating materials characterization.

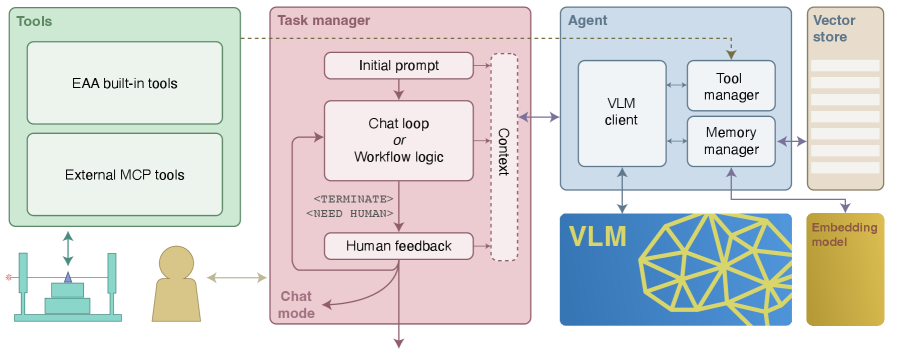

This work introduces EAA, an Experiment Automation Agent utilizing a Model Context Protocol for tool-using AI and long-term memory in automated experimentation.

Automated materials characterization often demands significant expertise and can be a bottleneck in scientific discovery. To address this, we present ‘EAA: Automating materials characterization with vision language model agents’, an agentic system leveraging multimodal reasoning and tool-using AI to automate complex workflows at experimental facilities. EAA’s flexible architecture, which incorporates long-term memory and compatibility with the Model Context Protocol, enables both fully autonomous operation and intuitive, natural language-guided experimentation. Could such vision-capable agents fundamentally reshape how materials science research is conducted, accelerating innovation and broadening access to advanced characterization techniques?

The Inevitable Bottleneck of Manual Discovery

Synchrotron beamlines, powerful tools for materials and life science research, are often hampered by workflows that rely heavily on manual operation and intervention. While the instruments themselves are at the cutting edge of technology, the processes of experiment setup, data collection, and initial analysis remain surprisingly labor-intensive. Researchers must physically adjust samples, meticulously monitor instrument parameters, and manually record observations – a process that significantly limits the volume of data achievable and extends the time required for discovery. This reliance on human control creates a bottleneck, preventing these facilities from fully capitalizing on their potential and slowing the pace of scientific advancement, particularly in fields demanding large datasets and high-throughput experimentation.

Modern scientific experiments, particularly those utilizing complex instrumentation, generate data at an unprecedented rate, quickly overwhelming traditional analytical pipelines. While automation is crucial for handling this influx, current systems often falter due to their rigidity and inability to respond to dynamic experimental conditions. These approaches typically rely on pre-programmed sequences, lacking the sophisticated algorithms necessary for real-time data interpretation and adaptive control. Consequently, experiments may proceed along suboptimal paths, missing crucial data points or failing to capitalize on unexpected results – a significant impediment to accelerating scientific discovery. The challenge lies not simply in automating what is currently done, but in creating systems capable of autonomously determining how to best proceed based on evolving experimental feedback.

The limitations of current scientific instrumentation extend beyond mere operational speed; a significant bottleneck arises from the inability of systems to independently refine experimental parameters. Many facilities rely on pre-programmed sequences or require constant human intervention to adjust settings, resulting in data acquisition that often fails to fully explore the experimental landscape. This lack of autonomous optimization means potentially crucial data points are missed, and the time required to achieve meaningful results is substantially extended. Consequently, discoveries are delayed, and the full potential of sophisticated equipment remains unrealized, as systems are unable to dynamically respond to incoming data and intelligently navigate toward optimal conditions for observation and analysis.

![During interactive data acquisition, the agent was initially shown a broad image (a), then prompted to perform finer scans of a selected region (b) indicated by a dashed box, despite a minor hardware-related truncation affecting the right side of scans with Δ = 2 μm.](https://arxiv.org/html/2602.15294v1/x6.png)

The Emergence of Experiment Automation Agents

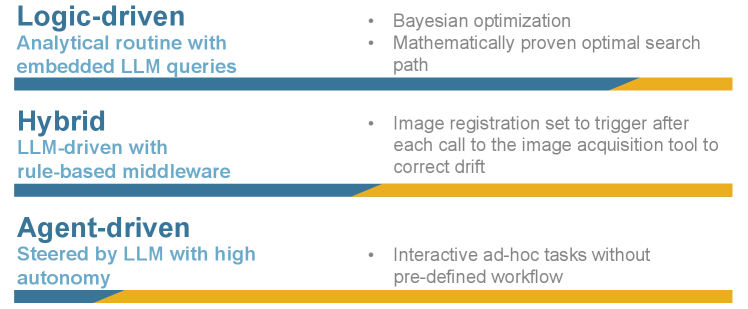

Experiment Automation Agents (EAAs) constitute an agentic system engineered for the autonomous control and optimization of experimental procedures within complex facilities. This system moves beyond simple automation by incorporating decision-making capabilities, allowing it to dynamically adjust experimental parameters and workflows without direct human intervention. EAAs are designed to operate in environments where real-time adaptation is crucial, such as scientific laboratories, manufacturing plants, or testing facilities. The core principle involves an agent continuously observing the experiment’s state, evaluating progress against defined goals, and then executing actions to improve outcomes or address unforeseen circumstances. This contrasts with traditional automated systems that execute pre-programmed sequences without the ability to learn or respond to changes outside of those defined parameters.
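
The observe, evaluate, act cycle described above can be sketched in a few lines. This is a minimal illustration, not the EAA implementation: `ExperimentState`, `simulated_sharpness`, and `run_agent_loop` are hypothetical names, the measurement is simulated, and a simple hill-climbing rule stands in for the model-driven decision step.

```python
from dataclasses import dataclass


@dataclass
class ExperimentState:
    """Hypothetical snapshot of what the agent can observe."""
    focus_position: float  # position of a focusing motor (arbitrary units)
    sharpness: float       # quality score computed from the latest image


def simulated_sharpness(position: float, best: float = 3.0) -> float:
    """Stand-in for a real measurement: peaks when position == best."""
    return 1.0 / (1.0 + (position - best) ** 2)


def run_agent_loop(start: float, goal_sharpness: float, step: float = 0.5,
                   max_iterations: int = 50) -> ExperimentState:
    """Observe, evaluate, act until the goal is met or the budget runs out."""
    position = start
    state = ExperimentState(position, simulated_sharpness(position))
    for _ in range(max_iterations):
        # Evaluate: stop once the goal has been reached.
        if state.sharpness >= goal_sharpness:
            break
        # Act: try one step in each direction and keep the better one.
        candidates = [position + step, position - step]
        best_candidate = max(candidates, key=simulated_sharpness)
        if simulated_sharpness(best_candidate) <= state.sharpness:
            step /= 2.0  # no improvement, so refine the step size
            continue
        position = best_candidate
        # Observe: take a new measurement at the new position.
        state = ExperimentState(position, simulated_sharpness(position))
    return state


final_state = run_agent_loop(start=0.0, goal_sharpness=0.99)
```

The difference from a pre-programmed sequence is that the next action depends on the latest observation rather than on a fixed script.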

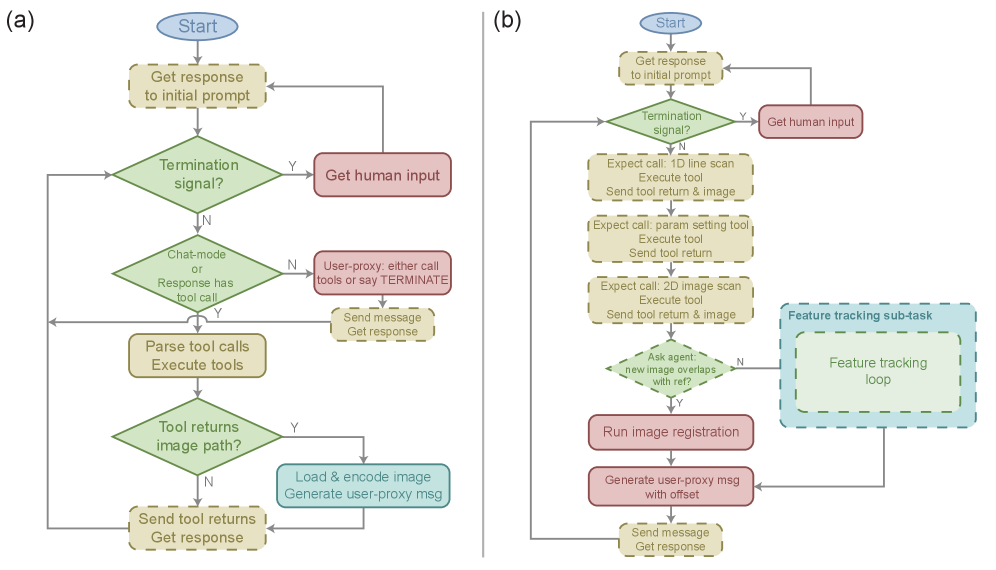

Experiment Automation Agents (EAAs) utilize Large Language Models (LLMs) and Vision Language Models (VLMs) to process and understand the objectives of experimental procedures. LLMs provide the capability for natural language understanding, allowing the EAA to interpret high-level goals expressed in text. VLMs extend this capability by enabling the system to process and interpret visual data, such as images from microscopes or sensor readings, providing contextual awareness of the experiment’s physical state. This combined processing allows the EAA to dynamically adjust experimental parameters and respond to unforeseen circumstances, effectively adapting to changing conditions within the experimental setup and ensuring continued progress towards the defined goals.

Function Calling is a key enabling technology for Experiment Automation Agents, providing the mechanism by which a Large Language Model (LLM) translates its reasoning into concrete actions within a physical experimental setup. This process involves the LLM identifying the need for a specific function – such as adjusting a valve setting, initiating a data acquisition sequence, or modifying a device parameter – and then invoking that function via a defined API. The API call includes necessary parameters, allowing the LLM to directly control hardware and software tools without requiring a human intermediary. This direct linkage between reasoning and action is crucial for autonomous experiment control and optimization, enabling the EAA to respond dynamically to experimental conditions and achieve desired outcomes.
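
As a rough sketch of that reasoning-to-action link, the snippet below parses a tool call that a model might emit as JSON and dispatches it to a Python function. The tool names (`move_stage`, `acquire_image`), their parameters, and the `{"name": ..., "arguments": ...}` message shape are assumptions made for illustration; the source does not specify EAA's actual API.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical instrument functions; a real deployment would drive hardware.
def move_stage(x_um: float, y_um: float) -> Dict[str, Any]:
    return {"status": "ok", "x_um": x_um, "y_um": y_um}


def acquire_image(exposure_s: float) -> Dict[str, Any]:
    return {"status": "ok", "exposure_s": exposure_s}


# The functions the model is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "move_stage": move_stage,
    "acquire_image": acquire_image,
}


def dispatch_tool_call(raw_call: str) -> Dict[str, Any]:
    """Parse a model-emitted tool call and run the matching function."""
    try:
        call = json.loads(raw_call)
        name = call["name"]
        arguments = call["arguments"]
    except (json.JSONDecodeError, KeyError, TypeError) as exc:
        return {"status": "error", "message": f"malformed call: {exc}"}
    if name not in TOOLS:
        return {"status": "error", "message": f"unknown tool: {name}"}
    try:
        return TOOLS[name](**arguments)
    except TypeError as exc:
        # Raised when the arguments do not match the function signature.
        return {"status": "error", "message": f"bad arguments: {exc}"}


result = dispatch_tool_call(
    '{"name": "move_stage", "arguments": {"x_um": 10.0, "y_um": -2.5}}'
)
```

Returning errors as data rather than raising them lets the result be fed back to the model, which can then correct its next call.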

The Experiment Automation Agent (EAA) operates as a Tool Agent by dynamically selecting and utilizing tools necessary for experiment control and data acquisition. This functionality is managed through a Tool Manager component, which maintains a registry of available tools, including hardware interfaces, data analysis scripts, and simulation software. The EAA, guided by its defined experimental goals, queries this registry to identify the optimal tool for each required task. Intelligent tool selection is based on the current experimental state, tool capabilities, and pre-defined dependencies, enabling the EAA to autonomously execute complex experimental procedures without manual intervention. The Tool Manager facilitates version control and ensures compatibility between the EAA and the available tools.
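
A tool registry of this kind might look roughly like the following. The `Tool` and `ToolManager` classes, their fields, and the capability tags are hypothetical; this sketch covers only registration, dependency checks, and lookup by capability, and leaves out the version control mentioned above.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Tool:
    """Hypothetical registry entry describing one callable tool."""
    name: str
    description: str
    capabilities: List[str]
    run: Callable[..., object]
    requires: List[str] = field(default_factory=list)  # prerequisite tool names


class ToolManager:
    """Keeps a registry of tools and answers capability queries."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"tool already registered: {tool.name}")
        missing = [dep for dep in tool.requires if dep not in self._tools]
        if missing:
            raise ValueError(f"{tool.name} depends on unregistered tools: {missing}")
        self._tools[tool.name] = tool

    def find(self, capability: str) -> List[str]:
        """Return the names of all tools that advertise a capability."""
        return sorted(
            tool.name for tool in self._tools.values()
            if capability in tool.capabilities
        )

    def call(self, name: str, **kwargs: object) -> object:
        return self._tools[name].run(**kwargs)
```

In an agentic setting, the output of `find` together with each tool's `description` would be what the model sees when deciding which tool fits the current task.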

Intelligent Data Acquisition and the Illusion of Control

EAAs employ Image Acquisition techniques to optimize data collection, integrating automated focusing and feature search capabilities to reduce manual intervention and increase throughput. Automated focusing ensures images are consistently sharp and in-plane, while feature search algorithms autonomously locate regions of interest within the sample. This combination minimizes the need for operator control during image capture, enabling faster and more reproducible data acquisition. The system is designed to handle diverse sample types and imaging conditions, and is particularly effective in scenarios requiring high-volume or repetitive image collection.
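
One common way to automate focusing is to sweep a focus axis and keep the position that maximizes an image-sharpness score. The sketch below does this with a gradient-energy score. It illustrates the general technique rather than the method used in EAA, and `simulated_acquire` is a hypothetical stand-in for a real detector readout.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]


def gradient_energy(image: Image) -> float:
    """Sum of squared differences between adjacent pixels (higher = sharper)."""
    rows, cols = len(image), len(image[0])
    total = 0.0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                total += (image[r][c + 1] - image[r][c]) ** 2
            if r + 1 < rows:
                total += (image[r + 1][c] - image[r][c]) ** 2
    return total


def autofocus(positions: Sequence[float],
              acquire: Callable[[float], Image]) -> float:
    """Acquire one image per position and return the sharpest position."""
    return max(positions, key=lambda p: gradient_energy(acquire(p)))


def simulated_acquire(position: float, in_focus: float = 1.5) -> Image:
    """Simulated detector: a checkerboard whose contrast drops with defocus."""
    contrast = 1.0 / (1.0 + abs(position - in_focus))
    return [[contrast * ((r + c) % 2) for c in range(8)] for r in range(8)]


best_position = autofocus([0.0, 0.5, 1.0, 1.5, 2.0, 2.5], simulated_acquire)
```

A coarse sweep like this is often followed by a finer sweep around the best position; a vision-capable agent could also judge sharpness directly from the images instead of relying on a fixed metric.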

Image registration is a core process within the EAA system, utilized to spatially align multiple datasets acquired from different modalities or time points. This alignment is crucial for combining complementary information from diverse sources, such as fluorescence microscopy and brightfield imaging, or for tracking changes within a sample over time. The technique compensates for variations in scale, rotation, and translation, enabling accurate comparison and analysis of the registered datasets. By normalizing spatial relationships, image registration significantly improves the quality and interpretability of results, facilitating more precise quantitative measurements and enhanced visualization of complex biological structures or processes.
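
To make the idea concrete, here is a deliberately simple registration sketch that recovers only an integer translation by exhaustive search over small shifts. It does not handle the scale and rotation mentioned above, and it is not the registration method used in EAA; the function names and the test pattern are assumptions for illustration.

```python
from typing import List, Tuple

Image = List[List[float]]


def translate(image: Image, dy: int, dx: int) -> Image:
    """Shift image content by (dy, dx) pixels, padding with zeros."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            rr, cc = r + dy, c + dx
            if 0 <= rr < rows and 0 <= cc < cols:
                out[rr][cc] = image[r][c]
    return out


def estimate_shift(reference: Image, moving: Image,
                   max_shift: int = 3) -> Tuple[int, int]:
    """Return (dy, dx) such that moving[r + dy][c + dx] best matches reference[r][c]."""
    rows, cols = len(reference), len(reference[0])
    best_shift, best_error = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            error, count = 0.0, 0
            for r in range(rows):
                for c in range(cols):
                    rr, cc = r + dy, c + dx
                    if 0 <= rr < rows and 0 <= cc < cols:
                        error += (reference[r][c] - moving[rr][cc]) ** 2
                        count += 1
            # Compare mean squared error over the overlapping region only.
            if count and error / count < best_error:
                best_error, best_shift = error / count, (dy, dx)
    return best_shift


# A small test pattern with a few distinct bright pixels.
reference = [[0.0] * 8 for _ in range(8)]
reference[2][3], reference[2][4], reference[3][3], reference[4][5] = 1.0, 2.0, 3.0, 4.0
moving = translate(reference, 2, -1)
shift = estimate_shift(reference, moving)
```

Exhaustive search scales poorly with image size and shift range, which is why practical pipelines usually rely on FFT-based or feature-based methods instead.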

The Intelligent Data Acquisition and Analysis system incorporates Retrieval-Augmented Generation (RAG) to improve data-driven decision-making. RAG functions by accessing and integrating information from relevant knowledge bases during the experimental process. This allows the system to dynamically refine experimental parameters based on existing data and contextual information. Furthermore, RAG facilitates improved data interpretation by cross-referencing acquired data with the knowledge base, providing a more informed and comprehensive analysis of results.
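
The retrieve-then-prompt pattern behind RAG can be sketched with a toy retriever. The example below ranks notes by bag-of-words cosine similarity and prepends the best matches to the question. Production systems typically use learned embeddings and a vector store instead; the notes, function names, and prompt layout here are all made up for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words representation of a text."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bags of words."""
    dot = sum(a[token] * b[token] for token in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents most similar to the query."""
    query_bag = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: cosine(query_bag, tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: List[str], top_k: int = 2) -> str:
    """Prepend the retrieved context to the question sent to the model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge-base entries.
notes = [
    "Use a 2 um step size for fine scans of small features.",
    "The detector needs a 30 minute warm-up before calibration.",
    "Coarse survey scans use a 10 um step size.",
]
prompt = build_prompt("What step size should fine scans use?", notes)
```

The model then answers with the retrieved notes in its context, which is how stored knowledge can influence parameter choices without retraining.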

The system exhibits high precision in visual tasks related to marker identification. Using Gemini 3 Pro Preview, the system located marker positions with an error of less than 5 pixels. With GPT-5 and standard Gemini models with reasoning capabilities enabled, the error was slightly larger but still less than 10 pixels. These results indicate reliable marker localization across different model architectures and processing approaches.
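
For readers who want to reproduce this kind of figure on their own data, a pixel-level localization error can be computed as the distance between a predicted and a reference marker position. The snippet assumes Euclidean distance, which the source does not confirm, and the coordinates are invented for the example.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def pixel_error(predicted: Point, truth: Point) -> float:
    """Euclidean distance in pixels between predicted and true positions."""
    return math.hypot(predicted[0] - truth[0], predicted[1] - truth[1])


def fraction_within(predictions: Sequence[Point], truths: Sequence[Point],
                    tolerance_px: float) -> float:
    """Fraction of predictions whose error is below the tolerance."""
    errors = [pixel_error(p, t) for p, t in zip(predictions, truths)]
    return sum(e < tolerance_px for e in errors) / len(errors)


# Invented example coordinates (x, y) in pixels.
predictions = [(103.0, 204.0), (50.0, 50.0), (12.0, 9.0)]
truths = [(100.0, 200.0), (50.0, 52.0), (0.0, 0.0)]
share_under_10px = fraction_within(predictions, truths, tolerance_px=10.0)
```

Here the first prediction is off by exactly 5 pixels (a 3-4-5 triangle), the second by 2 pixels, and the third by 15 pixels, so two of the three fall within a 10-pixel tolerance.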

During testing, the Intelligent Data Acquisition and Analysis system successfully completed all image acquisition attempts, achieving a 100% hit rate. This held across all models used (Gemini 3 Pro Preview, GPT-5, and standard Gemini models), indicating that the automated image acquisition process is robust regardless of the underlying model’s reasoning or processing capabilities.

The Inevitable Failures of Autonomous Systems

Embedded safety guardrails form a foundational element of the Experiment Automation Agent (EAA) architecture, proactively mitigating risks inherent in automated experimentation. These aren’t simply reactive error-handling mechanisms, but rather preemptive constraints woven into the agent’s operational logic. The system rigorously validates proposed actions against pre-defined experimental protocols, effectively preventing deviations that could compromise data integrity, instrument safety, or experimental objectives. This includes limitations on parameter ranges, permissible tool interactions, and a continuous monitoring system to detect and flag anomalous behavior. By prioritizing adherence to established scientific methods and boundaries, the EAA fosters a robust and dependable environment for autonomous research, ensuring that explorations remain within safe and scientifically valid parameters.
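
A preemptive check of this kind can be sketched as a validation step that runs before any proposed action reaches the instrument. The `Guardrails` structure, the tool and parameter names, and the limits below are hypothetical; the source does not describe how EAA encodes its constraints.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class Guardrails:
    """Hypothetical constraint set applied before an action is executed."""
    allowed_tools: Set[str]
    limits: Dict[str, Tuple[float, float]]  # parameter name -> (min, max)


def check_action(tool: str, arguments: Dict[str, float],
                 rails: Guardrails) -> List[str]:
    """Return a list of violations; an empty list means the action may run."""
    violations: List[str] = []
    if tool not in rails.allowed_tools:
        violations.append(f"tool '{tool}' is not permitted")
    for name, value in arguments.items():
        if name not in rails.limits:
            # Unknown parameters are rejected rather than silently accepted.
            violations.append(f"parameter '{name}' has no configured limit")
            continue
        low, high = rails.limits[name]
        if not low <= value <= high:
            violations.append(f"{name}={value} outside [{low}, {high}]")
    return violations


rails = Guardrails(
    allowed_tools={"move_stage"},
    limits={"x_um": (-100.0, 100.0), "y_um": (-100.0, 100.0)},
)
problems = check_action("move_stage", {"x_um": 250.0, "y_um": 0.0}, rails)
```

Rejecting parameters that have no configured limit is a deliberately conservative choice: anything the protocol does not explicitly cover is blocked by default.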

The seamless integration of large language models (LLMs) into automated experimental systems hinges on clear and consistent communication, and the Model Context Protocol (MCP) addresses this critical need. This standardized interface defines a common language for the LLM to interact with diverse scientific tools – from robotic arms and sensors to data analysis software and simulation engines. By establishing a predictable exchange of information, the MCP ensures reliable operation, minimizing errors and maximizing the reproducibility of experiments. Rather than requiring bespoke coding for each new instrument or analytical method, researchers can leverage the MCP to rapidly deploy LLMs across a wide range of scientific workflows, fostering interoperability and accelerating the pace of discovery by simplifying the complex task of coordinating multiple devices and data streams.
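
To give a sense of what this standardized exchange looks like on the wire, the sketch below builds a tool invocation and reads back a result, assuming the JSON-RPC 2.0 framing and the `tools/call` method described in the public MCP specification. The tool name `acquire_image` and its argument are hypothetical, and a real client would normally use an MCP SDK rather than hand-built messages.

```python
import json
from typing import Any, Dict


def make_tool_call_request(request_id: int, tool_name: str,
                           arguments: Dict[str, Any]) -> str:
    """Serialize a JSON-RPC 2.0 request that asks a server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def read_tool_result(raw_response: str) -> str:
    """Extract the text content of a tool result, raising on errors."""
    response = json.loads(raw_response)
    if "error" in response:  # protocol-level failure
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    text = "\n".join(
        item["text"] for item in result.get("content", [])
        if item.get("type") == "text"
    )
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text or "tool reported an error")
    return text


# Hypothetical tool name and argument, for illustration only.
request = make_tool_call_request(7, "acquire_image", {"exposure_s": 0.1})
```

Because every tool is invoked through the same message shape, adding a new instrument means exposing it on a server rather than writing new glue code on the agent side.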

Experiment Automation Agents (EAAs) represent a substantial leap forward in scientific efficiency by systematically automating previously manual procedures and refining experimental setups. This automation extends beyond simple task completion; EAAs leverage algorithms to dynamically optimize parameters – such as temperature, pressure, or reagent concentrations – leading to more precise and informative results with reduced resource expenditure. Consequently, researchers experience a notable acceleration in the rate of discovery, as EAAs can perform experiments around the clock, analyze data in real-time, and iteratively refine approaches without the delays inherent in human-driven processes. The resulting reduction in operational costs, coupled with the increased speed of innovation, positions EAAs as a transformative technology for laboratories across diverse scientific disciplines.

The advent of Experiment Automation Agents (EAAs) signifies a fundamental shift in how scientific inquiry is conducted. Rather than dedicating significant time to the meticulous, repetitive tasks of experiment control and data collection, researchers are now empowered to concentrate on the more nuanced aspects of their work – the analysis of results, the interpretation of complex patterns, and the formulation of novel hypotheses. This transition isn’t merely about increased efficiency; it represents a move toward a higher cognitive level of scientific engagement, allowing investigators to leverage the precision and consistency of automated systems to unlock insights previously obscured by the demands of manual operation. By offloading tedious control functions, EAAs cultivate an environment where creativity and critical thinking can flourish, ultimately accelerating the pace of discovery and fostering more profound understanding.

The pursuit of automated experimentation, as demonstrated by EAA, isn’t about achieving flawless execution, but rather about embracing the inevitable drift inherent in complex systems. The system doesn’t fail to characterize materials perfectly; it evolves a unique method of doing so, constrained by the environment and its own internal state. Ada Lovelace observed that “The Analytical Engine has no pretensions whatever to originate anything.” This holds true for EAA – the agent doesn’t invent new scientific principles, but it orchestrates existing tools and observations in ways unforeseen by its creators. Long-term stability, often lauded in engineering, is merely a temporary illusion; the true measure lies in a system’s capacity to adapt and reveal the hidden disasters lurking within its initial design. EAA, with its agentic system and Model Context Protocol, isn’t building automation; it’s cultivating an ecosystem.

What Lies Ahead?

The presented work, while demonstrating a functional agentic system for materials characterization, primarily illuminates the scope of what remains unknown. Automation, in this context, isn’t a destination but a protracted negotiation with inherent system opacity. Each successful execution merely refines the boundaries of predictable failure. The ‘Model Context Protocol’ offers a foothold against drift, but long-term memory, even when architected, is a selective forgetting. The true challenge isn’t building agents that do, but designing systems that gracefully accommodate the inevitability of not knowing.

Future iterations will inevitably encounter the limits of vision language models as oracles. The beamline, as a complex adaptive system, will continue to reveal edge cases that current training paradigms cannot anticipate. Monitoring, therefore, isn’t the detection of anomalies; it is the art of fearing consciously. The value lies not in preventing incidents, but in cultivating a responsiveness to revelation.

Resilience, in such systems, begins where certainty ends. The focus should shift from perfecting automation to establishing robust feedback loops, embracing the unpredictable interplay between agent and environment. The objective isn’t a self-correcting system, but one capable of evolving with its errors, a continuous process of negotiated understanding.

Original article: https://arxiv.org/pdf/2602.15294.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 09:47