Author: Denis Avetisyan

New research demonstrates how generative artificial intelligence can significantly streamline the creation of domain-driven design models, offering a pathway to faster and more efficient software development.

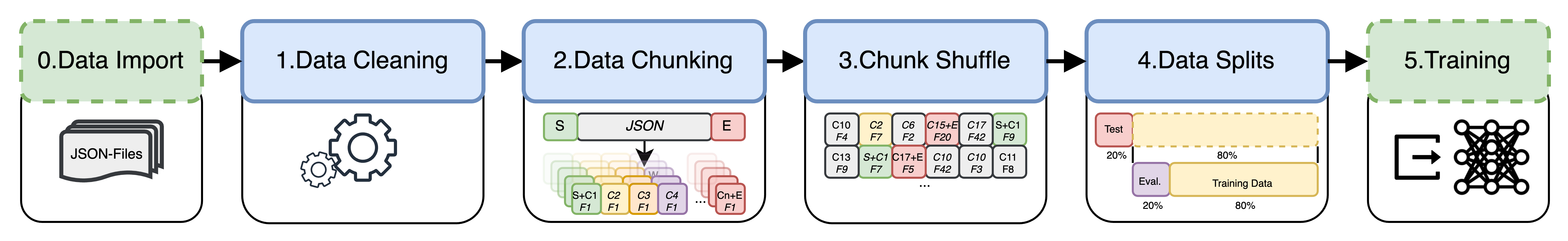

Fine-tuning an open-weight Large Language Model to generate syntactically correct JSON for Domain-Driven Design models, even with limited computational resources.

Effective software design often demands substantial manual effort in precisely modeling complex application domains, yet automating this process remains a persistent challenge. This paper, ‘Leveraging Generative AI for Enhancing Domain-Driven Software Design’, explores a partial solution by demonstrating the successful fine-tuning of an open-weight Large Language Model, Code Llama, to generate syntactically correct JSON objects representing Domain-Driven Design (DDD) models. Because the approach relies on techniques such as 4-bit quantization and Low-Rank Adaptation, it works even with limited hardware resources and significantly streamlines metamodel generation. Could this represent a paradigm shift towards more efficient, AI-driven software development methodologies?

The Inevitable Formalization: Domain Models and Generative AI

The backbone of many contemporary business operations is the Domain Model – a formalized representation of the data and relationships crucial to specific industries or functions. However, constructing and updating these models is traditionally a painstaking, manual process, requiring deep subject matter expertise and meticulous attention to detail. This reliance on human effort introduces significant bottlenecks and the potential for errors, as even minor inconsistencies can ripple through entire systems. Maintaining accuracy becomes increasingly challenging as business requirements evolve, necessitating constant revisions and validation. Consequently, organizations often grapple with outdated or flawed Domain Models, hindering agility and increasing the risk of costly mistakes, impacting everything from data integration to automated decision-making.

The creation of Domain Models, essential for structuring complex business data, is increasingly being reshaped by generative AI. Traditionally built through a manual and often error-prone process, these models can now be drafted automatically from stated business requirements. This automation can reduce both development time and associated costs, allowing organizations to adapt more quickly to changing needs. Crucially, a fine-tuned model can produce syntactically correct JSON objects (JSON being a common data format) in most cases when provided with clear and concise prompts, which reduces the need for debugging. Syntactic correctness is not guaranteed, however, so generated output still needs to be validated. This capability promises to streamline workflows and improve efficiency in data-driven processes.

Constructing Structure: Leveraging Large Language Models for JSON Generation

Code Llama establishes a robust basis for JSON generation due to its pre-training on a massive dataset of code, including substantial volumes of JSON. JSON (JavaScript Object Notation) is a lightweight, text-based format commonly used for data interchange between applications and systems. Its widespread adoption stems from its readability, ease of parsing, and compatibility with numerous programming languages. Code Llama’s architecture, specifically its ability to understand and generate code structures, directly translates to effective JSON creation, allowing it to produce syntactically correct and logically structured JSON outputs based on provided prompts or schemas. This capability is valuable for applications requiring dynamic data generation, API responses, or configuration file creation.

Hugging Face Transformers provides a comprehensive ecosystem for utilizing and fine-tuning large language models for JSON generation. This includes pre-trained model weights, readily available architectures like those based on the Transformer model, and tools for tokenization and decoding. The library abstracts away the complexities of model implementation, offering a Python-based API for tasks such as loading pre-trained models, defining training pipelines, and performing inference. Furthermore, it supports distributed training across multiple GPUs or TPUs, facilitating the efficient training of models on large datasets. The infrastructure also includes integrations with popular deep learning frameworks like PyTorch and TensorFlow, providing flexibility for developers and researchers.

Large language models generate JSON through Next Token Prediction, a probabilistic process where the model calculates the likelihood of each possible token following a given sequence. The model is trained on extensive datasets of text and code, including JSON examples, allowing it to learn the patterns and syntax of the format. During generation, the model begins with an initial prompt or seed, then iteratively predicts the most probable next token – be it a brace, bracket, colon, string, number, or boolean – based on the preceding sequence. This process continues until a designated end-of-sequence token is predicted, or a predefined length limit is reached, resulting in a complete JSON structure. The accuracy of JSON generation is directly correlated to the model’s training data and its ability to accurately assess token probabilities within the context of valid JSON syntax.
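The decoding loop described above can be illustrated with a toy example. This is a minimal sketch, not the paper's setup: `TOY_MODEL` is a hypothetical hand-written table that conditions only on the previous token, whereas a real model such as Code Llama conditions on the entire prefix and scores a vocabulary of tens of thousands of tokens.

```python
import json

# Hypothetical next-token distributions, keyed by the previous token only.
TOY_MODEL = {
    "<s>": {"{": 0.9, "[": 0.1},
    "{": {'"name"': 0.8, "}": 0.2},
    '"name"': {":": 1.0},
    ":": {'"Order"': 0.7, '"Customer"': 0.3},
    '"Order"': {"}": 0.6, ",": 0.4},
    '"Customer"': {"}": 1.0},
    "}": {"</s>": 1.0},
}


def greedy_decode(model, start="<s>", eos="</s>", max_tokens=16):
    """Repeatedly pick the most probable next token until EOS or the length limit."""
    tokens = []
    prev = start
    for _ in range(max_tokens):
        distribution = model[prev]
        next_token = max(distribution, key=distribution.get)
        if next_token == eos:
            break
        tokens.append(next_token)
        prev = next_token
    return "".join(tokens)


generated = greedy_decode(TOY_MODEL)
parsed = json.loads(generated)  # raises json.JSONDecodeError if the output is malformed
print(generated, parsed)
```

Greedy selection is used here because it matches the "most probable next token" description; in practice, sampling strategies such as temperature or top-p sampling are also common.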

Adaptive Efficiency: Parameter-Efficient Fine-Tuning Techniques

Low Rank Adaptation (LoRA) adapts pre-trained models like Code Llama by freezing the original weights and adding trainable low-rank matrices alongside selected weight matrices, typically the attention projections in each layer. This approach significantly reduces the number of trainable parameters, often by over 100x, compared to full fine-tuning. Specifically, LoRA represents each weight update as the product of two much smaller matrices, reducing the parameter count while retaining enough representational capacity for the target task. For JSON generation, this translates to lower computational and memory costs during training, small adapter checkpoints, and faster adaptation to the specific nuances of the JSON schema without requiring extensive resources. Inference cost is essentially unchanged, because the adapters can be merged back into the base weights. On many tasks the technique achieves performance comparable to full fine-tuning while drastically reducing the required computational power and storage.
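To make the parameter savings concrete, here is a small sketch of the arithmetic. The layer size (4096 x 4096) and rank (8) are illustrative assumptions, not values reported in the paper. For a frozen weight matrix of shape `d_out x d_in`, LoRA trains two matrices `B` (`d_out x r`) and `A` (`r x d_in`) and adds their product to the frozen weights.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (parameters in the full matrix, trainable parameters in the LoRA pair)."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


def matmul(a, b):
    """Plain-Python matrix product, sufficient for a tiny demonstration."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


# Illustrative sizes only (assumed, not from the paper).
full, lora = lora_param_counts(d_out=4096, d_in=4096, rank=8)
reduction = full / lora
print(f"full: {full:,}  lora: {lora:,}  reduction: {reduction:.0f}x")

# A rank-1 update on a 3x3 layer. B is conventionally initialized to zero so that
# the adapted model starts out identical to the frozen base model.
B = [[0.0], [0.0], [0.0]]   # 3 x 1
A = [[0.1, -0.2, 0.3]]      # 1 x 3
delta_w = matmul(B, A)      # 3 x 3 update that is added to the frozen weights
```

With these assumed sizes the adapter holds 65,536 trainable values against 16,777,216 in the full matrix, a 256x reduction for that one layer.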

Quantized Low Rank Adaptation (QLoRA) builds upon the benefits of Low Rank Adaptation (LoRA) by incorporating 4-bit quantization. This process reduces the precision of the frozen base-model weights from the typical 16- or 32-bit floating-point representation to a 4-bit data type (QLoRA uses 4-bit NormalFloat, NF4), while the LoRA adapter weights remain in higher precision. The resulting decrease in weight storage is substantial, roughly 4x relative to 16-bit weights, with only a small impact on performance metrics. The main benefit is a lower memory requirement: the quantized weights are dequantized on the fly for computation, so quantization does not by itself increase throughput. This optimization enables fine-tuning and running large language models, such as Code Llama, on systems with limited resources, such as machines with smaller GPU memory.
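The memory effect can be estimated with simple arithmetic, and the idea of storing weights as 4-bit codes can be shown with a toy quantizer. Both parts are sketches under stated assumptions: the 7-billion-parameter size is an illustrative choice (the source does not state which model size was used), and the quantizer below is a simplified signed scheme with one scale per block, not the NormalFloat (NF4) data type that QLoRA implementations use.

```python
def weight_memory_gib(num_params, bits_per_weight):
    """Approximate storage for the weights alone, ignoring scales and activations."""
    return num_params * bits_per_weight / 8 / 2**30


NUM_PARAMS = 7_000_000_000  # assumed model size, for illustration only
fp16_gib = weight_memory_gib(NUM_PARAMS, 16)
q4_gib = weight_memory_gib(NUM_PARAMS, 4)
print(f"16-bit: {fp16_gib:.1f} GiB, 4-bit: {q4_gib:.1f} GiB")


def quantize_block(values, bits=4):
    """Map a block of floats to signed integer codes plus one shared scale."""
    levels = 2 ** (bits - 1) - 1          # 7 for signed 4-bit codes
    scale = max(abs(v) for v in values) / levels
    if scale == 0:
        scale = 1.0                        # all-zero block: any scale works
    codes = [round(v / scale) for v in values]
    return codes, scale


def dequantize_block(codes, scale):
    """Recover approximate floats from the codes and the block scale."""
    return [c * scale for c in codes]


block = [0.7, -0.32, 0.11, 0.0]
codes, scale = quantize_block(block)
restored = dequantize_block(codes, scale)
max_error = max(abs(v - r) for v, r in zip(block, restored))
```

The rounding error per weight is bounded by half the block scale, which is why small blocks with their own scales keep the quality loss low.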

Parameter-efficient fine-tuning techniques, such as Low Rank Adaptation (LoRA) and Quantized LoRA, are essential for working with models in environments with limited computational resources, including devices with restricted memory and processing power. Full fine-tuning of large language models requires substantial resources, making it impractical for many applications. These techniques reduce the number of trainable parameters (and thus the memory footprint and computational cost) while maintaining a significant portion of the model’s performance. This reduction in resource demand enables use on edge devices, mobile platforms, and in scenarios where minimizing latency and energy consumption is critical, broadening the accessibility and applicability of Code Llama for tasks like JSON generation.

Decoding Performance: Evaluation and Hyperparameter Optimization

Evaluating the fidelity of generated JSON outputs requires quantifiable metrics, and the BLEU score serves as a critical indicator of similarity between the system’s creations and the anticipated, correct structures. During the hyperparameter tuning phase, the model achieved an approximate BLEU score of 0.062, suggesting a relatively low degree of overlap with the expected outputs. While seemingly modest, this score provides a baseline for iterative improvement; each adjustment to the model’s parameters aims to incrementally increase this value, bringing the generated JSON closer to the desired standard and demonstrating the effectiveness of the optimization process. This metric, though imperfect, allows for a data-driven approach to enhancing the quality and reliability of the JSON generation system.
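For readers unfamiliar with the metric, the following is a simplified sentence-level BLEU sketch: clipped n-gram precision for n = 1 to 4, combined by geometric mean and multiplied by a brevity penalty. It assumes whitespace tokenization and no smoothing; the paper's exact tokenization and BLEU implementation are not described in this summary, so scores from this sketch are not comparable to the reported 0.062.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all contiguous n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Simplified BLEU for one candidate and one reference string."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        ref_counts = ngrams(ref, n)
        # Clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precisions.append(math.log(overlap / total))
    # The brevity penalty discourages candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


reference = '{ "name" : "Order" , "type" : "Aggregate" }'
candidate = '{ "name" : "Order" , "type" : "Entity" }'
score = bleu(candidate, reference)
print(round(score, 3))
```

Because BLEU only measures surface n-gram overlap, a structurally valid JSON object with different key order or naming can score low, which is one reason the metric is described as imperfect.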

Achieving optimal performance from any generative model hinges on careful hyperparameter tuning, a process which systematically explores the configuration space to identify the settings that yield the best results. The work uses a Random Forest Regressor not to perform the tuning itself, but to analyze its results: the regressor is fit to predict the evaluation metrics from the hyperparameter settings of the completed trials. Permutation Importance Analysis then reveals which hyperparameters exert the most substantial influence. By randomly shuffling the values of one hyperparameter at a time and observing how much the regressor’s predictive accuracy degrades, researchers can quantify that hyperparameter’s relative importance. This insight goes beyond finding good values; it offers a deeper understanding of the model’s behavior, guiding future development and potentially simplifying the tuning process by focusing on the most critical parameters.
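The shuffling procedure can be sketched in a few lines. To keep the example dependency-free, a hand-written function `surrogate` stands in for the fitted Random Forest Regressor; the hyperparameter names and the synthetic trial data are hypothetical. With scikit-learn, one would instead fit `sklearn.ensemble.RandomForestRegressor` on the trial results and call `sklearn.inspection.permutation_importance`.

```python
import random


def mse(model, rows, targets):
    """Mean squared error of the model's predictions."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=5, seed=0):
    """Average increase in MSE when one column at a time is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mse(model, permuted, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical tuning trials: three normalized hyperparameters per trial.
names = ["learning_rate", "lora_rank", "dropout"]
data_rng = random.Random(42)
rows = [[data_rng.random() for _ in names] for _ in range(50)]


def surrogate(row):
    """Stand-in for a fitted regressor: strong, weak, and no dependence."""
    return 10 * row[0] + 1 * row[1]


targets = [surrogate(r) for r in rows]
importance = dict(zip(names, permutation_importance(surrogate, rows, targets)))
print(importance)
```

A hyperparameter the surrogate ignores gets an importance of zero, because shuffling it cannot change any prediction.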

Effective data pre-processing proved foundational to the success of the JSON generation model. Initial domain models often contained inconsistencies or formatting errors that hindered training and negatively impacted output quality; therefore, a robust pre-processing pipeline was implemented to cleanse and standardize the input data. This involved rigorous validation, error correction, and normalization procedures, ultimately resulting in a significant improvement in parsing accuracy. On the output side, post-processing succeeded for 81% of all generated samples, indicating that the cleaned data enabled the model to reliably interpret and utilize the domain information and establishing a strong basis for subsequent hyperparameter optimization and performance evaluation.
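A success rate of this kind can be measured by attempting to parse each generated sample. The sketch below is one possible post-processing step and is an assumption, not the paper's pipeline: it takes the text between the first `{` and the last `}` and checks whether it parses as JSON. The sample strings are made up for illustration.

```python
import json


def extract_json(text):
    """Return the parsed object between the first '{' and last '}', or None."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        return json.loads(text[start:end + 1])
    except json.JSONDecodeError:
        return None


# Hypothetical model outputs: with surrounding text, truncated, with a stray
# end-of-sequence marker, and with no JSON at all.
samples = [
    'Here is the model: {"name": "Order", "entities": []}',
    '{"name": "Customer", "entities": [',
    '{"name": "Invoice"} </s>',
    "no json here",
]

parsed = [extract_json(s) for s in samples]
success_rate = sum(p is not None for p in parsed) / len(samples)
print(f"{success_rate:.0%} of samples parsed")
```

Parsing only establishes syntactic validity; checking that the object conforms to the expected DDD metamodel would require an additional schema validation step.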

![Permutation importance calculated using a Random Forest Regressor [18] reveals the relative influence of each parameter on multiple evaluation metrics.](https://arxiv.org/html/2601.20909v1/pictures/ParameterImportanceforMultipleObjectives_800_400_5.png)

The pursuit of automated model generation, as demonstrated in this work, echoes a fundamental truth about software architecture. Systems, even those meticulously crafted with Domain-Driven Design principles, inevitably succumb to the pressures of evolving requirements. As Robert Tarjan once observed, “The most effective algorithms are those that adapt to the data.” This sentiment resonates deeply with the fine-tuning of Code Llama; the model isn’t merely generating JSON, it’s adapting to the specific constraints and nuances of DDD modeling. The paper’s success with limited resources further underscores the value of elegant adaptation: achieving substantial results through resourceful optimization, rather than brute force computation. This suggests that the future of software design lies not in eliminating the need for human oversight, but in augmenting it with intelligent, adaptive tools.

What Lies Ahead?

The successful application of fine-tuned Large Language Models to the generation of Domain-Driven Design artifacts is not a triumph over complexity, but a temporary accommodation. Syntactic correctness, as checked by parsing, and surface similarity, as measured by BLEU scores, merely delay the inevitable cascade toward semantic erosion. Technical debt, in this context, isn’t simply accrued through hasty implementation; it’s inherent to the translation between evolving understanding and static code. Each generated JSON object represents a fleeting moment of harmony between intention and representation, a fragile state quickly subject to the pressures of changing requirements.

The constraints of limited hardware, while pragmatically addressed through techniques like Low-Rank Adaptation, are symptomatic of a larger truth: scaling computational power does not resolve fundamental limitations. It only postpones them. Future work must move beyond evaluating the generation of models and address the persistent challenge of maintaining their integrity over time. A focus on automated refinement, meaning systems capable of detecting and correcting semantic drift, will prove far more valuable than simply increasing the volume of generated code.

Uptime, in the realm of software, is a rare phase of temporal harmony. The true measure of progress lies not in achieving it, but in gracefully navigating the inevitable decline. The next phase of research will not be about building better generators, but about constructing systems resilient enough to accept, and even anticipate, their own obsolescence.

Original article: https://arxiv.org/pdf/2601.20909.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-31 22:49