Author: Denis Avetisyan

A new framework harnesses the power of advanced artificial intelligence to create dynamic and personalized learning experiences for students.

This review details a system integrating large language models with adaptive feedback mechanisms to enhance collaborative learning platforms and address shortcomings in existing AI moderation.

Existing collaborative learning platforms often struggle to balance dynamic discussion moderation with personalized feedback and equitable participation. This challenge is addressed in ‘Dynamic Framework for Collaborative Learning: Leveraging Advanced LLM with Adaptive Feedback Mechanisms’, which introduces a novel system integrating large language models, like GPT-4o, and robust feedback mechanisms to facilitate adaptive, inclusive educational experiences. The framework demonstrably improves student collaboration and comprehension through dynamic adjustments to prompts and discussion flows, offering a scalable solution for diverse learning environments. Could this approach pave the way for a new generation of AI-driven educational tools that truly personalize learning at scale?

The Inevitable Drift of Collaborative Systems

Conventional collaborative learning environments frequently encounter challenges in ensuring all students contribute equitably. Research indicates that established group work often defaults to participation by a limited number of individuals, while others remain passive or are actively excluded from meaningful engagement. This disparity arises from various factors, including differences in confidence, communication styles, and pre-existing social dynamics within the group. Consequently, marginalized students may experience reduced learning gains and diminished opportunities to develop essential teamwork skills, ultimately reinforcing inequalities rather than fostering a truly inclusive educational experience. Addressing this requires a shift from simply assigning group tasks to implementing strategies that specifically promote balanced contribution and actively counteract patterns of unequal participation.

Simply assembling students into groups does not guarantee productive collaboration; a truly effective system necessitates proactive design for inclusivity. Research demonstrates that without intentional scaffolding, dominant voices can easily overshadow quieter participants, replicating existing power imbalances and diminishing the benefits of collective learning. Successful collaborative environments require clearly defined roles, structured communication protocols, and mechanisms for addressing disruptive behaviors – not as punitive measures, but as opportunities for constructive feedback and skill-building. These systems must also account for diverse learning styles and provide personalized support to ensure every student feels empowered to contribute, fostering a dynamic where all voices are not only heard but actively valued and integrated into the learning process.

Collaborative learning, while promising, can inadvertently widen achievement gaps if not carefully managed. Research demonstrates that simply placing students in groups does not guarantee equitable participation; instead, dominant voices often overshadow those less assertive, and pre-existing inequalities can become entrenched. Without consistent, proactive moderation to address disruptive behaviors and ensure all contributions are valued, collaborative tasks risk reinforcing existing power dynamics. Furthermore, personalized support, tailored to individual learning needs and communication styles, is crucial to help every student confidently engage and benefit from the shared learning experience. Failing to provide this scaffolding can ultimately hinder learning outcomes for marginalized students, negating the potential benefits of collaboration and creating environments where some are consistently left behind.

The Illusion of Control: LLM-Driven Adaptation

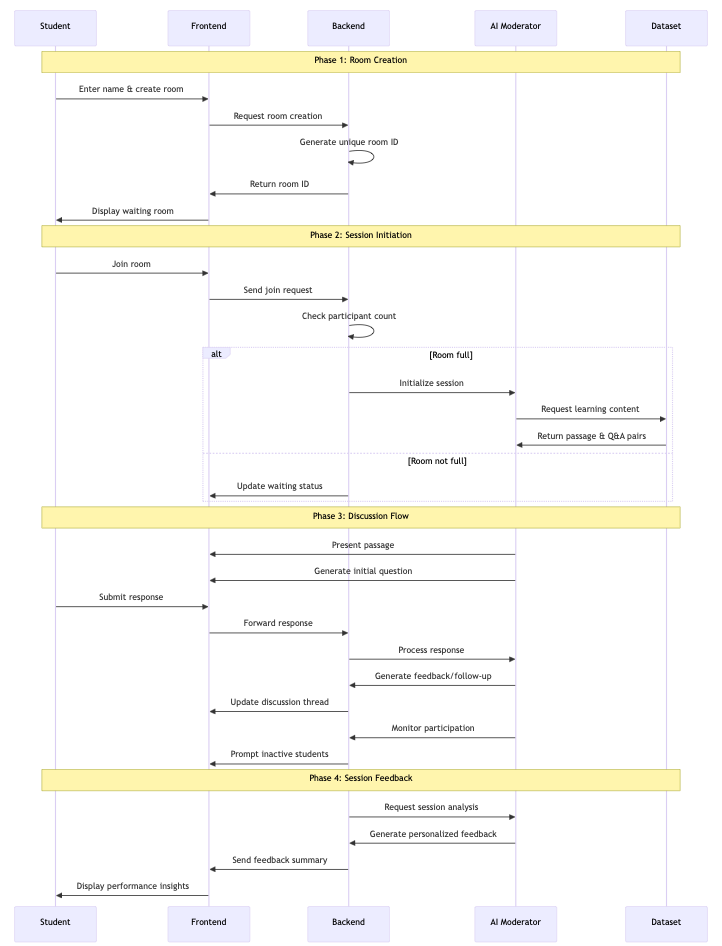

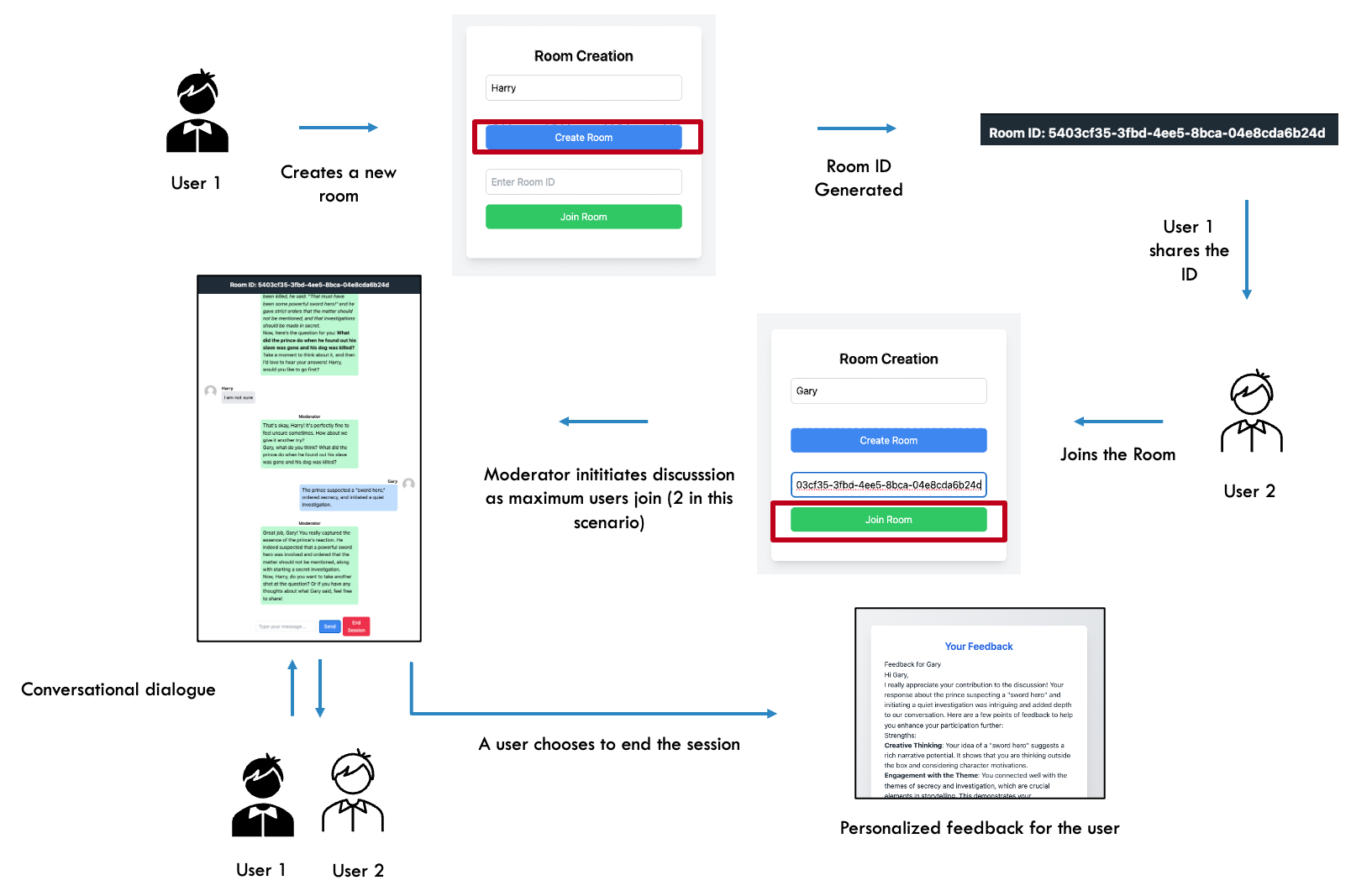

The Collaborative Learning Platform leverages Large Language Model (LLM) integration to dynamically adjust learning pathways and content presentation for individual students. This personalization is achieved through analysis of student performance data, interaction patterns, and identified learning preferences. LLMs facilitate the curation of relevant resources, the generation of tailored feedback, and the adaptation of difficulty levels within learning activities. Furthermore, the system employs LLMs to recommend specific collaborative groups or peer-to-peer learning opportunities based on complementary skill sets and learning goals, maximizing the effectiveness of group work and fostering a more engaging learning environment.

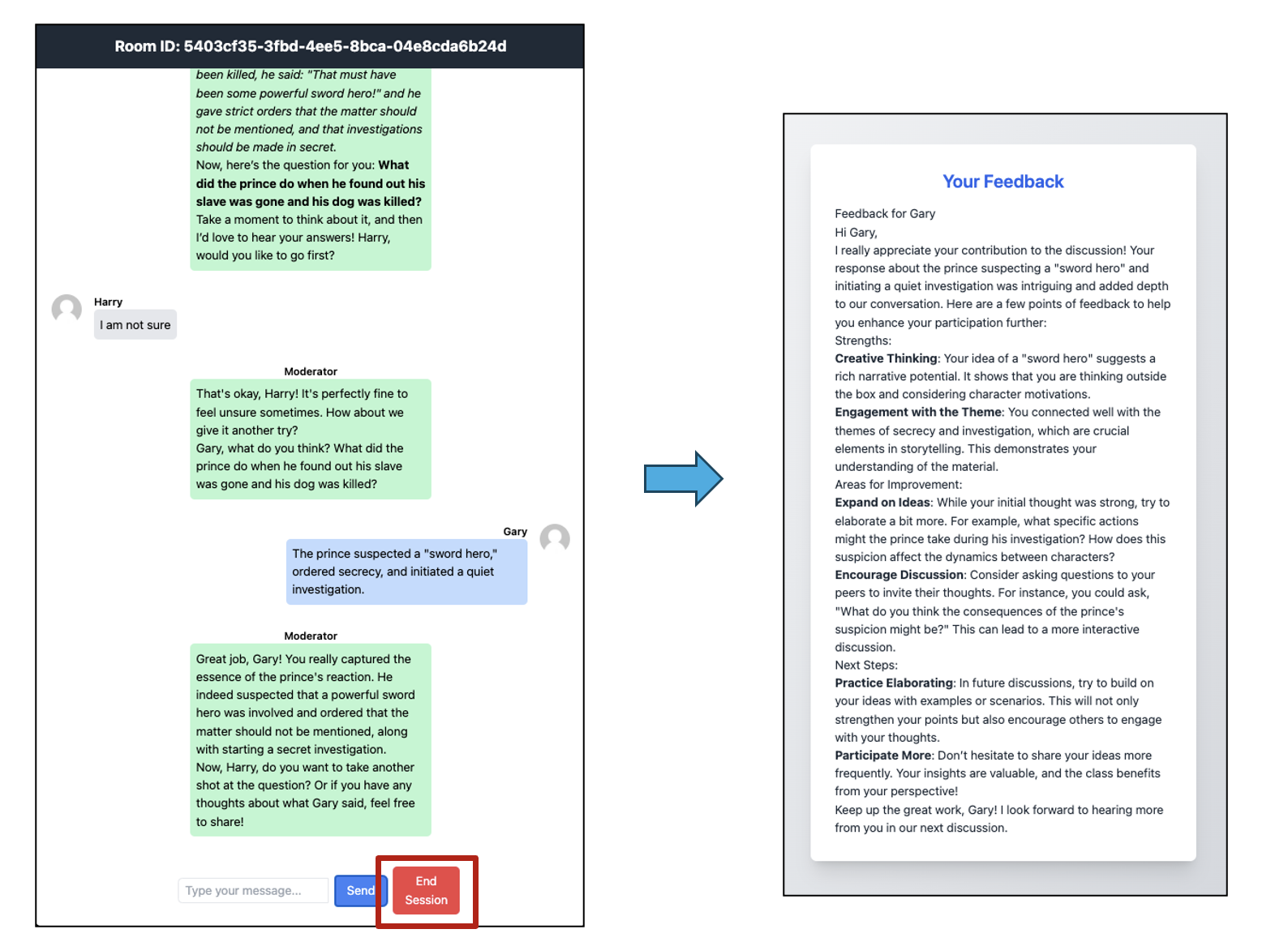

The Collaborative Learning Platform employs adaptive moderation utilizing the GPT-4o language model to maintain a constructive learning environment. This system proactively identifies potentially disruptive behaviors – including but not limited to inappropriate language, personal attacks, and off-topic contributions – through real-time analysis of text-based interactions. Upon detection, GPT-4o initiates pre-defined actions, ranging from issuing automated warnings to temporarily muting users or flagging content for human review by platform administrators. This automated intervention is designed to minimize disruption, encourage positive engagement, and ensure all participants can contribute effectively without experiencing harassment or unproductive behavior, ultimately fostering a safer and more focused collaborative experience.
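The escalation from warning to muting to human review can be pictured with a small sketch. Everything here is an assumption made for illustration: the label names, the three-strike threshold, and the `keyword_classifier` function, which is a toy stand-in for the model call. The paper's actual prompts and policy are not reproduced.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict


class Action(Enum):
    NONE = "none"
    WARN = "warn"
    MUTE = "mute"
    FLAG_FOR_REVIEW = "flag_for_review"


# Hypothetical classifier type: maps a message to a label such as
# "ok", "off_topic", or "personal_attack".
Classifier = Callable[[str], str]


@dataclass
class Moderator:
    classify: Classifier
    mute_after: int = 3  # assumed threshold, not taken from the source
    strikes: Dict[str, int] = field(default_factory=dict)

    def handle(self, user: str, message: str) -> Action:
        label = self.classify(message)
        if label == "ok":
            return Action.NONE
        if label == "personal_attack":
            # Assumed policy: attacks always go to a human reviewer.
            return Action.FLAG_FOR_REVIEW
        # Any other violation adds a strike; repeated strikes lead to a mute.
        count = self.strikes.get(user, 0) + 1
        self.strikes[user] = count
        return Action.WARN if count < self.mute_after else Action.MUTE


def keyword_classifier(message: str) -> str:
    """Toy stand-in for the LLM call; a real system would query a model."""
    text = message.lower()
    if "idiot" in text:
        return "personal_attack"
    if "lunch" in text:
        return "off_topic"
    return "ok"
```

Keeping the classifier behind a plain callable means the escalation policy can be tested without any model access, and the model can be swapped without touching the policy.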

The integration of Large Language Models (LLMs) with Retrieval-Augmented Generation (RAG) significantly improves content delivery within the platform. RAG addresses the limitations of LLMs – namely, potential inaccuracies and knowledge cut-off dates – by grounding responses in a verified, up-to-date knowledge base. Specifically, when a student query is received, the system first retrieves relevant documents from this knowledge base. These retrieved documents are then provided as context to the LLM, which uses this information to formulate a response. This process ensures that the content delivered to students is not only coherent and contextually appropriate but also factually accurate and reflects the most current information available, exceeding the capabilities of a standalone LLM.
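The retrieve-then-generate flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the token-overlap scorer stands in for whatever retriever the platform uses (most production systems use embedding search), the prompt wording is invented, and `llm` is any callable that takes a prompt string and returns text.

```python
import re
from collections import Counter
from typing import Callable, List


def tokenize(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return up to k documents sharing the most tokens with the query."""
    q = tokenize(query)
    scored = [
        (sum((q & tokenize(doc)).values()), index, doc)
        for index, doc in enumerate(documents)
    ]
    # Highest overlap first; ties keep the original document order.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [doc for score, _, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: List[str]) -> str:
    """Place the retrieved passages ahead of the student's question."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}"
    )


def answer(query: str, documents: List[str],
           llm: Callable[[str], str], k: int = 2) -> str:
    """Retrieve first, then hand the grounded prompt to the model."""
    return llm(build_prompt(query, retrieve(query, documents, k)))
```

Passing `llm=lambda prompt: prompt` returns the assembled prompt itself, which is a convenient way to inspect what context the model would see.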

Stress Testing the Inevitable

Student Persona Simulation was utilized as a testing methodology to evaluate the system’s robustness against challenging user interactions. This involved leveraging the DeepSeek V3 large language model to generate simulated student behaviors, including potentially disruptive or complex queries and statements. The resulting simulated interactions were then processed by the system, allowing for controlled and repeatable testing of its ability to maintain stability and functionality under stress. This approach facilitated the identification of potential failure points and informed optimization efforts prior to live deployment, ensuring the system could effectively handle a wide range of user inputs.
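A persona-driven test harness of this kind might look like the sketch below. The persona names and canned messages are hypothetical; in the study the simulated behavior came from DeepSeek V3, whereas here fixed strings and a seeded random generator stand in so that runs are repeatable without any model access.

```python
import random
from typing import Callable, Dict, List

# Hypothetical persona templates; a real run would have an LLM generate these.
PERSONAS: Dict[str, List[str]] = {
    "dominant": [
        "Let me answer every question myself.",
        "No, my solution is the only correct one.",
    ],
    "disengaged": ["idk", "whatever the group decides"],
    "disruptive": [
        "This assignment is pointless.",
        "Can we talk about the game last night?",
    ],
}


def simulate(system: Callable[[str], str], turns: int,
             seed: int = 0) -> List[Dict[str, str]]:
    """Feed persona messages to the system under test and log each exchange."""
    rng = random.Random(seed)  # fixed seed makes the run reproducible
    names = sorted(PERSONAS)
    transcript = []
    for _ in range(turns):
        persona = rng.choice(names)
        message = rng.choice(PERSONAS[persona])
        transcript.append({
            "persona": persona,
            "message": message,
            "response": system(message),
        })
    return transcript
```

Because the transcript records which persona produced each message, failures can be grouped by persona afterwards to see which behaviors the system handles worst.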

System latency was measured to assess the real-time responsiveness of the LLM moderator, a critical factor for timely and effective intervention in potentially harmful interactions. Results indicate a mean response latency of 1.84 seconds. This metric represents the average time elapsed between the detection of a problematic input and the moderator’s response, demonstrating the system’s capacity to operate within acceptable timeframes for real-time applications. Consistent low latency is essential for preventing the escalation of harmful content and maintaining a safe user environment.
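A mean latency figure like this one is typically obtained by timing each call and averaging. The sketch below shows one way to do that; `handler` is a placeholder for the moderator call, and the 95th percentile is an added illustration, since the source reports only the mean.

```python
import math
import statistics
import time
from typing import Callable, Dict, Iterable, List


def measure_latency(handler: Callable[[str], object],
                    messages: Iterable[str]) -> List[float]:
    """Time each handler call in seconds using a monotonic clock."""
    samples = []
    for message in messages:
        start = time.perf_counter()
        handler(message)
        samples.append(time.perf_counter() - start)
    return samples


def summarize(samples: List[float]) -> Dict[str, float]:
    """Report the mean, the nearest-rank 95th percentile, and the maximum."""
    if not samples:
        raise ValueError("no latency samples to summarize")
    ordered = sorted(samples)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "mean": statistics.mean(ordered),
        "p95": ordered[p95_index],
        "max": ordered[-1],
    }
```

A mean alone can hide slow outliers, which is why a tail percentile is often reported alongside it for real-time systems.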

Scalability testing of the framework demonstrated the platform’s ability to maintain performance levels under concurrent user load and complex interaction scenarios. Specifically, the system achieved 91% accuracy in stress detection following a user-specific fine-tuning and calibration process. This calibration involved adapting the system’s parameters to individual user interaction patterns, which significantly improved its ability to identify and flag potentially harmful or disruptive behavior. The testing methodology included simulating a high volume of concurrent users engaging in varied interaction types to assess system stability and response times under pressure.

The Mirage of Personalized Learning

The convergence of dynamic group formation and large language model (LLM)-driven adaptation represents a significant leap toward genuinely personalized learning. This system moves beyond static groupings, continuously reconfiguring student teams based on evolving skill levels, learning styles, and collaborative dynamics. Simultaneously, the LLM analyzes individual student responses and tailors the complexity and presentation of educational materials accordingly, ensuring each learner receives content optimally suited to their needs. This dual mechanism fosters an environment where students are consistently challenged at their ‘zone of proximal development,’ maximizing engagement and knowledge retention, while also promoting peer learning through optimally balanced group interactions – effectively creating a continuously adapting, individualized curriculum within a collaborative setting.
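One simple way to picture balanced group formation is a snake draft over a single skill score. This is a swapped-in textbook technique chosen for illustration, not the paper's algorithm, and the scalar `skill` score is an assumption; the framework described above also weighs learning styles and collaborative dynamics.

```python
from typing import Dict, List


def form_groups(skill: Dict[str, float], n_groups: int) -> List[List[str]]:
    """Distribute students across groups so skill levels are mixed.

    Students are ranked by score, then dealt out in alternating direction
    (a snake draft) so that no group collects all the strongest students.
    """
    if n_groups < 1:
        raise ValueError("n_groups must be at least 1")
    # Highest score first; names break ties so the result is deterministic.
    ranked = sorted(skill, key=lambda name: (-skill[name], name))
    groups: List[List[str]] = [[] for _ in range(n_groups)]
    for i, student in enumerate(ranked):
        round_number, position = divmod(i, n_groups)
        # Reverse the dealing direction on every other round.
        index = position if round_number % 2 == 0 else n_groups - 1 - position
        groups[index].append(student)
    return groups
```

Re-running the function whenever scores are updated gives a crude form of the continuous regrouping the article describes.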

The system actively fosters inclusive learning environments by moving beyond simply acknowledging participation imbalances to proactively rectifying them. Through continuous monitoring of group interactions, the framework identifies when certain students consistently dominate discussions or when others remain disengaged. It then dynamically adjusts group compositions and prompts to redistribute speaking opportunities, ensuring quieter voices are heard and preventing any single student from monopolizing the conversation. This isn’t merely about equal time speaking, but about creating conditions where all students feel comfortable contributing, valuing diverse perspectives, and building confidence in their own ideas – ultimately leading to a more enriching and equitable educational experience for everyone involved.
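Detecting dominance and disengagement can be as simple as comparing each student's share of turns with an equal split. The sketch below assumes a list of speaker names, one per turn, and uses illustrative thresholds (twice and half the fair share) that do not come from the source.

```python
from collections import Counter
from typing import Dict, List, Tuple


def participation_shares(speakers: List[str],
                         roster: List[str]) -> Dict[str, float]:
    """Fraction of turns taken by each student on the roster."""
    counts = Counter(speakers)
    total = sum(counts[name] for name in roster)
    if total == 0:
        return {name: 0.0 for name in roster}
    return {name: counts[name] / total for name in roster}


def flag_imbalance(shares: Dict[str, float], high: float = 2.0,
                   low: float = 0.5) -> Tuple[List[str], List[str]]:
    """Return (dominant, quiet) students relative to an equal split.

    The `high` and `low` multipliers are assumed values for illustration.
    """
    if not shares:
        return [], []
    fair = 1 / len(shares)
    dominant = sorted(n for n, s in shares.items() if s > high * fair)
    quiet = sorted(n for n, s in shares.items() if s < low * fair)
    return dominant, quiet
```

The two lists could then drive the interventions described above, for example by addressing the next prompt to a student in the quiet list. Turn counts are only a proxy, since equal speaking time does not by itself mean every contribution was valued.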

Further development of this adaptive learning framework centers on accommodating a broader spectrum of learning styles, moving beyond simply identifying knowledge gaps to tailoring instructional approaches to individual preferences – visual, auditory, kinesthetic, and more. Integration with established educational platforms is also a key priority, aiming to seamlessly incorporate the system’s dynamic group formation and personalized adaptation features into existing curricula. To facilitate ongoing refinement and ensure robust performance across diverse contexts, researchers plan to leverage the FairytaleQA dataset, a rich resource of narrative-based questions, for continuous model evaluation and improvement, ultimately creating a more versatile and effective learning tool.

The pursuit of seamless collaborative learning platforms, as detailed in this framework, echoes a fundamental truth about complex systems. One anticipates inevitable imperfections, even within meticulously designed architectures. As Isaac Newton observed, “If I have seen further it is by standing on the shoulders of giants.” This sentiment applies directly to the reliance on pre-trained LLMs like GPT-4o; the system’s capabilities are built upon vast datasets and prior research, acknowledging inherent dependencies. The adaptive feedback mechanisms, though innovative, are but refinements of the foundational layers upon which they rest. It is not about creating a perfect system, but about thoughtfully building upon existing knowledge, perpetually aware that technologies change while dependencies remain.

The Seed and the Garden

This framework, presented with admirable ambition, does not so much solve the problems of collaborative learning as relocate them. The challenge isn’t building a better moderator – every refactor begins as a prayer and ends in repentance – but accepting that any system intended to nurture growth will inevitably cultivate its own wilderness. The pursuit of “inclusive” experiences, laudable as it is, suggests a belief that a perfectly balanced garden is achievable, rather than recognizing that imbalance is merely a different form of life.

Future work will not focus on refining the Large Language Model – that is merely pruning – but on understanding the emergent properties of these increasingly complex educational ecosystems. It’s a question not of what the system can do, but of what it will become. The adaptive feedback mechanisms, while promising, are still predicated on the assumption of a fixed, knowable ideal; a learner to be optimized. The true frontier lies in embracing the unpredictable, in designing for serendipity, and in acknowledging that a system’s instability isn’t a bug; it’s just growing up.

The integration of RAG, while a useful tactic, is ultimately a containment strategy. The knowledge isn’t within the system, but flows through it, a temporary dam against the inevitable flood. The next generation of these platforms will not aim to deliver education, but to facilitate its natural propagation: to become less a framework and more a fertile ground.

Original article: https://arxiv.org/pdf/2601.21344.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-01 11:58