Author: Denis Avetisyan

Researchers have developed a novel system that dynamically adjusts its computational load based on how frequently data changes, enabling more efficient and responsive artificial intelligence.

Reactive Circuits offer a probabilistic programming framework for asynchronous reasoning and continual inference in dynamic environments.

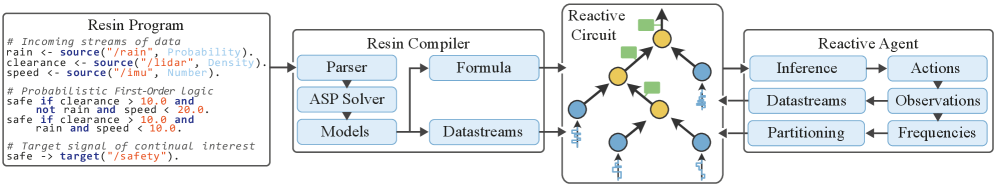

Efficiently reasoning with complex probabilistic models is often hampered by computational cost, particularly for agents operating in dynamic, real-time environments. This paper, ‘Reactive Knowledge Representation and Asynchronous Reasoning’, addresses this challenge by introducing Reactive Circuits (RCs), a novel framework for continual inference. RCs dynamically adapt computation by partitioning and memoizing sub-problems based on the estimated Frequency of Change in asynchronous input streams, enabling significant speedups over traditional methods. Could this reactive, asynchronous approach unlock new possibilities for low-latency, robust reasoning in complex, ever-changing systems?

Deconstructing the Static: The Challenge of Continual Inference

Numerous practical challenges necessitate reasoning with data that doesn’t arrive in a neat, predictable sequence. Consider applications like financial market analysis, where trade data streams in at variable rates, or sensor networks monitoring environmental conditions, each reporting observations sporadically. This asynchronous arrival, with information appearing irregularly over time, presents a fundamental difficulty for traditional inference techniques designed for static datasets. Effectively processing these data streams requires methods capable of adapting to the dynamic flow of information, rather than relying on complete, pre-defined data structures. The inherent unpredictability demands a shift towards algorithms that can incrementally update beliefs and draw conclusions as new data points become available, making real-time responsiveness and efficiency paramount.

Conventional inference techniques, designed for static datasets, face significant hurdles when applied to asynchronous data streams. These methods typically demand a complete re-evaluation of the inference process with each new data point, a computationally expensive undertaking. This constant recomputation arises because traditional approaches lack the capacity to incrementally update existing inferences based on incoming data; instead, they treat each update as a fresh start. Consequently, systems relying on such methods struggle to maintain real-time responsiveness and can experience performance bottlenecks as data velocity increases. The inability to efficiently assimilate new information into pre-existing knowledge represents a fundamental limitation in dynamic environments, impacting the scalability and practicality of many inference-driven applications.
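The difference between recomputing everything and updating incrementally can be sketched in a few lines of Python. This is a toy illustration, not the paper's implementation: the `MemoNode` class, the `simulate` helper, the two sub-formulas, and the numeric values are all assumptions made for the example. Each node caches its value and is re-evaluated only when a signal it depends on has changed.

```python
from typing import Callable, Dict, Sequence


class MemoNode:
    """A sub-formula whose value is cached until one of its input signals changes."""

    def __init__(self, inputs: Sequence[str], fn: Callable[..., float]) -> None:
        self.inputs = tuple(inputs)
        self.fn = fn
        self.evaluations = 0   # how often fn actually ran
        self._cached = 0.0
        self._dirty = True     # nothing cached yet

    def notify(self, signal: str) -> None:
        """Invalidate the cache only if the changed signal feeds this node."""
        if signal in self.inputs:
            self._dirty = True

    def value(self, signals: Dict[str, float]) -> float:
        """Return the cached value, recomputing it first if it is stale."""
        if self._dirty:
            self._cached = self.fn(*(signals[name] for name in self.inputs))
            self.evaluations += 1
            self._dirty = False
        return self._cached


def simulate(n_speed_updates: int) -> Dict[str, int]:
    """Stream updates for one volatile signal and count the work each node does."""
    signals = {"rain": 0.2, "speed": 0.5}  # illustrative values only
    slow = MemoNode(["rain"], lambda rain: 1.0 - rain)
    fast = MemoNode(["speed"], lambda speed: speed)

    def query() -> float:
        # Hypothetical top-level formula: product of the two sub-formulas.
        return slow.value(signals) * fast.value(signals)

    query()  # initial evaluation touches both nodes
    for step in range(n_speed_updates):
        signals["speed"] = (step % 10) / 10.0  # only "speed" changes
        for node in (slow, fast):
            node.notify("speed")
        query()
    return {"slow": slow.evaluations, "fast": fast.evaluations}
```

Calling `simulate(100)` evaluates the `rain` sub-formula once and the `speed` sub-formula 101 times; a method that restarts from scratch on every update would evaluate both 101 times.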

The computational burden of constantly re-evaluating inferences over streaming data significantly impacts a diverse range of critical applications. In real-time monitoring systems – such as those tracking financial markets, environmental conditions, or patient health – delays caused by inefficient inference can render data obsolete before actionable insights are gained. Similarly, distributed system management, where maintaining optimal performance requires continuous assessment of numerous interconnected components, suffers when inference processes cannot keep pace with the rate of incoming data. This limitation affects everything from cloud infrastructure scaling to network security threat detection, potentially leading to resource misallocation, service disruptions, and increased vulnerability. Effectively addressing this inefficiency is therefore paramount for building responsive and reliable systems in a world increasingly defined by dynamic, asynchronous information flows.

![Reactive Circuits optimize asynchronous reasoning by dynamically restructuring a Resin program into a directed acyclic graph (DAG) that prioritizes memorization of stable sub-formulas based on input signal volatility, as demonstrated by assigning [latex]speed[/latex], [latex]clearance[/latex], and [latex]rain[/latex] signals to different depths within the DAG.](https://arxiv.org/html/2602.05625v1/x3.png)

Beyond Static Graphs: Reactive Circuits as an Adaptive Foundation

Reactive Circuits build upon the established framework of Algebraic Circuits by introducing a dynamic, adaptive computational structure. Foundational Algebraic Circuits perform inference using a fixed graph of operations; Reactive Circuits extend this by allowing the computational graph itself to change during inference. This adaptation is achieved through mechanisms that modify the circuit’s structure (adding, removing, or weighting operations) in response to input data. The resulting architecture enables a more flexible and efficient approach to computation compared to static Algebraic Circuits, allowing resources to be allocated based on the specific characteristics of the input and the evolving state of the computation.

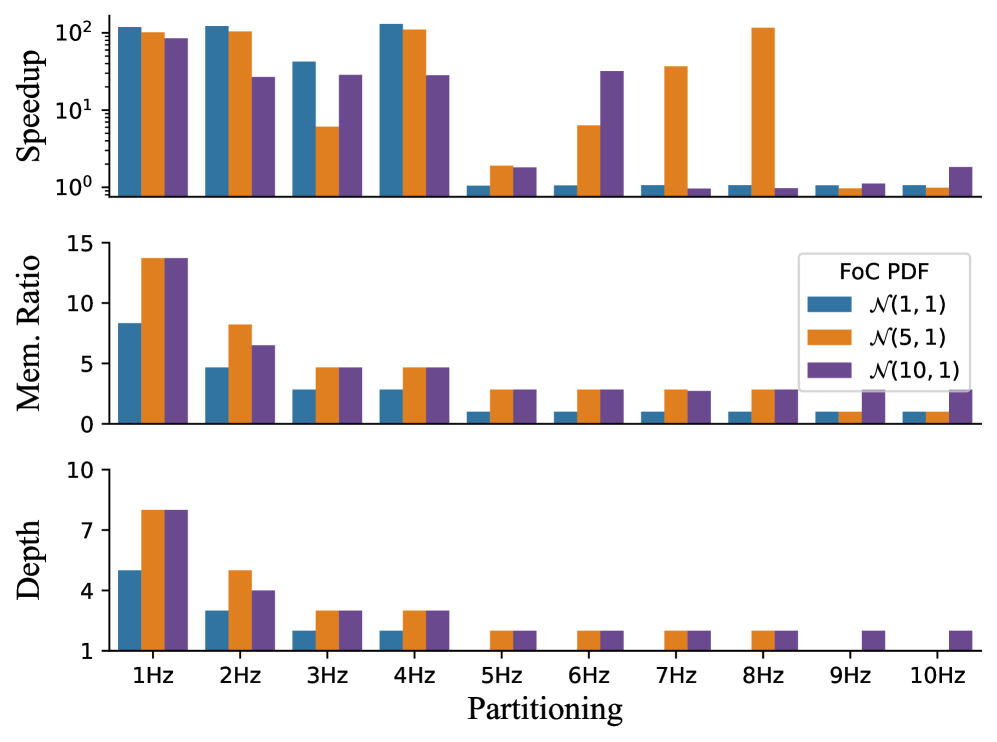

Reactive Circuits utilize a dynamic computational structure predicated on the frequency of change observed in input signals. This means the circuit’s processing pathways are not static; instead, they adapt in real-time to prioritize and allocate resources to signals exhibiting higher rates of fluctuation. Signals with low frequency of change are processed with reduced computational effort, while those undergoing rapid change receive increased attention. This selective allocation is achieved through mechanisms that effectively prune irrelevant computations and focus processing power on the most actively evolving data, directly impacting the efficiency of inference.
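One simple way to picture this frequency-based organization is to group signals by the order of magnitude of their estimated frequency of change. The sketch below is an assumption for illustration only: the `partition_by_foc` function, the decade-wide buckets, and the example rates are hypothetical and are not taken from the paper.

```python
import math
from typing import Dict, List


def partition_by_foc(foc_hz: Dict[str, float]) -> Dict[int, List[str]]:
    """Group signals into decade-wide buckets of estimated change frequency.

    Bucket k holds signals whose estimate lies in [10**k, 10**(k + 1)) Hz.
    """
    buckets: Dict[int, List[str]] = {}
    for name, hz in foc_hz.items():
        if hz <= 0:
            raise ValueError(f"frequency of change must be positive: {name}={hz}")
        bucket = math.floor(math.log10(hz))
        buckets.setdefault(bucket, []).append(name)
    # Sort buckets from slowest to fastest, and names within each bucket.
    return {k: sorted(v) for k, v in sorted(buckets.items())}


# Hypothetical estimates in Hz for three signals.
estimates = {"speed": 50.0, "clearance": 2.0, "rain": 0.02}
groups = partition_by_foc(estimates)
```

With these made-up rates, each signal lands in its own bucket, so a sub-formula that depends only on `rain` would rarely need to be revisited while `speed` is updated many times per second.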

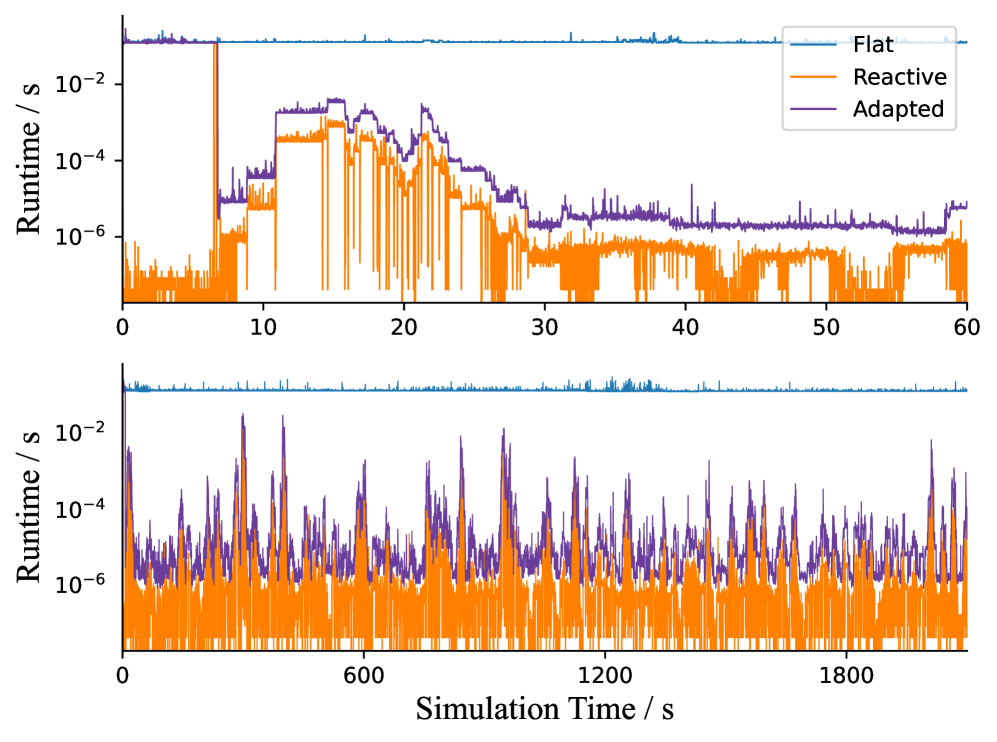

Reactive Circuits demonstrate significant performance gains over static, non-adaptive inference techniques due to their ability to prioritize computational resources. Benchmarking indicates speedups by factors of [latex]10^2[/latex] to [latex]10^4[/latex], depending on the dataset and model complexity. This acceleration is achieved by dynamically allocating more processing power to input signals exhibiting high rates of change (those most likely to contribute to meaningful updates in inference) while reducing computation for stable or redundant data. The selective allocation minimizes redundant calculations, resulting in substantial efficiency improvements and reduced latency.

![Resin achieves efficient asynchronous inference by dynamically adapting reactive circuits to memorize intermediate results [latex]\text{(green)}[/latex] and offload sub-formulas [latex]\text{(yellow)}[/latex] based on the frequency of incoming data [latex]\text{(blue)}[/latex].](https://arxiv.org/html/2602.05625v1/x4.png)

The Architecture of Change: Dynamic Restructuring via Lift and Drop Operations

Reactive circuits utilize ‘Lift’ and ‘Drop’ operations as a mechanism for online adaptation of the computational graph. The ‘Lift’ operation increases the level of abstraction within the graph by promoting signal nodes to higher levels, effectively reducing the immediate computational load at that stage. Conversely, the ‘Drop’ operation decreases abstraction by moving signal nodes downwards, increasing local computational detail and potentially enabling more focused processing. These operations are performed during runtime, allowing the circuit to dynamically restructure itself in response to changing input data or operational requirements, thereby optimizing performance without requiring a complete system redesign.

Lift and Drop operations manipulate the abstraction level within a reactive circuit by altering the position of signal nodes. The ‘Lift’ operation increases abstraction by moving a signal node to a higher level in the computational graph, effectively summarizing or generalizing the signal. Conversely, the ‘Drop’ operation reduces abstraction by moving a node downwards, exposing a more granular representation of the signal and enabling focused computation on that specific detail. This dynamic adjustment of abstraction levels allows the circuit to prioritize relevant signals and computations, optimizing performance based on changing input characteristics and computational needs.
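The mechanics of moving a signal between levels can be sketched with a toy data structure. Everything here is a simplifying assumption: the `LeveledCircuit` class, the integer level numbering (larger means higher in the graph), and the clamping at the top and bottom levels are hypothetical, and the real operations also rewrite the surrounding circuit rather than only updating a level index.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class LeveledCircuit:
    """Toy stand-in for a reactive circuit: each signal has an integer level."""

    level_of: Dict[str, int] = field(default_factory=dict)
    max_level: int = 3  # hypothetical height of the graph

    def lift(self, signal: str) -> int:
        """Move a signal one level up, stopping at the top level."""
        new_level = min(self.level_of[signal] + 1, self.max_level)
        self.level_of[signal] = new_level
        return new_level

    def drop(self, signal: str) -> int:
        """Move a signal one level down, stopping at level 0."""
        new_level = max(self.level_of[signal] - 1, 0)
        self.level_of[signal] = new_level
        return new_level

    def signals_at(self, level: int) -> List[str]:
        """List the signals currently placed at the given level."""
        return sorted(s for s, lvl in self.level_of.items() if lvl == level)


circuit = LeveledCircuit({"speed": 1, "rain": 1})
speed_level = circuit.lift("speed")  # speed moves from level 1 to level 2
rain_level = circuit.drop("rain")    # rain moves from level 1 to level 0
```

Because both operations change one signal by one level at a time, a restructuring step touches only the neighbourhood of that signal instead of rebuilding the whole graph.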

The dynamic restructuring of computational graphs via Lift and Drop operations is directly informed by the estimated Frequency of Change (FoC) of signal nodes. Algorithms, such as the Kalman Filter, are employed to continuously estimate FoC, providing a quantitative measure of signal volatility. Higher FoC values indicate a need for increased abstraction via Lift operations, while lower values suggest focused computation is achievable through Drop operations. This adaptive structural adjustment, coupled with reactive execution utilizing memoization techniques, results in substantial performance improvements by minimizing redundant computations and optimizing the graph for the current input stream. Performance gains are realized as the system effectively balances computational cost with the need for accuracy based on observed signal dynamics.
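As a rough illustration of how a Kalman filter can track a signal's frequency of change, the sketch below runs a scalar filter over the time gaps between successive updates. The modelling choices are assumptions made for this example, not details from the paper: the FoC is treated as a slowly drifting random walk, each gap `dt` is converted into a noisy measurement `1 / dt`, and the class name `FoCKalman` and its default variances are hypothetical.

```python
class FoCKalman:
    """Scalar Kalman filter tracking a signal's frequency of change in Hz."""

    def __init__(
        self,
        initial_hz: float = 1.0,
        initial_var: float = 1.0,
        process_var: float = 1e-3,
        measurement_var: float = 0.5,
    ) -> None:
        self.x = initial_hz      # current FoC estimate
        self.p = initial_var     # variance of that estimate
        self.q = process_var     # how quickly the true FoC may drift
        self.r = measurement_var # noise in a single 1/dt measurement

    def update(self, dt: float) -> float:
        """Fold in one observed gap (seconds) between updates of the signal."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        z = 1.0 / dt                       # instantaneous frequency measurement
        self.p += self.q                   # predict: random-walk state model
        gain = self.p / (self.p + self.r)  # Kalman gain
        self.x += gain * (z - self.x)      # correct the estimate toward z
        self.p *= 1.0 - gain               # shrink the variance accordingly
        return self.x


# A signal that updates every 0.1 s should settle near 10 Hz.
kf = FoCKalman()
estimate = kf.x
for _ in range(200):
    estimate = kf.update(0.1)
```

The estimate produced by such a filter could then be compared against thresholds to decide when a Lift or Drop is warranted; the smoothing keeps a single unusually short or long gap from triggering a restructuring.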

Resin: A Probabilistic Programming Language Designed for Dynamic Systems

Resin represents a novel approach to probabilistic programming, diverging from traditional static models by being fundamentally built upon Reactive Circuits. This design choice allows Resin to excel at continual inference – the process of updating beliefs and predictions as new data arrives over time. Unlike systems that require complete re-evaluation with each observation, Resin’s reactive foundation facilitates incremental computation, efficiently processing asynchronous data streams common in dynamic systems. This is achieved by representing probabilistic models as networks of interconnected reactive components, enabling the language to maintain and refine its internal state continuously, rather than operating on discrete batches of information. Consequently, Resin is particularly well-suited for applications demanding real-time responsiveness and adaptation to evolving conditions, offering a powerful framework for modeling uncertainty in dynamic environments.

Resin’s efficiency in handling dynamic systems stems from its foundation on Reactive Circuits, a computational paradigm uniquely suited to asynchronous data. Unlike traditional programming approaches that often require data to be synchronized and processed in batches, Resin adapts to the continuous, often unpredictable, flow of information common in distributed systems. This adaptive capability allows the language to process data streams as they arrive, minimizing latency and maximizing responsiveness. By inherently supporting the asynchronous nature of real-world data, Resin avoids bottlenecks associated with data serialization and deserialization, enabling scalable and performant applications even when dealing with high-volume, continuously updating information from multiple sources. This approach is particularly valuable in scenarios demanding real-time analysis and decision-making, where timely processing of incoming data is critical.

Resin’s architecture unlocks significant advancements in applications demanding swift, adaptive responses to incoming data, notably real-time anomaly detection and dynamic resource allocation. By framing these challenges as probabilistic inference problems, Resin leverages the efficiency of Reactive Circuits to continually update beliefs about system states. This allows for the rapid identification of unusual patterns – anomalies – in data streams, triggering timely interventions. Simultaneously, the system dynamically adjusts resource allocation based on probabilistic predictions of future demand, optimizing performance and minimizing waste. Crucially, Resin’s distributed design ensures that these capabilities scale effectively with increasing data volume and system complexity, providing a robust solution for managing continually evolving dynamic systems.

The pursuit of Reactive Circuits embodies a spirit of challenging established computational norms. This work doesn’t simply accept the premise of static computation; it actively probes its limitations by introducing a system that alters its behavior based on incoming data. It’s a deliberate dismantling of the ‘always-on’ approach, questioning the necessity of continuous processing. As a remark often attributed to Carl Friedrich Gauss puts it, “If other people would think differently, then things would be different.” This paper echoes that sentiment; it operates on the assumption that current methods can be improved, and systematically explores an alternative where computation isn’t constant, but reactive – adapting to the frequency of change and prioritizing efficiency in dynamic environments. The very structure of RCs, algebraic circuits tuned to signal volatility, is an exercise in controlled disruption, a testament to the power of questioning fundamental assumptions.

What Lies Ahead?

The introduction of Reactive Circuits suggests a shift – or perhaps, a deliberate disruption – of the conventional wisdom surrounding continual inference. The system doesn’t merely process change; it anticipates it, structurally reconfiguring itself based on signal volatility. One wonders, though, if the elegance of this adaptation isn’t also a limitation. Is reliance on frequency of change alone sufficient to capture all meaningful dynamics? What happens when stasis is the signal – a prolonged absence of change indicating a critical event?

Future work will inevitably explore scaling these circuits, but a more interesting challenge lies in probing their inherent biases. Every adaptive system embodies assumptions about the world. Are Reactive Circuits predisposed to favor certain types of environmental change over others? And crucially, can these circuits be made self-aware of their own limitations, capable of signaling when their reactive mechanisms are leading them astray?

The promise isn’t simply efficient computation, but a new form of inference, one that embraces the inherent messiness of real-world data. The true test won’t be how well it performs on curated benchmarks, but how gracefully it fails – and what those failures reveal about the underlying structure of the problems it attempts to solve. Perhaps the ‘bugs’ aren’t flaws, but emergent properties: signals indicating the limits of our current understanding.

Original article: https://arxiv.org/pdf/2602.05625.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 15:54