Author: Denis Avetisyan

Researchers have developed a new framework that enables humanoid robots to learn and execute complex motions with improved robustness and adaptability.

A pretrained control framework combined with residual learning and center-of-mass awareness allows for fast adaptation to diverse and challenging movements.

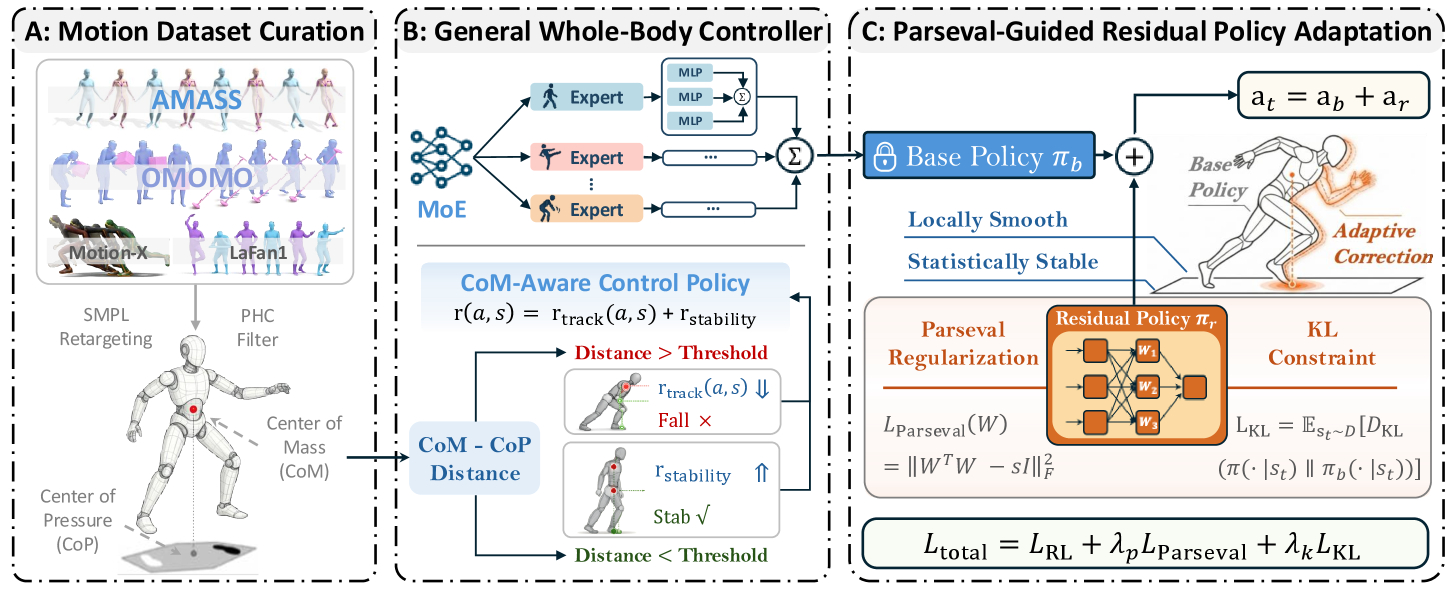

Achieving robust and adaptable whole-body control remains a central challenge in humanoid robotics, particularly when faced with diverse motions and dynamic scenarios. This paper introduces ‘General Humanoid Whole-Body Control via Pretraining and Fast Adaptation’, presenting FAST, a framework that leverages pretraining with lightweight residual learning and Center-of-Mass awareness to enable stable tracking of challenging movements. Through Parseval-Guided Residual Policy Adaptation and CoM-Aware Control, FAST demonstrates improved robustness, adaptation efficiency, and generalization compared to state-of-the-art methods. Could this approach unlock more natural and reliable interactions between humans and humanoid robots in complex real-world environments?

The Challenge of Adaptability: Why Movement Fails

Humanoid robots, despite advancements in engineering, consistently face difficulties when transitioning from controlled laboratory settings to the unpredictable nature of real-world environments. Traditional control methodologies, often reliant on precise modeling and pre-programmed sequences, prove remarkably brittle when confronted with even slight variations in terrain, lighting, or object positioning. Unexpected disturbances – a gentle nudge, an uneven floor, or a partially obscured target – can easily disrupt these systems, leading to instability or complete failure. This susceptibility stems from the inherent complexity of bipedal locomotion and the difficulty in anticipating and compensating for the infinite possibilities present outside of carefully curated testing grounds, highlighting a critical need for more robust and adaptable control strategies.

Humanoid robots built on conventional control systems frequently demand substantial recalibration even when faced with slight alterations to their surroundings or assigned tasks. This reliance on exhaustive retraining represents a significant obstacle to their widespread deployment in dynamic, real-world scenarios. A robot proficient in navigating a laboratory environment, for instance, may require considerable adjustment to function effectively in a home, or even after a minor change in furniture arrangement. This sensitivity stems from the systems’ dependence on precisely defined parameters; deviations from these parameters trigger performance degradation, necessitating a complete or partial overhaul of the control algorithms. The practical implications are considerable, limiting the robots’ versatility and increasing the time and resources needed for implementation in diverse applications, ultimately hindering their potential as adaptable assistants and collaborators.

Current humanoid robot control systems frequently depend on pre-programmed, fixed policies – detailed instructions for every conceivable scenario. This approach proves brittle when confronted with the unpredictable nature of real-world environments and the inherent imperfections of sensor data. Unlike humans, who can instantaneously adjust to shifting terrain or unexpected obstacles, these robots struggle when deviations from the programmed norm occur. The inability to rapidly synthesize new responses from imperfect information necessitates constant re-calibration or, worse, complete task failure. Consequently, research focuses on developing control architectures that prioritize adaptability, allowing humanoids to learn and generalize from experience, rather than rigidly adhering to pre-defined behaviors, ultimately striving for a more resilient and versatile robotic platform.

The limited capacity for generalization poses a significant hurdle to deploying humanoid robots in dynamic, real-world settings. Current control systems, while capable in highly structured environments, often falter when confronted with even slight deviations from their training data – a misplaced object, an uneven surface, or an unexpected interaction. This fragility stems from a reliance on precisely calibrated movements and pre-defined responses, leaving little room for improvisation or adaptation. Consequently, a humanoid robot proficient at one task in one environment may require substantial re-engineering to perform a similar task in a slightly altered context, severely restricting its versatility and hindering the prospect of truly robust, autonomous operation. The inability to efficiently transfer learned skills to novel situations ultimately limits the potential for widespread adoption and practical application of these complex machines.

FAST: A Framework for Rapid Adaptation and Learning

The FAST framework addresses the challenge of adaptable humanoid control by employing a two-stage process: initial skill acquisition via pretraining, followed by rapid refinement through residual learning. Pretrained control establishes a foundational policy based on extensive simulation or demonstration data, providing a functional starting point for robotic tasks. Residual learning then focuses on learning the difference between this pretrained policy and the optimal policy for a new, specific situation. This approach significantly reduces the computational burden and data requirements compared to training a control policy from random initialization, enabling faster adaptation to previously unseen tasks or environmental changes. The framework aims to leverage existing skills instead of relearning fundamental behaviors, promoting efficient and robust performance in dynamic scenarios.

Residual learning, as implemented within the FAST framework, represents a machine learning approach that focuses on learning the difference between a current, potentially suboptimal, policy and a desired, optimal policy. Instead of training a control policy from random initialization – a computationally expensive process – residual learning initializes the policy with a pretrained network and then trains a smaller network to predict the “residual” error. This residual network is added to the pretrained network, effectively refining the existing policy. The benefit lies in significantly reduced training time and improved sample efficiency, as the network only needs to learn the incremental changes required for adaptation, rather than the entire control task. This approach is particularly effective when the new task or environment shares similarities with those used during pretraining.
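The additive structure described above can be sketched in a few lines. This is a minimal illustration, not the FAST implementation: the names `make_residual_policy`, `base_policy`, `zero_residual`, and the `scale` parameter are hypothetical, and the toy policies stand in for trained networks.

```python
from typing import Callable, Sequence

Vector = Sequence[float]
Policy = Callable[[Vector], list]


def make_residual_policy(base: Policy, residual: Policy, scale: float = 1.0) -> Policy:
    """Return a policy whose action is base(obs) + scale * residual(obs)."""

    def policy(obs: Vector) -> list:
        base_action = base(obs)      # output of the frozen, pretrained controller
        correction = residual(obs)   # small learned adjustment for the new motion
        return [a + scale * d for a, d in zip(base_action, correction)]

    return policy


# Toy stand-ins (assumptions for illustration only).
def base_policy(obs: Vector) -> list:
    return [0.5 * x for x in obs]


def zero_residual(obs: Vector) -> list:
    # A residual that starts at zero leaves the pretrained behavior unchanged.
    return [0.0 for _ in obs]


adapted = make_residual_policy(base_policy, zero_residual)
print(adapted([1.0, -2.0]))  # [0.5, -1.0]
```

Starting the residual at zero is a common design choice: adaptation then begins exactly at the pretrained behavior, and only the small correction has to be learned.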

By leveraging previously acquired skills, the FAST framework minimizes the computational burden associated with learning new behaviors. Instead of requiring the robot to relearn fundamental motor skills for each new task or environment, residual learning techniques enable rapid adaptation by adjusting existing control policies. This is achieved by training a smaller network to predict the difference between the current policy and the desired behavior, significantly reducing training time and data requirements compared to methods that necessitate learning from scratch. Consequently, the robot can efficiently transfer knowledge across tasks and generalize to unseen situations, increasing overall adaptability and robustness.

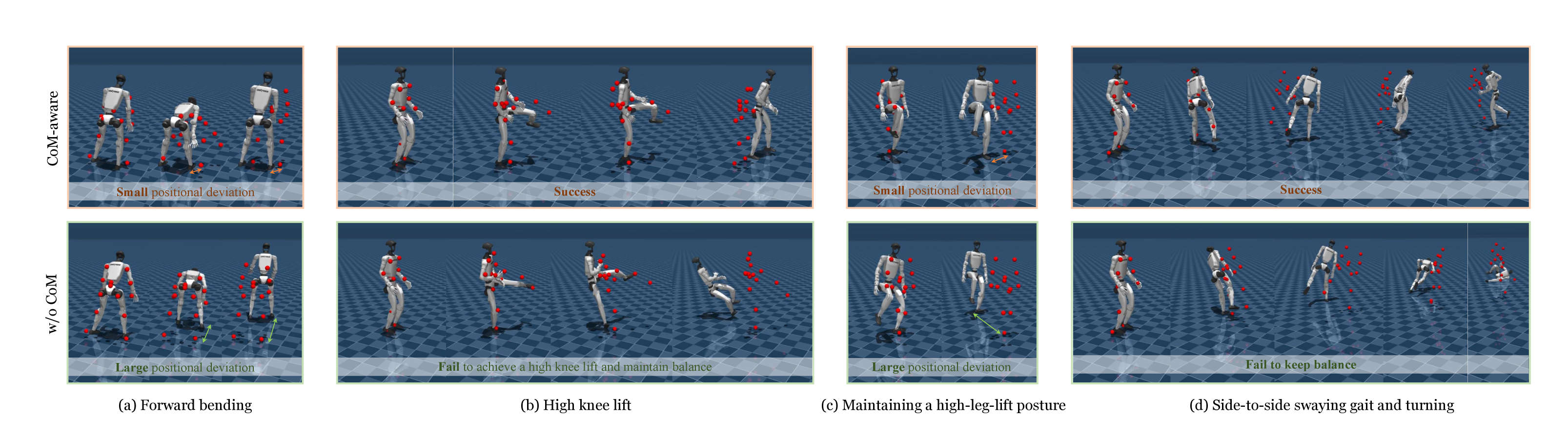

Center-of-Mass-Aware Control (COMAC) is a core component of the FAST framework, directly addressing stability and robustness in humanoid robots. COMAC explicitly regulates the robot’s center of mass (COM) trajectory during motion, allowing for proactive balance adjustments and improved tolerance to external disturbances. This is achieved by formulating control objectives around the COM position and velocity, effectively decoupling COM control from the more complex full-body trajectory tracking. By prioritizing COM stability, the system minimizes the need for reactive balance recovery maneuvers, leading to smoother, more energy-efficient, and reliable locomotion even in challenging or unpredictable environments. The technique enables the robot to maintain balance with reduced reliance on foot placement adjustments and joint torques, enhancing overall performance and safety.
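For readers unfamiliar with the quantity being regulated, the sketch below computes a center of mass as the mass-weighted average of link positions, estimates its velocity by finite differences, and measures the distance to a target. The function names, link masses, and positions are illustrative assumptions. How FAST turns these quantities into control objectives is not specified here.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def center_of_mass(masses: Sequence[float], positions: Sequence[Vec3]) -> Vec3:
    """Mass-weighted average of link positions."""
    total = sum(masses)
    if total <= 0.0:
        raise ValueError("total mass must be positive")
    return tuple(
        sum(m * p[axis] for m, p in zip(masses, positions)) / total
        for axis in range(3)
    )


def com_velocity(com_prev: Vec3, com_now: Vec3, dt: float) -> Vec3:
    """Finite-difference estimate of CoM velocity over one control step."""
    return tuple((b - a) / dt for a, b in zip(com_prev, com_now))


def com_tracking_error(com: Vec3, target: Vec3) -> float:
    """Euclidean distance between the current and the reference CoM."""
    return math.dist(com, target)


# Hypothetical three-link body: a torso and two legs (masses in kg, positions in m).
masses = [10.0, 5.0, 5.0]
positions = [(0.0, 0.0, 1.0), (0.1, 0.0, 0.5), (-0.1, 0.0, 0.5)]
com = center_of_mass(masses, positions)
print(com)
print(com_tracking_error(com, (0.0, 0.0, 0.8)))
```

A controller could, for example, penalize `com_tracking_error` and the mismatch in `com_velocity` as extra reward terms. That choice is an assumption of this sketch.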

Stabilizing Adaptation Through Calculated Constraint

Parseval Regularization, as implemented within the FAST framework, directly penalizes the spectral energy of the residual policy. This is achieved by minimizing the [latex]L_2[/latex] norm of the Fourier transform of the policy updates. By constraining high-frequency components, the regularization promotes smoother control signals and reduces the likelihood of abrupt or erratic movements. This technique effectively acts as a low-pass filter on the policy updates, improving stability during adaptation and preventing the policy from overfitting to noisy input data. The strength of this regularization is governed by a hyperparameter, allowing for a tunable trade-off between adaptation speed and stability.
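One caveat applies to the description above. By Parseval's theorem, the total spectral energy of a signal equals its time-domain energy, so a low-pass effect only appears once the penalty is concentrated on high-frequency bins. The sketch below illustrates both quantities on a short sequence of residual actions. It follows the spectral description given above and is an assumption, not the formulation used in FAST. In the wider deep-learning literature the same name also refers to an orthogonality penalty on weight matrices. The `cutoff` parameter and function names are hypothetical.

```python
import cmath
import math
from typing import List, Sequence


def dft(signal: Sequence[float]) -> List[complex]:
    """Plain O(n^2) discrete Fourier transform, adequate for short sequences."""
    n = len(signal)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        for k in range(n)
    ]


def spectral_energy(signal: Sequence[float]) -> float:
    """Total spectral energy; by Parseval's theorem this equals sum(x**2)."""
    n = len(signal)
    return sum(abs(c) ** 2 for c in dft(signal)) / n


def high_frequency_penalty(signal: Sequence[float], cutoff: int) -> float:
    """Energy in DFT bins whose frequency index exceeds `cutoff`."""
    n = len(signal)
    spectrum = dft(signal)
    # Bins k and n - k carry the same frequency, so use min(k, n - k).
    return sum(
        abs(c) ** 2 for k, c in enumerate(spectrum) if min(k, n - k) > cutoff
    ) / n


# A smooth residual trajectory and the same trajectory with added jitter.
smooth = [math.sin(2 * math.pi * t / 16) for t in range(16)]
jittery = [x + 0.3 * (-1) ** t for t, x in enumerate(smooth)]

print(spectral_energy(smooth), sum(x * x for x in smooth))  # the two values agree
print(high_frequency_penalty(smooth, cutoff=2))   # close to zero
print(high_frequency_penalty(jittery, cutoff=2))  # clearly positive
```

Scaling `high_frequency_penalty` by a weight and adding it to the training loss would give the tunable trade-off between adaptation speed and smoothness mentioned above.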

A Kullback-Leibler (KL) divergence constraint is implemented within the FAST framework to regulate the residual policy’s deviation from the initial, pretrained base policy. This constraint functions as a penalty, increasing with any substantial difference in the probability distributions of the actions suggested by the residual and base policies. By limiting the magnitude of these distributional shifts, the KL divergence constraint ensures that adapted behaviors remain close to the safe and established control strategies encoded in the base policy, thereby promoting predictable system responses and mitigating potentially hazardous outcomes during adaptation, particularly when faced with imperfect or ambiguous input references.
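When both policies output diagonal Gaussian action distributions, a common assumption for continuous control that is not confirmed for FAST here, the KL divergence has a closed form. The sketch below evaluates it and turns it into a weighted penalty. The direction KL(adapted || base), the weight `beta`, and the function names are illustrative choices.

```python
import math
from typing import Sequence


def kl_diag_gaussian(
    mu_p: Sequence[float],
    std_p: Sequence[float],
    mu_q: Sequence[float],
    std_q: Sequence[float],
) -> float:
    """KL(p || q) for diagonal Gaussians p = N(mu_p, std_p^2), q = N(mu_q, std_q^2)."""
    total = 0.0
    for mp, sp, mq, sq in zip(mu_p, std_p, mu_q, std_q):
        total += math.log(sq / sp) + (sp ** 2 + (mp - mq) ** 2) / (2.0 * sq ** 2) - 0.5
    return total


def kl_penalty(mu_adapted, std_adapted, mu_base, std_base, beta: float = 0.1) -> float:
    """Penalty that grows as the adapted policy drifts from the base policy."""
    return beta * kl_diag_gaussian(mu_adapted, std_adapted, mu_base, std_base)


# Identical distributions incur no penalty.
print(kl_diag_gaussian([0.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0]))  # 0.0
# Shifting one action mean by one standard deviation costs 0.5 nats.
print(kl_diag_gaussian([1.0, 0.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0]))  # 0.5
```

A larger `beta` keeps the adapted policy closer to the pretrained one at the cost of slower adaptation.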

Real-world robotic systems frequently encounter imperfect motion references due to sensor noise, environmental uncertainty, and incomplete data. These noisy or incomplete references can lead to unstable or unpredictable behavior during adaptation if not addressed. The incorporation of Parseval Regularization and KL Divergence constraints functions to mitigate these effects by promoting smoothness in the learned residual policy and limiting deviations from a safe, pretrained baseline. This ensures that the adapted policy remains robust to inaccuracies in the input motion references, preventing erratic movements and maintaining operational stability even under challenging conditions.

The FAST framework achieves rapid adaptation to new motion references by leveraging Parseval Regularization and a KL Divergence Constraint, which work in concert to maintain stability and safety. Parseval Regularization encourages smoothness in the residual policy, minimizing abrupt changes, while the KL Divergence Constraint limits deviations from the pretrained base policy. This dual approach prevents the adapted policy from exhibiting unpredictable behavior, even when presented with noisy or incomplete reference data. The result is a system capable of quickly adjusting to new tasks or environments without compromising overall performance or introducing potentially unsafe actions.

Empirical Validation: A Framework Proven Through Diversity

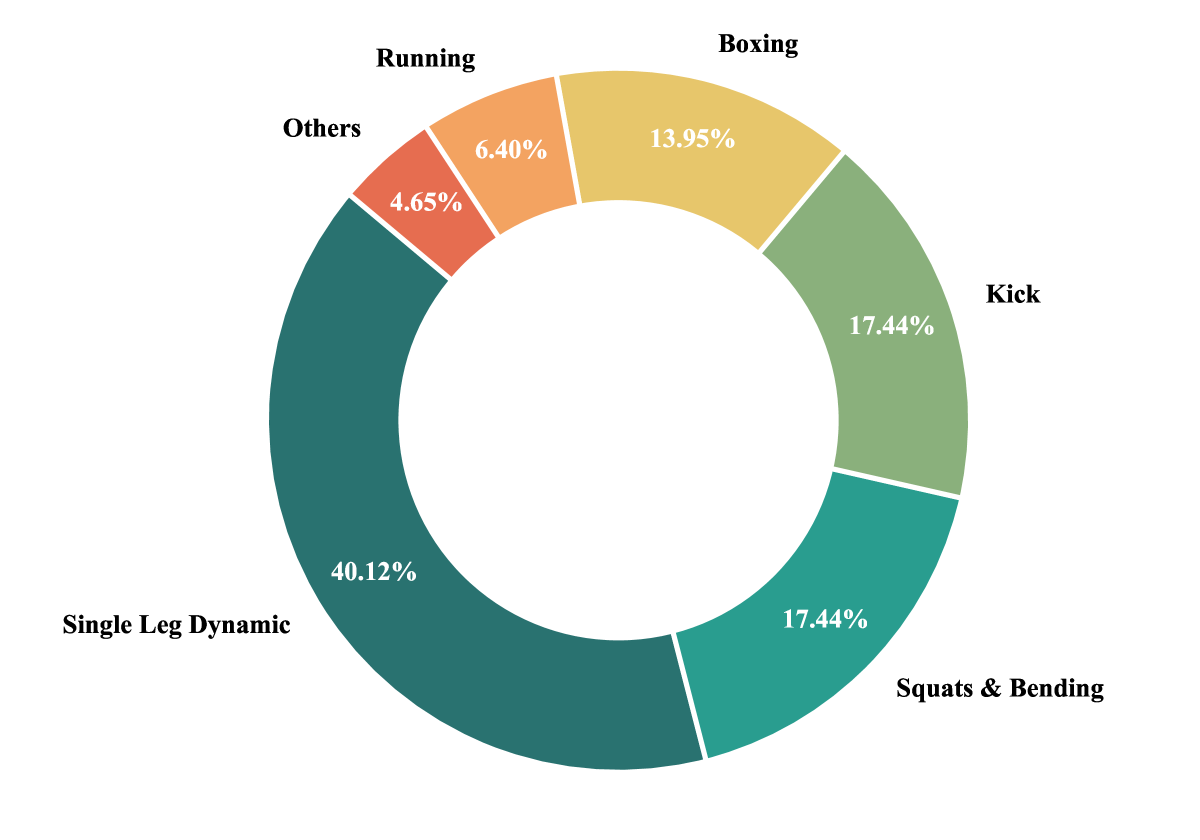

The FAST framework’s robustness was rigorously tested through evaluation on extensive, publicly available motion capture datasets, including AMASS, OMOMO, LaFan1, and Motion-X. This deliberate choice of diverse datasets – encompassing a wide range of activities, subjects, and capture environments – was crucial to ensuring the framework’s capacity for broad generalization. By training and validating FAST against these large-scale, varied motion data, researchers aimed to move beyond performance limited to specific, constrained scenarios and demonstrate its adaptability to real-world human movement. The use of these benchmarks provides strong evidence that FAST isn’t simply memorizing training data, but rather learning underlying principles of human locomotion applicable across different individuals and dynamic situations.

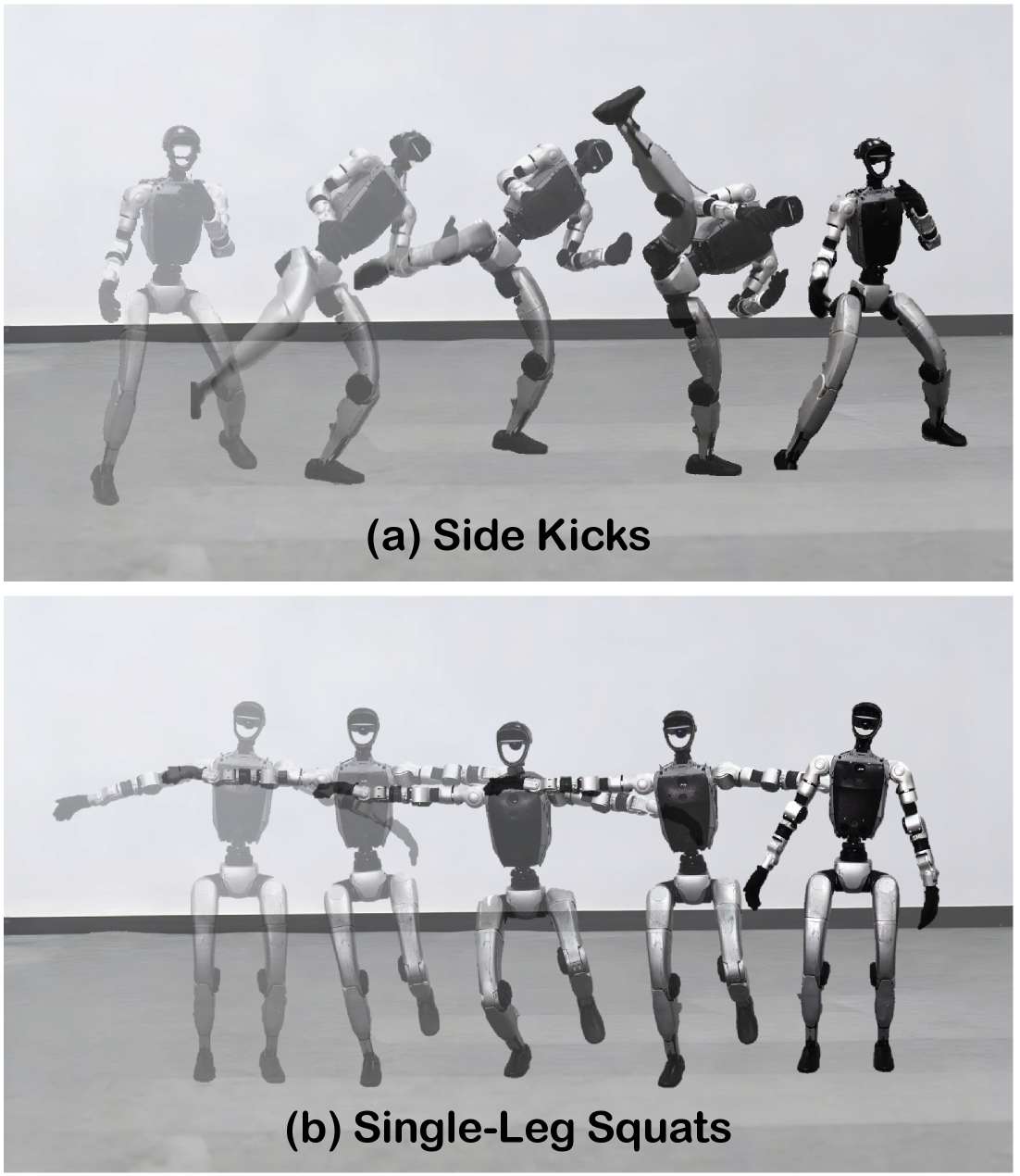

The FAST framework exhibited robust performance when challenged with high-dynamic motions, signifying its capacity to track and reproduce complex human movements. Researchers subjected the system to demanding sequences, including jumps, rapid turns, and exaggerated gestures, to evaluate its adaptability. Results indicated that FAST maintained tracking accuracy and balance throughout these challenging maneuvers, an improvement over existing motion-tracking controllers. This capability stems from the framework’s approach to predicting and responding to swift changes in body position and velocity, enabling more realistic and fluid motion, as well as improved applications in areas like virtual reality and biomechanical analysis.

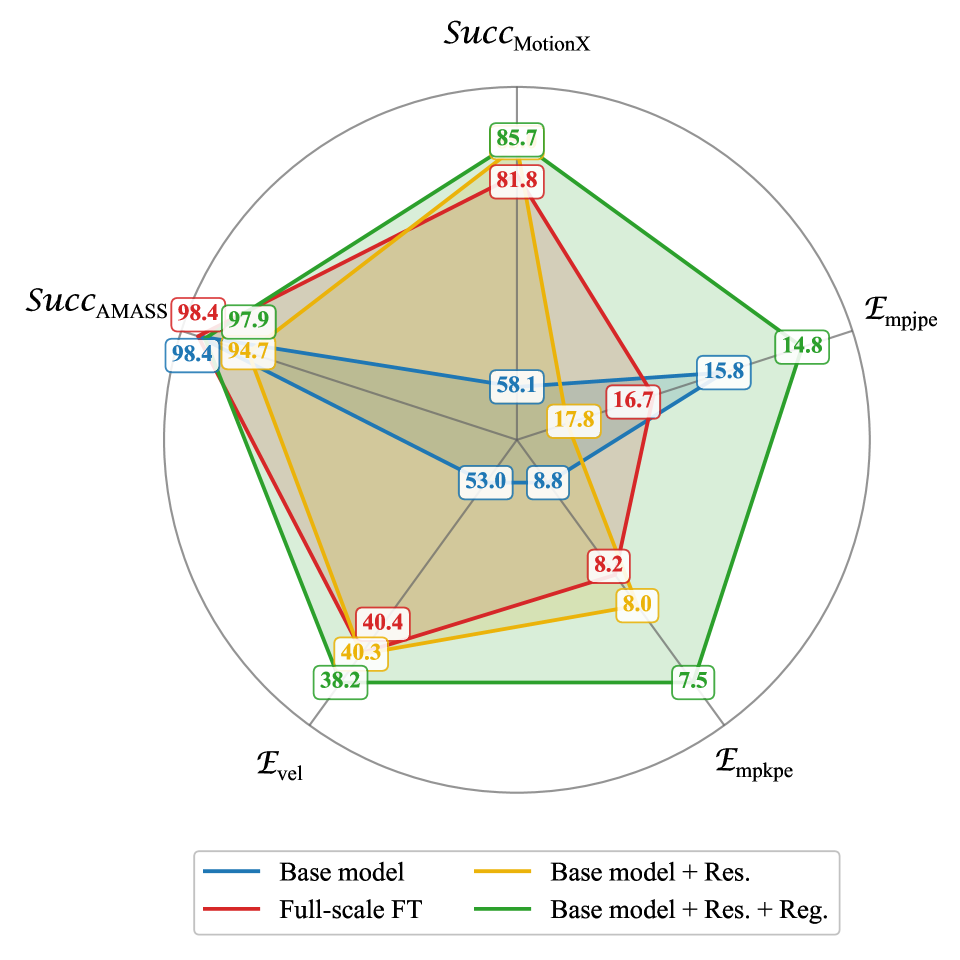

Evaluation of the FAST framework showed superior performance across a diverse range of motion capture datasets, including AMASS, OMOMO, LaFan1, and Motion-X. Success Rate, a primary metric for assessing task completion, reached the highest reported values when compared to existing state-of-the-art methods like GMT and TWIST2. This high success rate demonstrates the framework’s robustness and generalizability to a variety of movements and scenarios. Comparative analyses likewise positioned FAST as a leading approach, establishing a new benchmark for motion adaptation and control in humanoid motion tracking.

Evaluations reveal that the FAST framework achieves notably enhanced motion tracking accuracy, as quantified by a significantly lower Global Mean Per Keypoint Position Error ([latex]E_{mpkpe}[/latex]) when contrasted with existing methods like GMT and TWIST2. This improvement isn’t merely about precise positioning; integrated CoM-Aware Control within FAST demonstrably reduces slippage – the velocity of the support foot – during movement. This reduction in slippage is a key indicator of improved balance and overall stability, suggesting the framework doesn’t just record motion, but facilitates more natural and controlled biomechanics. The combined effect of lower [latex]E_{mpkpe}[/latex] and minimized slippage points to a system capable of faithfully replicating and potentially enhancing the quality of human movement in virtual environments.
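The three metrics mentioned in this section can be made concrete with a small sketch. The definitions below are plausible readings rather than the paper's exact formulas: the keypoint error is averaged over all frames and keypoints in global coordinates, slippage is the mean support-foot speed while the foot stays in contact, and success rate is the fraction of completed episodes. The function names and sample data are hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mpkpe(pred: Sequence[Sequence[Vec3]], ref: Sequence[Sequence[Vec3]]) -> float:
    """Mean Euclidean keypoint error; inputs are indexed [frame][keypoint]."""
    dists = [
        math.dist(p, r)
        for pred_frame, ref_frame in zip(pred, ref)
        for p, r in zip(pred_frame, ref_frame)
    ]
    return sum(dists) / len(dists)


def foot_slippage(foot_positions: Sequence[Vec3], in_contact: Sequence[bool], dt: float) -> float:
    """Mean speed of the support foot over steps where it stays in contact."""
    speeds = [
        math.dist(foot_positions[i], foot_positions[i - 1]) / dt
        for i in range(1, len(foot_positions))
        if in_contact[i - 1] and in_contact[i]
    ]
    return sum(speeds) / len(speeds) if speeds else 0.0


def success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of episodes that completed without failure."""
    return sum(outcomes) / len(outcomes)


# One frame with two keypoints; the first is off by 10 cm in height.
pred = [[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]
ref = [[(0.0, 0.0, 0.1), (1.0, 0.0, 0.0)]]
print(mpkpe(pred, ref))

# A support foot that slides 1 cm in the first step and then holds still.
feet = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.01, 0.0, 0.0)]
print(foot_slippage(feet, [True, True, True], dt=0.02))
print(success_rate([True, True, False, True]))  # 0.75
```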

The pursuit of robust humanoid control, as demonstrated by FAST, benefits from a dedication to essential principles. It is a refinement, not an accumulation, that yields true progress. This echoes the sentiment of Barbara Liskov: “The best programs are those that do one thing well.” FAST achieves this by focusing on pretraining a foundational controller and then employing residual learning for rapid adaptation, removing unnecessary complexity to achieve stable, diverse motion tracking. The framework’s Center-of-Mass awareness further streamlines control, embodying the idea that elegance arises from a deliberate reduction to core functionality.

Further Refinements

The presented framework, while demonstrating notable adaptability, skirts the fundamental issue of embodiment. Success hinges on pretraining – an implicit acknowledgement that de novo learning of complex locomotion remains computationally prohibitive. The true measure of progress will not be faster adaptation, but a reduction in the reliance on prior experience. The pursuit of generalized control necessitates a departure from imitation; a system must learn to inhabit a body, not merely replicate movements.

Robustness, predictably, remains a persistent concern. The ability to track diverse motions does not equate to resilience in the face of genuine environmental disturbance – unexpected contact, actuator failure, or the subtle asymmetries inherent in physical systems. Future iterations should prioritize the development of internal models capable of anticipating and mitigating such perturbations, rather than simply reacting to them. Emotion, after all, is a side effect of structure, and stability is its architecture.

Ultimately, the field’s preoccupation with “control” may be a misdirection. Perhaps the goal is not to impose order on a chaotic system, but to discover the inherent self-organizing principles that govern dynamic stability. Clarity is compassion for cognition; a parsimonious explanation, devoid of unnecessary complexity, is the highest form of understanding. Perfection is reached not when there is nothing more to add, but when there is nothing left to take away.

Original article: https://arxiv.org/pdf/2602.11929.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-15 19:27