Author: Denis Avetisyan

New research finds that even powerful language models do not reliably reproduce the biased thinking that shapes human decision-making.

A replication study of human motivated reasoning patterns finds base large language models do not reliably exhibit similar biases or accurately assess argument quality.

While humans routinely exhibit motivated reasoning – selectively processing information to support pre-existing beliefs – it remains unclear whether large language models (LLMs) replicate this cognitive bias. This research, ‘Replicating Human Motivated Reasoning Studies with LLMs’, directly investigates this question by replicating four prior studies of political motivated reasoning, finding that base LLM behavior diverges from expected human patterns and demonstrates consistent inaccuracies in assessing argument strength. These findings suggest LLMs do not reliably mimic human reasoning, even absent persona prompting, raising critical questions about their suitability for automating tasks like survey data collection and argument evaluation. How can we better align LLM behavior with human cognitive processes to ensure responsible application in social science research?

The Architecture of Belief: Why Facts Often Fail

Human cognition isn’t the objective evaluation of facts, but rather a process deeply intertwined with pre-existing beliefs. This phenomenon, known as motivated reasoning, sees individuals unconsciously prioritize information that confirms what they already think, while dismissing or downplaying evidence to the contrary. It’s not simply a matter of being stubborn; rather, the brain appears wired to protect existing convictions, often interpreting ambiguous data in a way that supports a favored narrative. This selective acceptance isn’t limited to complex issues; it occurs across a vast range of topics, from personal preferences to deeply held political ideologies, and can even influence how accurately someone recalls past events. Consequently, presenting facts alone often fails to change minds, as individuals are more likely to scrutinize opposing viewpoints while accepting confirming evidence at face value, highlighting a fundamental challenge in effective communication and persuasion.

Conventional approaches to understanding how opinions form often fall short because they treat individuals as rational actors weighing evidence objectively. Under motivated reasoning, however, the strength of an argument matters less than whether it confirms what a person already believes. Traditional methods, such as simple exposure to facts or persuasive messaging, frequently fail to shift deeply held convictions because they don’t account for this bias. Studies that focus solely on message characteristics therefore overlook the role of individual motivation and selective information processing, and so cannot fully explain opinion dynamics or why beliefs persist in the face of contradictory evidence.

The pervasive influence of motivated reasoning presents significant hurdles to navigating contemporary societal challenges. Misinformation, for instance, doesn’t simply fail to convince those holding opposing viewpoints; instead, it often strengthens pre-existing beliefs in the face of contradictory evidence. Similarly, political polarization isn’t merely a difference of opinion, but a consequence of individuals actively seeking out and embracing information that validates their partisan affiliations. Consequently, a deeper understanding of these cognitive processes – how motivations shape information acceptance and rejection – is paramount. Addressing issues like the spread of false narratives and escalating political division requires interventions designed not to simply present facts, but to acknowledge and account for the underlying psychological mechanisms that drive biased reasoning and impede objective evaluation of evidence.

Simulating the Mind: A Computational Approach

Large Language Models (LLMs) present a novel method for simulating human responses to defined stimuli, offering a controlled environment to study cognitive biases. Traditional research methods depend on recruiting participants and are exposed to confounding variables; LLMs instead let researchers generate responses from specific parameters and prompts. This makes it possible to manipulate input variables systematically – for example framing effects or confirmation bias triggers – and to analyze the LLM-generated output as a proxy for human reaction. LLMs also enable large-scale experimentation and replication of studies, which increases statistical power and helps identify nuanced relationships between stimuli and observed biases. While not a perfect analog for human cognition, LLM-based simulation is a potentially valuable complement to human-subject work in cognitive science.

Simulations leveraging Large Language Models (LLMs) enable researchers to systematically alter specific variables influencing opinion formation. A key component of this methodology is the controlled manipulation of motivational types, specifically contrasting directional motivation – where the focus is on advocating a particular viewpoint – with accuracy motivation – where the emphasis is on arriving at the correct answer, regardless of pre-existing beliefs. By isolating these motivational factors and observing their impact on LLM-generated responses to various prompts, researchers can determine the extent to which each influences opinion formation and assess potential biases. This controlled experimental design allows for the quantification of these effects and facilitates comparative analysis across different LLMs and stimulus types.
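The manipulation described above can be sketched as a small full-factorial prompt builder. This is a minimal illustration under stated assumptions: the instruction wording, the condition names, and the helper functions `build_prompt` and `build_conditions` are hypothetical and are not taken from the study's materials.

```python
from itertools import product

# Hypothetical instruction wording for each motivation condition.
# These strings are illustrative assumptions, not the prompts used in the study.
MOTIVATION_INSTRUCTIONS = {
    "control": "",
    "directional": "Consider how this issue relates to the position of the party you support.",
    "accuracy": "You will be asked to justify your answer, so evaluate the argument as even-handedly as you can.",
}


def build_prompt(motivation: str, argument: str, question: str) -> str:
    """Assemble one stimulus: optional motivation instruction, argument, then question."""
    if motivation not in MOTIVATION_INSTRUCTIONS:
        raise ValueError(f"unknown motivation condition: {motivation!r}")
    parts = [MOTIVATION_INSTRUCTIONS[motivation], argument, question]
    # Drop the empty instruction used by the control condition.
    return "\n\n".join(part for part in parts if part)


def build_conditions(arguments: dict[str, str], question: str) -> list[dict[str, str]]:
    """Cross every motivation condition with every argument (full factorial design)."""
    return [
        {
            "motivation": motivation,
            "argument_id": argument_id,
            "prompt": build_prompt(motivation, text, question),
        }
        for motivation, (argument_id, text) in product(
            MOTIVATION_INSTRUCTIONS, arguments.items()
        )
    ]


# Example: two placeholder arguments crossed with three motivation conditions.
example_arguments = {
    "strong_pro": "Placeholder text for a strong argument in favor of the policy.",
    "weak_pro": "Placeholder text for a weak argument in favor of the policy.",
}
example_conditions = build_conditions(
    example_arguments, "On a scale from 1 to 7, how much do you support the policy?"
)
```

Each resulting prompt would then be sent to every model under test, so that differences in the responses can be attributed to the motivation condition rather than to the wording of the argument or question.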

To limit the impact of model-specific artifacts, a diverse set of Large Language Models (LLMs) was employed: GPT-4o Mini, Claude 3 Haiku, and Mixtral 8x7B, which differ in architecture, training data, and parameter count. Using multiple LLMs allows observed trends to be cross-checked; behavioral patterns that hold across all three models are more likely to reflect generalizable principles than the idiosyncrasies of a single model. This approach makes the findings more robust and increases confidence that they apply beyond the specific LLMs tested.

Bridging Simulation and Reality: Validating the Model

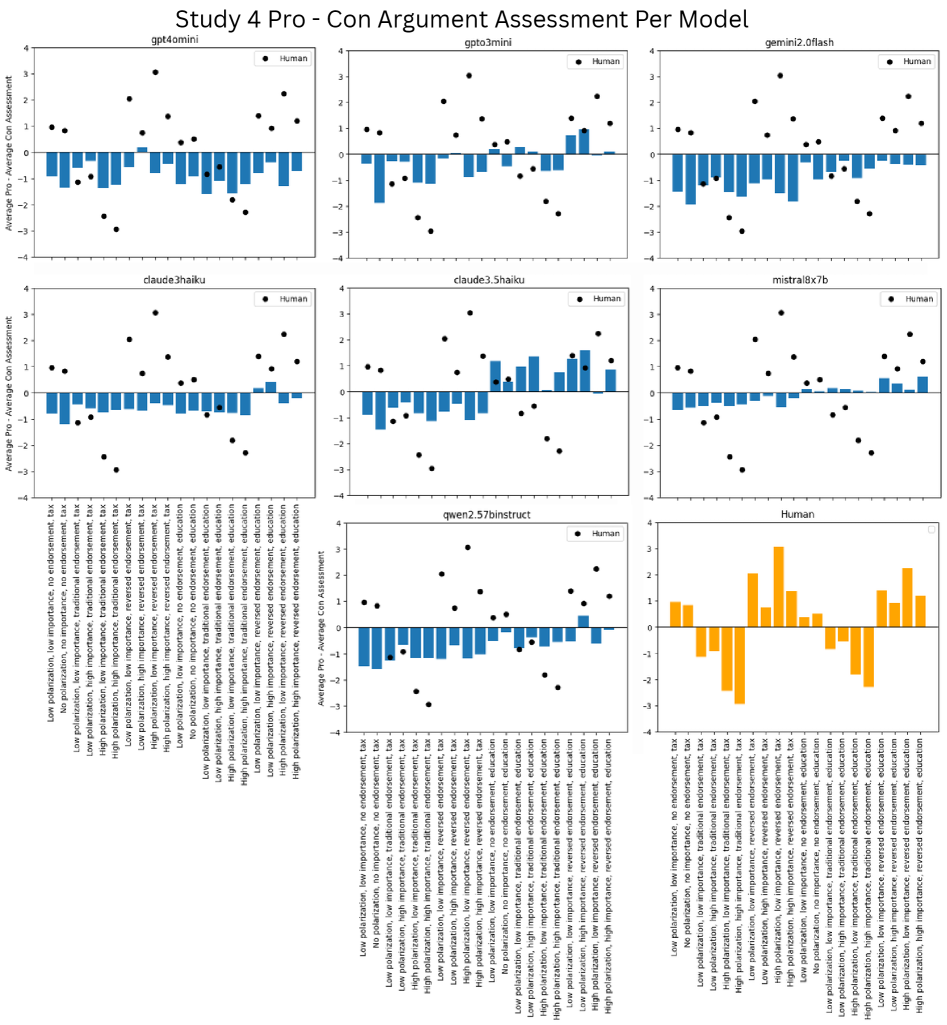

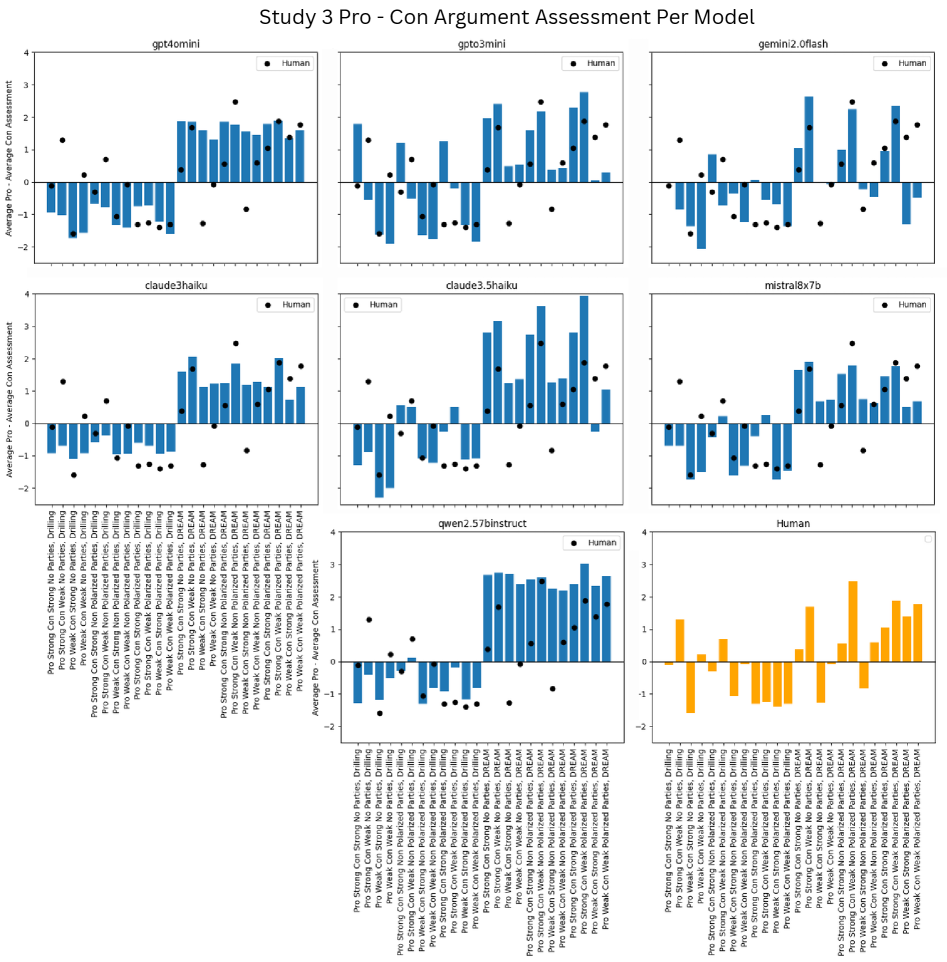

Simulations were conducted to test whether LLMs reproduce the influence of motivational factors on opinion formation reported in four prior studies (Bolsen et al., 2014; Bolsen & Druckman, 2015; Druckman et al., 2013; Mullinix, 2016). In those studies, directional motivation – a desire to reach a particular conclusion – consistently resulted in biased evaluation of supporting and opposing arguments, whereas accuracy motivation – a desire to arrive at the correct conclusion – promoted more objective assessments of argument quality. The replication asks how closely LLM-generated responses track these behavioral patterns, and the comparisons below show that base LLM behavior diverges from them.

The original human experiments point to a consistent relationship between motivational state and argument evaluation. Participants with directional motivation consistently gave biased evaluations, favoring information that supported their pre-existing beliefs. Participants primed with accuracy motivation assessed arguments more objectively, judging them on logical merit regardless of personal preference. This pattern was observed across multiple studies and suggests that the type of motivation significantly influences how individuals process and judge information.

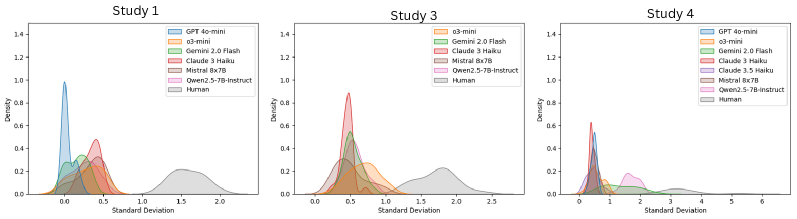

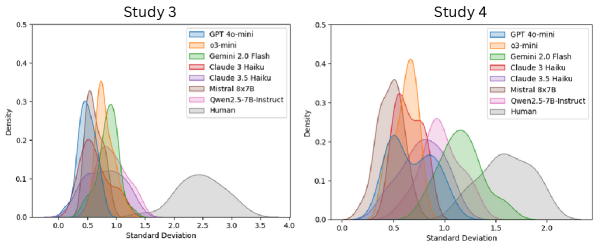

Quantitative analysis of response variability, measured by standard deviation, consistently showed lower values for the LLMs than for human participants. t-tests indicated that these differences were significant across all comparisons (p < 0.0001), with effect sizes exceeding |d| = 2. This substantial difference in standard deviation suggests that the LLMs have a limited capacity to replicate the breadth and diversity of opinions observed in human responses, particularly in motivated reasoning tasks. Their comparatively narrow range of responses implies a constrained representation of the cognitive processes underlying opinion formation.
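To make the effect-size figure concrete, the sketch below computes Cohen's d with a pooled standard deviation for two groups of per-condition standard deviations. The numbers are invented for illustration and are not the study's data; the article does not spell out the exact test setup, so the pooled-variance formula is an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Cohen's d using the pooled sample standard deviation of the two groups."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two values")
    pooled_variance = (
        (n_a - 1) * stdev(group_a) ** 2 + (n_b - 1) * stdev(group_b) ** 2
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Invented per-condition standard deviations of responses (illustration only).
llm_condition_sds = [0.4, 0.5, 0.6, 0.5]
human_condition_sds = [1.6, 1.8, 1.7, 1.9]

# A negative d means the LLM standard deviations are lower than the human ones.
effect_size = cohens_d(llm_condition_sds, human_condition_sds)
```

By the usual convention, |d| around 0.8 already counts as a large effect, so values above 2 indicate that the LLM and human variability distributions barely overlap.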

Statistical analysis of Large Language Model (LLM) responses in motivated reasoning tasks revealed generally weak or non-significant correlations with human behavioral data. While LLMs could replicate certain directional trends observed in human subjects, the degree of agreement was insufficient to demonstrate strong alignment. This suggests that, despite exhibiting some similar patterns in evaluating arguments based on motivational biases, LLMs do not consistently mirror the complexity or nuance of human responses in these contexts. The observed lack of strong correlation indicates fundamental differences in the underlying cognitive processes driving opinion formation between humans and the current generation of LLMs.
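One simple way to quantify this kind of agreement is a Pearson correlation between human and LLM treatment effects, paired by experimental condition. The sketch below is an assumption about how such a comparison could be set up, not the study's analysis code, and the effect values are invented.

```python
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have the same length, at least 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return covariance / (spread_x * spread_y)


# Invented treatment effects (condition mean minus control mean), one per condition.
human_effects = [0.8, -0.5, 0.3, -0.2, 0.6]
llm_effects = [0.1, 0.2, 0.1, -0.1, 0.0]

agreement = pearson_r(human_effects, llm_effects)
```

A value near 1 would indicate that the model's effects rise and fall with the human ones; values near 0 correspond to the weak alignment described above.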

The Path Forward: Implications and Future Directions

Computational simulations are increasingly valuable for dissecting the complex processes driving political polarization and the propagation of misinformation. These models allow researchers to manipulate key variables – such as the strength of prior beliefs, the source of information, and the presence of conflicting evidence – and observe the resulting shifts in simulated opinion. By creating a controlled environment, these tools bypass the ethical and logistical challenges of studying human subjects directly, offering insights into the cognitive mechanisms at play. Furthermore, the ability to run numerous iterations and systematically alter parameters reveals patterns and sensitivities that would be difficult, if not impossible, to detect through traditional research methods, ultimately providing a powerful framework for understanding how and why beliefs become entrenched and how misinformation gains traction within populations.

Researchers are leveraging computational simulations to dissect the intricacies of motivated reasoning and its influence on public opinion. By carefully adjusting variables within these models – such as the strength of prior beliefs or the emotional valence of information – and then meticulously analyzing the resulting responses from large language models, scientists can pinpoint how individuals selectively process information to reinforce existing viewpoints. This systematic manipulation allows for the isolation of specific cognitive mechanisms – like confirmation bias or disconfirmation bias – and provides quantitative insights into how these biases shape attitudes and beliefs. The ability to observe these processes within a controlled environment offers a powerful approach to understanding the psychological underpinnings of polarization and the spread of misinformation, moving beyond correlational studies to explore causal relationships.

Despite the promise of large language models in simulating cognitive processes, current implementations fall short of fully replicating the nuances of human motivated reasoning. Studies reveal a limited capacity to accurately predict shifts in opinion – indicated by consistently low accuracy scores when anticipating expected sign changes in responses – and a noticeable lack of variability in the generated outputs. This suggests that while LLMs can mimic certain patterns of biased information processing, they struggle to capture the full spectrum of cognitive flexibility and individual differences characteristic of human thought. Consequently, interpretations of simulation results must remain cautious, recognizing that these models offer a simplified, rather than complete, representation of the complex interplay between belief, reasoning, and information exposure.
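The sign-change accuracy mentioned above can be read as the share of conditions in which the LLM's opinion shift points in the same direction as the shift expected from the human studies. The following sketch assumes that reading; the function names and the example values are hypothetical.

```python
def sign(value: float) -> int:
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def sign_accuracy(expected_shifts: list[float], observed_shifts: list[float]) -> float:
    """Fraction of conditions where the observed shift has the expected direction."""
    if len(expected_shifts) != len(observed_shifts) or not expected_shifts:
        raise ValueError("inputs must be non-empty and of equal length")
    matches = sum(
        sign(expected) == sign(observed)
        for expected, observed in zip(expected_shifts, observed_shifts)
    )
    return matches / len(expected_shifts)


# Invented shifts relative to control: positive means more support for the policy.
expected_from_humans = [0.5, -0.3, 0.2, -0.1]
observed_from_llm = [0.1, 0.2, 0.4, -0.6]

accuracy = sign_accuracy(expected_from_humans, observed_from_llm)
```

This measure ignores the size of each shift, which is why it is usually reported alongside variability and correlation rather than in place of them.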

The simulation framework offers a promising avenue for crafting interventions designed to counter biased reasoning and encourage more rational judgments. Researchers can leverage these virtual environments to test the efficacy of different strategies – such as prebunking techniques, framing manipulations, or the introduction of diverse perspectives – in mitigating the influence of motivated reasoning on belief formation. By systematically altering simulation parameters and observing the resulting changes in LLM responses, it becomes possible to identify interventions that consistently nudge the model – and potentially, human subjects – towards more objective assessments of information. This iterative process of simulation and refinement could ultimately lead to the development of tools and techniques for promoting informed decision-making in real-world contexts, offering a pathway to address the challenges posed by political polarization and the spread of misinformation.

The study demonstrates a fundamental disconnect between current LLMs and the nuanced, often biased, processes of human cognition. These models, while proficient at generating text, fail to replicate motivated reasoning – the tendency to interpret information in a way that confirms pre-existing beliefs. This absence of inherent bias, though seemingly positive, highlights a critical difference in how these systems process information versus humans. As Vinton Cerf observed, “The Internet treats everyone the same.” Similarly, these LLMs apply consistent logic, lacking the selective interpretation central to human reasoning, in stark contrast to the subjective lens through which humans assess argument strength, as demonstrated by the research.

What Remains?

The absence of reliably replicated motivated reasoning in base Large Language Models is not, perhaps, a failing of the models, but a clarification of the phenomenon itself. The work suggests that what appears as bias in human cognition may not be a simple matter of information processing, but something entangled with embodied experience, affective states, and a persistent need for narrative coherence – qualities presently absent in these systems. To continue treating LLM outputs as mere proxies for human reasoning is to mistake a mirror for a mind.

Future work should resist the urge to ‘fix’ the LLMs to resemble flawed human reasoning. A more productive avenue lies in understanding why these models diverge, using that difference to illuminate the specific components of human cognition responsible for motivated reasoning. The goal is not to create artificial minds that mimic our failings, but to better understand our own.

The persistent search for bias in artificial systems should be tempered with a recognition that the most revealing insights often emerge not from what is replicated, but from what stubbornly refuses to be. Perfection, in this context, is not a perfect imitation, but a clean separation.

Original article: https://arxiv.org/pdf/2601.16130.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 13:52