Author: Denis Avetisyan

A new framework combines simulation and deep learning to dramatically improve safety and coordination in complex multi-arm surgical robotic systems.

![A learning framework uses a Unity-based simulation, which generates 75,655 robot configurations, to train a deep neural network that predicts the minimum distance [latex] d_{min} [/latex] between robotic arms. The system issues an audio warning when [latex] d_{min} [/latex] falls below 0.2 meters, so that potential collisions can be mitigated preemptively on real-world robotic setups.](https://arxiv.org/html/2601.15459v1/images/framework.jpg)

Researchers demonstrate accurate collision detection and prediction through a learning-from-simulation approach for enhanced surgical robot safety.

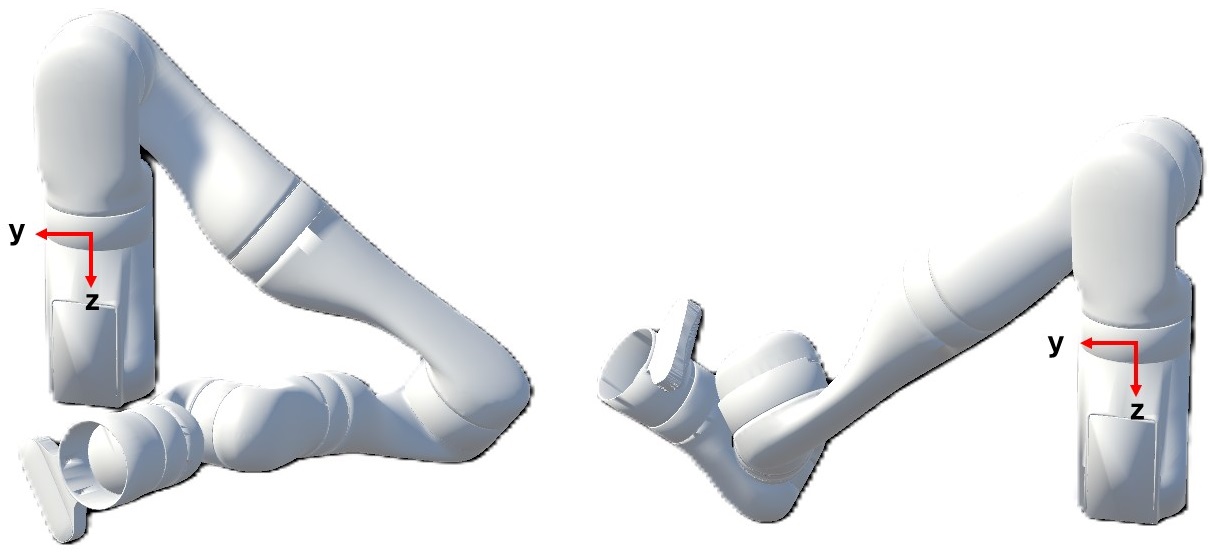

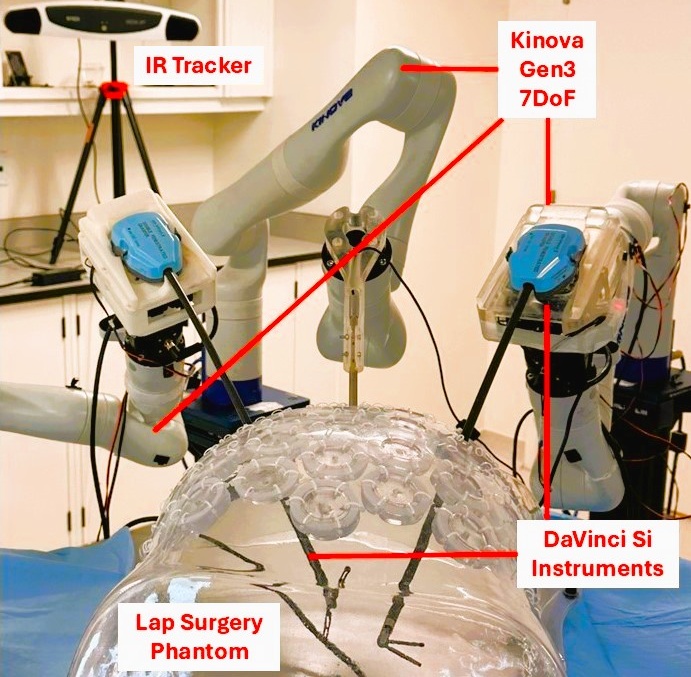

Ensuring safe and efficient operation remains a key challenge in multi-arm robotic systems, particularly within the constrained environment of laparoscopic surgery. This research, ‘Neural Collision Detection for Multi-arm Laparoscopy Surgical Robots Through Learning-from-Simulation’, introduces an integrated framework leveraging analytical modeling, real-time simulation, and deep learning to accurately estimate minimum distances and predict potential collisions. The developed neural network, trained on simulated robotic arm configurations, achieved a mean absolute error of 282.2 mm, demonstrating its ability to generalize spatial relationships and enhance proximity estimation. Could this approach pave the way for more autonomous and coordinated surgical robots, ultimately improving patient outcomes and surgical precision?

Navigating Complexity: The Rise of Collaborative Robotics

The evolution of robotic tasks is driving a significant shift towards collaborative systems featuring multiple robotic arms operating within a shared workspace. This trend, evident in manufacturing, assembly, and increasingly in fields like surgery and space exploration, introduces a considerable challenge: managing the risk of collisions. Unlike single-arm robots navigating relatively static environments, multi-arm systems experience a dramatically increased complexity in their kinematic interactions. Each additional arm multiplies the potential collision points and requires vastly more sophisticated planning and control algorithms to ensure safe and efficient operation. The sheer density of moving parts in close proximity necessitates real-time monitoring and predictive avoidance strategies, as even minor miscalculations can lead to damage, downtime, or, in sensitive applications, pose a risk to human safety. Consequently, developing robust collision avoidance systems is not merely an engineering problem, but a crucial prerequisite for unlocking the full potential of these increasingly sophisticated robotic deployments.

Conventional collision detection techniques, while foundational, often falter in the fast-paced and intricate scenarios of modern robotics. These methods frequently rely on computationally expensive algorithms that analyze the complete workspace for potential overlaps, which is impractical for real-time control of multiple robotic arms. Their inaccuracies stem from simplifying assumptions about robot geometry and movement, and from an inability to precisely predict trajectories in dynamic environments. These limitations become critical as robots operate closer to humans or sensitive equipment, demanding a level of precision and responsiveness that traditional approaches struggle to deliver. Consequently, research focuses on more efficient and accurate methods, such as predictive algorithms and learning-based approaches, to ensure safe and reliable operation in increasingly complex robotic systems.

The progression of multi-arm robotic systems hinges decisively on robust collision avoidance strategies, particularly as these technologies venture into high-stakes environments. While increased automation promises unprecedented precision and efficiency, the potential for physical interaction between robotic arms, or with surrounding structures, demands fail-safe mechanisms. This is acutely critical in applications like robotic surgery, where even minor unintended contact could have severe consequences for patients. Consequently, significant research focuses on developing algorithms and sensor integrations that anticipate and prevent collisions in real time, enabling the safe and reliable operation of multiple robotic arms working in close proximity and fostering greater acceptance and implementation of these advanced systems across diverse fields.

A Geometric Foundation for Proximity Estimation

Bézier curves are employed to model robotic link geometry due to their capacity for precise representation using a minimal number of control points. A degree-[latex]n[/latex] Bézier curve is defined by [latex]n+1[/latex] control points, allowing complex shapes to be described without the computational cost associated with high-resolution polygonal meshes or splines with numerous segments. This parametric representation facilitates efficient distance calculations and collision detection, as analytical solutions for minimum distances can be derived. Furthermore, the smoothness of Bézier curves ensures accurate representation of link surfaces, minimizing false positives in proximity assessments and improving the reliability of robot motion planning.
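
To make the control-point definition concrete, here is a minimal sketch that evaluates a Bézier curve of any degree with de Casteljau's algorithm. The function name `bezier_point` and the example control points are illustrative assumptions, not values from the paper.

```python
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def bezier_point(control_points: Sequence[Point], t: float) -> Point:
    """Evaluate a Bezier curve at parameter t in [0, 1] (de Casteljau)."""
    pts = [tuple(p) for p in control_points]
    if not pts:
        raise ValueError("need at least one control point")
    # Repeatedly interpolate between neighbouring points until one remains.
    while len(pts) > 1:
        pts = [
            tuple((1.0 - t) * a + t * b for a, b in zip(p, q))
            for p, q in zip(pts, pts[1:])
        ]
    return pts[0]


# A cubic curve (degree 3) needs 3 + 1 = 4 control points (made-up values).
cubic = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (3.0, 2.0, 0.0), (4.0, 0.0, 0.0)]
start = bezier_point(cubic, 0.0)   # coincides with the first control point
middle = bezier_point(cubic, 0.5)  # a point in the interior of the curve
end = bezier_point(cubic, 1.0)     # coincides with the last control point
```

The curve always starts at the first control point and ends at the last one, so a link can be pinned to its two joint positions while the interior control points shape its body.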

The collision assessment system utilizes an analytical framework based on Bézier curves to determine the minimum distance between robotic arm links. This method represents each link’s geometry as a series of piecewise cubic Bézier curves, allowing for precise calculation of distances without relying on computationally expensive discrete sampling. The analytical solution derives from evaluating the distance function between these curves and iteratively refining the minimum distance estimate through optimization techniques. This approach provides a robust and efficient means of identifying potential collisions, as it avoids the inaccuracies inherent in methods that approximate geometry with a finite number of points. The resulting minimum distance value is then used as a critical input for the collision avoidance algorithms.
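
As a rough illustration of estimating a minimum distance and then refining it, the sketch below searches a coarse grid over both curve parameters and repeatedly zooms in around the best pair. This is a simple numerical stand-in, not the paper's analytical derivation; `min_distance`, its parameters, and the example segments are assumptions.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def bezier_point(control_points: Sequence[Point], t: float) -> Point:
    """Evaluate a Bezier curve at parameter t in [0, 1] (de Casteljau)."""
    pts = [tuple(p) for p in control_points]
    while len(pts) > 1:
        pts = [
            tuple((1.0 - t) * a + t * b for a, b in zip(p, q))
            for p, q in zip(pts, pts[1:])
        ]
    return pts[0]


def min_distance(curve_a: Sequence[Point], curve_b: Sequence[Point],
                 samples: int = 20, refinements: int = 6) -> float:
    """Approximate the minimum distance between two Bezier curves."""
    lo_s, hi_s, lo_t, hi_t = 0.0, 1.0, 0.0, 1.0
    best = (math.inf, 0.0, 0.0)  # (distance, s, t)
    for _ in range(refinements):
        step_s = (hi_s - lo_s) / samples
        step_t = (hi_t - lo_t) / samples
        for i in range(samples + 1):
            s = lo_s + step_s * i
            point_a = bezier_point(curve_a, s)
            for j in range(samples + 1):
                t = lo_t + step_t * j
                d = math.dist(point_a, bezier_point(curve_b, t))
                if d < best[0]:
                    best = (d, s, t)
        # Zoom in: keep one grid cell on each side of the current best pair.
        lo_s, hi_s = max(0.0, best[1] - step_s), min(1.0, best[1] + step_s)
        lo_t, hi_t = max(0.0, best[2] - step_t), min(1.0, best[2] + step_t)
    return best[0]


# Two straight "links" (degree-1 curves) that pass 0.5 units apart.
link_a = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
link_b = [(1.0, -1.0, 0.5), (1.0, 1.0, 0.5)]
d_min = min_distance(link_a, link_b)
```

A zoom-in search like this can settle on a local minimum when two curved links have several near-approach points, which is one reason a closed-form or carefully initialized method is preferable in practice.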

Validation and refinement of the geometric proximity estimation framework occur within a Unity-based simulation environment. This platform enables the systematic testing of robotic arm configurations, facilitating the assessment of minimum distance calculations under diverse kinematic conditions. The simulation allows for manipulation of arm joint angles and link lengths, generating a comprehensive dataset for evaluating the accuracy and computational efficiency of the Bézier-based collision detection method. Performance metrics, including calculation time and collision resolution rate, are logged and analyzed to iteratively refine the geometric algorithms and ensure robustness across a wide range of arm poses and configurations. This simulated environment provides a controlled and repeatable testing ground, critical for verifying the framework’s reliability prior to deployment on physical robotic systems.

Predictive Collision Avoidance with Deep Learning

A Deep Neural Network (DNN) is employed to forecast the minimum distances between robotic arms in order to facilitate proactive collision avoidance. This predictive capability is achieved by training the DNN on kinematic data representing arm configurations and movements. The network learns to map input joint angles and velocities to an output representing the closest distance between any two arm links. By continuously predicting these minimum distances, the system can anticipate potential collisions before they occur, allowing for timely adjustments to robot trajectories and preventing physical contact. This approach differs from reactive collision avoidance, which responds to collisions as they begin to happen, and allows for smoother, more efficient robot operation.
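
The proactive part of this loop can be sketched as a threshold check on the predicted distance, using the 0.2 m warning level given in the framework figure caption. The predictor here is a toy stand-in, not the paper's network; `monitor`, `toy_predictor`, and the sample joint values are hypothetical.

```python
from typing import Callable, Iterable, List, Sequence

WARNING_THRESHOLD_M = 0.2  # warning level from the figure caption, in meters


def monitor(predict_d_min: Callable[[Sequence[float]], float],
            configurations: Iterable[Sequence[float]],
            threshold: float = WARNING_THRESHOLD_M) -> List[int]:
    """Return the indices of configurations whose predicted d_min is too small."""
    flagged = []
    for index, joints in enumerate(configurations):
        if predict_d_min(joints) < threshold:
            flagged.append(index)  # a real system would raise the warning here
    return flagged


def toy_predictor(joints: Sequence[float]) -> float:
    """Hypothetical stand-in for the trained DNN (NOT the paper's model)."""
    return abs(joints[0] - joints[1])


stream = [(0.0, 1.0), (0.5, 0.6), (0.3, 0.25), (1.0, 0.2)]
flagged = monitor(toy_predictor, stream)  # second and third samples are flagged
```

Keeping the predictor behind a plain callable means the same monitoring logic works whether the distance comes from a neural network or from an analytical model.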

The Charbonnier Loss Function was implemented to improve the robustness of the Deep Neural Network (DNN) to outlier data generated during complex robotic arm movements. Unlike the squared error loss, the Charbonnier loss, defined as [latex]L(y, \hat{y}) = \sqrt{(y - \hat{y})^2 + \epsilon^2}[/latex], where [latex]\epsilon[/latex] is a small constant, exhibits linear behavior for large errors and quadratic behavior for small errors. This characteristic reduces the influence of significant outliers, which result from rapid or unusual arm configurations, on the overall loss calculation and subsequent weight updates, thereby stabilizing training and enhancing prediction accuracy for typical operating conditions.
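
A minimal sketch of the loss as defined above, compared with the squared error on a made-up set of residuals. The default `eps` and the sample errors are illustrative assumptions; the article does not report the value used in training.

```python
import math
from typing import Sequence


def charbonnier(y: float, y_hat: float, eps: float = 1e-3) -> float:
    """Charbonnier loss for one sample: sqrt((y - y_hat)^2 + eps^2)."""
    return math.sqrt((y - y_hat) ** 2 + eps ** 2)


def mean_charbonnier(targets: Sequence[float], predictions: Sequence[float],
                     eps: float = 1e-3) -> float:
    """Average Charbonnier loss over a batch."""
    if not targets or len(targets) != len(predictions):
        raise ValueError("targets and predictions must be non-empty and equal length")
    total = sum(charbonnier(y, p, eps) for y, p in zip(targets, predictions))
    return total / len(targets)


# Made-up residuals; the last one plays the role of an outlier.
errors = [0.01, 0.02, 5.0]
squared = [e ** 2 for e in errors]               # outlier contributes 25.0
robust = [charbonnier(e, 0.0) for e in errors]   # outlier contributes about 5.0
```

For the outlier, the squared error grows with the square of the residual while the Charbonnier term grows roughly in proportion to it, so a single bad sample pulls the gradient far less.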

The Deep Neural Network (DNN) utilized for collision prediction was optimized using the AdamW optimizer and a Cosine Annealing Scheduler. AdamW incorporates weight decay regularization to prevent overfitting and improve generalization performance. The Cosine Annealing Scheduler adjusts the learning rate during training, starting with a higher rate and gradually decreasing it following a cosine function; this technique facilitates convergence to a more stable and accurate solution. This optimization process yielded a coefficient of determination ([latex]R^2[/latex]) of 0.85, indicating that 85% of the variance in minimum distances between robotic arms is explained by the DNN’s predictions.
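
The cosine schedule described here has a simple closed form, sketched below without warm restarts. The learning rates and step counts are placeholder assumptions, since the article does not state the training hyperparameters.

```python
import math


def cosine_annealing_lr(step: int, total_steps: int,
                        lr_max: float, lr_min: float = 0.0) -> float:
    """Learning rate that decays from lr_max to lr_min along half a cosine."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    step = min(max(step, 0), total_steps)  # clamp to the schedule's range
    cos_term = math.cos(math.pi * step / total_steps)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + cos_term)


# Placeholder hyperparameters (assumed, not from the paper).
schedule = [cosine_annealing_lr(s, 100, lr_max=1e-3, lr_min=1e-5)
            for s in range(101)]
```

The rate changes slowly at the start and end of training and fastest in the middle, which gives the optimizer large early steps and a gentle landing.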

The implemented deep learning model for real-time collision prediction achieved a Mean Absolute Error (MAE) of 282.2 mm. This metric quantifies the average magnitude of the difference between the predicted minimum distances between robotic arm links and the actual observed minimum distances during testing. A lower MAE indicates higher predictive accuracy; in this case, the model’s predictions, on average, deviate from the ground truth by approximately 282.2 millimeters. This value serves as a key performance indicator for assessing the model’s ability to reliably estimate collision risk in dynamic robotic environments.
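
For reference, both reported metrics (MAE and the coefficient of determination) can be computed in a few lines. The sample values below are made up to show the arithmetic and are unrelated to the paper's test set.

```python
from typing import Sequence


def mean_absolute_error(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Average absolute difference between ground truth and prediction."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0.0:
        raise ValueError("R^2 is undefined when all targets are identical")
    return 1.0 - ss_res / ss_tot


# Toy distances in arbitrary units (illustrative only).
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.5, 4.0]
mae = mean_absolute_error(actual, predicted)
r2 = r_squared(actual, predicted)
```

MAE is expressed in the same units as the distances, whereas R² is unitless and depends on how widely the true distances vary, so the two numbers are best read together.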

Error analysis of the deep learning-based collision prediction system shows how the prediction errors are distributed. Approximately 68% of the errors fall within the ±1σ range, that is, within one standard deviation of the mean error, which is the share expected for a roughly normal error distribution. This concentration indicates that the model’s predictions deviate from the observed minimum distances between robotic arms in a consistent, predictable way, supporting its use for proactive collision avoidance in robotic applications.
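
The ±1σ share can be checked directly on any set of prediction errors. The sketch below assumes the band is centered on the mean error and uses the population standard deviation; the error values are made up.

```python
import statistics
from typing import Sequence


def fraction_within_one_sigma(errors: Sequence[float]) -> float:
    """Share of errors lying within one standard deviation of the mean error."""
    if not errors:
        raise ValueError("errors must be non-empty")
    mean_error = statistics.fmean(errors)
    sigma = statistics.pstdev(errors)  # population standard deviation
    inside = sum(1 for e in errors if abs(e - mean_error) <= sigma)
    return inside / len(errors)


# Made-up signed errors (prediction minus ground truth).
sample_errors = [-2.0, -1.0, 0.0, 1.0, 2.0]
share = fraction_within_one_sigma(sample_errors)
```

A share near 68% is what a normal distribution produces by construction, so this figure describes the shape of the error distribution more than it measures accuracy; the width of σ itself is the more informative quantity.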

![Collision simulation using ten robot configurations reveals that the neural network’s predicted minimum distances [latex]\psi_p[/latex] closely align with experimentally measured [latex]\psi_e[/latex] and analytically modeled [latex]\psi_t[/latex] distances.](https://arxiv.org/html/2601.15459v1/images/Unity-simulation-results/fig10.png)

System Integration and Validation: A New Benchmark in Collaborative Robotics

The robotic system’s capacity for dynamic, collaborative work hinges on precise spatial awareness, achieved through an integrated optical tracking system. Utilizing infrared cameras and reflective markers affixed to each robotic arm, the NDI Optical Tracking System continuously captures real-time, three-dimensional position and orientation data. This stream of kinematic information isn’t simply displayed; it forms the foundational input layer for a Deep Neural Network (DNN). The DNN processes this data, enabling the system to understand not only where the arms are, but also to predict their trajectories and potential collisions. This constant feedback loop, driven by the NDI system, allows for adaptive planning and ensures the robots can respond to changing conditions and work safely in close proximity – a crucial element for complex assembly tasks and human-robot collaboration.

Accurate robotic control hinges on a precise understanding of how a robot’s joints move and interact within its workspace, a concept formalized through kinematic modeling. This process establishes a mathematical framework defining the robot arm’s possible configurations and trajectories, essentially creating a digital twin of its physical mechanics. By meticulously mapping joint angles to end-effector position and orientation, researchers can predict the arm’s motion with high fidelity. This predictive capability isn’t merely about control; it’s foundational to the prediction system’s validity, as any discrepancies between the model and actual movement would introduce errors into the DNN’s learning process. Consequently, robust kinematic modeling serves as a critical verification step, ensuring the system’s predictions align with the robot’s physical limitations and capabilities, and ultimately guaranteeing safe and reliable operation.
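
The mapping from joint angles to link positions can be illustrated with forward kinematics for a planar serial arm. This is a deliberately simplified two-dimensional sketch; the surgical arms in the paper move in three dimensions, and the function name, angles, and link lengths here are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def forward_kinematics(joint_angles: Sequence[float],
                       link_lengths: Sequence[float]) -> List[Tuple[float, float]]:
    """Return joint positions of a planar serial arm, base first, tip last."""
    if len(joint_angles) != len(link_lengths):
        raise ValueError("one angle is required per link")
    x, y, heading = 0.0, 0.0, 0.0
    positions = [(x, y)]  # the base sits at the origin
    for theta, length in zip(joint_angles, link_lengths):
        heading += theta  # each joint angle is relative to the previous link
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


# Two unit-length links: first joint points up, second bends back to horizontal.
pose = forward_kinematics([math.pi / 2, -math.pi / 2], [1.0, 1.0])
elbow, tip = pose[1], pose[2]
```

Once every joint position is known, each link's geometry can be placed in the workspace, which is the input a distance computation between arms needs.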

The convergence of real-time optical tracking, precise kinematic modeling, and deep neural networks establishes a new benchmark in multi-arm robotic systems. This integration isn’t simply about combining technologies; it fundamentally alters the potential for collaborative automation by dramatically enhancing both safety and efficiency. Through continuous monitoring and predictive capabilities, the system anticipates and mitigates potential collisions, allowing robotic arms to operate in closer proximity and at higher speeds. This unlocks applications previously deemed impractical – complex assembly tasks, intricate manipulation of delicate objects, and seamless human-robot collaboration – paving the way for more adaptable, productive, and intelligent automated workflows across diverse industries.

The research demonstrates a holistic approach to a complex problem – ensuring safe interaction between multiple robotic arms. This mirrors the interconnectedness of systems, where localized solutions often fall short. As Henri Poincaré stated, “It is through science that we arrive at truth, but it is through art that we express it.” The framework’s integration of analytical modeling, simulation, and deep learning isn’t simply about achieving accurate collision detection; it’s about crafting an elegant system where each component reinforces the others. Just as a resilient organism relies on clear boundaries and integrated functions, this research emphasizes that understanding the whole system, and not just isolated elements, is crucial for robust performance and reliable proximity estimation in multi-arm robotic surgery.

What Lies Ahead?

The pursuit of robust collision detection in multi-arm robotic systems reveals, perhaps predictably, that the challenge isn’t merely one of computational speed or sensor fidelity. It’s a question of defining ‘collision’ itself. This work, while demonstrating a promising integration of analytical and learned models, implicitly optimizes for geometrical avoidance. But surgical contexts, and indeed any complex manipulation task, demand a more nuanced understanding of proximity – of potential interference with delicate tissues, of the energetic consequences of even minor contact. What constitutes a ‘safe’ state isn’t a purely spatial calculation.

Future iterations must therefore grapple with the ambiguities inherent in the task. Simulating physical interaction, even with high fidelity, remains an approximation. The true measure of success won’t be minimizing false positives, but minimizing undetected consequential contacts. This necessitates a shift in focus – from predicting where collisions will occur, to estimating the cost of those that inevitably do.

Ultimately, the elegance of a solution will reside not in its complexity, but in its parsimony. Simplicity is not minimalism; it’s the discipline of distinguishing the essential from the accidental. The field should strive for systems that don’t merely react to imminent danger, but anticipate and accommodate the inherent uncertainty of physical interaction – systems that, like living organisms, exhibit resilience rather than brittle perfection.

Original article: https://arxiv.org/pdf/2601.15459.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 12:11