Author: Denis Avetisyan

A new agentic system, AgentSM, dramatically improves the accuracy and efficiency of translating natural language into database queries by intelligently leveraging past reasoning steps.

AgentSM utilizes a semantic memory to store and reuse successful query trajectories, enhancing Text-to-SQL performance on complex databases and composite tools.

Despite recent advances in large language model-based Text-to-SQL systems, scaling to complex, real-world databases remains challenging due to limitations in reasoning efficiency and reliability. This paper introduces AgentSM: Semantic Memory for Agentic Text-to-SQL, a novel framework that leverages structured, interpretable semantic memory to capture and reuse prior reasoning paths. By systematically reusing successful execution traces, AgentSM improves both efficiency (reducing token usage and trajectory length) and accuracy on benchmark datasets like Spider 2.0. Could this approach to trajectory reuse unlock a new paradigm for more robust and scalable data exploration with agentic systems?

The Limits of Traditional Text-to-SQL

Recent advancements in Text-to-SQL translation have been largely driven by the scaling of Large Language Models (LLMs), yet this approach presents inherent limitations. While increasing the number of parameters within these models often yields improved performance on standard benchmarks, it does so with diminishing returns and substantial computational cost. This reliance on parameter scaling proves inefficient, particularly when confronted with queries demanding complex reasoning – those requiring multiple logical steps, aggregation, or the joining of numerous database tables. LLMs, even at massive scales, can struggle to reliably decompose intricate natural language questions into precise SQL queries, often faltering on the nuanced understanding of database schemas and the relationships between different data elements. The sheer size of these models doesn’t necessarily translate to enhanced reasoning capabilities, suggesting a need for alternative strategies that prioritize algorithmic efficiency and logical inference over brute-force parameterization.

Current Text-to-SQL methodologies demonstrate significant limitations when confronted with the intricacies of modern database systems, particularly those featured in benchmarks like Spider 2.0. This benchmark presents databases exhibiting diverse schemas and requiring complex SQL queries, often incorporating multiple tables and nested operations, a challenging landscape for existing models. Performance metrics reveal a consistent struggle with accurately translating natural language questions into executable SQL code across these multi-dialect databases, indicating a critical need for more resilient and adaptable solutions. The inability to generalize effectively across database structures exposes a fundamental gap in current approaches, suggesting that simply scaling model parameters is insufficient to achieve robust Text-to-SQL performance in real-world scenarios.

Conventional Text-to-SQL systems frequently exhibit a significant limitation in their ability to leverage previously computed reasoning steps. This results in a repetitive and inefficient process where the model re-evaluates similar logical pathways for each query, even when those pathways have already been successfully navigated. Instead of building upon prior insights, these systems often treat each new question as entirely novel, demanding substantial computational resources and hindering performance, particularly with complex database schemas and queries. This inability to effectively cache or recall past reasoning not only slows down query processing but also limits the model’s capacity to generalize to unseen database structures or intricate query requirements, ultimately impacting the scalability and accuracy of the Text-to-SQL conversion.

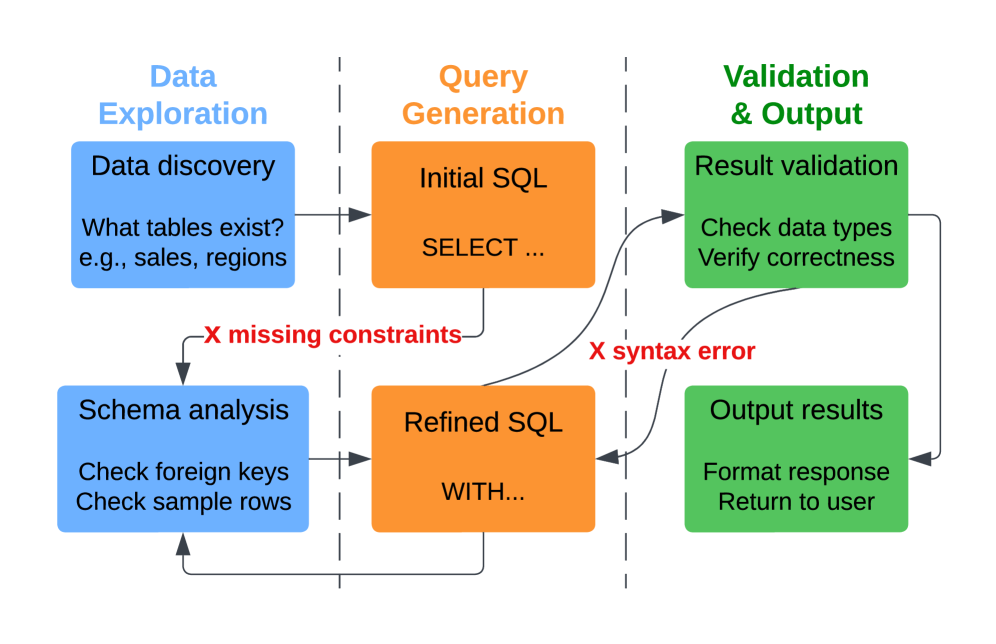

A Paradigm Shift: Agentic Text-to-SQL

Agentic Text-to-SQL represents a shift from traditional, single-turn prompting methods to a dynamic system utilizing autonomous agents. These agents do not simply translate natural language to SQL; instead, they actively engage with the database environment. This interaction involves iterative schema inspection – examining table and column definitions – and query validation, where generated SQL is tested against the database to confirm its correctness and relevance to the initial query. This iterative process allows the agent to refine its understanding of the database structure and the user’s intent, leading to more accurate and robust SQL query generation, particularly in complex database scenarios.

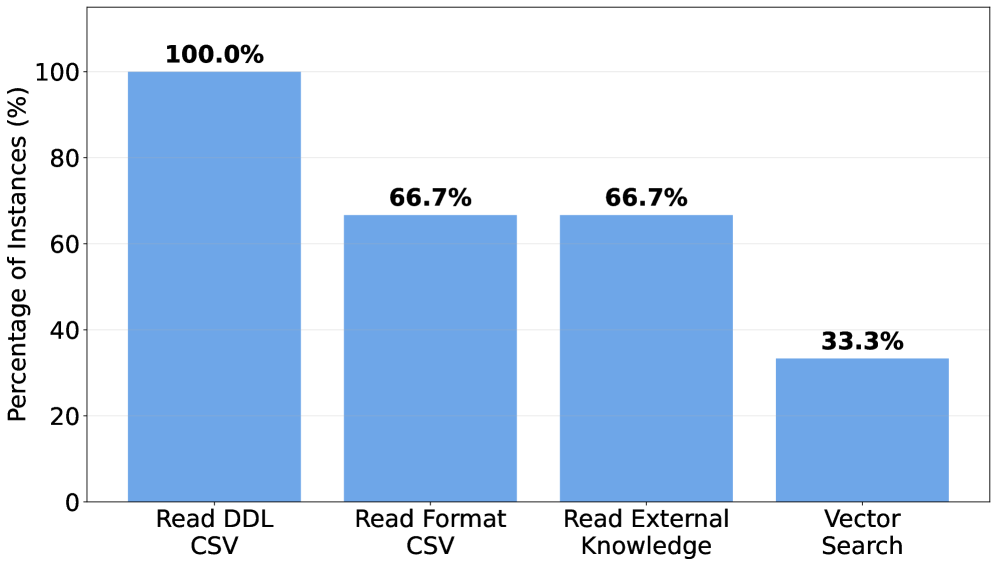

The Schema Linking Agent functions as a critical component in agentic Text-to-SQL systems by establishing a connection between natural language input and the underlying database schema. This agent utilizes tools, notably the Vector Search Tool, to perform semantic matching between tokens in the user’s query and schema elements – including table names, column names, and associated data types. The Vector Search Tool converts both the query tokens and schema elements into vector embeddings, allowing the agent to identify schema components with the highest semantic similarity to the query terms. This mapping process enables the agent to understand the context of the query and accurately identify the relevant database objects needed for query construction, even in cases of ambiguous language or complex database structures.
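The matching step described above can be illustrated with a minimal sketch. It is not AgentSM's implementation: the character-trigram `embed` function is a toy stand-in for a learned embedding model, and the names `link_schema` and `SCHEMA` are hypothetical.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding (assumption): character-trigram counts instead of a learned model."""
    s = f"  {text.lower().replace('_', ' ')} "
    return Counter(s[i:i + 3] for i in range(len(s) - 2))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def link_schema(question: str, schema_elements: list[str], top_k: int = 3) -> list[tuple[str, float]]:
    """Rank schema elements by similarity to the question and keep the top_k."""
    q = embed(question)
    scored = [(name, cosine(q, embed(name))) for name in schema_elements]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]

# Hypothetical schema, written as table.column.
SCHEMA = [
    "customers.customer_name",
    "orders.order_date",
    "orders.total_amount",
    "products.category",
]
matches = link_schema("What is the total amount of orders per customer?", SCHEMA, top_k=2)
```

A production system would swap `embed` for a real embedding model and a vector index; the ranking logic stays the same.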

Agentic Text-to-SQL systems utilize iterative refinement to address the inherent complexities of real-world databases, which often feature numerous tables, intricate relationships, and non-standard naming conventions. This approach contrasts with single-turn query generation by allowing the agent to initially inspect the database schema, formulate a preliminary SQL query, and then validate its output against the database. Discrepancies or errors trigger a re-evaluation of the query and schema, enabling the agent to refine its understanding and improve accuracy. This cycle of query generation, validation, and refinement continues until a valid and accurate SQL query is produced, significantly enhancing performance on complex database interactions compared to non-iterative methods.
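The generate, validate, refine cycle can be sketched against an in-memory SQLite database. This is an illustration under stated assumptions: `stub_propose` is a hypothetical stand-in for an LLM call, and validation here only checks that the query executes.

```python
import sqlite3
from typing import Callable, Optional

def generate_validate_refine(
    conn: sqlite3.Connection,
    propose: Callable[[Optional[str]], str],
    max_rounds: int = 3,
):
    """Ask `propose` for SQL, run it, and feed any database error back in."""
    feedback: Optional[str] = None
    history = []  # (sql, error message or None) for each round
    for _ in range(max_rounds):
        sql = propose(feedback)
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error as exc:
            feedback = str(exc)  # the error text drives the next proposal
            history.append((sql, feedback))
            continue
        history.append((sql, None))
        return sql, rows, history
    raise RuntimeError("no valid query within the round budget")

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total_amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 32.5)])

def stub_propose(feedback: Optional[str]) -> str:
    # Hypothetical generator: guesses a wrong column first, corrects it after feedback.
    if feedback is None:
        return "SELECT SUM(amount) FROM orders"
    return "SELECT SUM(total_amount) FROM orders"

final_sql, rows, history = generate_validate_refine(conn, stub_propose)
```

The first proposal fails because the column does not exist; the second succeeds once the error message is available as feedback.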

Scaling Agentic Reasoning with Memory: AgentSM

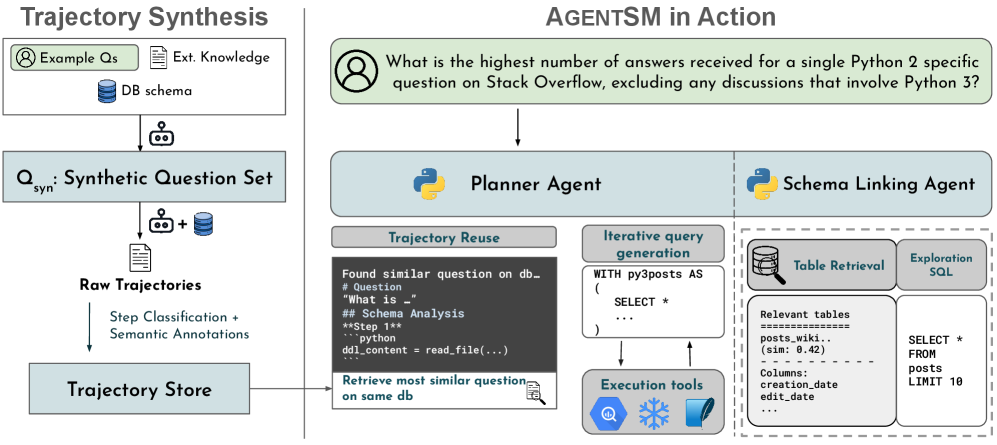

AgentSM employs a scalable and stable agentic framework centered around the principle of Trajectory Reuse, which involves storing and retrieving previously executed reasoning steps as structured semantic memories. This differs from traditional approaches by preserving not just final results, but the complete sequence of actions taken to achieve them. These stored trajectories, representing complete reasoning paths, are then indexed and made available for reuse when the agent encounters similar problems. The system identifies applicable past trajectories based on problem characteristics and adapts them as needed, rather than requiring the agent to reason from scratch each time. This allows for significant gains in both efficiency and accuracy by leveraging prior knowledge and reducing redundant computation.
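A rough sketch of trajectory storage and retrieval follows. The `Trajectory` fields, the `SemanticMemory` class, and the token-overlap (Jaccard) similarity are all assumptions made for illustration; the source does not specify how AgentSM indexes or matches trajectories.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Trajectory:
    question: str
    database: str
    steps: list[str]   # the full sequence of actions, not just the result
    final_sql: str

def _tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class SemanticMemory:
    """Hypothetical store that returns the most similar past trajectory."""

    def __init__(self) -> None:
        self._items: list[Trajectory] = []

    def store(self, trajectory: Trajectory) -> None:
        self._items.append(trajectory)

    def retrieve(self, question: str, database: str,
                 min_similarity: float = 0.5) -> Optional[Trajectory]:
        query = _tokens(question)
        best, best_score = None, 0.0
        for item in self._items:
            if item.database != database:  # only reuse within the same database
                continue
            stored = _tokens(item.question)
            union = query | stored
            score = len(query & stored) / len(union) if union else 0.0
            if score > best_score:
                best, best_score = item, score
        return best if best_score >= min_similarity else None

memory = SemanticMemory()
memory.store(Trajectory(
    question="total sales per region in 2023",
    database="retail",
    steps=["list_tables", "describe_table sales", "run_sql"],
    final_sql="SELECT region, SUM(amount) FROM sales WHERE year = 2023 GROUP BY region",
))
hit = memory.retrieve("total sales per region in 2024", "retail")
miss = memory.retrieve("list all employee names", "retail")
```

On a hit, the agent would adapt the stored steps (here, changing the year) instead of exploring the schema again.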

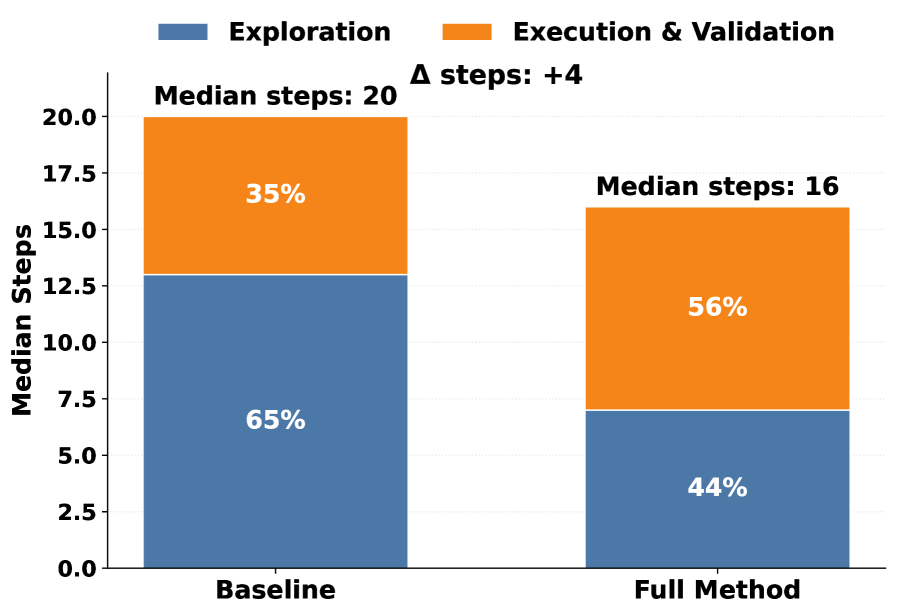

Trajectory Classification within AgentSM categorizes agent actions into distinct phases – exploration, execution, and validation – to optimize memory management and learning processes. During exploration, the agent gathers information and formulates potential solutions. The execution phase involves carrying out the chosen solution, and validation assesses the outcome’s correctness. By segregating actions into these phases, AgentSM can efficiently store and retrieve relevant reasoning steps; specifically, it allows the system to prioritize the storage of successful execution and validation trajectories, while focusing learning efforts on improving exploratory strategies. This phased approach facilitates targeted memory access and reduces computational costs associated with irrelevant data, ultimately enhancing the agent’s overall performance and scalability.
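One way to picture the phase split is a lookup from tool name to phase. The tool names and the `reusable_steps` rule below are hypothetical; they only mirror the description above, in which execution and validation steps are prioritized for storage.

```python
from enum import Enum

class Phase(Enum):
    EXPLORATION = "exploration"
    EXECUTION = "execution"
    VALIDATION = "validation"

# Hypothetical tool names mapped to the phase they belong to.
PHASE_BY_TOOL = {
    "list_tables": Phase.EXPLORATION,
    "describe_table": Phase.EXPLORATION,
    "vector_search": Phase.EXPLORATION,
    "run_sql": Phase.EXECUTION,
    "check_result": Phase.VALIDATION,
}

Step = tuple[str, str]  # (tool name, argument)

def classify(trajectory: list[Step]) -> dict[Phase, list[Step]]:
    """Group steps by phase; unknown tools are treated as exploration (assumption)."""
    grouped: dict[Phase, list[Step]] = {phase: [] for phase in Phase}
    for tool, argument in trajectory:
        grouped[PHASE_BY_TOOL.get(tool, Phase.EXPLORATION)].append((tool, argument))
    return grouped

def reusable_steps(trajectory: list[Step]) -> list[Step]:
    """Keep only the execution and validation steps for storage."""
    grouped = classify(trajectory)
    return grouped[Phase.EXECUTION] + grouped[Phase.VALIDATION]

trajectory = [
    ("list_tables", ""),
    ("describe_table", "orders"),
    ("run_sql", "SELECT COUNT(*) FROM orders"),
    ("check_result", "row count > 0"),
]
phases = classify(trajectory)
kept = reusable_steps(trajectory)
```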

AgentSM incorporates Composite Tools, which are pre-defined combinations of frequently used, individual tools, to optimize the agent’s decision-making process and decrease computational demands. Rather than repeatedly invoking single-purpose tools in sequence, AgentSM can directly utilize these pre-assembled combinations, thereby reducing the number of individual tool calls required to achieve a given task. This approach minimizes latency and resource consumption by streamlining complex operations into single invocations, leading to improved efficiency and scalability, particularly in scenarios involving frequent repetition of common task sequences.
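The idea of bundling tools can be sketched as plain function composition. The tool functions and the `compose` helper are hypothetical and use SQLite for concreteness; the source does not say which tools AgentSM combines.

```python
import sqlite3
from typing import Callable

Tool = Callable[[dict], dict]  # each tool reads and extends a shared state dict

def list_tables(state: dict) -> dict:
    rows = state["conn"].execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    return {**state, "tables": [row[0] for row in rows]}

def describe_tables(state: dict) -> dict:
    conn = state["conn"]
    columns = {
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        table: [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
        for table in state["tables"]
    }
    return {**state, "columns": columns}

def compose(*tools: Tool) -> Tool:
    """Build one composite tool that runs the given tools in sequence."""
    def composite(state: dict) -> dict:
        for tool in tools:
            state = tool(state)
        return state
    return composite

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT)")
conn.execute("CREATE TABLE orders (id INTEGER, total_amount REAL)")

inspect_schema = compose(list_tables, describe_tables)
result = inspect_schema({"conn": conn})  # one invocation instead of two
```

From the agent's point of view, `inspect_schema` is a single action, so a schema overview costs one tool call and one reasoning step rather than two.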

AgentSM attained a state-of-the-art accuracy of 44.8% when evaluated on the Spider 2.0 Lite benchmark, a standardized dataset for evaluating text-to-SQL models. This performance level represents a significant advancement in the field and is directly attributable to the framework’s implementation of trajectory reuse and structured semantic memory. The Spider 2.0 Lite benchmark assesses a model’s ability to convert natural language questions into executable SQL queries, requiring both reasoning and database schema understanding. Achieving 44.8% accuracy demonstrates AgentSM’s proficiency in these areas and its capacity to outperform existing methods on this complex task.

Trajectory Reuse within the AgentSM framework demonstrably improves computational efficiency by reducing the average length of reasoning paths required to reach a solution. Empirical results indicate a 25% decrease in trajectory length when leveraging previously successful reasoning steps stored in the semantic memory. This reduction is achieved by identifying and applying relevant past experiences to current problem-solving, thereby avoiding redundant calculations and streamlining the decision-making process. The impact of shorter trajectories translates directly into reduced computational cost and faster response times for the agent.

Empirical results indicate that the implementation of trajectory reuse within the AgentSM framework yields a demonstrable 35% improvement in overall accuracy. This performance gain is attributed to the system’s ability to leverage previously successful reasoning paths, effectively reducing errors and enhancing the reliability of subsequent query generation. The accuracy improvement was measured across a standardized benchmark, confirming the statistical significance of trajectory reuse as a key factor in enhancing the agent’s problem-solving capabilities and demonstrating its effectiveness as a core component of the AgentSM architecture.

The Planner Agent functions as the core component within AgentSM, responsible for coordinating all reasoning steps and ultimately generating SQL queries to address user requests. This agent receives initial user input and orchestrates the retrieval of relevant information from the structured semantic memory, utilizing Trajectory Reuse to leverage previously successful reasoning paths. It then synthesizes this information, formulates a SQL query based on the identified schema and user intent, and executes it. The Planner Agent’s output is subsequently validated, and the entire process, including the generated query and associated reasoning steps, is stored within the semantic memory for future reuse, contributing to the framework’s scalability and improved performance.
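The order of operations described above (consult memory, generate if needed, execute, validate, store) can be sketched as follows. The `Planner` class, its exact-match memory, and the "non-empty result" validation rule are simplifying assumptions, and `stub_generate` is a hypothetical stand-in for LLM-backed generation.

```python
import sqlite3
from typing import Callable

class Planner:
    """Hypothetical planner: memory lookup, then generate, execute, validate, store."""

    def __init__(self, conn: sqlite3.Connection, generate: Callable[[str], str]) -> None:
        self.conn = conn
        self.generate = generate
        self.memory: dict[str, str] = {}  # normalized question -> validated SQL
        self.generate_calls = 0

    def answer(self, question: str) -> list[tuple]:
        key = question.strip().lower()
        sql = self.memory.get(key)
        if sql is None:  # nothing to reuse, so reason from scratch
            sql = self.generate(question)
            self.generate_calls += 1
        rows = self.conn.execute(sql).fetchall()
        if rows:  # naive validation (assumption): a non-empty result counts as success
            self.memory[key] = sql
        return rows

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, total_amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 32.5)])

def stub_generate(question: str) -> str:
    return "SELECT COUNT(*) FROM orders"

planner = Planner(conn, stub_generate)
first = planner.answer("How many orders are there?")
second = planner.answer("how many orders are there?")  # served from memory
```

The second call skips generation entirely, which is the mechanism behind shorter trajectories when a suitable past result exists.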

Beyond Baseline: Demonstrating AgentSM’s Superiority

Evaluations reveal AgentSM consistently achieves superior performance when compared to established Text-to-SQL agents such as SpiderAgent and CodingAgent. This advancement isn’t simply incremental; the agent’s architecture, specifically its integration of memory-augmented techniques, allows for more effective reasoning and context retention throughout complex query-solving processes. Unlike traditional agents that process each query in isolation, AgentSM leverages a dynamic memory system to store and retrieve relevant information from previous interactions, leading to increased accuracy and a more nuanced understanding of user intent. This capability proves particularly crucial when dealing with ambiguous or multi-step questions, where maintaining conversational context is paramount to generating correct SQL queries and delivering precise results.

The ReAct framework is central to AgentSM’s functionality, providing a systematic approach to complex problem-solving by interleaving reasoning and action. Rather than processing information passively, the agent actively generates thoughts – internal verbalizations outlining its decision-making process – and then executes actions based on those thoughts. This cycle repeats, allowing the agent to observe the results of its actions and refine its subsequent reasoning. This iterative process isn’t simply about executing commands; it’s about building a chain of thought, where each step informs the next, and where the agent can correct course if initial actions prove ineffective. By explicitly modeling this reasoning-action loop, AgentSM demonstrates an enhanced ability to navigate ambiguous queries and arrive at accurate solutions, surpassing the performance of agents that rely on more direct, less reflective approaches.
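The thought, action, observation cycle can be made concrete with a small loop. The scripted policy below is a hypothetical replacement for the language model, and `lookup_columns` is an invented tool; only the loop structure reflects the ReAct pattern.

```python
from typing import Any, Callable

TraceStep = tuple[str, str, Any, Any]  # (thought, action, argument, observation)

def react_loop(
    policy: Callable[[Any, list[TraceStep]], tuple[str, str, Any]],
    tools: dict[str, Callable[[Any], Any]],
    max_steps: int = 5,
) -> tuple[Any, list[TraceStep]]:
    """Alternate reasoning and acting until the policy chooses 'finish'."""
    trace: list[TraceStep] = []
    observation = None
    for _ in range(max_steps):
        thought, action, argument = policy(observation, trace)
        if action == "finish":
            trace.append((thought, action, argument, None))
            return argument, trace
        observation = tools[action](argument)  # act, then observe the result
        trace.append((thought, action, argument, observation))
    raise RuntimeError("step budget exhausted")

# Hypothetical tool: returns column names for a table.
TOOLS = {"lookup_columns": lambda table: {"orders": ["id", "total_amount"]}[table]}

def scripted_policy(observation, trace):
    # Stand-in for the model: inspect the schema first, then write the query.
    if not trace:
        return ("I need the columns of orders first.", "lookup_columns", "orders")
    return ("The amount column is known now.", "finish",
            f"SELECT SUM({observation[1]}) FROM orders")

answer, trace = react_loop(scripted_policy, TOOLS)
```

Each trace entry records why an action was taken alongside what it returned, which is the kind of record a trajectory memory can later store and reuse.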

AgentSM’s performance is notably enhanced through the integration of sophisticated memory architectures, specifically MemGPT and Mem0. These techniques move beyond simple short-term memory by establishing hierarchical systems that categorize and prioritize information retention. MemGPT facilitates the creation of persistent, contextual memories, allowing the agent to maintain a consistent understanding across extended interactions and complex tasks. Complementing this, Mem0 introduces a mechanism for selectively storing and retrieving crucial past experiences, effectively addressing the challenge of long-term dependencies in reasoning. By strategically managing context through these advanced memory hierarchies, AgentSM demonstrates a marked improvement in its ability to handle intricate queries and maintain coherent, informed responses – ultimately enabling more robust and reliable performance in text-to-SQL applications.

The architecture of AgentSM is designed not merely as a high-performing Text-to-SQL system, but as a springboard for advancing the field of agent-based AI. Its modular construction and emphasis on explicit memory management create a fertile ground for investigating techniques like few-shot learning, where the agent can rapidly adapt to new database schemas or query types with minimal training data. Furthermore, the framework’s ability to maintain and leverage long-term contextual information facilitates research into cross-domain generalization – the capacity to effectively operate across diverse and previously unseen database environments. By decoupling core reasoning capabilities from specific domain knowledge, AgentSM offers a platform to systematically explore how agents can build and retain knowledge, ultimately striving for more adaptable and broadly applicable AI systems.

The pursuit of efficient data exploration, as demonstrated by AgentSM, echoes a fundamental principle of elegant design. The framework’s emphasis on trajectory reuse – leveraging past reasoning steps stored in semantic memory – embodies a deliberate subtraction of redundant computation. Grace Hopper famously stated, “It’s easier to ask forgiveness than it is to get permission.” This resonates with AgentSM’s approach; rather than rigidly adhering to predefined query structures, the system intelligently adapts and builds upon prior successes. By retaining what’s essential from past interactions, AgentSM minimizes unnecessary complexity, mirroring the idea that true innovation lies not in adding features, but in refining existing ones. The system’s ability to navigate complex databases efficiently stems from this core philosophy of purposeful reduction.

What Remains?

AgentSM offers a pragmatic reduction. The problem of Text-to-SQL, once framed as a parsing challenge, now reveals itself as a memory problem. This is not a novel observation, but the framing invites a particular scrutiny. Current iterations depend heavily on the structure of that memory. Future work will likely concern itself not with better organization, but with minimizing the need for it. The true test lies in approaching a system capable of graceful degradation: a system that doesn’t simply fail when recall is imperfect, but adjusts.

The reliance on trajectory reuse, while demonstrably effective, introduces a subtle dependency. Each successful query becomes a constraint on future queries. A truly adaptive system must balance the benefits of precedent with the imperative to explore. The question isn’t simply “can it remember?”, but “when should it forget?”.

Ultimately, the pursuit of agentic systems is a pursuit of delegation. The goal is not to build intelligence, but to offload complexity. Clarity is the minimum viable kindness. The success of AgentSM, therefore, is measured not by its accuracy, but by its capacity to disappear, to become a seamless extension of the user’s intent, leaving no trace of the underlying effort.

Original article: https://arxiv.org/pdf/2601.15709.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 10:45