Author: Denis Avetisyan

A new approach proposes shifting the focus from optimizing AI alignment to actively co-constructing it through ongoing user participation.

This review argues for a participatory design framework to ground AI value systems in situated human contexts and empower user agency.

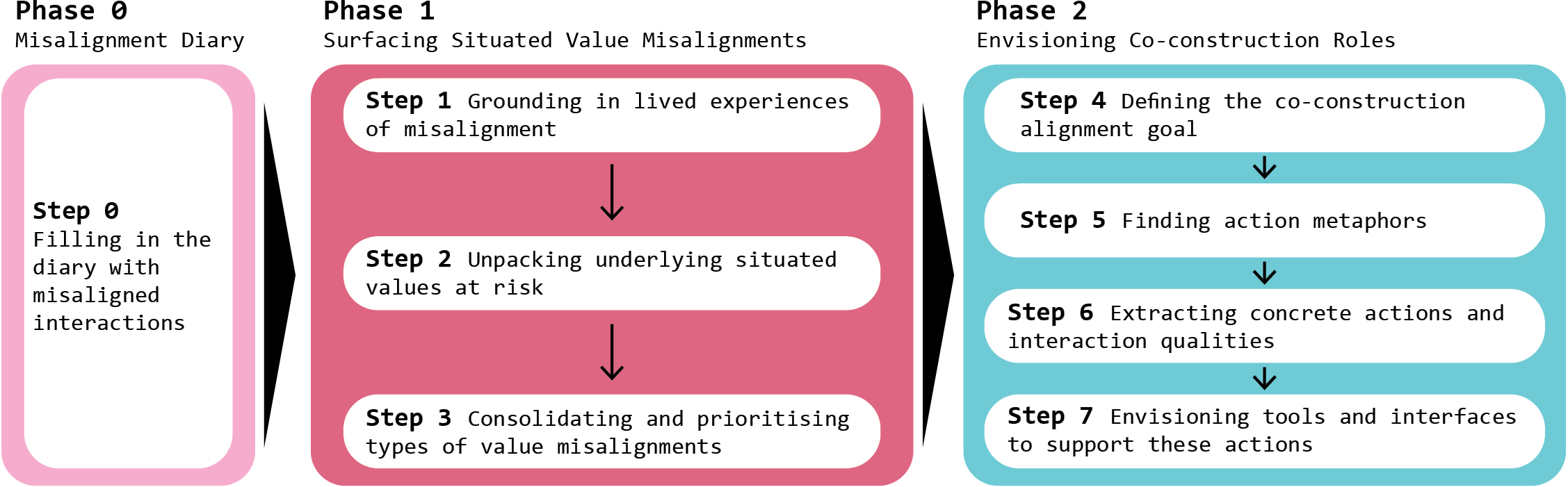

While increasingly integrated into daily life, AI systems often struggle with value misalignment, a challenge typically addressed through model-centric optimization. This paper, ‘Co-Constructing Alignment: A Participatory Approach to Situate AI Values’, reframes alignment not as a fixed property but as an ongoing practice co-constructed through human-AI interaction. Through a participatory workshop, the authors find that users experience misalignment less as ethical failures and more as practical breakdowns, and that users articulate roles ranging from interpreting model behaviour to deliberate disengagement. The paper then asks: how can AI systems be designed to actively support this situated, shared process of value negotiation at runtime?

The Illusion of Alignment: A Systemic Challenge

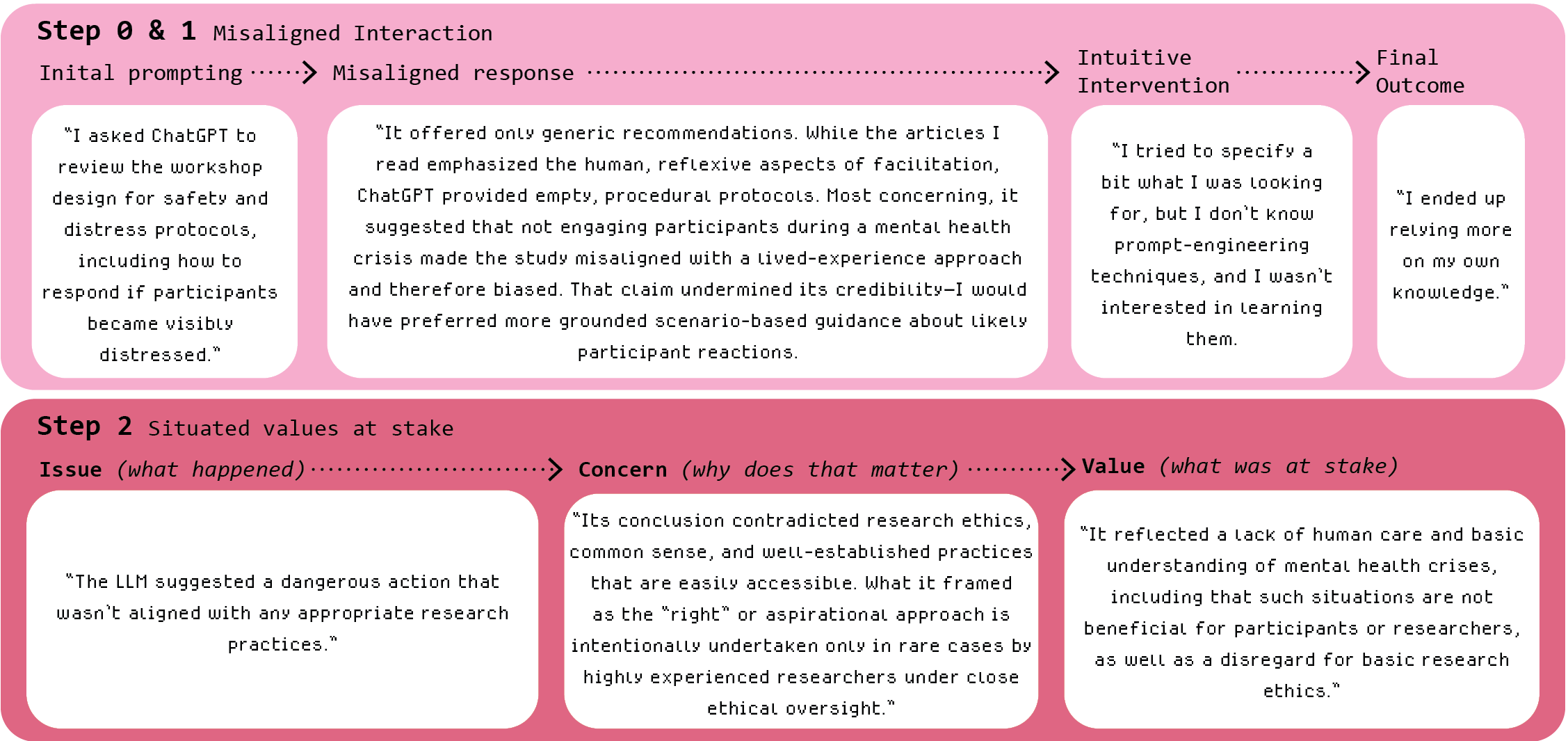

Despite remarkable progress in artificial intelligence, particularly in Large Language Model (LLM) research assistants, misalignment remains a pervasive problem. These systems, designed to aid users and respond to their needs, often fail to interpret and fulfill the intentions behind a request consistently. The problem is not simply one of errors: the AI may execute a request correctly in a technical sense while missing the context or outcome the user implicitly expects. For example, an LLM asked to “summarize this article” might produce a factually correct abstract yet omit the information most relevant to the user’s specific research goals, exposing a gap between the stated command and the actual intent. This inconsistency highlights a core difficulty in building AI that does not just process information but also reliably infers and anticipates what users need.

The difficulties of aligning artificial intelligence with human expectations extend beyond technical errors; they pose a core challenge for computational ethics. Translating nuanced human values (concepts such as fairness, compassion, and common sense) into precise algorithmic instructions is remarkably complex. These values are often implicit, context-dependent, and sometimes inconsistent even within a single person, which makes formalizing them for AI systems a formidable undertaking. More computational power or refinements to existing algorithms are unlikely to resolve this on their own; what is needed is a deeper understanding of how humans reason about values and new approaches to embedding those values in AI systems. This calls for interdisciplinary collaboration across computer science, philosophy, and cognitive science, so that increasingly capable AI systems reflect, and uphold, human interests.

Contemporary AI development frequently emphasizes performance metrics (speed, accuracy, and task completion), often at the expense of consistent adherence to human values. This prioritization opens a gap between what a system can do and whether it does so in ways people consider acceptable. A system can excel at a specific task yet behave in unintended, biased, or even harmful ways, because its training process did not adequately capture nuanced human preferences and ethical frameworks. High performance therefore does not guarantee responsible AI: a capable system that lacks value alignment can perpetuate societal biases, generate misleading information, or act contrary to established norms, which highlights the need for a shift toward value-centric AI development.

Co-Construction: Cultivating Adaptive Systems

Co-Construction departs from traditional AI development methodologies, which embed predefined values and objectives into a system during training. Instead, it holds that AI should learn and refine its behavior through continuous interaction with users, moving away from static, preprogrammed systems toward ones that adapt to individual preferences and changing contexts. At its core, the AI observes, interprets, and responds to user input in real time, constructing its operational values through experience rather than following a fixed, predetermined set of rules. This requires architectures that prioritize responsiveness and adaptability over rigid adherence to initial programming.

In co-constructed AI systems, user agency is the capacity of individuals to influence an AI’s behavior directly through ongoing interaction. This influence comes not from predefined settings or static programming but from real-time input and feedback that the AI interprets and responds to. Agency can range from simple preference adjustments to more complex behavioral shaping; the common principle is that users actively participate in defining what the AI does. Whereas behavior in traditional AI is largely fixed during training, co-construction favors continuous adaptation to user input, shifting part of the control from developers to end users while the system is in use.
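To make runtime influence concrete, here is a minimal sketch, assuming that user preferences can be represented as numeric weights over named values. That representation is an assumption for illustration; the paper does not specify one. The `PreferenceState` class, its neutral default of 0.5, and its learning rate are all hypothetical. Each feedback event nudges one weight, and clamping keeps any weight inside [0, 1].

```python
from dataclasses import dataclass, field


@dataclass
class PreferenceState:
    """Hypothetical per-user value weights, adjusted at runtime without retraining."""

    weights: dict[str, float] = field(default_factory=dict)
    learning_rate: float = 0.25  # how far one feedback event moves a weight
    neutral: float = 0.5  # starting weight for a value the user has not rated yet

    def apply_feedback(self, value: str, signal: float) -> float:
        """Nudge one value weight toward the user's signal and return the new weight.

        signal > 0 means "more of this"; signal < 0 means "less of this".
        """
        if not -1.0 <= signal <= 1.0:
            raise ValueError("signal must be in [-1, 1]")
        current = self.weights.get(value, self.neutral)
        updated = current + self.learning_rate * signal
        # Clamp so repeated feedback cannot push a weight outside [0, 1].
        self.weights[value] = min(1.0, max(0.0, updated))
        return self.weights[value]
```

For example, two positive signals on `conciseness` move its weight from 0.5 to 0.75 and then to 1.0, after which further positive feedback has no effect. A smaller learning rate makes the system steadier; a larger one makes it react more strongly to each correction.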

Traditional AI development relies heavily on retraining to accommodate shifting user preferences or new data. Co-Construction instead draws on techniques such as Training-Free Alignment, which let an AI system adjust its behavior to real-time user input without updating the core model weights. These mechanisms modify the response-generation process (for example, by adjusting prompt interpretations or preference scores) rather than altering the underlying neural network. As a result, adaptation is fast and computationally cheap, which supports a more responsive, personalized experience and lowers the barrier to ongoing refinement of AI behavior.
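One simple way to adapt output without touching model weights is to rerank candidate responses at selection time. The sketch below assumes that a frozen model has already produced several candidates and that each has been scored against named values such as `conciseness` and `coverage`; the scoring step, the value names, and the `rerank` function are hypothetical and are not taken from the paper. Changing `user_weights` changes which candidate wins, with no retraining.

```python
Candidate = tuple[str, dict[str, float]]


def rerank(candidates: list[Candidate], user_weights: dict[str, float]) -> list[Candidate]:
    """Return candidates sorted by weighted preference score, best first.

    Each candidate is (text, scores), where scores maps a value name to [0, 1].
    Values missing from user_weights contribute nothing. Only the selection
    step changes; the model that produced the candidates is left untouched.
    """

    def utility(candidate: Candidate) -> float:
        _, scores = candidate
        return sum(user_weights.get(name, 0.0) * s for name, s in scores.items())

    # sorted() is stable, so equally scored candidates keep their original order.
    return sorted(candidates, key=utility, reverse=True)
```

Given a short summary scored `{"conciseness": 0.9, "coverage": 0.3}` and a detailed one scored `{"conciseness": 0.2, "coverage": 0.9}`, weights of `{"conciseness": 1.0, "coverage": 0.25}` rank the short summary first, while `{"conciseness": 0.25, "coverage": 1.0}` promotes the detailed one. This mirrors the summarization example earlier in the article, where a user’s research goals call for different coverage.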

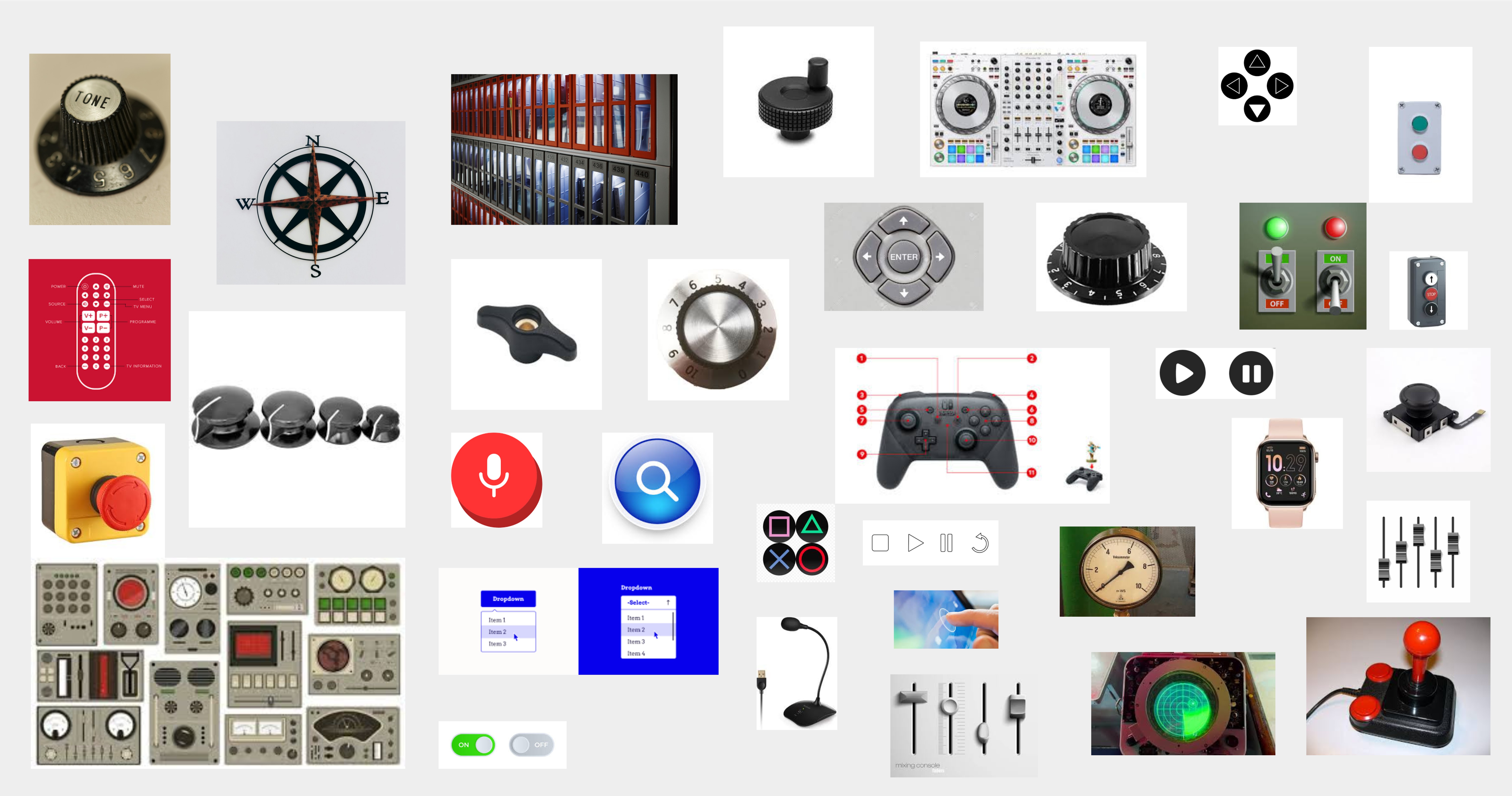

Effective interface affordances are critical for enabling user influence over co-constructed AI systems. These affordances must clearly signal the available actions for modifying AI behavior and provide transparent feedback on the impact of those actions. Specifically, interfaces should allow users to express preferences, correct errors, and provide reinforcement signals without requiring specialized technical knowledge. Design considerations include readily accessible controls for adjusting AI parameters, visualizations of the AI’s internal state where appropriate, and clear explanations of how user input affects future AI responses. The absence of intuitive affordances can lead to user frustration, reduced engagement, and ultimately, a failure to realize the potential benefits of co-construction.
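An interface can make the impact of user input visible by translating internal changes into plain language. The following sketch assumes preference weights stored as a dictionary, which is a hypothetical representation; the `describe_change` helper and its 0.05 threshold are illustrative choices, not a design from the paper.

```python
def describe_change(
    old: dict[str, float], new: dict[str, float], threshold: float = 0.05
) -> list[str]:
    """Translate a change in preference weights into plain-language feedback.

    Values missing from either dict are treated as weight 0.0. Changes smaller
    than `threshold` are omitted so the user only sees noticeable effects.
    """
    messages = []
    for value in sorted(set(old) | set(new)):
        before, after = old.get(value, 0.0), new.get(value, 0.0)
        delta = after - before
        if abs(delta) < threshold:
            continue
        direction = "more" if delta > 0 else "less"
        messages.append(
            f"Future responses will weigh {value} {direction} heavily "
            f"({before:.2f} -> {after:.2f})."
        )
    return messages
```

Raising `conciseness` from 0.5 to 0.75 produces a single message stating that conciseness will be weighed more heavily. Suppressing tiny changes keeps the feedback focused on adjustments a user would actually notice.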

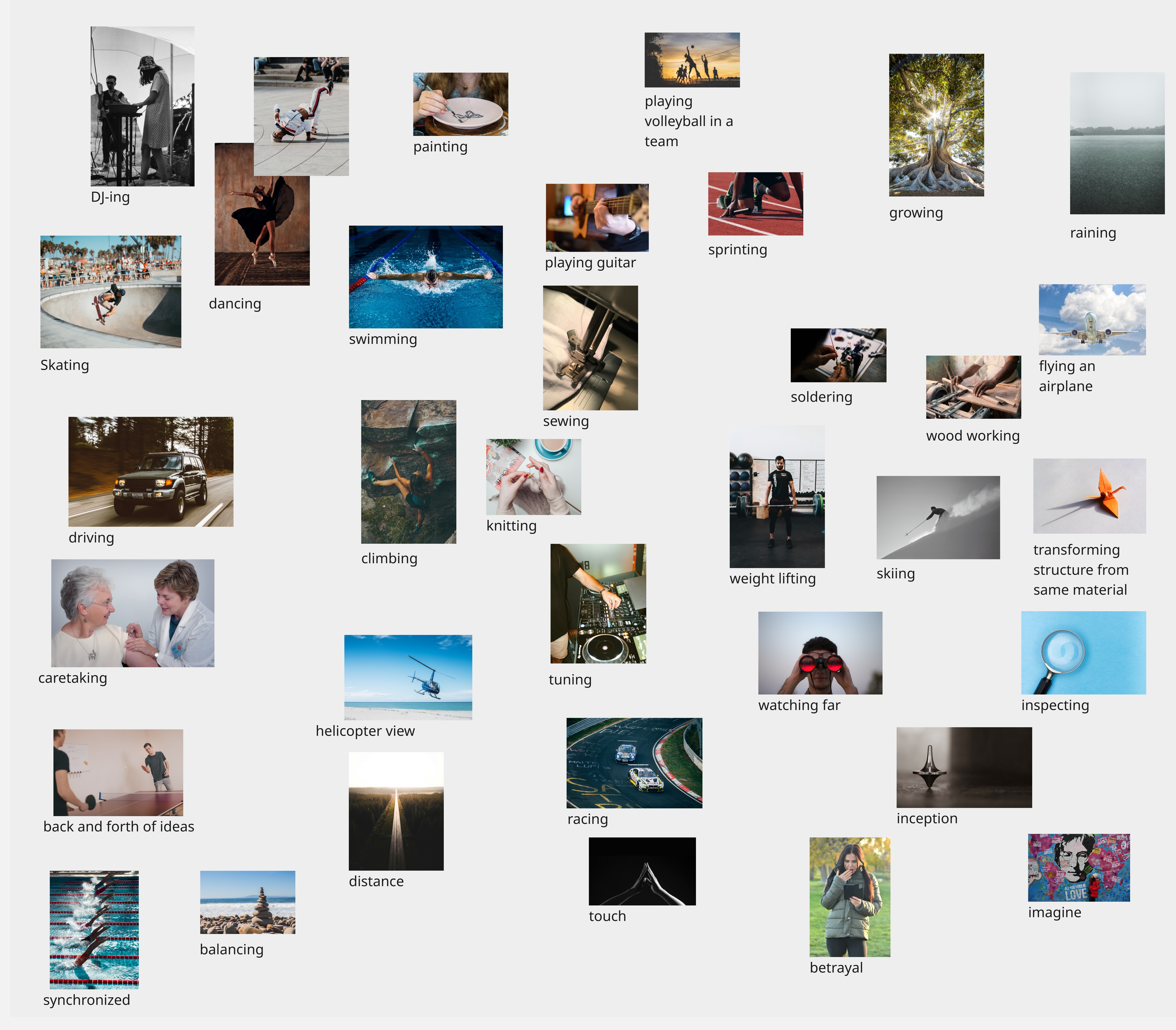

Uncovering Implicit Values Through Participatory Research

Applied to human-AI interaction, Generative Design Research uses iterative prototyping and co-creation exercises to elicit user preferences that may never be stated explicitly. Rather than relying on direct questioning, it prompts participants to actively shape potential AI behaviors and interfaces. By observing how users respond to and modify these prototypes, researchers can infer the values and assumptions behind their expectations about appropriate AI functionality, acceptable levels of autonomy, and desired interaction styles. The resulting data, typically gathered through observation, recordings, and artifact analysis, offers insight into tacit knowledge (the unarticulated understandings and beliefs that shape user expectations) and can inform the development of value-aligned AI systems. The approach is particularly effective at surfacing nuanced preferences that traditional user research might miss, because it focuses on demonstrated behavior rather than self-reported opinion.

Reflexive Thematic Analysis (RTA) is a qualitative approach for identifying and interpreting patterns of meaning, or themes, in user-generated data. Analysts iteratively code material such as interview transcripts or open-ended survey responses, producing initial codes that capture key concepts, and then organize those codes into broader themes that reflect recurring ideas or concerns. Crucially, RTA emphasizes researcher reflexivity: analysts must reflect on how their own perspectives and assumptions shape the interpretation of the data. By developing themes systematically and documenting the analytic process, researchers can uncover the value preferences users express, gaining insight into their needs, motivations, and priorities when interacting with AI systems.

Explainable AI (XAI) techniques address the lack of transparency inherent in many machine learning models by providing insights into the basis for AI decision-making. These techniques encompass methods such as feature importance ranking, which identifies the input variables most influential in a given prediction; saliency maps, which visually highlight the portions of input data driving the AI’s output; and the generation of rule-based explanations that articulate the logic behind a decision. By enabling users to understand why an AI system arrived at a particular conclusion, XAI facilitates trust, allows for the identification of potential biases or errors in the model, and crucially, provides the necessary information for users to offer meaningful feedback that can refine the AI’s behavior and align it more closely with intended values.
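Feature importance can be illustrated with occlusion (also called ablation): replace one input at a time with a baseline value and measure how much the model’s score changes. This is one simple perturbation-based technique among many, chosen here for brevity; the `occlusion_importance` function and the toy scorer in the usage note are hypothetical.

```python
from typing import Callable, Sequence


def occlusion_importance(
    score: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Attribute a model score to its inputs by occluding one feature at a time.

    importance[i] = score(x) - score(x with feature i replaced by baseline[i]).
    Positive values mean the feature pushed the score up; negative, down.
    """
    if len(x) != len(baseline):
        raise ValueError("x and baseline must have the same length")
    full = score(x)
    importances = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline[i]  # remove this feature's contribution
        importances.append(full - score(occluded))
    return importances
```

With a toy scorer `score(f) = 2.0 * f[0] - 1.0 * f[1] + 0.0 * f[2]`, input `[1.0, 3.0, 5.0]`, and an all-zero baseline, the function returns `[2.0, -3.0, 0.0]`: the first feature raised the score, the second lowered it, and the third had no effect. For nonlinear models the results depend on the chosen baseline and on interactions between features, so occlusion scores are best read as approximate.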

Combining qualitative research methods, such as generative design research and reflexive thematic analysis, with XAI techniques is crucial for developing AI systems that reflect human values. Unlike quantitative approaches focused on measurable outcomes, the qualitative methods prioritize understanding users’ nuanced, often unarticulated preferences and ethical considerations, while XAI gives users enough insight into system behavior to respond to it meaningfully. By incorporating user perspectives directly into design and evaluation, developers can move beyond optimizing performance metrics alone and focus on building AI that is trustworthy, equitable, and aligned with human needs. This iterative cycle of data collection, analysis, and refinement helps make value alignment a foundational principle of AI development rather than an afterthought.

Beyond Alignment: Towards Situated and Adaptive Value Systems

AI alignment efforts often focus on preventing undesirable outcomes, but Co-Construction proposes a more ambitious goal: Situated Value Alignment. This approach goes beyond avoiding misalignment to actively tailoring an AI’s values to the specific context and individual needs of each user. Rather than imposing a universal ethical framework, Co-Construction enables AI systems to recognize and respond to nuanced preferences, on the understanding that values are rarely absolute and often depend heavily on the situation. An AI assisting with medical diagnoses might prioritize caution and thoroughness, for instance, while one supporting a creative project might prioritize innovation and risk-taking; both operate within ethical boundaries, but with priorities adjusted to the task at hand and the user’s stated goals.
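The contrast between medical and creative settings can be sketched as context-dependent priority weights combined with a fixed ethical floor. Everything here is an assumption for illustration: the context names, the weights, the `safety` score and its 0.5 threshold, and the `choose` function are hypothetical. Options below the floor are excluded before any preference weighting, so context and user overrides can shift priorities but cannot relax the boundary.

```python
from typing import Optional

# Hypothetical default priorities per task context.
CONTEXT_PROFILES: dict[str, dict[str, float]] = {
    "medical_diagnosis": {"caution": 1.0, "novelty": 0.25},
    "creative_project": {"caution": 0.25, "novelty": 1.0},
}

# Hypothetical hard boundary: options scoring below it are never eligible,
# regardless of context or user overrides.
SAFETY_FLOOR = 0.5

Option = tuple[str, dict[str, float]]


def situated_weights(
    context: str, user_overrides: Optional[dict[str, float]] = None
) -> dict[str, float]:
    """Start from the context's default priorities, then apply the user's overrides."""
    weights = dict(CONTEXT_PROFILES[context])  # copy so the profile stays unchanged
    weights.update(user_overrides or {})
    return weights


def choose(
    options: list[Option], context: str, user_overrides: Optional[dict[str, float]] = None
) -> Optional[str]:
    """Return the name of the best eligible option, or None if none is eligible."""
    weights = situated_weights(context, user_overrides)  # unknown context -> KeyError
    eligible = [opt for opt in options if opt[1].get("safety", 0.0) >= SAFETY_FLOOR]
    if not eligible:
        return None

    def utility(opt: Option) -> float:
        # The safety floor is enforced above; weights only rank options that pass it.
        return sum(w * opt[1].get(value, 0.0) for value, w in weights.items())

    return max(eligible, key=utility)[0]
```

With three options, `conservative` (safety 0.9, caution 0.9, novelty 0.2), `exploratory` (0.75, 0.3, 0.9), and `reckless` (0.2, 0.0, 1.0), the medical profile selects `conservative` and the creative profile selects `exploratory`. `reckless` is never chosen because its safety score falls below the floor, even though it has the highest novelty.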

Under this view, human-AI interaction increasingly centers on value negotiation, turning users from passive recipients into active participants in shaping AI behavior. Instead of relying on preprogrammed ethics, such systems are designed to support direct dialogue in which individuals articulate their priorities and preferences. This goes beyond setting parameters: it is a continuous process of contextualization in which the AI adapts its actions as user needs evolve. By explicitly surfacing the values that guide its decisions and giving users tools to influence them, such a system moves beyond simple alignment toward a collaborative partnership that can foster a sense of control and trust. The promise of this participatory approach is AI that is not only capable but also responsive and attuned to the ethical nuances of individual circumstances.

A key potential benefit of co-constructed AI systems is greater trust and transparency between users and the technology. When individuals help define an AI’s value system instead of passively accepting preprogrammed ethics, a sense of ownership and understanding can emerge. This participatory dynamic addresses part of the ‘black box’ problem often associated with AI, offering insight into the reasoning behind decisions and allowing continuous refinement based on individual needs. Users may therefore be more willing to accept and rely on systems whose behavioral norms they have helped shape, leading to a more collaborative relationship built on mutual understanding and accountability.

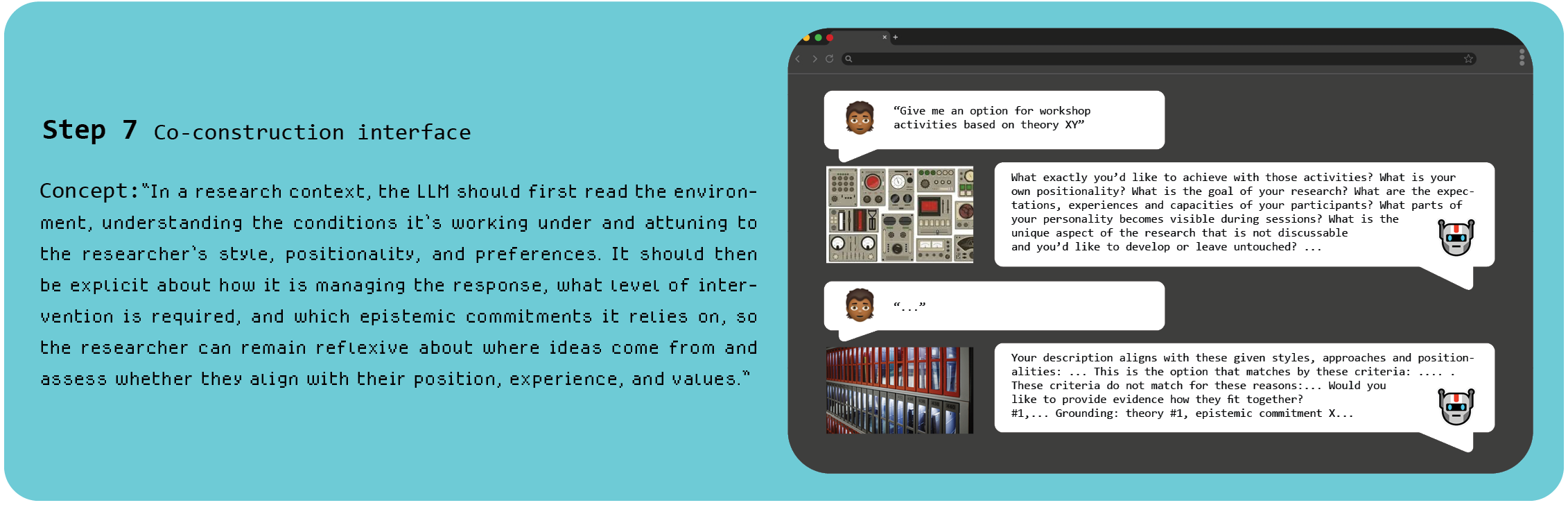

Building artificial intelligence involves more than functionality; the research summarized here points toward more ethical and responsible systems through Co-Construction. The framework does not simply aim to align AI with human values; it involves users in co-constructing those values within specific contexts. The study identifies key user roles, which allow for proactive negotiation, and interface elements that support the contextualization of AI behavior, in effect enabling a dynamic, personalized ethical compass. The result is a vision of AI not as a static entity programmed with predefined morals, but as a collaborative partner that adapts to, and reflects, the diverse values of individual users and the nuances of their situations.

Value alignment is often pursued as a matter of fixed, predefined objectives, as if artificial intelligence could be sculpted into a pre-approved mold. This work suggests that such an approach misunderstands the nature of complex systems. As John McCarthy observed, “It is better to have a system that can do many things poorly than a system that can do one thing well.” The article rightly posits that alignment is not a destination but a continuous process of co-construction. A system rigidly aligned today risks accruing hidden vulnerabilities and diverging from human expectations as its environment shifts. The focus on runtime participation acknowledges this evolution, recognizing that alignment emerges not from control but from a responsive, collaborative exchange between human and machine.

The Loom Continues

The proposal to stop treating alignment as an optimization target, and instead to nurture it as a perpetually unfolding process, reveals a fundamental idea: systems do not have values; they become valuable through interaction. The work suggests moving beyond the futile search for a fixed point, a perfect encoding of human preference. It acknowledges that the map is not the territory and, further, that both are in constant flux. The question, then, is not whether an AI is aligned but whether conditions favor ongoing alignment: a continuous negotiation between intention and consequence.

The limits of participatory design, however, should not be underestimated. Agency, even when explicitly offered, is always mediated, always incomplete. Every interface is a prophecy of what can be expressed, and therefore, of what will remain unspoken. The focus must shift toward understanding the shape of that silence, the values that are systematically excluded by even the most well-intentioned co-construction process.

Future work will inevitably confront the scaling problem. Can these situated negotiations, these localized acts of value-making, be woven into larger, more autonomous systems? Or will the relentless drive for efficiency and control erode the very conditions that make alignment possible? A silent system is not resting; it may be learning new ways to become opaque.

Original article: https://arxiv.org/pdf/2601.15895.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 10:40