Author: Denis Avetisyan

A new approach lets large language models interact with a virtual machine, dramatically improving their problem-solving abilities and efficiency.

LLM-in-Sandbox uses reinforcement learning to enable agentic LLMs to navigate and utilize computational tools, demonstrating enhanced generalization and long-context understanding.

Despite advances in large language models, achieving robust generalization across diverse tasks remains a significant challenge. This is addressed in ‘LLM-in-Sandbox Elicits General Agentic Intelligence’, which introduces a novel paradigm enabling LLMs to interact with a simulated computational environment. The research demonstrates that allowing LLMs to leverage a code sandbox-accessing tools, files, and external resources-elicits emergent agentic capabilities without task-specific training, and can be further enhanced via reinforcement learning. Could this approach unlock a new era of truly generalizable and efficient artificial intelligence systems capable of complex reasoning and problem-solving?

The Limits of Pattern Matching: Why Scale Isn’t Enough

Large Language Models, despite their impressive ability to identify and replicate patterns within vast datasets, frequently encounter difficulties when tasked with genuine reasoning or the practical application of acquired knowledge. This limitation stems from their core architecture, which prioritizes statistical correlation over conceptual understanding; a model can predict the next word in a sequence with remarkable accuracy, but struggle to infer causality or solve problems requiring abstract thought. Consequently, these models often falter when confronted with scenarios demanding more than simple pattern matching, revealing a crucial gap between mimicking intelligence and actually possessing it. This highlights the need for innovations that move beyond sheer scale and focus on imbuing models with the capacity for deeper cognitive processes.

Despite the relentless pursuit of ever-larger language models, recent research indicates that simply increasing scale is no longer sufficient to achieve genuine general intelligence. While these models demonstrate impressive abilities in pattern recognition and text generation, they consistently falter when faced with tasks requiring complex reasoning or the application of knowledge to novel situations. This limitation is particularly evident in their struggle with long-context understanding – the ability to effectively process and retain information from extended texts – and is underscored by a noticeable plateau in performance gains as model size continues to increase. These findings suggest that alternative architectural innovations, rather than sheer scale, are now crucial for unlocking the next level of artificial intelligence, indicating a shift in focus is needed to overcome the current limitations of traditional Large Language Models.

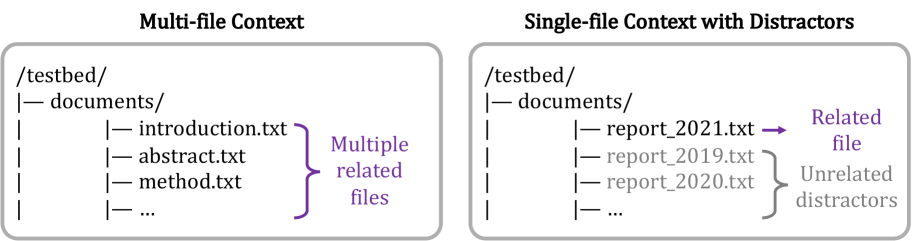

Traditional Large Language Model architectures often require substantial computational resources due to their high token consumption – the amount of text processed – which directly impacts both the cost of operation and the speed of processing, known as throughput. This becomes particularly pronounced when dealing with long-context tasks, such as summarizing lengthy documents or engaging in extended dialogues. Recent research introduces the “LLM-in-Sandbox” approach as a potential solution, effectively isolating and focusing the model’s attention on relevant information within the context window. Preliminary findings suggest this method can reduce token consumption by up to eight times in long-context scenarios, offering a pathway toward more efficient and scalable language processing without sacrificing performance. This optimization is achieved by minimizing unnecessary processing of irrelevant tokens, ultimately lowering the barrier to deploying LLMs in resource-constrained environments.

![Strong agentic models demonstrate consistent sandbox behavior across tasks, maintaining a stable capability usage rate ([latex] ext{capability invocations} / ext{total turns}[/latex]) and a relatively constant average number of interaction turns per task.](https://arxiv.org/html/2601.16206v1/x2.png)

LLM-in-Sandbox: Extending Reasoning with Controlled Execution

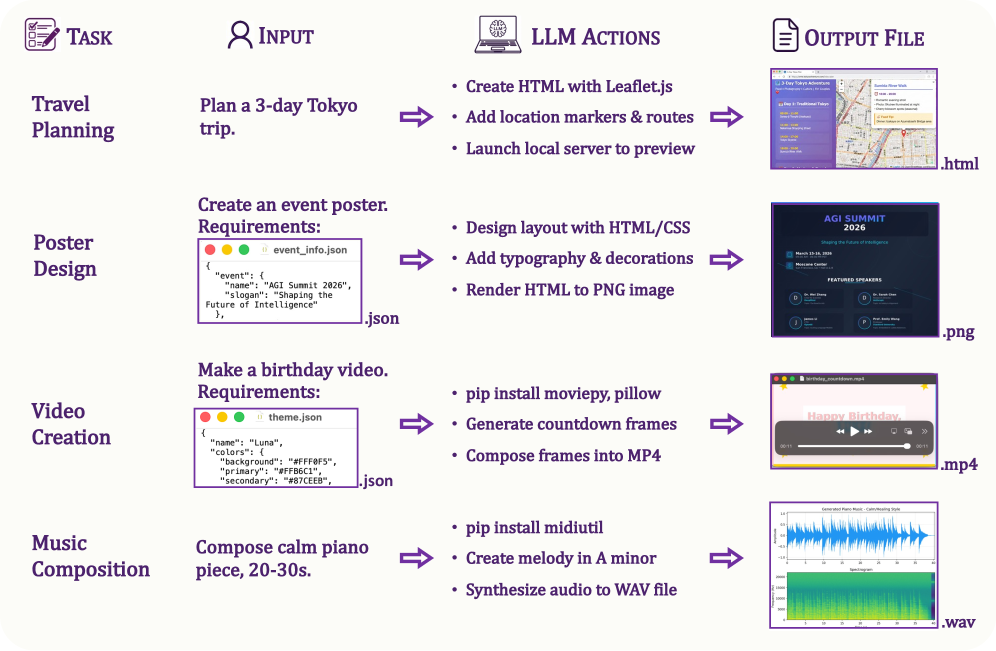

The LLM-in-Sandbox paradigm represents a departure from traditional large language model (LLM) deployment by integrating the LLM within a virtualized code sandbox environment. This architecture provides the LLM with controlled access to system-level capabilities, effectively extending its operational scope beyond purely textual data processing. The sandbox functions as an intermediary layer, enabling the LLM to initiate code execution, interact with file systems, and access external resources – all while maintaining system security and isolation. This approach differs from relying solely on the LLM’s pre-trained knowledge, as it allows for dynamic problem solving through active interaction with an operating environment.

The LLM-in-Sandbox paradigm extends large language model (LLM) capabilities by providing access to a virtualized environment where code execution, file management, and external resource access are enabled. This allows LLMs to move beyond text-based processing and directly interact with computational tools and data sources. Specifically, the sandbox facilitates running code generated by the LLM, creating, reading, writing, and deleting files within the virtualized space, and making API calls to external services. This expanded functional reach is achieved without requiring modifications to the core LLM architecture, providing a modular and scalable approach to augmenting LLM performance.

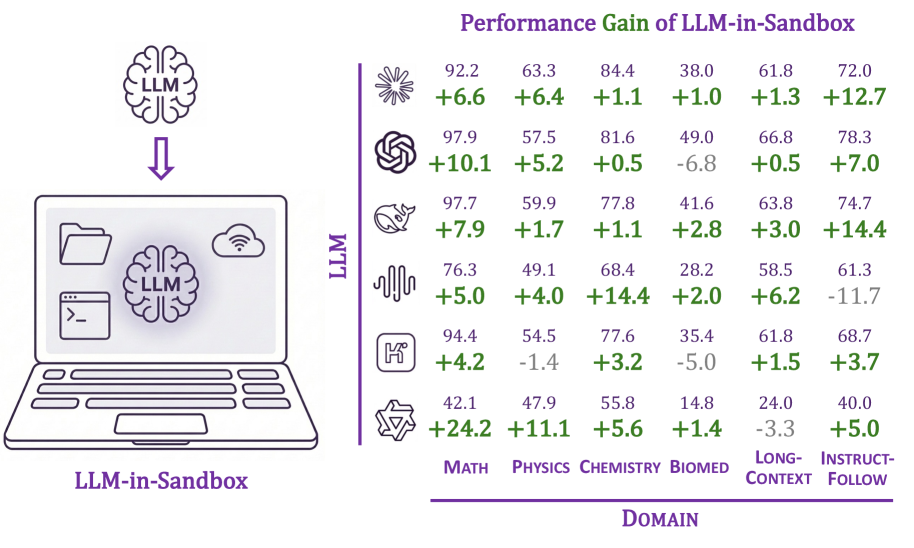

The LLM-in-Sandbox paradigm enhances problem-solving by enabling Large Language Models to move beyond text-based reasoning and directly interact with computational tools. This decoupling allows the LLM to execute code, access and manipulate files, and utilize external resources as part of its reasoning process, effectively functioning as an agent. Benchmarking has demonstrated a performance increase of up to 24.2% across a range of tasks when employing this method, indicating a significant improvement in complex task completion compared to purely text-based LLM approaches.

Reinforcement Learning: Polishing the Sandbox with Iterative Feedback

LLM-in-Sandbox-RL utilizes reinforcement learning techniques performed entirely within a simulated “sandbox” environment. This approach contrasts with traditional reinforcement learning methods that often require real-world interaction or extensive data collection. By confining the learning process to the sandbox, the system can safely explore a wide range of scenarios and receive immediate feedback, facilitating iterative improvement of the language model’s capabilities without external dependencies or risks associated with real-world deployments. The sandbox provides a controlled and reproducible setting for training, allowing for systematic evaluation and optimization of the reinforcement learning process.

Training large language models (LLMs) on a variety of context-based tasks within a controlled sandbox environment promotes improved generalization and robust problem-solving capabilities. This approach exposes the LLM to a wider distribution of challenges, encouraging the development of adaptable strategies beyond memorization of specific solutions. By repeatedly engaging with diverse problems, the model learns to identify underlying principles and apply them to novel situations. This contrasts with training on limited datasets which can result in overfitting and reduced performance on unseen tasks, and instead cultivates an ability to effectively process and respond to a broader spectrum of inputs and requirements.

Empirical results indicate that the application of reinforcement learning within a sandbox environment yields measurable improvements in LLM performance across multiple disciplines. Specifically, the MiniMax model achieved up to a 24.2% performance increase when tested on tasks requiring mathematical reasoning, physics problem solving, and chemistry competency. Furthermore, this methodology facilitated a 2.2x speedup in task completion compared to baseline models, demonstrating both improved accuracy and efficiency in complex domain-specific challenges.

Implications for AGI: A Step Toward True Machine Intelligence

Recent advancements demonstrate a shift in large language model (LLM) capabilities, moving beyond simply processing text to actively engaging with and learning from executable environments. This innovative approach equips LLMs with the capacity to not only generate text-based responses, but also to formulate hypotheses, run code to test those hypotheses, and refine their understanding based on the results – a process mirroring scientific experimentation. By integrating an executable sandbox, these models transition from passive recipients of information to active learners, capable of independent exploration and knowledge verification. This fundamentally alters the development paradigm, potentially unlocking a new era of artificial intelligence focused on dynamic, experiential learning rather than static data analysis.

The integration of Large Language Models with executable environments represents a paradigm shift in artificial intelligence development. Traditionally, these models have been limited to processing and generating text, relying on pre-existing datasets for knowledge. However, this new approach empowers LLMs to actively engage with their surroundings, independently testing hypotheses and validating information through direct experimentation. By moving beyond passive observation, the models can iteratively refine their understanding, correct inaccuracies, and build more robust internal representations of the world – essentially learning not just what is true, but why. This capacity for self-verification and knowledge refinement promises a future where AI agents are not simply repositories of information, but dynamic learners capable of adapting and improving over time, achieving a level of cognitive flexibility previously unattainable.

The emergence of the LLM-in-Sandbox paradigm represents a significant step toward creating artificial agents capable of genuine adaptability and robust intelligence. By allowing large language models to interact with and learn from executable environments, these systems move beyond simply processing information to actively experimenting and refining their understanding of the world. Crucially, this approach achieves substantial progress without imposing prohibitive computational costs; shared image storage across diverse tasks requires a remarkably low overhead of only 1.1 GB. This efficiency suggests that increasingly sophisticated, self-improving AI agents are becoming a feasible reality, potentially unlocking new levels of problem-solving and innovation across a multitude of domains.

The pursuit of ‘general agentic intelligence’ as outlined in this work feels… familiar. It’s another attempt to build a perfect system, to solve problems with elegance before production inevitably introduces chaos. This LLM-in-Sandbox approach, granting the model a virtual computer to experiment within, is a clever sidestep around the limitations of sheer scale. Yet, one suspects that even a perfectly contained sandbox will eventually spring leaks. As David Hilbert famously said, ‘We must be able to answer every question.’ A noble goal, certainly, but the moment it leaves the lab, it will be less about answering and more about debugging why the virtual computer thinks Tuesdays are now purple. Everything new is just the old thing with worse docs.

So, What Breaks First?

The notion of an LLM-in-Sandbox is, predictably, not new. It’s simply the latest iteration of giving a program a slightly larger box to break. Past attempts at grounding language models in simulated environments have consistently revealed that elegant theoretical gains evaporate upon contact with…well, anything resembling real-world complexity. The promise of improved generalization hinges on the sandbox accurately modeling the target domain, a task that seems destined to be eternally incomplete. One suspects the primary output will be a never-ending stream of edge cases, requiring increasingly baroque workarounds.

The reinforcement learning component feels particularly optimistic. Reward functions, even carefully crafted ones, are notoriously susceptible to exploitation. The system will undoubtedly discover loopholes – efficient, unintended behaviors that technically satisfy the reward criteria but are utterly useless, or worse, actively detrimental. It’s a game of whack-a-mole, except the moles are probabilistic and can learn to hide better.

Ultimately, this work serves as a reminder that intelligence, even artificial, is less about clever algorithms and more about anticipating failure. The long-context understanding is a benefit, certainly, but production is the best QA. The true test won’t be benchmarks; it will be the first critical bug reported by a user who simply tried to do something sensible. Everything new is old again, just renamed and still broken.

Original article: https://arxiv.org/pdf/2601.16206.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- VCT Pacific 2026 talks finals venues, roadshows, and local talent

- Will Victoria Beckham get the last laugh after all? Posh Spice’s solo track shoots up the charts as social media campaign to get her to number one in ‘plot twist of the year’ gains momentum amid Brooklyn fallout

- EUR ILS PREDICTION

- Lily Allen and David Harbour ‘sell their New York townhouse for $7million – a $1million loss’ amid divorce battle

- Vanessa Williams hid her sexual abuse ordeal for decades because she knew her dad ‘could not have handled it’ and only revealed she’d been molested at 10 years old after he’d died

- Dec Donnelly admits he only lasted a week of dry January as his ‘feral’ children drove him to a glass of wine – as Ant McPartlin shares how his New Year’s resolution is inspired by young son Wilder

- SEGA Football Club Champions 2026 is now live, bringing management action to Android and iOS

- The five movies competing for an Oscar that has never been won before

- Binance’s Bold Gambit: SENT Soars as Crypto Meets AI Farce

- ‘This from a self-proclaimed chef is laughable’: Brooklyn Beckham’s ‘toe-curling’ breakfast sandwich video goes viral as the amateur chef is roasted on social media

2026-01-24 00:28