Author: Denis Avetisyan

A new framework leverages unified 3D scene representations and large language models to enable robots to perform tasks in the real world with minimal real-world training data.

Point Bridge utilizes point clouds and vision-language models for zero-shot sim-to-real transfer in robotic manipulation.

Despite advances in robot foundation models, scaling generalist robotic agents remains hindered by the scarcity of real-world manipulation datasets. This limitation motivates the development of simulation-based training, yet performance suffers from the visual domain gap between synthetic and real environments-a challenge addressed by ‘Point Bridge: 3D Representations for Cross Domain Policy Learning’. This work introduces a framework leveraging unified, domain-agnostic point-based scene representations and vision-language models to enable zero-shot sim-to-real transfer, significantly reducing reliance on real-world data and complex alignment procedures. Will this approach unlock truly scalable and robust robotic manipulation capabilities across diverse, unseen environments?

The Illusion of Control: Data as the Bottleneck of Robotic Evolution

The development of reliable robotic systems is fundamentally challenged by the sheer volume of data required for training. Unlike many machine learning applications that thrive on readily available datasets, robots operating in complex, real-world environments demand extensive experience to navigate unforeseen circumstances and perform tasks consistently. Acquiring this data through physical experimentation is not only expensive – considering robot hardware, maintenance, and operational time – but also painstakingly slow. Each interaction with the physical world represents a valuable data point, but the process of collecting enough of these points to achieve robust performance can stretch over months or even years. This reliance on real-world data creates a significant bottleneck, hindering the rapid development and deployment of robotic solutions across various industries and limiting the exploration of more complex and adaptive robotic behaviors.

A significant obstacle in advancing robotic learning lies in the persistent discrepancy between simulated environments and the complexities of the real world – a phenomenon known as the ‘Domain Gap’. Policies meticulously trained within the controlled parameters of a simulation often falter when deployed onto a physical robot, due to unmodeled dynamics, sensor noise, and unpredictable environmental factors. This gap arises because simulations, while increasingly sophisticated, inevitably simplify reality; they cannot perfectly replicate the nuanced interactions between a robot and its surroundings. Consequently, a policy that functions flawlessly in simulation may exhibit instability or poor performance when confronted with the imperfections and uncertainties inherent in physical systems, necessitating costly and time-consuming real-world fine-tuning or the development of more robust adaptation strategies.

Despite advancements in simulation technologies, creating synthetic datasets capable of reliably training robust robotic policies remains a significant challenge. Current methods often fall short in replicating the complexity and nuance of the real world, leading to policies that perform well in simulation but fail when deployed in physical environments. This limitation stems from difficulties in accurately modeling factors like sensor noise, material properties, and unpredictable external disturbances. Consequently, the scalability of robotic learning is hindered; generating sufficient diverse and realistic data to bridge the ‘reality gap’ requires immense computational resources and careful parameter tuning, ultimately restricting the ability to rapidly adapt robots to new tasks and environments. The pursuit of richer, more representative synthetic data is therefore crucial for unlocking the full potential of simulation-based robotic learning.

Synthetic Data: A Necessary Fiction for Scalable Robotics

Synthetic data generation provides a viable approach to overcome limitations associated with acquiring real-world datasets, particularly in scenarios where data collection is expensive, time-consuming, or poses privacy concerns. This method involves creating datasets programmatically within simulated environments, allowing for the production of large volumes of labeled data with precise control over data distribution and characteristics. These datasets can be generated to represent a wide range of conditions and edge cases, facilitating the training of robust machine learning models. The scalability of synthetic data generation is a key advantage, enabling the rapid creation of datasets tailored to specific model requirements without the constraints of real-world data acquisition processes.

MimicGen and similar techniques address limitations in dataset size and variability by programmatically extending existing demonstrations to new, unseen environments. This is achieved through the manipulation of scene parameters – such as lighting, object positions, and textures – while preserving the core kinematic structure of the demonstrated behavior. By applying transformations and randomizations to these parameters, MimicGen generates a significantly larger dataset with increased diversity, exposing machine learning models to a wider range of conditions. This, in turn, improves the robustness and generalization capabilities of those models, particularly in situations where real-world data acquisition is expensive, time-consuming, or poses safety concerns. The resulting synthetic data serves as a cost-effective means to augment or even replace real-world datasets for training and validation purposes.

Addressing the domain gap in synthetic data requires advancements beyond simply increasing dataset size. While quantity is a factor, the realism and representativeness of the generated data are critical for successful model transfer to real-world scenarios. Improved fidelity involves accurately modeling physical properties – such as lighting, textures, and material interactions – and sensor characteristics. Representation concerns the diversity and balance of the synthetic data, ensuring it comprehensively covers the variations expected in the target domain and avoids introducing biases that could negatively impact model performance. Without sufficient fidelity and representative coverage, models trained on synthetic data may fail to generalize effectively when deployed in real-world applications, regardless of the dataset’s overall size.

Point Bridge: An Abstraction Layer for Zero-Shot Sim-to-Real Transfer

Point Bridge employs a unified framework centered on domain-agnostic point-based representations to facilitate zero-shot sim-to-real transfer. This approach represents scenes as collections of points, abstracting away domain-specific characteristics inherent in image- or mesh-based representations. By decoupling scene representation from the specific simulation or real-world environment, the framework enables the transfer of learned policies between these domains without requiring retraining or adaptation. The use of points allows for consistent representation across varying data sources and sensor modalities, forming the basis for a generalized transfer capability. This representation is not tied to specific object categories or environments, thereby enabling generalization to unseen scenarios.

Point Bridge constructs detailed scene representations by integrating data from multiple perception methods operating on point cloud data. Specifically, Foundation Stereo provides depth estimation for robust 3D reconstruction, while SAM-2 (Segment Anything Model 2) facilitates instance segmentation within the point cloud, identifying and isolating individual objects. Co-Tracker further enhances this by providing consistent tracking of these segmented objects across consecutive frames, enabling the framework to maintain object identities and trajectories over time. The combination of these techniques allows Point Bridge to create a comprehensive and dynamically updated understanding of the environment, crucial for sim-to-real transfer.

Point Bridge integrates Vision-Language Models (VLMs), specifically Molmo, to improve scene understanding and enable cross-domain policy transfer by leveraging the VLM’s ability to process both visual and linguistic information. This integration allows the system to interpret high-level instructions and relate them to the observed point cloud data, facilitating generalization across different environments and tasks. Furthermore, the framework utilizes a Transformer architecture, enabling scalability to handle complex scenes and large datasets, crucial for robust zero-shot transfer learning. The Transformer’s attention mechanism allows Point Bridge to focus on relevant features within the point cloud, improving performance and efficiency.

Robot Pose Regression and Point Track Prediction are integrated into the Point Bridge framework to enhance predictive capabilities. Robot Pose Regression predicts the six-degrees-of-freedom pose of the robot at future timesteps, providing a continuous trajectory forecast. Simultaneously, Point Track Prediction focuses on predicting the future locations of individual points within the scene, effectively tracking dynamic objects and scene changes. These predictions are crucial for anticipating environmental interactions and ensuring robust policy execution in novel environments, contributing to the overall improvement in zero-shot transfer performance observed in the framework.

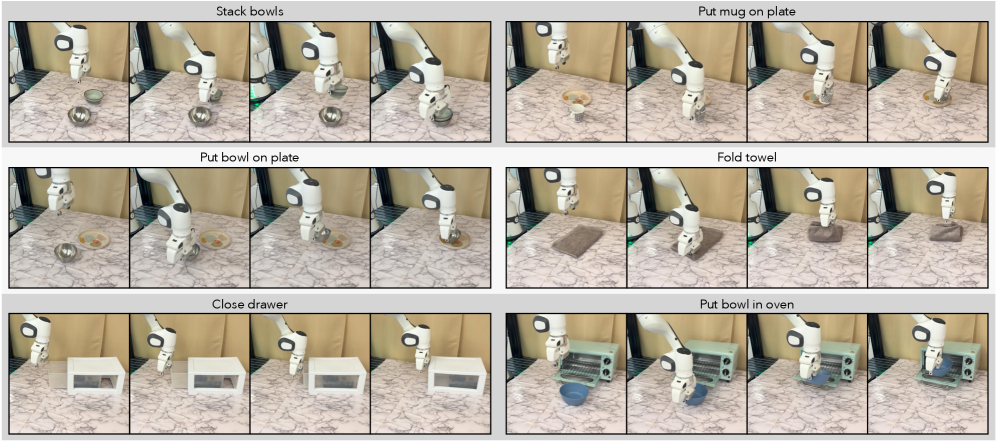

Evaluations of the Point Bridge framework demonstrate substantial performance gains in zero-shot sim-to-real transfer. Specifically, the system achieves a 76% success rate in single-task transfer and an 80% success rate in multitask transfer scenarios. These results represent a 39% improvement over baseline methods in single-task performance and a 44% improvement in multitask transfer capability, indicating a significant advancement in the ability to generalize learned policies to unseen real-world environments.

Beyond Simulation: Towards Adaptable Robotic Systems

Point Bridge establishes a novel approach to robotic skill acquisition by seamlessly connecting simulated learning environments with real-world application through behavior cloning. The system allows robots to learn complex tasks from demonstrations performed in simulation – a cost-effective and safe method – and then directly transfer those learned skills to physical robots operating in unstructured environments. This transfer isn’t simply replication; Point Bridge actively addresses the ‘sim-to-real’ gap by learning a mapping that correlates simulated observations to corresponding actions, allowing the robot to adapt its behavior when confronted with the inherent discrepancies between simulation and reality. Consequently, robots can execute tasks effectively without requiring extensive, and often impractical, real-world training data, fostering a pathway towards more adaptable and deployable robotic systems.

A significant challenge in robotics lies in the extensive data requirements for training robots to operate in the real world. This framework addresses this limitation by demonstrating a remarkable capacity to generalize learned skills across diverse objects and environments, thereby minimizing the need for vast amounts of real-world data collection. Instead of requiring robots to experience countless scenarios firsthand, the system leverages simulation data and effectively transfers knowledge to new, unseen situations. This generalization isn’t simply about recognizing different shapes or colors; it’s about understanding the underlying principles of interaction, allowing the robot to adapt its behavior to novel circumstances with minimal additional training. The result is a more efficient and scalable approach to robotic intelligence, reducing both the time and resources required to deploy robots in dynamic and unpredictable settings.

Point Bridge represents a significant step towards creating robotic systems capable of thriving in unpredictable, real-world settings. The framework achieves this by effectively minimizing the discrepancy between simulated training environments and the complexities of physical reality – a challenge that has long hindered the widespread adoption of robot learning. This diminished gap allows robots to transfer knowledge gained in simulation directly to new, unseen situations without requiring extensive retraining or adaptation. Consequently, robotic deployment becomes far more efficient, reducing both the time and resources needed for real-world data collection and experimentation. Ultimately, this approach fosters scalability, enabling the creation of robotic solutions that are not only adaptable but also readily deployable across a diverse range of tasks and environments, promising a future where intelligent robotic assistance is both accessible and reliable.

The capacity to function effectively in unpredictable, real-world settings represents a significant hurdle for robotic intelligence; however, recent developments are poised to overcome this challenge. Deploying robots beyond controlled laboratories necessitates an ability to navigate and interact with complex, unstructured environments – spaces lacking the predictability of factory floors or carefully curated datasets. This progress isn’t simply about improved locomotion or sensing; it unlocks the potential for genuinely intelligent robotic assistants capable of adapting to novel situations and performing tasks without explicit, pre-programmed instructions. Such robots promise to revolutionize fields ranging from elder care and disaster response to agricultural automation and in-home assistance, offering a level of flexibility and autonomy previously unattainable and fostering a future where robots seamlessly integrate into the fabric of daily life.

Recent advancements in robotic intelligence demonstrate a significant performance boost through a co-training methodology that strategically incorporates limited real-world data. Evaluations reveal that this approach achieves a 61% improvement in single-task scenarios when contrasted with previously established techniques. Notably, the benefits extend to more complex applications, with a 66% performance gain observed in multitask scenarios. These results underscore the effectiveness of leveraging simulation for initial learning, then refining the system’s capabilities with a modest amount of authentic data, ultimately creating more efficient and capable robotic systems without the extensive data requirements of traditional methods.

The pursuit of seamless sim-to-real transfer, as demonstrated by Point Bridge, echoes a fundamental truth about complex systems. The framework doesn’t build a solution; it cultivates an environment where robotic manipulation can grow across domains. This approach, leveraging unified point-based representations and vision-language models, acknowledges the inherent unpredictability of real-world application. As Ken Thompson observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not going to be able to debug it.” Point Bridge doesn’t attempt to perfectly model reality, but rather, anticipates its inevitable divergence, offering a path toward resilience where certainty ends and adaptation begins. The reduction in reliance on real-world data isn’t a triumph over complexity, but a recognition of its pervasive nature.

What Lies Ahead?

Point Bridge, in its attempt to unify representation, offers a glimpse of what is often mistaken for progress. It is a beautiful grafting, this blending of point clouds and language, yet one suspects the resulting hybrid will, in time, reveal unforeseen vulnerabilities. The reduction in reliance on real-world data is not a victory over reality, but a deferral. The cost will be paid in subtle failures, in the uncanny valleys of robotic behavior, and in the eventual necessity of acknowledging that any simulation, no matter how detailed, is but a shadow of the world it seeks to mimic.

The true challenge isn’t the transfer itself, but the acceptance of inherent instability. Systems do not become reliable; they merely accumulate experience. Future work will inevitably focus on refining the fidelity of these representations, but a more fruitful path may lie in embracing the ephemerality of robotic intelligence. To build a system that anticipates its own failures is to build something closer to life-and life, as any gardener knows, is a constant negotiation with entropy.

One anticipates a proliferation of such ‘bridge’ frameworks, each attempting to span the gap between the digital and the physical. But each span, however elegantly constructed, will bear the weight of its own limitations. The question isn’t whether these systems will fail, but how they will fail, and what stories those failures will tell about the illusions of control we so readily embrace.

Original article: https://arxiv.org/pdf/2601.16212.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- VCT Pacific 2026 talks finals venues, roadshows, and local talent

- Will Victoria Beckham get the last laugh after all? Posh Spice’s solo track shoots up the charts as social media campaign to get her to number one in ‘plot twist of the year’ gains momentum amid Brooklyn fallout

- Vanessa Williams hid her sexual abuse ordeal for decades because she knew her dad ‘could not have handled it’ and only revealed she’d been molested at 10 years old after he’d died

- Dec Donnelly admits he only lasted a week of dry January as his ‘feral’ children drove him to a glass of wine – as Ant McPartlin shares how his New Year’s resolution is inspired by young son Wilder

- SEGA Football Club Champions 2026 is now live, bringing management action to Android and iOS

- The five movies competing for an Oscar that has never been won before

- EUR ILS PREDICTION

- Binance’s Bold Gambit: SENT Soars as Crypto Meets AI Farce

- Invincible Season 4’s 1st Look Reveals Villains With Thragg & 2 More

- CS2 Premier Season 4 is here! Anubis and SMG changes, new skins

2026-01-23 20:56