Author: Denis Avetisyan

Researchers have developed a new framework that allows robots with different physical forms to share a common understanding of movement, simplifying cross-embodiment control.

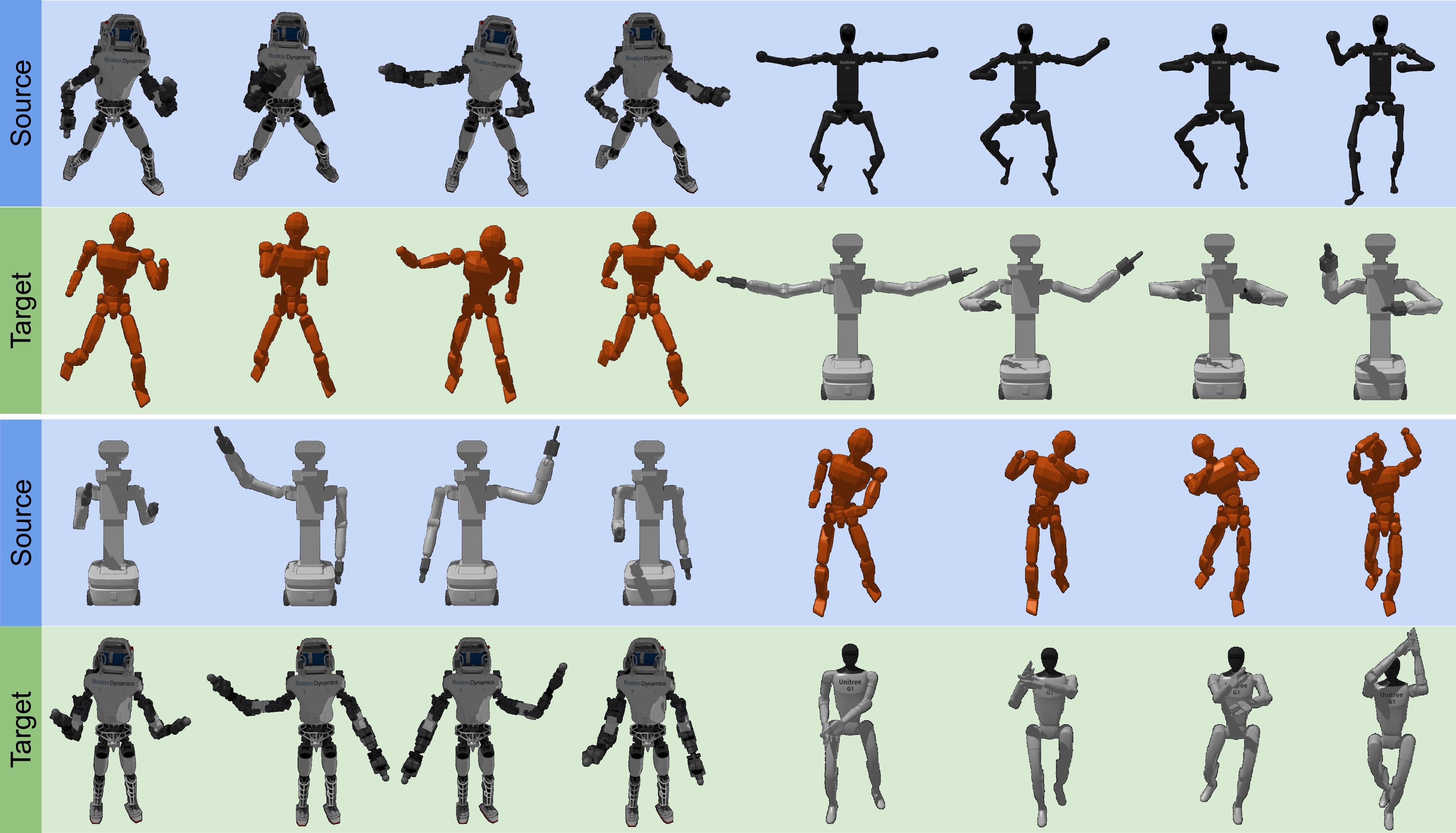

![The architecture learns a unified latent representation of motion by decoupling a shared latent space into five body-segment subspaces - left arm, right arm, trunk, left leg, and right leg - and employing robot-specific embedding layers [latex]E_r[/latex] to project varying pose dimensionalities into a common feature space, subsequently reconstructed via inverse mappings [latex]D_r[/latex], thereby enabling cross-embodiment motion modeling across humans and diverse robotic platforms.](https://arxiv.org/html/2601.15419v1/figures/modeloverview.png)

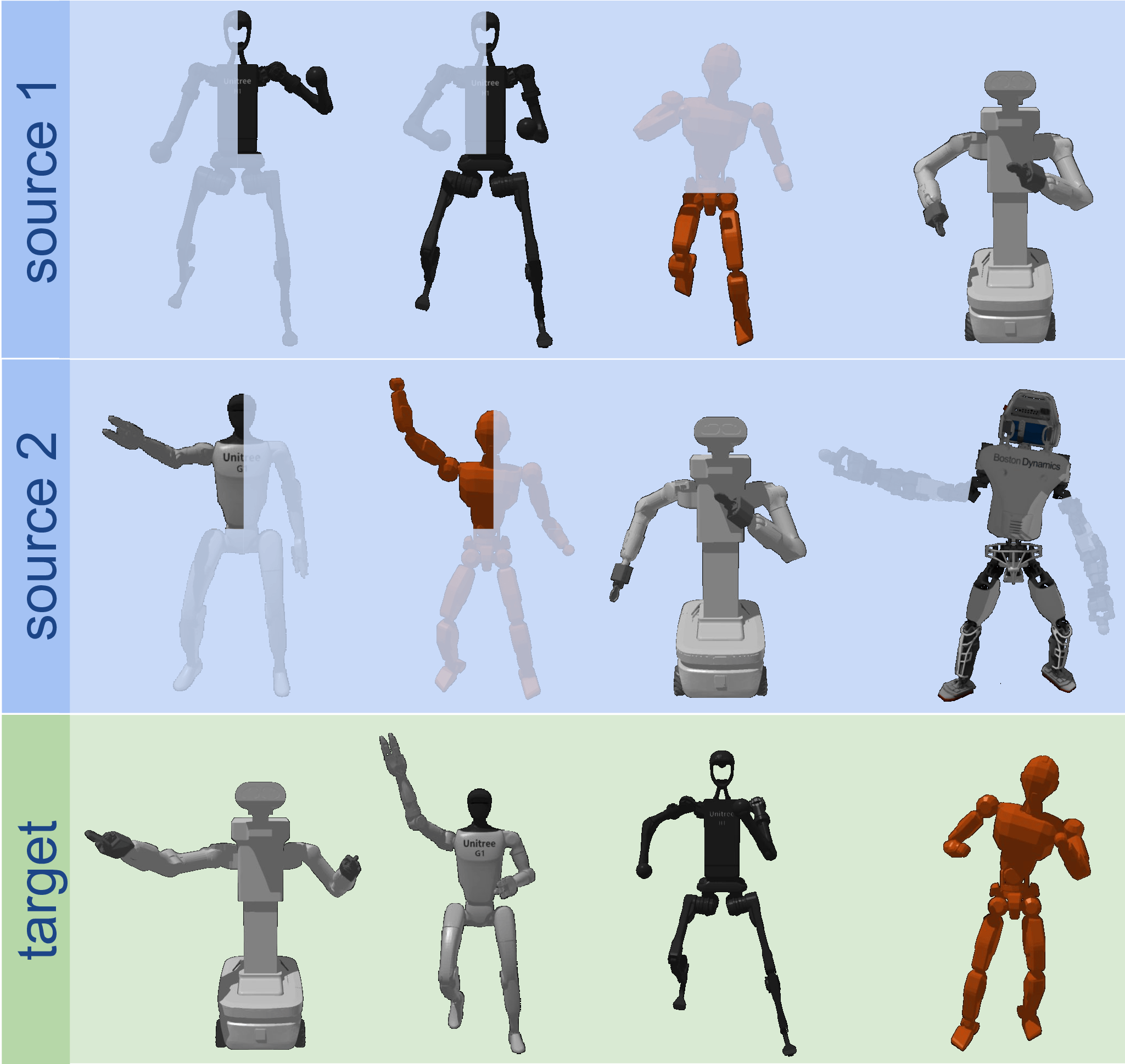

This work introduces a contrastive learning approach for building a unified latent space, enabling the transfer of human motions and control policies across diverse robotic platforms without per-robot fine-tuning.

Controlling increasingly diverse robotic platforms remains a challenge due to the need for embodiment-specific adaptation. This paper, ‘Learning a Unified Latent Space for Cross-Embodiment Robot Control’, introduces a framework for scalable humanoid robot control by learning a shared latent space that unifies motion across both humans and robots with varying morphologies. By leveraging contrastive learning and tailored similarity metrics, the approach enables the transfer of control policies learned from human data directly to new robotic embodiments without per-robot fine-tuning. Could this paradigm shift pave the way for more intuitive and adaptable human-robot interaction across a wider range of platforms?

The Challenge of Embodiment: A Matter of Mathematical Inelegance

The persistent challenge of controlling robots with diverse physical forms presents a considerable obstacle to widespread robotic deployment. Traditional control strategies are often painstakingly tailored to a specific robot’s morphology and dynamics; transferring these methods to even slightly different hardware typically demands substantial re-tuning, a process that is both time-consuming and requires specialized expertise. This reliance on embodiment-specific control loops limits a robot’s ability to adapt to new situations and hinders the development of truly versatile machines capable of operating effectively across a range of tasks and environments. Consequently, a robot expertly navigating one terrain or manipulating one object may struggle significantly with a subtly altered scenario, highlighting the need for more robust and generalizable control architectures.

The inability of robotic control systems to readily transfer learned behaviors across different physical forms presents a substantial obstacle to their widespread implementation in unpredictable environments. Current robotic deployments are often limited to highly structured settings precisely because even minor variations in a robot’s morphology-a change in limb length, joint configuration, or even the addition of external tools-typically necessitate a complete retraining of the control algorithms. This fragility dramatically restricts a robot’s utility in real-world applications, such as search and rescue, disaster response, or in-home assistance, where encountering novel situations and adapting to unforeseen physical challenges is paramount. Without robust generalization across embodiments, robots remain tethered to the specific designs for which they were initially programmed, hindering their potential to operate autonomously and effectively in the dynamic complexity of the real world.

Achieving robust cross-embodiment control demands a departure from traditional robotics approaches that tightly couple control algorithms with specific physical characteristics. Instead, a successful framework must effectively abstract away the nuances of each robot’s morphology – its size, shape, joint configuration, and actuator properties. This abstraction allows a single control policy to generalize across diverse robotic platforms, sidestepping the need for laborious re-tuning with every new design. By focusing on high-level task objectives rather than low-level motor commands tailored to a particular body, researchers aim to create controllers that operate on an ‘embodiment-agnostic’ level, promoting adaptability and simplifying the deployment of robots in unpredictable, real-world environments. This shift towards abstraction is not merely a convenience; it represents a fundamental step towards creating truly versatile and intelligent robotic systems.

Defining a Robot-Agnostic Motion Space: An Exercise in Abstraction

The system utilizes a Shared Latent Space designed to represent robot motions based on their underlying intent, rather than the specific robot morphology. This space is a high-dimensional vector space where motions expressing the same action – such as reaching, grasping, or walking – are mapped to nearby points, irrespective of the robot’s kinematic structure or degrees of freedom. Consequently, a motion learned on one robotic platform can be represented as a point in this space and then reconstructed for execution on a different robot, facilitating cross-embodiment transfer of learned behaviors. The effectiveness of this approach relies on the ability to disentangle the intent of a motion from the robot-specific details of its execution, allowing for generalization across diverse robotic systems.

Human motion data is acquired using motion capture systems, which record the 3D positions of markers placed on a human performer. This raw data is then processed and fitted to the SMPL (Skinned Multi-Person Model) – a parametric, 3D statistical model of the human body. The SMPL model allows for the creation of realistic and controllable human poses, providing a standardized representation of human motion that is independent of the specific motion capture hardware used. Utilizing the SMPL model ensures data consistency and facilitates the generation of a comprehensive dataset of human movements for training purposes, effectively serving as the foundation for learning a robot-agnostic motion space.

The Robot-Specific Embedding Layer is a learned, parameterized function integral to mapping robot-specific pose data into the Shared Latent Space. This layer takes as input the configuration of a particular robot – defined by its joint angles and end-effector positions – and transforms it into a vector representation compatible with the shared space. The embedding is trained using paired data of robot poses and corresponding human motions, enabling the system to learn a consistent mapping between robot configurations and human intent. Crucially, this allows motions learned from one robot embodiment to be transferred and adapted for execution on different robotic platforms, effectively decoupling motion planning from specific robot morphology.

![A conditional variational autoencoder (c-VAE) learns goal-directed robot motion from human demonstrations by predicting latent displacements [latex] \hat{d}_t [/latex] based on the current latent pose [latex] z_t [/latex] and average end-effector velocity [latex] \overline{v}_{ee} [/latex] towards a user-defined goal, iteratively updating the latent state to generate smooth, goal-directed movements.](https://arxiv.org/html/2601.15419v1/figures/RLC_CVAE.png)

Motion Alignment Through Contrastive Learning: Establishing a Hierarchy of Similarity

Contrastive learning is employed as the training methodology, functioning by minimizing the distance between embeddings of similar motion sequences within a shared latent space and maximizing the distance between embeddings of dissimilar sequences. This is achieved through the definition of positive and negative pairs; positive pairs consist of variations of the same motion – for example, the same action performed by different robots or with slight parameter changes – while negative pairs comprise unrelated motions. The system learns to map similar motions to proximate points in the latent space and dissimilar motions to distant points, effectively creating a structured representation based on motion similarity. This process relies on a loss function that quantifies the distance between embeddings, guiding the network to generate meaningful and separable representations.

Disentangled representation learning, achieved through contrastive learning, results in a latent space where motion characteristics are separated into distinct factors. Specifically, the system learns to differentiate between the intent of a motion – the desired goal or action – and the embodiment-specific details – the particular way that motion is executed by a specific robot or body. This separation allows the system to generalize across different embodiments; a learned intent can be executed by various robots without requiring retraining, as the embodiment-specific aspects are isolated and do not interfere with the core intent representation. This is achieved by minimizing the correlation between intent-related features and embodiment-related features within the shared latent space.

Teleoperation data contributes significantly to system performance by providing a dataset of expert demonstrations. This data consists of motion capture recorded while a human operator directly controls the robotic system, effectively serving as a ground truth for desired behaviors. Incorporating this data allows the system to learn from skilled human performance, improving its ability to generalize to new tasks and environments. The use of teleoperation data is particularly valuable for complex motions where defining explicit rewards or objectives is difficult, as it provides a direct signal of successful task completion. The resulting dataset is used during the training process to guide the learning of the shared latent space and refine the system’s motion generation capabilities.

Towards Generalized Robot Control: A Paradigm Shift in Robotic Versatility

A central innovation lies in the development of a shared latent space, enabling the transfer of learned control policies across robots exhibiting diverse physical characteristics. This space functions as a common language, abstracting away the complexities of individual robot designs – variations in limb length, joint configuration, or overall morphology – and representing desired movements in a generalized form. Consequently, a policy trained on one robotic platform can be readily adapted to control a completely different robot, simply by learning a mapping between this shared space and the new robot’s specific kinematics. This circumvents the need for extensive, robot-specific retraining, significantly accelerating the deployment of robotic solutions and paving the way for more versatile and adaptable automation systems.

The successful transfer of control policies between disparate robots hinges on a fundamental understanding of their individual kinematic structures. This framework leverages robot kinematics – the mathematical description of a robot’s motion and configuration – to establish a precise mapping between the shared latent space and the resulting end-effector control. Essentially, the system doesn’t directly command motor positions; instead, it defines desired end-effector poses, and the robot’s kinematics translate these poses into the necessary joint movements. This abstraction allows a single, unified control policy, residing in the latent space, to be interpreted and executed differently by robots with varying arm lengths, joint configurations, and overall morphology, ensuring consistent and accurate performance across platforms.

A key advancement lies in the efficiency of adapting control to previously unseen robotic systems. The developed framework achieves performance comparable to fully retrained robots, but with a dramatically reduced training time; a new robot can be integrated and operational in approximately 15 minutes using only a lightweight embedding layer. This contrasts sharply with traditional methods, which necessitate hours of retraining for each new robotic platform. This accelerated adaptation is achieved by leveraging a shared latent space, allowing the system to generalize learned control policies across diverse robot morphologies and kinematics without extensive computational overhead, ultimately paving the way for more agile and versatile robotic deployments.

Across diverse robotic platforms, the framework consistently achieves sub-centimeter end-effector accuracy – maintaining errors below 1 cm – while operating at a control frequency of 100 Hz. This high-speed, precise control is made possible by the latent-space representation, allowing for rapid adjustments and responses to dynamic situations. The demonstrated performance signifies a substantial advancement in robotic dexterity and responsiveness, enabling the execution of intricate tasks with a level of accuracy previously requiring significantly more computational resources and calibration time. Such capabilities are crucial for applications demanding both speed and precision, including advanced manufacturing, surgical robotics, and complex manipulation tasks in unstructured environments.

The development of a shared latent space for robot control signifies a crucial advancement in the field of robotics, paving the way for systems exhibiting remarkable adaptability. Traditionally, robots have been largely confined to pre-programmed tasks within rigidly defined environments; however, this new framework allows for the transfer of learned control policies across diverse robotic platforms, regardless of their physical characteristics. This capability moves beyond task-specific programming, enabling robots to generalize skills and apply them to novel situations and unfamiliar surroundings. The potential impact extends to various sectors, including manufacturing, healthcare, and exploration, promising a future where robots can respond dynamically to changing demands and operate effectively in unpredictable environments with minimal human intervention.

![A unified policy operating in latent space successfully guides multiple robots from diverse starting positions [latex]\mathbf{x}_{init}[/latex] (green) to arbitrary goal positions [latex]\mathbf{x}_{goal}[/latex] (blue), generating smooth trajectories with intermediate waypoints (purple).](https://arxiv.org/html/2601.15419v1/figures/robot_control2.png)

The pursuit of a unified latent space, as detailed in this work, mirrors a fundamental tenet of mathematical elegance. This research demonstrates the power of distilling complex robotic control into a shared, abstract representation – a pursuit of provable generalization rather than simply achieving functionality on specific embodiments. As Grace Hopper famously stated, “It’s easier to ask forgiveness than it is to get permission.” This sentiment subtly applies here; the framework doesn’t demand perfect adaptation for each robot, but rather establishes a common ground from which control can emerge, prioritizing a logically complete system over rigid, pre-defined solutions. The contrastive learning approach ensures that the latent space captures essential motion characteristics, promoting a solution that is, at its core, mathematically sound.

What Remains Constant?

The pursuit of a unified latent space for robotic control, as demonstrated in this work, skirts a fundamental question. Let N approach infinity – what remains invariant? The current approach successfully maps diverse embodiments to a shared representation, allowing for motion retargeting and policy transfer. However, this transfer relies on statistical correlation, not necessarily on a deeper understanding of the underlying dynamics. The system learns how motions correspond, not why they are feasible or optimal. Scaling to truly disparate robotic morphologies and environmental conditions will inevitably expose the limitations of purely data-driven approaches.

Future work must address the issue of abstraction. The latent space, while effective, remains tied to the observed data. A truly generalizable system requires a latent space grounded in physical principles – one that encodes constraints and affordances independent of specific embodiments. Contrastive learning, while a powerful tool for representation discovery, lacks the axiomatic rigor required for provable stability and performance guarantees. The elegance of a mathematically derived solution, guaranteed to converge under defined conditions, remains a distant, yet compelling, ideal.

Ultimately, the challenge lies in moving beyond empirical similarity to principled understanding. The current framework is a promising step, but the true test will be its ability to extrapolate beyond the training distribution – to generate novel, robust behaviors in unforeseen circumstances. The pursuit of cross-embodiment control is not merely an engineering problem; it is a quest for a universal language of motion, and such a language must be built on the foundations of mathematical truth.

Original article: https://arxiv.org/pdf/2601.15419.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- VCT Pacific 2026 talks finals venues, roadshows, and local talent

- Vanessa Williams hid her sexual abuse ordeal for decades because she knew her dad ‘could not have handled it’ and only revealed she’d been molested at 10 years old after he’d died

- Will Victoria Beckham get the last laugh after all? Posh Spice’s solo track shoots up the charts as social media campaign to get her to number one in ‘plot twist of the year’ gains momentum amid Brooklyn fallout

- Binance’s Bold Gambit: SENT Soars as Crypto Meets AI Farce

- Dec Donnelly admits he only lasted a week of dry January as his ‘feral’ children drove him to a glass of wine – as Ant McPartlin shares how his New Year’s resolution is inspired by young son Wilder

- SEGA Football Club Champions 2026 is now live, bringing management action to Android and iOS

- The five movies competing for an Oscar that has never been won before

- Top 3 Must-Watch Netflix Shows This Weekend: January 23–25, 2026

- New Gundam Anime Movie Sets ‘Clear’ Bar With Exclusive High Grade Gunpla Reveal

- Simulating Society: Modeling Personality in Social Media Bots

2026-01-23 12:43