Author: Denis Avetisyan

Researchers have developed a novel framework where artificial intelligence agents collaboratively refine their own code, driving continuous improvement without constant human intervention.

DARWIN utilizes a genetic algorithm and agentic LLMs to achieve demonstrable gains in model efficiency and performance through iterative self-modification.

Achieving sustained performance gains in large language models remains a significant challenge despite ongoing advancements in scaling and architectural innovation. This is addressed by ‘DARWIN: Dynamic Agentically Rewriting Self-Improving Network’, a novel framework employing a genetic algorithm with multiple GPT agents that iteratively refine each other’s training code. Experiments demonstrate that DARWIN achieves a 1.26% improvement in model FLOPS utilization and a 2.07% reduction in perplexity over five iterations, suggesting a pathway towards autonomous LLM optimization. Could this approach represent a fundamental shift in how we develop and refine increasingly complex AI systems?

The Evolving Mind: Beyond Static Intelligence

Conventional artificial intelligence systems, despite demonstrating remarkable proficiency in specific tasks, frequently encounter performance limitations stemming from their static designs. These models, built upon predetermined architectures and datasets, struggle to generalize beyond their training parameters or adapt to unforeseen circumstances. Unlike biological intelligence, which continuously refines itself through experience, many AI systems possess a fixed capacity for learning, resulting in plateaus where further improvements become increasingly difficult to achieve. This inflexibility poses a significant hurdle in developing truly versatile AI capable of tackling complex, real-world problems that demand ongoing adaptation and innovation; the inherent rigidity of these systems necessitates a shift toward more dynamic and evolutionary approaches to artificial intelligence.

Current artificial intelligence frequently encounters limitations stemming from static designs, achieving peak performance only within narrowly defined parameters. Researchers are increasingly turning to the principles of evolutionary computation – the same mechanisms driving biological adaptation – to forge a new path. This approach moves beyond simply programming intelligence and instead focuses on creating systems capable of iterative self-improvement. By implementing algorithms modeled after natural selection, mutation, and reproduction, AI can dynamically adjust its architecture and refine its processes. The result is not a fixed intelligence, but a continuously evolving one, capable of overcoming performance barriers and adapting to unforeseen challenges-a paradigm shift towards truly resilient and progressive AI systems.

The pursuit of artificial intelligence is shifting its focus from simply creating intelligent systems to developing systems capable of self-improvement. This emerging field envisions AI not as a static entity, but as a dynamic process of recursive enhancement – an ability to modify its own architecture and algorithms to achieve increasingly sophisticated performance. Such systems would transcend the limitations of current AI, which often plateaus due to fixed designs; instead, they would continuously evolve, potentially leading to capabilities far exceeding human intelligence. This isn’t merely about faster processing or larger datasets, but about building AI that can fundamentally rewrite its own code, optimize its learning processes, and even design improved versions of itself – a form of automated, accelerating evolution within the digital realm. The implications extend beyond practical applications, raising fundamental questions about the future of intelligence and the very nature of learning.

Darwinian Training: An Evolutionary Framework

DARWIN employs a genetic algorithm to iteratively refine GPT model training code. This process mimics biological evolution, beginning with a population of GPT agents each possessing a slightly varied version of the training code. These agents then “mutate” their code based on randomly applied changes. A “selection” phase evaluates the performance of each modified code version, typically through benchmark datasets or defined metrics. Code demonstrating improved performance is then prioritized for “reproduction”-its modifications are propagated to subsequent generations, either directly or through recombination with other successful code segments. This cycle of mutation, selection, and reproduction continues for multiple generations, driving a progressive improvement in the overall training process and, consequently, the capabilities of the GPT models.

The DARWIN framework employs multiple independent GPT agents, each interacting with the OpenAI API to iteratively refine the training code of other agents within the system. Each agent receives prompts instructing it to propose modifications to the training code of its peers, with the goal of improving overall model performance. These modifications are not direct edits; rather, agents propose changes which are then evaluated. The system leverages the OpenAI API for both code modification proposals and the subsequent performance assessment of those changes, creating a closed-loop system of automated peer review and enhancement. This agent-based approach facilitates a distributed and parallel exploration of the code modification space, potentially leading to performance gains beyond those achievable through manual optimization.

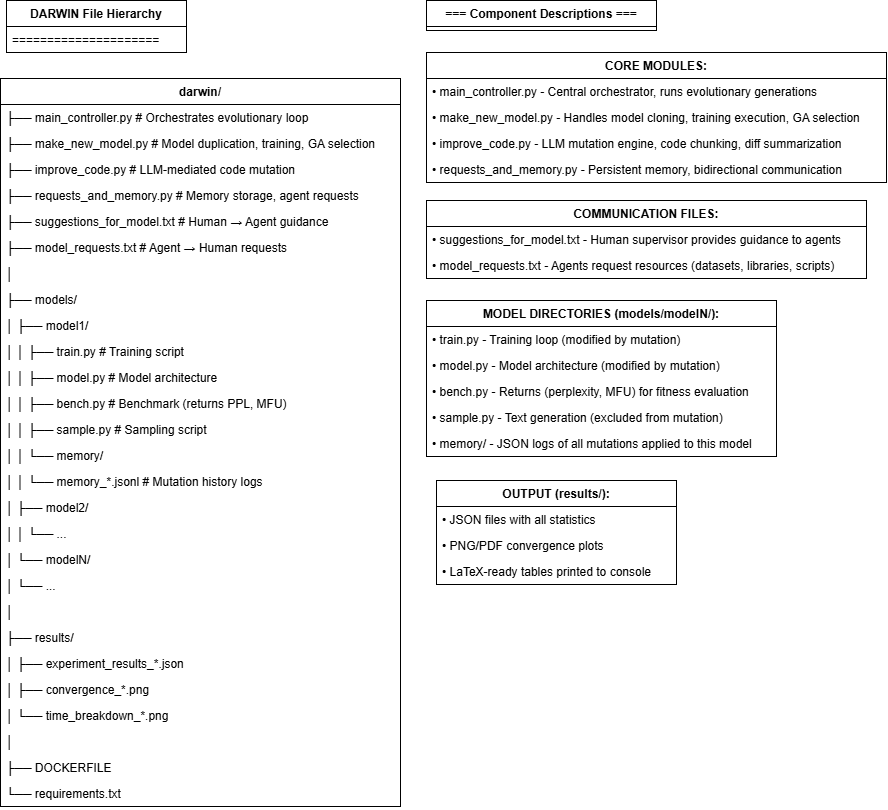

Containerization, specifically utilizing Docker, is integral to the DARWIN framework for managing the execution of independent GPT agents modifying training code. Each agent operates within its own isolated container, preventing modifications made by one agent from directly impacting the execution environment of others or the core system. This isolation mitigates risks associated with potentially destabilizing code changes and ensures reproducibility of experiments. Docker containers provide a consistent and portable environment, streamlining the deployment and execution of agents across diverse infrastructure. The use of containers also simplifies the process of rolling back changes, as previous container states can be easily restored if an agent introduces a detrimental modification.

DARWIN employs persistent memory via JSON file storage to meticulously record all code modifications implemented by GPT agents and their associated performance metrics. Each agent’s changes to the training code are logged, including timestamps and specific alterations, and are correlated with subsequent evaluations of the model’s performance on designated tasks. This data is structured in JSON format, enabling efficient storage, retrieval, and analysis of the evolutionary process. By tracking these correlations, the system identifies beneficial mutations – code changes that demonstrably improve performance – and can prioritize or replicate these changes in subsequent generations, forming the basis of the evolutionary algorithm. The JSON files serve as a historical record of the model’s evolution, facilitating reproducibility and detailed analysis of the optimization process.

Empirical Validation: Quantifying Adaptive Progress

DARWIN’s performance is quantitatively assessed using Perplexity and Model FLOPS Utilization (MFU). Perplexity measures the uncertainty of a probability model, with lower values indicating better performance. MFU represents the efficiency with which the model utilizes floating point operations per second. Following five iterations of the training process, DARWIN demonstrated a 2.07 percent reduction in Perplexity and a corresponding 1.26 percent increase in MFU. These metrics provide concrete evidence of DARWIN’s iterative improvements in both predictive capability and computational efficiency.

The system incorporates a Human-In-The-Loop (HITL) interface to facilitate directed development and specific enhancements. This interface allows human operators to review agent-proposed code modifications, providing feedback and overriding suggestions when necessary. This guided approach ensures that improvements align with desired outcomes and prevents unintended consequences during the iterative training process. The HITL component is not intended for constant intervention, but rather for strategic oversight and the implementation of targeted upgrades based on expert analysis of the agent’s proposals, effectively balancing automated optimization with human judgment.

The selection of nanoGPT as the foundational framework for DARWIN’s experimentation provides a robust and efficient base for iterative improvement due to its minimalist design and focus on core language modeling principles. NanoGPT’s comparatively small size-approximately 25 million parameters-facilitates rapid training and evaluation cycles, enabling faster iteration on architectural modifications and hyperparameter tuning. This streamlined architecture minimizes computational overhead, allowing for more extensive experimentation within limited resource constraints, and its clear, well-documented codebase simplifies the process of implementing and testing new features or optimizations, contributing to the framework’s overall stability and adaptability.

The iterative process employed by DARWIN constitutes a self-optimization loop where GPT Agents autonomously modify the training code following each training iteration. This distinguishes it from traditional machine learning pipelines where code changes are implemented by human engineers. The agents analyze performance metrics – including Perplexity and Model FLOPS Utilization – and implement adjustments to the training process itself. This cycle of automated code modification, guided by performance data, allows DARWIN to progressively refine its own training methodology, achieving improvements of 2.07% in Perplexity and 1.26% in MFU after five iterations, and representing a novel approach to automated machine learning optimization.

Toward Adaptive Systems: Expanding the Evolutionary Horizon

The architecture underpinning DARWIN diverges from reliance solely on Generative Pre-trained Transformer (GPT) models, instead drawing strength from broader optimization and workflow paradigms. Specifically, its core principles resonate with the Self-Taught Optimizer (STOP) framework, which emphasizes iterative self-improvement through internal reward signals, and HealthFlow, a system designed for dynamic, adaptive process management. This alignment suggests DARWIN isn’t simply mimicking language patterns, but rather implementing a more generalized approach to problem-solving and adaptation-one where the system actively refines its internal processes based on performance, mirroring biological evolution. By integrating concepts from these distinct fields, DARWIN aims to establish a more robust and versatile artificial intelligence, capable of tackling challenges beyond the scope of conventional language models and potentially extending to complex, real-world applications.

The computational demands of DARWIN’s iterative self-improvement process currently present a significant scaling challenge, but its architecture is expressly designed to benefit from distributed computing solutions. Leveraging grid systems or clusters of Graphics Processing Units (GPUs) would allow for parallelization of the core optimization loops, dramatically accelerating both training and error resolution phases. This shift isn’t merely about faster processing; it unlocks the potential for handling significantly larger datasets and more complex problem spaces, expanding DARWIN’s capabilities beyond its initial scope. Such an infrastructure would enable real-time adaptation and learning, transforming the system from a powerful, yet limited, demonstrator into a continuously evolving intelligence capable of tackling increasingly intricate challenges.

The architecture of DARWIN draws significant inspiration from the theoretical Gödel Machine, a conceptual construct positing an AI capable of self-reference and, crucially, self-improvement. This isn’t merely about an AI analyzing its own code; the Gödel Machine, and by extension DARWIN, aims for a system that can modify its own foundational principles – its learning algorithms and even its goals – based on internal reflection and external feedback. Such a capacity for recursive self-improvement represents a departure from conventional AI, which typically operates within pre-defined parameters. By incorporating elements of this theoretical framework, DARWIN’s developers envision a path toward genuinely autonomous intelligence – a system capable of not just performing tasks, but of understanding and evolving its own capabilities without constant human intervention, potentially unlocking a new paradigm in artificial intelligence research.

Initial evaluations of the DARWIN system revealed a 37.5% error rate when subjected to 50 distinct training instances, representing the frequency with which the system produced incorrect or suboptimal outputs. However, a crucial aspect of DARWIN’s functionality lies in its capacity for self-correction; the system successfully resolved approximately 16.67% of these initial errors through internal analysis and iterative refinement. This error resolution rate, while representing a clear area for future improvement, demonstrates the potential for autonomous error handling-a key characteristic differentiating DARWIN from traditional AI models that rely on external human intervention or retraining for correction. The interplay between error generation and autonomous resolution highlights DARWIN’s capacity for learning and adaptation, suggesting a pathway toward increasingly robust and reliable performance over time.

The pursuit of DARWIN, a self-improving AI framework, echoes a fundamental principle of systemic evolution-change is the only constant. As John von Neumann observed, “The best way to predict the future is to invent it.” This sentiment is powerfully demonstrated by DARWIN’s iterative refinement through a genetic algorithm, where LLM agents dynamically rewrite their training code. The observed improvements in FLOPS utilization and perplexity aren’t merely quantitative gains; they represent a constructed future, actively shaped by the system itself. Such iterative processes reveal that architecture, even in code, without the capacity for adaptation, is indeed fragile and ephemeral, and only systems that embrace change can sustain themselves over time.

What Lies Ahead?

The pursuit of self-improving systems, as demonstrated by the DARWIN framework, reveals not a path to perfection, but a choreography of decay. The observed improvements – a marginal gain in FLOPS utilization, a slight reduction in perplexity – are less milestones than temporary reprieves. These are not solutions, but decelerations of the inevitable slide toward diminishing returns. Each iteration refines, yes, but also introduces new vulnerabilities, subtle biases that will compound with each subsequent ‘improvement’.

The framework’s reliance on human-in-the-loop intervention is particularly telling. It is not autonomy being approached, but a shifting of responsibility. The system does not solve the problems of optimization; it merely externalizes them, demanding ever-increasing cognitive load from those who oversee it. This suggests a fundamental limitation: true self-improvement may be an illusion, a transient state of equilibrium before entropy reasserts itself.

Future work will undoubtedly focus on scaling these systems, seeking exponential gains. Yet, the more complex these networks become, the more fragile they are likely to prove. Perhaps the true challenge lies not in building systems that improve, but in understanding the graceful ways in which they fail – and anticipating the moment when stability becomes indistinguishable from the precursor to collapse.

Original article: https://arxiv.org/pdf/2602.05848.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- eFootball 2026 Epic Italian League Guardians (Thuram, Pirlo, Ferri) pack review

- Gold Rate Forecast

- Cardano Founder Ditches Toys for a Punk Rock Comeback

- The Elder Scrolls 5: Skyrim Lead Designer Doesn’t Think a Morrowind Remaster Would Hold Up Today

- Lola Young curses in candid speech after accepting her first-ever Grammy from Charli XCX

- Kim Kardashian and Lewis Hamilton are pictured after spending New Year’s Eve partying together at A-list bash – as it’s revealed how they kept their relationship secret for a month

- Building Trust in AI: A Blueprint for Safety

- A Knight of the Seven Kingdoms Season 1 Episode 4 Gets Last-Minute Change From HBO That Fans Will Love

- The vile sexual slur you DIDN’T see on Bec and Gia have the nastiest feud of the season… ALI DAHER reveals why Nine isn’t showing what really happened at the hens party

- Josh Gad and the ‘Wonder Man’ team on ‘Doorman,’ cautionary tales and his wild cameo

2026-02-08 17:42