Author: Denis Avetisyan

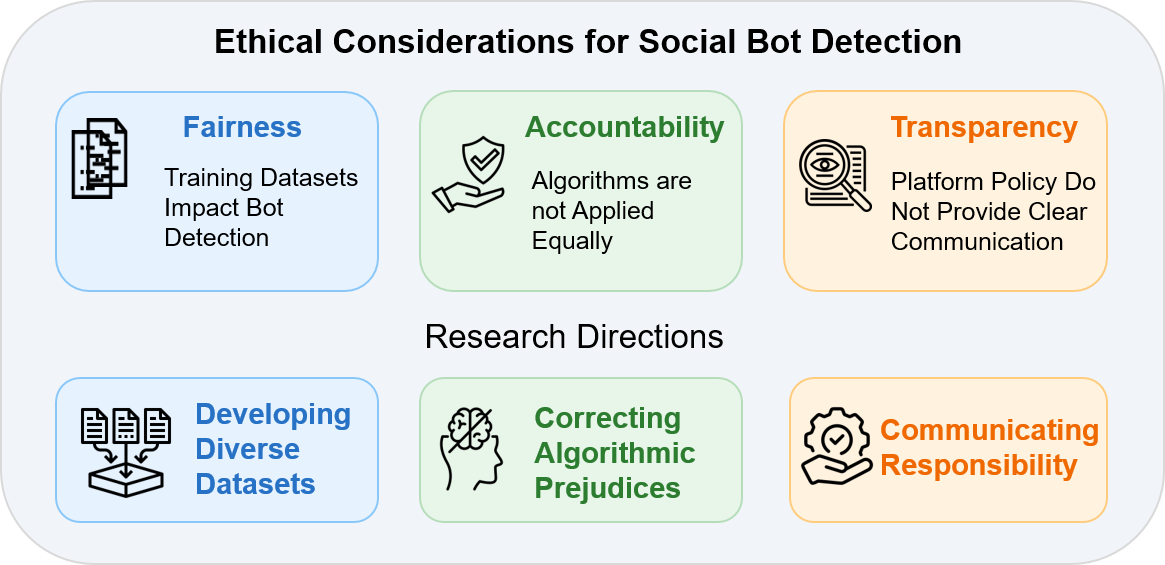

As social media bot detection becomes increasingly sophisticated, critical ethical questions about fairness, accountability, and transparency demand urgent attention.

This review examines the ethical implications of social media bot detection, focusing on algorithmic bias and the need for responsible AI development.

Despite growing recognition of the societal harms amplified by social media bots, automated detection systems raise critical ethical concerns beyond mere accuracy. This paper, ‘FATe of Bots: Ethical Considerations of Social Bot Detection’, analyzes these concerns through the lens of Fairness, Accountability, and Transparency, revealing potential biases embedded within training data and algorithmic design. Our examination demonstrates that seemingly objective bot detection can disproportionately impact certain user groups, necessitating careful consideration of socio-technical implications. How can researchers proactively develop more responsible and equitable bot detection systems that mitigate harm without exacerbating existing inequalities?

The Pervasive Threat of Automated Deception

The modern digital landscape faces a growing challenge as social media platforms become increasingly susceptible to manipulation through automated accounts, commonly known as social media bots. These aren’t simply unsophisticated programs; advancements in artificial intelligence allow for the creation of bots capable of mimicking human behavior with startling accuracy, generating realistic text, and even adapting to conversations. This capacity enables the widespread dissemination of biased information, the artificial inflation of trending topics, and the creation of illusory consensus. Consequently, genuine online discourse is distorted, making it difficult for individuals to discern authentic opinions from those manufactured by automated systems. The sheer volume of bot activity can overwhelm platforms, drowning out legitimate voices and eroding trust in the information presented, posing a significant threat to informed public debate and potentially influencing critical social and political processes.

These bots actively reshape online conversations by strategically amplifying specific narratives and viewpoints. This isn’t simply about increasing the volume of discussion; sophisticated bots can target influential users, mimic authentic engagement, and coordinate the spread of disinformation to create an artificial sense of consensus. The implications extend beyond mere annoyance, potentially swaying public opinion during critical events like elections or exacerbating societal divisions. Research suggests that bot activity can alter the perceived popularity of candidates, distort the framing of policy debates, and even incite real-world unrest by manipulating the flow of information and eroding trust in legitimate sources. Consequently, the capacity of these bots to influence public sentiment presents a substantial threat to democratic processes and social stability, demanding ongoing investigation and mitigation strategies.

The preservation of trustworthy online information hinges on effectively countering the disruptive influence of social media bots. These automated accounts don’t simply add noise; they actively degrade the quality of online discourse by artificially inflating the prominence of certain viewpoints and suppressing others. Research demonstrates that bot detection and mitigation strategies – ranging from sophisticated machine learning algorithms that identify suspicious activity patterns to platform-level interventions that limit bot reach – are vital for safeguarding the integrity of public debate. Without these measures, manipulated trends can distort perceptions, erode trust in legitimate sources, and ultimately undermine informed decision-making, posing a significant threat to democratic processes and societal well-being. Consequently, ongoing innovation in bot detection and proactive platform policies are paramount for fostering a healthier and more reliable online ecosystem.

The Pitfalls of Data-Driven Bias

Current bot detection algorithms are only as effective as their training datasets are representative. However, a substantial majority of these datasets, 94.2%, are sourced from the X platform. This heavy reliance on a single source limits the diversity of the training data and may reduce how well the algorithms generalize to other online environments and user behaviors. The resulting systemic bias affects how accurately detection systems can identify malicious actors and distinguish them from legitimate users on platforms other than X.

Algorithmic bias in bot detection stems directly from the composition of the training datasets used to develop these systems. A significant over-representation of data from a single platform, coupled with linguistic skew (84.3% of datasets predominantly feature English-language posts), introduces systematic errors in classification. Users who do not conform to the patterns prevalent in the training data are disproportionately misidentified: non-English speakers and users of underrepresented platforms are more likely to be incorrectly flagged as bots. Consequently, bot detection algorithms may exhibit reduced accuracy and fairness when applied to diverse online communities and user groups.
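One way to make this kind of disparity visible is to compare false positive rates across user groups. The snippet below is a minimal sketch, not the paper's method: the record layout, the group labels `"en"` and `"other"`, and the toy data are all assumptions made up for illustration.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """Return {group: false positive rate}, computed over human accounts only.

    records: iterable of (group, is_bot, flagged) tuples, where is_bot is the
    ground truth and flagged is the detector's decision (hypothetical layout).
    """
    humans = defaultdict(int)
    false_flags = defaultdict(int)
    for group, is_bot, flagged in records:
        if is_bot:
            continue  # true bots do not count toward the false positive rate
        humans[group] += 1
        if flagged:
            false_flags[group] += 1
    return {group: false_flags[group] / humans[group] for group in humans}

# Toy, invented data: (language group, truly a bot?, flagged as bot?)
records = [
    ("en", False, False), ("en", False, False),
    ("en", False, False), ("en", False, True),
    ("en", True, True),
    ("other", False, True), ("other", False, True),
    ("other", False, False), ("other", False, False),
    ("other", True, False),
]

rates = false_positive_rate_by_group(records)
print(rates)
```

In this toy data, genuine users in the `"other"` group are flagged twice as often as those in the `"en"` group, which is the kind of gap a skewed training set could plausibly produce.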

The remediation of bias in bot detection algorithms extends beyond purely technical adjustments and is fundamentally linked to the principles of fairness and equity in online environments. Misclassification rates stemming from biased training data can disproportionately impact specific demographic or linguistic groups, potentially leading to censorship, account restrictions, or the suppression of legitimate online discourse. Consequently, addressing algorithmic bias isn’t simply about improving detection accuracy; it’s a necessary condition for upholding inclusive online spaces where all users have equal opportunity for participation and expression, and where automated systems do not perpetuate or amplify existing societal inequalities.

Accountability and Transparency in Automated Systems

Addressing potential algorithmic bias requires comprehensive accountability mechanisms, in particular appeal processes for classifications that affect individuals or organizations. These processes should allow users to contest automated decisions and provide a clearly defined pathway for review by a human adjudicator. A workable appeal system requires a documented rationale for each initial algorithmic classification, so that the reviewer can make an informed assessment. Furthermore, data collected from appeals, including the reasons for disputes and the outcomes of review, should be systematically analyzed to identify and mitigate sources of algorithmic bias, improving the fairness and accuracy of the system over time.
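The appeal analysis described above can be sketched as a small data structure plus an aggregation. This is an illustrative assumption rather than a description of any platform's system: the `Appeal` fields, the rationale strings, and the sample appeals are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Appeal:
    account_id: str
    rationale: str       # documented reason for the original classification
    dispute_reason: str  # what the user said when contesting it
    overturned: bool     # True if the human reviewer reversed the decision

def overturn_rate_by_rationale(appeals):
    """Share of appeals overturned, grouped by the original rationale."""
    total = Counter()
    overturned = Counter()
    for appeal in appeals:
        total[appeal.rationale] += 1
        if appeal.overturned:
            overturned[appeal.rationale] += 1
    return {r: overturned[r] / total[r] for r in total}

# Invented sample appeals
appeals = [
    Appeal("a1", "high_post_frequency", "I live-post sports events", True),
    Appeal("a2", "high_post_frequency", "I run a news feed by hand", True),
    Appeal("a3", "high_post_frequency", "no reason given", False),
    Appeal("a4", "new_account", "I just joined", False),
]

rates = overturn_rate_by_rationale(appeals)
print(rates)
```

A rationale whose decisions are frequently overturned on review is a candidate source of systematic error and a natural place to start re-examining features or training data.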

Explainable AI (XAI) techniques applied to bot detection algorithms provide insight into the decision-making process beyond a simple binary classification. These techniques include feature importance analysis, which identifies the input features contributing most to a bot score, and surrogate models, which approximate the complex algorithm with a more interpretable one. Furthermore, meta-explanations, or explanations of the explanations, can detail the limitations and confidence levels associated with the bot detection process itself. By revealing the factors influencing classifications, such as posting frequency, network characteristics, or content patterns, XAI and meta-explanations enhance transparency, allowing for auditability and fostering trust in the system’s outputs. This level of detail is crucial for understanding both correct and incorrect classifications, enabling continuous improvement and addressing potential biases within the algorithms.
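Feature importance can be estimated in several ways; permutation importance is one common, model-agnostic option and is used here purely as an illustration. The sketch assumes a hypothetical `toy_model` that looks only at `posts_per_hour`, and the feature names and rows are invented. Shuffling a feature's values and measuring the drop in accuracy indicates how much the model depends on that feature.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, feature_names,
                           repeats=20, seed=0):
    """Mean accuracy drop when each feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importance = {}
    for name in feature_names:
        drops = []
        for _ in range(repeats):
            column = [r[name] for r in rows]
            rng.shuffle(column)
            # Copy each row, replacing only the shuffled feature
            shuffled = [{**r, name: v} for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importance[name] = sum(drops) / repeats
    return importance

def toy_model(row):
    # Hypothetical detector: flags accounts that post very frequently
    return row["posts_per_hour"] > 10

rows = [
    {"posts_per_hour": 1, "account_age_days": 900},
    {"posts_per_hour": 2, "account_age_days": 30},
    {"posts_per_hour": 40, "account_age_days": 400},
    {"posts_per_hour": 55, "account_age_days": 12},
]
labels = [False, False, True, True]

importance = permutation_importance(
    toy_model, rows, labels, ["posts_per_hour", "account_age_days"]
)
print(importance)
```

Because the toy model never reads `account_age_days`, shuffling it cannot change any prediction and its importance is exactly zero, while `posts_per_hour` carries all of the importance. On a real detector, the same procedure would show which signals a classification actually rests on.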

Human-in-the-Loop (HITL) verification integrates human review into automated processes to improve accuracy and address limitations inherent in algorithmic decision-making. This approach is particularly vital in bot detection, where algorithms may misclassify legitimate users or fail to identify sophisticated malicious actors. HITL systems route flagged accounts or uncertain classifications to human analysts for manual review, leveraging human judgment to confirm or override algorithmic outputs. This not only reduces error rates but also provides valuable feedback for refining the algorithm’s training data and improving its performance over time. The integration of human oversight ensures a critical safety net and allows for nuanced assessment of contextual factors that algorithms may not adequately consider.
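The routing step can be sketched as a pair of confidence thresholds: only very confident scores are handled automatically, and everything in between goes to a human analyst. The function name `route` and the threshold values are assumptions chosen for illustration, not values from the paper.

```python
def route(bot_score, auto_clear_below=0.2, auto_flag_above=0.95):
    """Decide what happens to an account given a bot score in [0, 1].

    Thresholds are illustrative; in practice they would be tuned against
    reviewer capacity and the cost of each kind of error.
    """
    if not 0.0 <= bot_score <= 1.0:
        raise ValueError("bot_score must be between 0 and 1")
    if bot_score < auto_clear_below:
        return "clear"
    if bot_score > auto_flag_above:
        return "flag"
    return "human_review"  # uncertain cases go to a human analyst

for score in (0.05, 0.5, 0.99):
    print(score, route(score))
```

Decisions made by reviewers on the `human_review` cases can then be stored as labeled examples and fed back into the training data.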

The Pursuit of Equitable Detection Strategies

Bot detection algorithms often struggle with fairness, disproportionately misclassifying users from certain demographics or with unique online behaviors. Multi-task learning offers a powerful solution by training a single model to perform multiple, related tasks simultaneously – not just identifying bots, but also understanding nuanced aspects of human user activity. This approach encourages the algorithm to develop a more generalized understanding of online behavior, rather than relying on potentially biased features that might unfairly target specific groups. By learning to differentiate between genuine and automated accounts while simultaneously recognizing diverse user patterns, the model becomes more robust and less prone to discriminatory outcomes, ultimately fostering a more equitable online experience.
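At the level of the training objective, multi-task learning typically means optimizing a weighted sum of per-task losses over shared model parameters. The sketch below shows only that combined objective, not a full model; the auxiliary task (for example, predicting whether a human account is a heavy or casual poster) and the weight `aux_weight` are hypothetical choices, not details from the paper.

```python
import math

def binary_cross_entropy(prob, label):
    """Cross-entropy for one prediction; prob is P(label == 1)."""
    eps = 1e-12
    prob = min(max(prob, eps), 1 - eps)  # avoid log(0)
    return -(label * math.log(prob) + (1 - label) * math.log(1 - prob))

def multitask_loss(bot_prob, bot_label, aux_prob, aux_label, aux_weight=0.5):
    """Bot-detection loss plus a weighted auxiliary-task loss."""
    main = binary_cross_entropy(bot_prob, bot_label)
    aux = binary_cross_entropy(aux_prob, aux_label)
    return main + aux_weight * aux

# One invented example: the model is fairly sure the account is human (label 0)
# and fairly sure it is a heavy poster (auxiliary label 1).
loss = multitask_loss(bot_prob=0.1, bot_label=0, aux_prob=0.8, aux_label=1)
print(loss)
```

Because both terms are minimized together, features that help only the bot label while failing to describe human behavior are penalized through the auxiliary term. Whether this actually reduces bias depends on the choice of auxiliary task and data.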

Bot detection algorithms often rely on single metrics, which can lead to inaccurate classifications that disproportionately affect specific user groups. Multi-faceted analysis, which examines behavioral patterns, content characteristics, network interactions, and temporal activity concurrently, mitigates this risk. This holistic approach allows the algorithms to distinguish genuine user behavior from automated activity with greater precision, reducing false positives and producing fairer outcomes. By weighting these diverse factors, the algorithms move beyond simplistic rules, recognizing that authentic online engagement is rarely defined by a single characteristic, and ultimately improving the overall accuracy and equity of bot detection systems.
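The weighting idea can be illustrated with a simple weighted average of several signals. This is a deliberately minimal sketch: the signal names mirror the four categories above, but the weights and scores are invented, and a real system would more likely learn the combination than fix it by hand.

```python
def combined_bot_score(signals, weights):
    """Weighted average of per-signal scores, each assumed to lie in [0, 1]."""
    missing = set(weights) - set(signals)
    if missing:
        raise KeyError(f"missing signals: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[k] * signals[k] for k in weights) / total_weight

# Hypothetical weights and one invented account
weights = {"behavioral": 0.3, "content": 0.3, "network": 0.2, "temporal": 0.2}
signals = {"behavioral": 0.9, "content": 0.1, "network": 0.2, "temporal": 0.1}

score = combined_bot_score(signals, weights)
print(round(score, 2))  # 0.36
```

Judged on the behavioral signal alone, this account would look strongly bot-like at 0.9, but the combined score of 0.36 reflects that its content, network, and timing look ordinary.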

A detailed examination of user-submitted reports revealed a significant number of instances where legitimate Reddit users were incorrectly flagged as automated bots. Researchers analyzed 60 distinct threads dedicated to these misclassifications, uncovering patterns in the errors and the frustration experienced by affected individuals. This analysis underscores a critical need for refinement in bot detection algorithms, moving beyond simplistic rules to more nuanced methods that account for diverse user behaviors. Furthermore, the volume of reported errors highlights the importance of establishing clear and accessible appeal processes, allowing users to contest inaccurate classifications and ensuring a fairer online experience. These findings suggest that improved algorithms, coupled with robust appeal mechanisms, are vital for maintaining trust and fostering a healthy community within the platform.

The pursuit of accurate bot detection, as detailed in this analysis of ethical considerations, demands a rigorous approach to algorithmic construction: apparent empirical success is not the same as demonstrated correctness. A remark attributed to Donald Davies captures the risk: “The only thing worse than not being able to solve a problem is believing you’ve solved it when you haven’t.” This resonates with the core argument concerning data bias; a bot detection system built upon flawed or unrepresentative data may appear effective, but it fails to achieve genuine fairness and accountability. Such a system may flag numerous accounts, yet it delivers a solution without verifiable correctness, masking underlying inequities rather than resolving them. The study emphasizes that transparency isn’t simply about revealing the algorithm, but about demonstrating its validity.

What’s Next?

The preceding analysis of automated bot detection reveals a predictable truth: the imperfections of the algorithm merely mirror those of its creators, and, more fundamentally, the data upon which it relies. Current approaches, often celebrated for incremental improvements in precision and recall, skirt the core issue. A system capable of identifying statistical anomalies, however sophisticated, remains vulnerable to biases embedded within the very fabric of social networks. The pursuit of ‘fairness’ metrics, while laudable, offers only a palliative; a statistically equitable outcome does not guarantee ethical rectitude.

Future work must abandon the illusion of objective classification. The focus should shift from detecting bots to understanding the systemic pressures that produce bot-like behavior in human users – the echo chambers, the coordinated campaigns, the algorithmic amplification of extreme viewpoints. A provably fair algorithm, one demonstrably free of bias, is a chimera. The true challenge lies in building systems that acknowledge their inherent limitations and prioritize transparency, allowing for auditability and reasoned contestation of their outputs.

Ultimately, in the chaos of data, only mathematical discipline endures. But discipline demands not merely clever engineering, but a rigorous accounting of assumptions, a frank admission of uncertainties, and a willingness to abandon solutions that, despite empirical success, lack theoretical grounding. The field requires a move beyond pragmatic heuristics toward formally verifiable models, however complex, if it is to move beyond the performance art of pattern recognition.

Original article: https://arxiv.org/pdf/2602.05200.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-08 10:43