Author: Denis Avetisyan

Researchers have developed a novel neural network architecture that bridges the gap between the reliability of formal logic and the adaptability of machine learning.

The Stochastic Boolean Circuit achieves universal and certifiable reasoning with provable guarantees, utilizing networks that reliably sample meaningful Boolean circuits.

Despite the theoretical existence of neural networks capable of emulating any Boolean function, trained models frequently fall short of this ideal, raising questions about certifiable and universal reasoning in artificial intelligence. In the paper ‘Certifiable Boolean Reasoning Is Universal’, we address this challenge by introducing a novel deep learning architecture-the Stochastic Boolean Circuit-which parameterizes distributions over Boolean circuits with the guarantee of almost surely sampling valid and certifiable circuits. We prove that, for any Boolean function [latex]f:\{0,1\}^B\to\{0,1\}[/latex], there exists a parameter configuration under which the sampled circuit computes [latex]f[/latex] with arbitrarily high probability, while achieving efficient parameter scaling for sparse Boolean functions. Can this approach unlock more robust and interpretable AI systems capable of provably correct logical reasoning?

Beyond Determinism: Embracing Uncertainty in Computation

Conventional digital circuits excel at executing precise calculations, but their inherent reliance on definitive states proves problematic when processing information gathered from the physical world. Real-world data is rarely clean; it’s invariably contaminated by noise – sensor inaccuracies, environmental interference, or inherent randomness. This noise can easily disrupt the predictable ‘on’ or ‘off’ signals that underpin Boolean logic, leading to errors and unreliable outcomes. While error correction codes can mitigate some issues, they add significant complexity and computational overhead. The fundamental limitation lies in the circuits’ inability to gracefully handle ambiguity; a slight deviation from the expected input can trigger a cascade of failures, highlighting the need for computational systems that are inherently tolerant of imperfect information and capable of extracting meaningful signals from the chaos.

The foundations of modern computation rely heavily on Boolean logic, a system demanding definitive true or false states. However, this rigidity presents a fundamental limitation when dealing with the complexities of real-world data, which is often ambiguous and imprecise. Representing uncertainty within this framework requires cumbersome workarounds, effectively forcing nuanced information into discrete, binary values. This process not only diminishes the efficiency of computation but also hinders the system’s ability to adapt to novel or incomplete data. Consequently, the inherent inflexibility of Boolean logic struggles to effectively model probabilistic scenarios or gracefully handle noisy inputs, creating a bottleneck in applications requiring intelligent interpretation and flexible responses to imperfect information.

The limitations of conventional computing necessitate a shift towards models that acknowledge the inherent uncertainty present in real-world data. Unlike the deterministic nature of Boolean logic, these emerging computational approaches leverage probabilistic reasoning, allowing systems to operate effectively even with noisy or incomplete information. This means moving beyond simply seeking a ‘correct’ answer and instead calculating the likelihood of various outcomes, enabling more robust and adaptable artificial intelligence. By embracing stochasticity – the tendency of systems to exhibit randomness – researchers aim to create systems that don’t just process data, but rather infer meaning from it, mirroring the nuanced way biological systems handle complexity and ambiguity. This paradigm shift promises to unlock new capabilities in fields ranging from medical diagnosis to financial modeling, where dealing with uncertainty is paramount.

Current computational approaches to complex reasoning face a fundamental scaling problem: as the depth of logical inference increases, the computational resources required grow at an exponential rate. This limitation stems from the need to track and manipulate a rapidly expanding number of possibilities with each successive reasoning step. While shallow inferences can be managed efficiently, even moderately complex problems quickly overwhelm available resources, rendering many practical applications intractable. The difficulty isn’t merely a matter of faster processors or increased memory; it reflects an inherent limitation in how these systems represent and process information. Consequently, researchers are actively exploring alternative paradigms that can approximate probabilistic reasoning without incurring such prohibitive costs, seeking methods that trade absolute certainty for computational feasibility and enable deeper, more nuanced analysis of uncertain data.

Introducing the Stochastic Boolean Circuit: A Framework for Probabilistic Computation

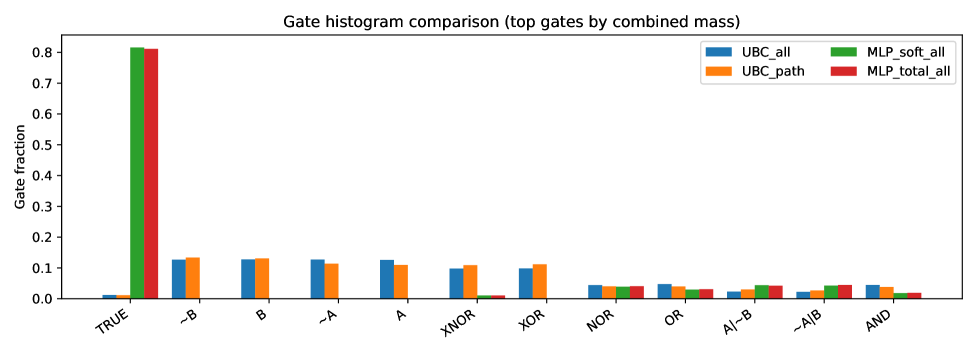

The Stochastic Boolean Circuit (SBC) represents a class of neural networks distinguished by its use of stochastic Boolean gates for computation. Unlike deterministic circuits employing fixed logic, SBCs utilize probabilistic gates where inputs are not rigidly defined as true or false, but rather as probability distributions. These gates perform Boolean operations – AND, OR, NOT, etc. – based on these probabilities, producing probabilistic outputs. This framework enables the network to be trained using standard backpropagation techniques, adjusting gate parameters to optimize performance on a given task. The core functionality lies in representing and manipulating uncertainty, allowing the SBC to model complex relationships and potentially offering increased robustness compared to traditional neural networks.

Traditional Boolean circuits operate on deterministic principles, yielding a single, fixed output for a given input. In contrast, the Stochastic Boolean Circuit leverages a probabilistic framework where gate outputs are represented as probability distributions rather than discrete values. This allows the circuit to model uncertainty and ambiguity inherent in real-world data, leading to more flexible reasoning capabilities. The probabilistic approach also enhances robustness; the circuit can maintain functionality even with noisy or incomplete input data, as the probability distributions account for variations and allow for inferences based on likely outcomes rather than requiring precise, error-free inputs. This inherent resilience differentiates it from the brittle nature of deterministic circuits, which are highly sensitive to input errors.

The Bit-Lifting Channel is a stochastic process integral to the Stochastic Boolean Circuit’s functionality, enabling the conversion of continuous-valued signals into binary values required for Boolean gate operations. This channel operates by introducing probabilistic noise during the conversion, specifically by modeling the binary output as a function of the input value and a random variable. The probability of outputting a ‘1’ is determined by a sigmoid function applied to the input value; mathematically, [latex]P(bit = 1 | x) = \sigma(x)[/latex], where σ is the sigmoid function and x is the continuous input. This probabilistic approach allows the circuit to handle uncertainty and facilitates gradient-based learning by providing a differentiable path for backpropagation through the binarization process, which is crucial for training the network.

The SM Function, or Sigmoid Mixture Function, is integral to the Stochastic Boolean Circuit’s operational stability by normalizing the outputs of each gate. This normalization process scales gate outputs to a range between 0 and 1, effectively transforming them into probabilities. Without this normalization, the cumulative effect of multiple gate operations could lead to unbounded values, causing instability during probabilistic inference. The SM Function utilizes a mixture of Gaussian distributions to achieve this normalization, allowing for a flexible representation of uncertainty and preventing signal propagation issues that can arise in deep networks. Specifically, the function’s output, [latex] p = \sigma(\sum_{i=1}^{n} w_i x_i + b) [/latex], where σ is the sigmoid function, [latex] x_i [/latex] represents the input values, [latex] w_i [/latex] are the weights, and [latex] b [/latex] is the bias, ensures that the probabilistic reasoning remains well-defined and computationally tractable.

Formalizing Uncertainty: Sampling and Computational Power

The Stochastic Boolean Circuit (SBC) is formally proven to sample a given Boolean circuit with a probability of 1, as detailed in Theorem 4.1. This means that for any valid Boolean circuit input, the SBC will generate the corresponding output with certainty over repeated trials. The proof relies on establishing a direct correspondence between the probabilistic activation of neurons within the SBC and the deterministic evaluation of the target Boolean circuit. Specifically, the SBC’s stochastic activation process is constructed to perfectly mirror the truth table of the Boolean function represented by the circuit, ensuring a sampling probability of 1 for any input vector. This characteristic is foundational to the model’s ability to accurately represent and compute Boolean functions.

Theorem 4.2 formally establishes the universal computational capacity of the Stochastic Boolean Circuit. This theorem demonstrates that, for any Boolean function [latex]f: \{0, 1\}^n \rightarrow \{0, 1\}[/latex], a circuit implementing that function can be constructed within the model, provided a sufficient number of neurons are allocated. Specifically, the theorem guarantees the existence of a circuit configuration that will accurately compute the output of [latex]f[/latex] for all possible input vectors. The number of neurons required is dependent on the complexity of [latex]f[/latex], but the theorem confirms the principle of computability for any Boolean function within the model’s framework.

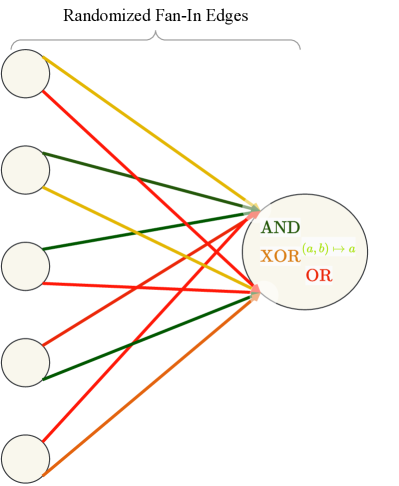

The network architecture utilizes a ‘Fan-in 2 Gate’ and ‘Fan-out 1 Gate’ constraint to manage the complexity of computations and network connections. The ‘Fan-in 2 Gate’ limits each neuron to receive input from a maximum of two preceding neurons, thereby controlling the number of weighted inputs to each processing unit. Conversely, the ‘Fan-out 1 Gate’ ensures that each neuron provides its output to only one subsequent neuron, preventing excessive branching and facilitating a more directed flow of information. These constraints collectively reduce the overall parameter count and computational demands of the network, contributing to efficient processing without sacrificing expressive power, particularly when dealing with sparse Boolean functions.

The model’s parameter count scales linearly with the size of the input for sparse Boolean functions, a characteristic achieved when the target function is an O(log B)-junta. An O(log B)-junta is a Boolean function dependent on a logarithmic number of input variables, where ‘B’ represents the size of the input space. This contrasts with the exponential scaling typical of general Boolean functions; the model’s architecture allows it to represent and compute these sparse functions with a parameter count of O(n log B), where ‘n’ is the number of neurons, effectively decoupling the complexity from the full input space size and enabling efficient computation for functions with limited dependencies.

Towards Robust Intelligence: Implications and Future Directions

The established AC0 complexity class, foundational in computational theory, defines the limits of circuits with limited depth but unrestricted fan-in. The Stochastic Boolean Circuit represents a significant advancement by extending this class, introducing probabilistic elements that fundamentally alter its computational power. This isn’t merely an addition of randomness; it’s a structured incorporation of probability that allows the circuit to represent and process information in a more nuanced way. By embracing stochasticity, the model gains the capacity to approximate complex functions previously beyond its reach and exhibits a surprising resilience to errors. The result is a computational framework that isn’t simply more expressive – capable of representing a wider range of functions – but also inherently more robust, offering a pathway towards artificial intelligence systems that can function reliably even in the presence of noisy or incomplete data.

The Stochastic Boolean Circuit distinguishes itself through an intrinsic ability to handle imperfect data, a characteristic vital for practical deployment in unpredictable environments. Unlike traditional circuits demanding precise inputs, this architecture leverages probability, allowing it to function effectively even when faced with noisy or ambiguous information. This resilience stems from the circuit’s probabilistic gates, which don’t require absolute truth values, but rather operate on probabilities, effectively ‘averaging out’ errors and uncertainties. Consequently, the system exhibits a robustness rarely seen in current AI models, potentially unlocking applications in fields like medical diagnosis, autonomous navigation, and financial modeling – all areas where real-world data is inherently flawed or incomplete, and reliable performance under such conditions is paramount.

Current artificial intelligence systems often struggle when confronted with incomplete or uncertain information, leading to unreliable outputs in real-world scenarios. This new computational framework, leveraging stochastic Boolean circuits, offers a potential solution by inherently embracing probabilistic reasoning. Unlike traditional systems that demand definitive inputs, this approach allows for calculations even with ambiguous data, effectively modeling uncertainty and reducing the risk of catastrophic failures. The ability to reason reliably under ambiguity is particularly impactful for applications like medical diagnosis, autonomous navigation, and financial modeling, where decisions must be made despite inherent data limitations, promising a shift towards more trustworthy and adaptable AI.

Ongoing research prioritizes expanding the Stochastic Boolean Circuit’s architectural capacity to tackle increasingly intricate reasoning challenges. This scaling process isn’t merely about computational power; it involves developing strategies to manage the inherent complexity of probabilistic circuits while maintaining computational efficiency. Investigations are underway to assess the framework’s performance on benchmark reasoning tasks-areas where current AI frequently falters due to sensitivity to noisy data or ambiguous inputs. Success in these endeavors promises a new generation of AI systems exhibiting enhanced robustness and reliability, capable of making sound judgments even in uncertain environments and fostering greater trust in critical applications like autonomous decision-making and complex data analysis.

The pursuit of certifiable reasoning, as detailed in this work concerning Stochastic Boolean Circuits, echoes a fundamental principle of system design: structure dictates behavior. The paper’s emphasis on constructing networks that ‘almost surely sample meaningful Boolean circuits’ reveals an elegant attempt to impose order on stochastic processes, ensuring predictable outcomes. This aligns with Dijkstra’s assertion: “It’s not enough to show something works; you must prove why it works.” The ability to certify reasoning isn’t merely about achieving a result, but understanding the underlying mechanisms that guarantee it, a principle crucial to building robust and reliable systems from the foundational level of propositional logic.

What Lies Ahead?

The demonstration of certifiable reasoning within a stochastic Boolean circuit, while a notable step, merely shifts the locus of difficulty. The architecture itself is elegant in its simplicity – a quality often mistaken for ease of implementation. The true challenge now lies not in building such circuits, but in scaling them to problems of genuine complexity. A network that can flawlessly solve toy problems is, after all, a parlor trick, not a breakthrough. The current work suggests a pathway, but the practical limitations of translating real-world data into neat Boolean representations remain considerable.

Furthermore, the reliance on ‘almost surely’ sampling introduces a subtle fragility. While the probabilistic guarantee is mathematically sound, it sidesteps the question of how to efficiently verify a correct solution in any given instance. A system that requires exponentially many samples to confirm its own accuracy is, ironically, not particularly useful. Future research must address this verification bottleneck, perhaps by exploring alternative sampling strategies or by incorporating mechanisms for self-correction.

Ultimately, the pursuit of certifiable reasoning is a search for structure. If a design feels clever, it’s probably fragile. The most robust systems will likely be those built on the fewest assumptions, relying on fundamental principles of logic rather than ad-hoc heuristics. The simplicity of the Boolean circuit is therefore not merely an aesthetic preference, but a pragmatic necessity. The next generation of neural architectures will likely echo this principle: fewer layers, fewer parameters, and a greater emphasis on provable guarantees.

Original article: https://arxiv.org/pdf/2602.05120.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- eFootball 2026 Epic Italian League Guardians (Thuram, Pirlo, Ferri) pack review

- Gold Rate Forecast

- Cardano Founder Ditches Toys for a Punk Rock Comeback

- The Elder Scrolls 5: Skyrim Lead Designer Doesn’t Think a Morrowind Remaster Would Hold Up Today

- A Knight of the Seven Kingdoms Season 1 Episode 4 Gets Last-Minute Change From HBO That Fans Will Love

- How TIME’s Film Critic Chose the 50 Most Underappreciated Movies of the 21st Century

- Bob Iger revived Disney, but challenges remain

- All The Celebrities In Taylor Swift’s Opalite Music Video: Graham Norton, Domnhall Gleeson, Cillian Murphy, Jodie Turner-Smith and More

- Building Trust in AI: A Blueprint for Safety

- Wanna eat Sukuna’s fingers? Japanese ramen shop Kamukura collabs with Jujutsu Kaisen for a cursed object-themed menu

2026-02-08 00:48