Author: Denis Avetisyan

New research reveals how people interpret and react to errors made by robots operating in public spaces, highlighting the complexities of real-world human-robot interaction.

Field studies demonstrate that while human social signals provide valuable information about robot errors, effective error recovery requires careful contextualization and interpretation.

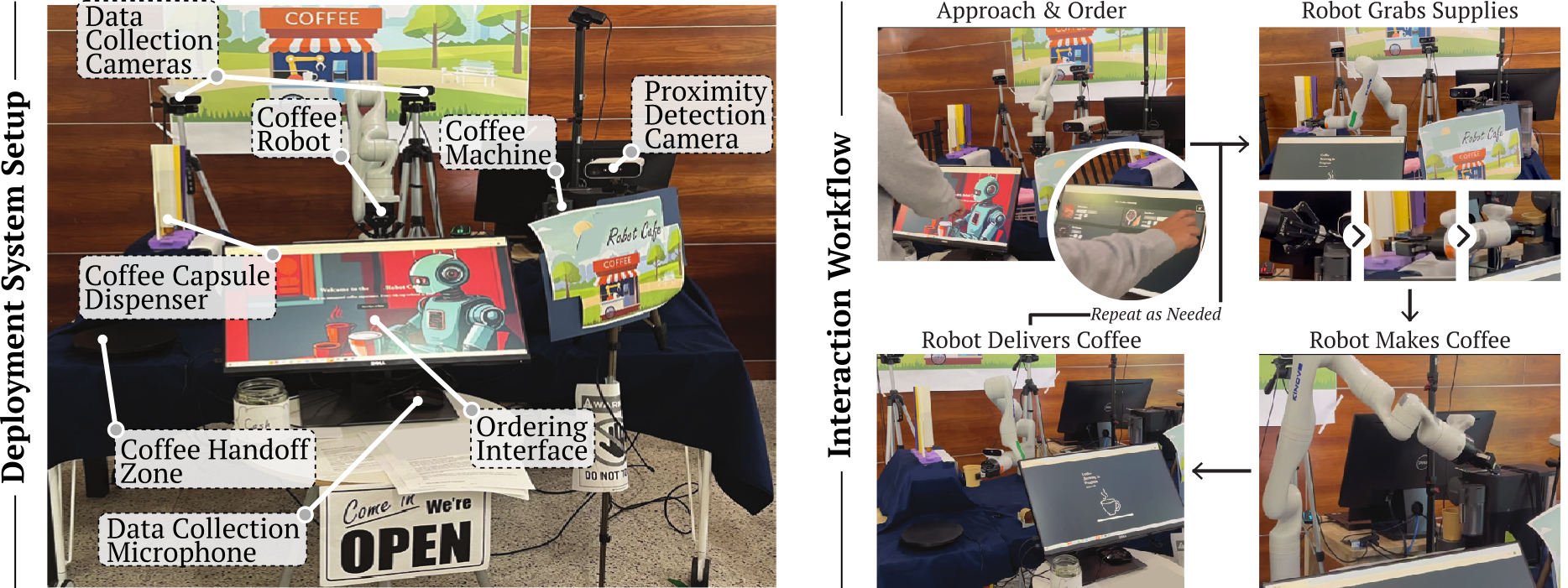

While robotic systems increasingly operate in human environments, understanding nuanced human responses to inevitable errors remains a challenge. This paper, “Signal or ‘Noise’: Human Reactions to Robot Errors in the Wild”, investigates how people socially signal their reactions to a robot’s mistakes during public interactions, deploying a coffee-serving robot with [latex]N=49[/latex] participants. Our findings reveal that these signals are rich and varied, yet often “noisy,” requiring careful contextualization to be effectively interpreted by adaptive robotic systems. How can we best leverage, and filter, these natural human signals to create more robust and intuitive human-robot interactions in real-world settings?

Predictable Robots: The Illusion of Intuitive Interaction

Effective collaboration between humans and robots isn’t simply about technical functionality; it fundamentally relies on anticipating how people expect a robot to behave. These expectations, often formed through prior experiences with technology or interactions with other people, create internal ‘mental models’ of how the robot should operate – its capabilities, intentions, and even personality. When a robot’s actions align with these pre-conceived notions, interaction feels natural and intuitive, fostering trust and efficient teamwork. Conversely, discrepancies between a user’s mental model and the robot’s actual behavior create confusion, frustration, and potentially even a rejection of the technology. Therefore, designing robots that proactively consider and respond to human expectations is paramount, demanding a focus on predictable, interpretable actions and clear communication of the robot’s internal state and goals.

When a robot’s actions deviate from a user’s expectations – a phenomenon termed ‘Robot Errors’ – interaction quickly becomes strained. These errors aren’t simply functional missteps; they represent a breakdown in anticipated social behavior, triggering feelings of frustration and eroding trust in the machine. A robot that misunderstands a gesture, responds inappropriately to a vocal cue, or fails to acknowledge a social norm doesn’t just perform a task incorrectly – it violates an implicit contract of predictable interaction. This perceived inconsistency introduces uncertainty, forcing the user to expend cognitive effort to reinterpret the robot’s intent and adjust their own behavior, ultimately hindering collaboration and fostering negative perceptions of the technology’s reliability and social intelligence.

Existing methods for enabling robots to understand human interaction frequently struggle with the subtle complexities of social signaling. While a robot might recognize basic commands, interpreting nuanced cues – such as shifts in body language, vocal tone, or even micro-expressions – proves considerably more challenging. This limitation stems from a reliance on simplified models that often fail to capture the full range of human communicative behaviors, particularly those influenced by context, cultural background, and individual personality. Consequently, robots can misinterpret intentions, respond inappropriately, or fail to recognize when a user is experiencing difficulty, hindering effective collaboration and eroding trust. Addressing this requires advancements in areas like affective computing, machine learning, and the development of more sophisticated models of human social intelligence, moving beyond simple command recognition towards genuine interactive understanding.

Reading the Room: Decoding the Signals We Miss

Humans communicate internal states and reactions through a continuous stream of ‘Social Signals’. These signals encompass both verbal cues – including speech rate, tone, and specific word choices – and non-verbal behaviors such as facial expressions, body posture, gestures, and eye contact. While often subconscious, these signals provide information regarding emotional state, attentiveness, agreement or disagreement, and intent. The transmission of social signals is a fundamental aspect of human interaction, facilitating coordination, establishing rapport, and conveying meaning beyond the explicit content of communication. Observable signals can be categorized as either ‘expressed’ – intentionally conveyed – or ‘emitted’ – occurring without conscious control, though both contribute to the overall communicative effect.

The interpretation of social signals is not inherent to the signal itself, but rather dependent on the surrounding context and situational factors. A smile, for example, can indicate happiness, politeness, or even sarcasm depending on the accompanying verbal cues, the relationship between the individuals, and the broader environment. Similarly, direct eye contact can signify engagement and honesty in some cultures, but be considered aggressive or disrespectful in others. Accurate interpretation, therefore, requires ‘Contextualization’ – the process of integrating the observed signal with all available information regarding the physical setting, the social dynamics, and the cultural background of the participants to arrive at a meaningful understanding.
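The idea that one signal can mean different things in different contexts can be made concrete with a toy rule set. This is a minimal sketch for illustration only: the `Context` fields, the signal names, and the output labels are all hypothetical and are not taken from the paper, which does not describe a rule-based interpreter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Situational facts around an observed signal (all fields hypothetical)."""
    robot_just_erred: bool    # did the robot make an error moments ago?
    directed_at_robot: bool   # is the person facing or addressing the robot?


def interpret(signal: str, ctx: Context) -> str:
    """Map a raw signal to a coarse reading that depends on context."""
    if not ctx.directed_at_robot:
        # A smile aimed at a friend says little about the robot.
        return "likely noise"
    if signal == "smile":
        if ctx.robot_just_erred:
            return "possible amusement at error"
        return "positive or polite"
    if signal == "frown":
        if ctx.robot_just_erred:
            return "possible error reaction"
        return "unclear"
    return "unclear"


after_error = Context(robot_just_erred=True, directed_at_robot=True)
side_chat = Context(robot_just_erred=True, directed_at_robot=False)

print(interpret("smile", after_error))
print(interpret("smile", side_chat))
```

The same `"smile"` input yields two different readings here, which is the whole point: the label comes from the signal and its context together, never from the signal alone.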

Group interactions significantly increase the complexity of interpreting social signals due to the compounding effect of multiple communicators. Studies indicate a marked rise in ‘irrelevant speech’ – utterances not directly related to the primary task or topic – within group settings. Specifically, observed rates of irrelevant speech average 4.18 sentences per minute in group conversations, a substantial increase from the 0.10 sentences per minute recorded during one-on-one interactions. This heightened volume of communication necessitates more sophisticated analysis to differentiate meaningful signals from background conversational noise and accurately assess individual and collective states.
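To put the two rates side by side, the arithmetic can be sketched as follows. The `Session` structure and the pooled-rate calculation are assumptions made for illustration; the article does not say how the per-minute averages were aggregated. Only the two constants, 4.18 and 0.10, come from the text.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One recorded interaction (hypothetical structure, not from the paper)."""
    irrelevant_sentences: int
    duration_seconds: float


def irrelevant_rate_per_minute(sessions):
    """Pooled rate: total irrelevant sentences divided by total minutes."""
    total_sentences = sum(s.irrelevant_sentences for s in sessions)
    total_minutes = sum(s.duration_seconds for s in sessions) / 60.0
    if total_minutes == 0:
        raise ValueError("no recorded interaction time")
    return total_sentences / total_minutes


# Rates quoted in the article (sentences per minute).
GROUP_RATE = 4.18
ONE_ON_ONE_RATE = 0.10

# How many times more irrelevant speech a robot must filter in group settings.
ratio = GROUP_RATE / ONE_ON_ONE_RATE
print(f"group / one-on-one ratio: {ratio:.1f}x")

# Made-up sessions, only to show how the helper is called.
example = [Session(irrelevant_sentences=3, duration_seconds=60.0),
           Session(irrelevant_sentences=1, duration_seconds=60.0)]
print(irrelevant_rate_per_minute(example))
```

On the quoted figures, group settings carry roughly forty times more off-task speech per minute than one-on-one interactions.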

Volunteered information, encompassing statements made beyond direct questioning, serves as a valuable supplemental data source for assessing user state. This data can include unsolicited details regarding task difficulty, emotional responses, or perceived usability, offering insights not readily apparent through observation of behavioral signals alone. Analysis of volunteered information requires natural language processing techniques to extract relevant sentiments and key topics, providing a more comprehensive understanding of the user’s internal state and experience. The combination of observed signals and volunteered information allows for a more robust and accurate assessment than reliance on either data source independently.

Autonomous Coffee: Observing Interaction in the Wild

The Autonomous Coffee Robot was deployed in a public environment as a platform for observational data collection regarding human-robot interaction. All data acquisition procedures were designed with ethical considerations as a primary concern; participant interaction is initiated only after presentation of an informed consent notice via the robot’s interface. Collected data includes interaction duration, order frequency, and expressed sentiment, but excludes any personally identifiable information unless explicitly and voluntarily provided by the participant. Data storage utilizes encryption at rest and in transit, adhering to relevant data privacy regulations, and all collected data is anonymized prior to analysis to protect participant privacy.

The Autonomous Coffee Robot employs an ‘Ordering Interface’ consisting of a touchscreen display and integrated audio prompts to manage user interaction and secure informed consent. Prior to beverage preparation, the interface presents a clear explanation of data collection practices, specifying the types of data recorded – including order history, interaction timestamps, and anonymized demographic information – and the purpose of this data collection for research and development. Users are required to actively acknowledge and consent to these terms via a touchscreen confirmation before proceeding with their order; data recording commences only upon explicit consent. The interface also includes a readily accessible ‘Privacy Policy’ detailing data handling procedures and user rights, and provides an option to request data deletion.
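The rule that recording starts only after explicit consent can be sketched as a small gate in front of the logger. This is an illustrative sketch, not the robot's actual software: the class name, method names, and event strings are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentGatedRecorder:
    """Stores interaction events only after consent (hypothetical design)."""
    consented: bool = False
    events: list = field(default_factory=list)

    def give_consent(self) -> None:
        """Called when the user confirms consent on the touchscreen."""
        self.consented = True

    def record(self, event: str) -> bool:
        """Store the event if consent was given; report whether it was stored."""
        if not self.consented:
            return False  # nothing is kept before explicit consent
        self.events.append(event)
        return True

    def delete_all(self) -> None:
        """Honor a data deletion request by discarding stored events."""
        self.events.clear()


recorder = ConsentGatedRecorder()
dropped = recorder.record("screen_touched")   # before consent: discarded
recorder.give_consent()
kept = recorder.record("order_placed")        # after consent: stored
print(dropped, kept, recorder.events)
```

Placing the check inside `record` rather than at each call site means no caller can accidentally log an event before consent.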

The Autonomous Coffee Robot’s safety architecture relies on a combined ‘Proximity Handler’ and ‘Intrusion Detector’ system. The ‘Proximity Handler’ utilizes ultrasonic and infrared sensors to create a dynamic safety radius around the robot, continuously monitoring for nearby obstacles and pedestrians. Simultaneously, the ‘Intrusion Detector’ employs a combination of pressure sensors in the robot’s base and computer vision to identify potential physical tampering or attempts to force movement. Data from both systems is fed to the ‘Robot Controller’, which initiates evasive maneuvers – including stopping or altering course – to prevent collisions and maintain a safe operational distance. This layered approach ensures redundant safety checks and rapid response to both predictable obstacles and unexpected intrusions.
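A layered check of this kind might combine the two inputs as sketched below. The threshold values, the `Action` names, and the `decide` function are assumptions chosen for illustration; the article does not give distances or describe the controller's actual logic.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    ALTER_COURSE = "alter_course"
    STOP = "stop"


# Hypothetical safety radii in meters; not values from the paper.
STOP_RADIUS_M = 0.5
CAUTION_RADIUS_M = 1.5


def decide(nearest_obstacle_m: float, tampering_detected: bool) -> Action:
    """Combine proximity and intrusion inputs into one controller action."""
    # Tampering or a very close obstacle always wins: stop immediately.
    if tampering_detected or nearest_obstacle_m <= STOP_RADIUS_M:
        return Action.STOP
    # Something is nearby but not critical: steer around it.
    if nearest_obstacle_m <= CAUTION_RADIUS_M:
        return Action.ALTER_COURSE
    return Action.CONTINUE


print(decide(3.0, False))
print(decide(1.0, False))
print(decide(3.0, True))
```

Checking the most severe condition first keeps the ordering explicit: an intrusion signal overrides any reading from the proximity sensors.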

The Autonomous Coffee Robot employs Large Language Model (LLM)-powered interaction to move beyond scripted responses and facilitate more nuanced communication with users. This system enables the robot to process and generate human-like conversational turns, improving user experience and fostering a more natural interaction flow. Beyond simple order taking, the LLM allows the robot to dynamically adjust its responses based on user cues and to elicit specific signals, such as confirmation of preferences or clarification of ambiguous requests. This capability is crucial for gathering richer data regarding user behavior and preferences during the interaction, going beyond explicit inputs to capture implicit signals conveyed through natural language.

Real-World Validation: The Predictability of Human Assistance

Moving beyond the controlled conditions of a laboratory is essential for assessing the true viability of human-robot interaction systems. Recent work emphasizes the importance of ‘field deployment’, that is, subjecting the technology to real-world testing in uncontrolled environments such as public spaces, homes, and offices. This approach allows researchers to capture the complexities of genuine human behavior, accounting for unpredictable variables such as background noise, varying lighting conditions, and the diverse range of individual user responses. By evaluating the system’s performance outside of carefully curated settings, developers gain critical insights into its robustness and identify potential failure points that would likely remain hidden during traditional testing methods, ultimately paving the way for more reliable and adaptable robotic companions.

Analysis of field deployment data indicates a remarkably consistent human response to robotic failures; 97.67% of instances where the robot made an error prompted a detectable reaction from the user. This finding suggests a robust and reliable level of user engagement with the system, and confirms that individuals are actively monitoring robotic actions and readily acknowledge deviations from expected behavior. The high rate of elicited reactions is particularly significant because it establishes a foundation for developing adaptive systems capable of recognizing user awareness of errors, which is a crucial step toward building trust and facilitating seamless human-robot collaboration. This consistent responsiveness highlights the potential for real-time error correction and improved interaction protocols.

Analysis of real-world interactions revealed a significant tendency for users to spontaneously offer clarifying information when a robot committed an error, occurring in 58.14% of instances. This volunteered data wasn’t simply a reaction to the mistake, but a contextualization of it; users often explained the situation, clarified their intentions, or offered suggestions for correction. This behavior suggests that individuals don’t treat robotic errors as isolated events, but actively attempt to bridge the communication gap and aid the robot in understanding the environment and user goals. Consequently, this freely provided information offers a valuable window into the user’s internal state – their expectations, their understanding of the situation, and their willingness to collaborate – presenting a crucial resource for building more adaptable and trustworthy robotic systems.
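The article reports 97.67% and 58.14% without raw counts. As a sanity check, one can ask which numbers of error instances could produce both percentages at two decimal places. The search below is an arithmetic inference only: the function name and the search bound are hypothetical, and the resulting count is not a figure reported in the text.

```python
def consistent_denominators(percentages, max_n=200, decimals=2):
    """Return every n <= max_n for which each percentage equals k/n
    (for some whole number k) after rounding to `decimals` places."""
    matches = []
    for n in range(1, max_n + 1):
        if all(
            any(round(100 * k / n, decimals) == p for k in range(n + 1))
            for p in percentages
        ):
            matches.append(n)
    return matches


candidates = consistent_denominators([97.67, 58.14])
print(candidates[0])             # smallest count consistent with both figures
print(round(100 * 42 / 43, 2))   # reaction rate if 42 of 43 errors drew a reaction
print(round(100 * 25 / 43, 2))   # volunteered-information rate if 25 of 43 did
```

The smallest count that fits both figures is 43 error instances, which would correspond to 42 reactions and 25 cases of volunteered information. Treat this as a plausibility check rather than a reported sample size.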

The capacity for humans and robots to collaborate effectively hinges on a deep understanding of the subtle interplay during interaction, particularly when errors occur. Research indicates that a significant majority of robotic failures don’t simply halt progress, but actively elicit a response from users, suggesting a consistent level of engagement even in the face of imperfection. More compellingly, over half of these instances prompted volunteered information from the user, revealing an intrinsic willingness to offer assistance and clarify the situation. This dynamic highlights a crucial point: fostering trust isn’t about eliminating errors entirely, but rather about establishing a predictable and understandable response to those errors, allowing humans to feel informed, engaged, and capable of contributing to a shared problem-solving process, ultimately paving the way for truly seamless human-robot collaboration.

The study meticulously details the chaos of real-world deployment, confirming a suspicion long held: elegant error handling quickly devolves into managing human perception of failure. It appears the ‘signal’ is perpetually buried under layers of ‘noise,’ demanding contextualization just to register as a problem. Arthur C. Clarke observed, “Any sufficiently advanced technology is indistinguishable from magic.” This rings true; the expectation of seamless operation creates a fragile illusion, and even minor robotic stumbles shatter it. The research highlights how quickly that ‘magic’ fades, leaving developers to grapple with the very human tendency to interpret imperfection as systemic breakdown. Production, as always, finds a way to expose the cracks in the theory.

So, What Breaks Next?

This work, predictably, confirms that humans are messy. Rich social signals abound when robots stumble – a veritable firehose of non-verbal data. The surprise, of course, isn’t that the signals exist, but that interpreting them as anything other than ‘noise until proven otherwise’ remains a challenge. The ambition to build robots that seamlessly integrate into human spaces requires anticipating every possible failure mode, and then correctly deciphering the resulting exasperated sighs, pointed glances, and muttered complaints. Good luck with that.

Future work will inevitably focus on contextualization – because simply detecting an error isn’t enough. The real trick is understanding why the human is annoyed. Was it a critical failure, a minor inconvenience, or just a demonstration of the robot’s questionable life choices? The pursuit of truly adaptive systems necessitates moving beyond simple error recovery to anticipating human reaction, and preemptively apologizing for the robot’s inevitable shortcomings.

One can’t help but suspect that this entire field is a sophisticated exercise in renaming existing problems. ‘Human-robot interaction’ is merely a fresh coat of paint on ‘building things that occasionally break and then trying to placate the users.’ Production, as always, will be the ultimate arbiter. If it works – wait.

Original article: https://arxiv.org/pdf/2602.05010.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-07 01:12