Author: Denis Avetisyan

Researchers have developed a novel data pipeline that allows robots to learn complex manipulation tasks from human demonstrations, regardless of robot type or viewing angle.

A Real-Sim-Real pipeline leveraging 3D Gaussian Splatting and tactile retargeting enables effective training of robotic Vision-Language-Action models for sim-to-real transfer.

Scaling dexterous manipulation in robotics is hindered by the scarcity of large, high-fidelity datasets required for training advanced Vision-Language-Action (VLA) models. This work, ‘RoboPaint: From Human Demonstration to Any Robot and Any View’, introduces a Real-Sim-Real pipeline that efficiently transforms human demonstrations into robot-executable data, bypassing the need for costly and time-consuming teleoperation. By leveraging tactile-aware retargeting and photorealistic simulation, the authors generate datasets that enable an 80% average success rate on complex tasks like pick-and-place, pushing, and pouring, effectively “painting” robot training data from human expertise. Could this approach unlock a new era of adaptable and cost-effective robotic systems for a wider range of real-world applications?

Decoding the Reality Gap: Why Robots Struggle to Mimic Us

Historically, robot control has depended heavily on teleoperation, in which a human operator directly commands the robot’s movements. While effective for specific tasks, this approach scales poorly to complex scenarios or large robot fleets. The need for a skilled human for every robot, together with the delays inherent in transmitting commands, restricts the speed and efficiency of operation. Teleoperation also adapts poorly: even slight environmental changes or unexpected obstacles require immediate human intervention, hindering a robot’s ability to function autonomously in dynamic, real-world settings. Researchers are therefore pursuing methods that reduce reliance on constant human oversight and give robots greater independent decision-making capabilities.

The successful imitation of human actions by robots faces a significant hurdle known as the ‘embodiment gap’. This arises from fundamental differences in the physical structure – morphology – and sensory apparatus between humans and their robotic counterparts. A human can effortlessly grasp an object using nuanced tactile feedback and a complex hand structure, while a robot may possess a gripper with limited degrees of freedom and rely on vastly different sensor data, such as force-torque sensors or vision systems. Consequently, directly translating human demonstrations – movements recorded from a human performing a task – to a robot often results in clumsy or failed attempts. The robot struggles to interpret the intended actions due to the mismatch in physical capabilities and how information about the environment is perceived, necessitating complex algorithms to adapt and reconcile these discrepancies before effective imitation can occur.

The acquisition of robust manipulation skills in robotics is fundamentally bottlenecked by the challenges of generating sufficient training data. Unlike deep learning applications that can leverage vast, readily available datasets – like images or text – training robots to physically interact with the world demands meticulously curated examples of successful task completion. Each scenario requires precise sensor data, robot configurations, and corresponding actions, making data collection a laborious and expensive undertaking. Simulating these interactions presents its own difficulties, as accurately modeling the complexities of physical contact, friction, and object deformation remains a significant hurdle. Consequently, the scarcity of diverse and realistic training data limits a robot’s ability to generalize to novel situations, hindering the development of truly adaptable and reliable manipulation capabilities.

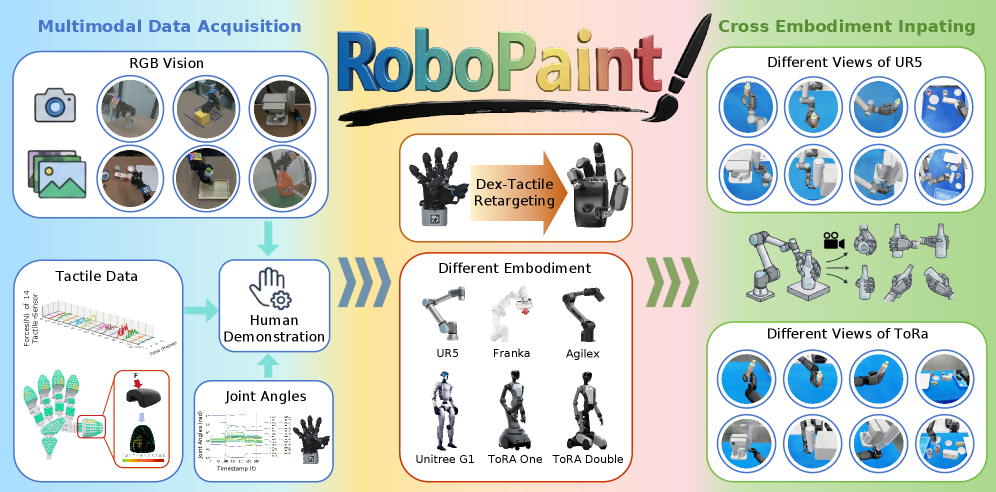

Forging a Synthetic Reality: The RoboPaint System

The RoboPaint system uses a Real-Sim-Real data pipeline with three core stages: real-world data acquisition, simulation environment creation, and model training. Human manipulation demonstrations are first captured with instrumented gloves and multi-view cameras, which record both motion and tactile interaction. This real-world data then informs the creation of a photorealistic simulation environment, reconstructed via 3D Gaussian Splatting and formatted using Universal Scene Description. Finally, the simulated environment is used to generate a large dataset for training robust Vision-Language-Action (VLA) models, improving their ability to generalize to novel manipulation tasks and real-world conditions.

The RoboPaint data acquisition process relies on a specialized Data Acquisition Room outfitted with instrumented gloves and a multi-view camera system. The instrumented gloves provide precise, high-resolution tracking of hand pose and applied forces during demonstration, capturing both motion and tactile information. The multi-view camera array, consisting of synchronized cameras positioned around the workspace, enables comprehensive 3D reconstruction of the demonstrations from multiple perspectives. This combined sensor suite captures human manipulation actions accurately, producing a dataset suitable for training and validating robotic manipulation models.

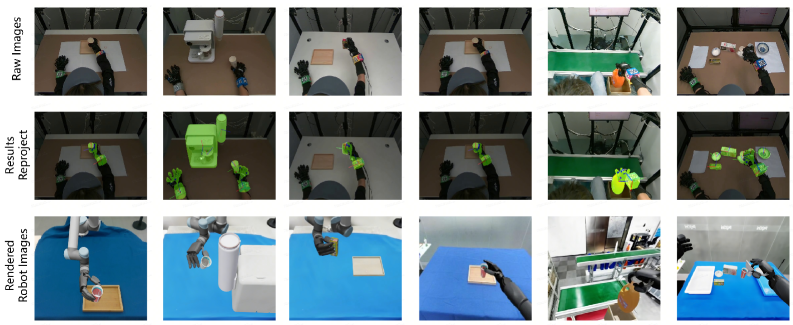

The RoboPaint pipeline employs 3D Gaussian Splatting to reconstruct the physical environment from multi-view camera data, generating a detailed 3D representation. This reconstruction is then formatted using Universal Scene Description (USD), a framework for building complex, scalable digital twins. USD supports high-fidelity simulation by providing a standardized scene description along with physically-based rendering, material definitions, and articulation of objects within the virtual environment. Together, these allow the pipeline to produce synthetic data that closely mirrors the real-world Data Acquisition Room, which is crucial for training robust Vision-Language-Action models.
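At the heart of 3D Gaussian Splatting’s rendering is front-to-back alpha compositing: the Gaussians covering a pixel are sorted by depth and blended as C = Σ_i c_i α_i Π_{j<i}(1 − α_j), where α_i is a Gaussian’s opacity scaled by its falloff at that pixel. The sketch below shows only that blending step for one pixel, assuming the Gaussians are already projected to screen space and simplified to isotropic blobs. Projection from 3D, anisotropic covariances, spherical-harmonics colour and the tile-based rasterizer are omitted; the `Splat` type and function names are hypothetical, not RoboPaint’s or any library’s API, and the 0.99 alpha cap and 1/255 skip threshold follow common 3DGS practice.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    """A Gaussian already projected to screen space (hypothetical simplified type)."""
    mean: tuple[float, float]          # 2D centre in pixels
    sigma: float                       # isotropic std. dev. in pixels (real 3DGS uses a 2x2 covariance)
    depth: float                       # camera-space depth, used for sorting
    opacity: float                     # learned opacity in [0, 1]
    color: tuple[float, float, float]  # RGB in [0, 1]


def render_pixel(px: float, py: float, splats: list[Splat],
                 background=(0.0, 0.0, 0.0), t_min: float = 1e-4):
    """Front-to-back alpha compositing of depth-sorted splats at a single pixel."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # running product of (1 - alpha_j) over splats already composited
    for s in sorted(splats, key=lambda sp: sp.depth):  # nearest splat first
        dx, dy = px - s.mean[0], py - s.mean[1]
        falloff = math.exp(-0.5 * (dx * dx + dy * dy) / (s.sigma ** 2))
        alpha = min(0.99, s.opacity * falloff)
        if alpha < 1.0 / 255.0:  # negligible contribution, skip it
            continue
        for k in range(3):
            rgb[k] += transmittance * alpha * s.color[k]
        transmittance *= 1.0 - alpha
        if transmittance < t_min:  # pixel is effectively opaque: stop early
            break
    # Any remaining transmittance lets the background show through.
    return tuple(rgb[k] + transmittance * background[k] for k in range(3))


red_near = Splat(mean=(0.0, 0.0), sigma=1.0, depth=1.0, opacity=0.5, color=(1.0, 0.0, 0.0))
blue_far = Splat(mean=(0.0, 0.0), sigma=1.0, depth=2.0, opacity=1.0, color=(0.0, 0.0, 1.0))
print(render_pixel(0.0, 0.0, [blue_far, red_near]))
```

Because splats are sorted inside `render_pixel`, the order in which they are passed in does not matter; the half-transparent red splat in front lets roughly half of the blue one behind it through.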

![This real-sim-real pipeline leverages multiview data capture with instrumented gloves, object pose estimation, and Dex-Tactile retargeting to transfer human demonstrations to a simulated environment reconstructed via 3D Gaussian Splatting, enabling robot control and demonstration recording from various viewpoints.](https://arxiv.org/html/2602.05325v1/x2.png)

Bridging the Gap: Dex-Tactile Retargeting and Multi-Modal Data

Dex-Tactile Retargeting produces robust robot manipulation by jointly optimizing for kinematic alignment and contact consistency. Kinematic alignment keeps the robot’s motion close to the demonstrated hand motion while staying within the robot’s joint limits; contact consistency keeps the robot’s contacts with the object consistent with those recorded during the demonstration, so the interaction remains stable throughout the manipulation. This dual objective addresses a key challenge in robot learning: generating motions that are both feasible for the robot’s morphology and reliable at maintaining contact during a task. Optimizing for both yields feasible, stable robot motions, improving success rates and reducing the risk of collisions or dropped objects in complex manipulation sequences.
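To make the dual objective concrete, here is a toy sketch. A planar two-joint “finger” chooses its joint angles q by projected gradient descent on w_k·‖tip(q) − p_human‖² (kinematic alignment) plus w_c·‖tip(q) − x_contact‖², where the second term is active only while a contact flag is set (contact consistency), and the joints are clamped to their limits after every step. The finger model, link lengths, limits, weights, the binary flag standing in for a glove tactile reading, and all function names are hypothetical; RoboPaint’s actual formulation and robot hands are not reproduced here.

```python
import math

# Hypothetical planar finger: two links, two revolute joints.
LINK_LENGTHS = (0.05, 0.04)              # metres
JOINT_LIMITS = ((0.0, 1.6), (0.0, 1.8))  # radians, (lower, upper) per joint


def fingertip(q):
    """Forward kinematics: fingertip (x, y) for joint angles q = (q1, q2)."""
    l1, l2 = LINK_LENGTHS
    x = l1 * math.cos(q[0]) + l2 * math.cos(q[0] + q[1])
    y = l1 * math.sin(q[0]) + l2 * math.sin(q[0] + q[1])
    return x, y


def sq_dist(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def retarget_cost(q, human_tip, contact_point, in_contact, w_kin=1.0, w_contact=10.0):
    """Kinematic-alignment term plus a contact term that only counts while touching."""
    tip = fingertip(q)
    cost = w_kin * sq_dist(tip, human_tip)
    if in_contact:
        cost += w_contact * sq_dist(tip, contact_point)
    return cost


def clamp_to_limits(q):
    """Project joint angles back into their allowed ranges."""
    return [min(max(qi, lo), hi) for qi, (lo, hi) in zip(q, JOINT_LIMITS)]


def retarget(q0, human_tip, contact_point, in_contact, lr=2.0, steps=2000, eps=1e-6):
    """Projected gradient descent using central-difference gradients."""
    q = clamp_to_limits(list(q0))
    for _ in range(steps):
        grad = []
        for i in range(len(q)):
            qp, qm = q.copy(), q.copy()
            qp[i] += eps
            qm[i] -= eps
            grad.append((retarget_cost(qp, human_tip, contact_point, in_contact)
                         - retarget_cost(qm, human_tip, contact_point, in_contact)) / (2 * eps))
        q = clamp_to_limits([qi - lr * g for qi, g in zip(q, grad)])
    return q


# Toy usage: the "human" fingertip and the recorded contact point coincide here.
demo_tip = fingertip((0.8, 0.9))
q_robot = retarget((0.2, 0.2), human_tip=demo_tip, contact_point=demo_tip, in_contact=True)
print([round(qi, 3) for qi in q_robot])
```

When the human fingertip and the contact point disagree, the contact weight decides how far the solution leans toward the contact; clamping after each step is the simplest way to keep every iterate physically feasible.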

Dex-Tactile Retargeting leverages a data stream integrating visual information – typically RGB or RGB-D images – with kinematic data detailing robot joint angles and end-effector pose. Critically, the system incorporates tactile signal information, derived from sensors embedded in the robot’s grippers or fingertips, providing direct feedback on contact forces and slippage. This multi-modal data fusion allows the system to not only understand the robot’s actions and the object’s visual properties, but also to perceive the physical interaction between them, which is essential for stable and reliable manipulation. The combination of these data types provides a more complete and robust representation of the manipulation task than any single modality could achieve independently.
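One way to picture such a multi-modal record is as a per-time-step container that keeps the visual, kinematic and tactile streams aligned. The dataclass below is a hypothetical sketch: the field names, shapes, units and the simple threshold-based contact flag are assumptions for illustration, not RoboPaint’s data format.

```python
from dataclasses import dataclass
from typing import Optional

import numpy as np


@dataclass
class ManipulationFrame:
    """One time step of a hypothetical multi-modal record (field names are illustrative)."""
    rgb: np.ndarray                # (H, W, 3) uint8 image
    depth: Optional[np.ndarray]    # (H, W) depth in metres, or None for RGB-only cameras
    joint_positions: np.ndarray    # (n_joints,) radians
    ee_pose: np.ndarray            # (4, 4) homogeneous end-effector pose in the robot base frame
    tactile: np.ndarray            # (n_taxels,) contact readings, units assumed

    def __post_init__(self):
        # Basic shape checks so misaligned streams fail loudly at construction time.
        if self.rgb.ndim != 3 or self.rgb.shape[2] != 3:
            raise ValueError("rgb must have shape (H, W, 3)")
        if self.depth is not None and self.depth.shape != self.rgb.shape[:2]:
            raise ValueError("depth must match the image height and width")
        if self.ee_pose.shape != (4, 4):
            raise ValueError("ee_pose must be a 4x4 homogeneous transform")
        if self.joint_positions.ndim != 1 or self.tactile.ndim != 1:
            raise ValueError("joint_positions and tactile must be 1-D vectors")

    def in_contact(self, threshold: float = 0.05) -> bool:
        """Crude contact flag: any taxel above a (hypothetical) threshold."""
        return bool(np.any(self.tactile > threshold))


frame = ManipulationFrame(
    rgb=np.zeros((480, 640, 3), dtype=np.uint8),
    depth=None,
    joint_positions=np.zeros(7),
    ee_pose=np.eye(4),
    tactile=np.array([0.0, 0.12, 0.0]),
)
print(frame.in_contact())
```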

The 6D object pose estimation in the data acquisition pipeline combines FoundationPose with ArUco markers. FoundationPose provides an initial, robust pose estimate, which is then refined using visual detections of ArUco markers placed at chosen positions in the environment. These fiducial patterns are easy to detect and allow precise localization and orientation tracking, improving the accuracy and stability of the estimated object positions and orientations in 3D space. The combined approach mitigates drift and makes pose estimation more reliable, which is crucial for accurate robot control and data collection.
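Markers fixed in the environment give every camera a shared reference frame. Here is a minimal sketch, assuming the ArUco detector returns the marker’s pose in the camera frame and FoundationPose returns the object’s pose in that same camera frame, both as 4×4 homogeneous transforms. The object’s pose relative to the marker is then T_marker_obj = (T_cam_marker)⁻¹ · T_cam_obj, which no longer depends on where the camera sits. The function names are hypothetical, and how RoboPaint actually refines FoundationPose with marker detections is not reproduced here.

```python
import numpy as np


def make_pose(rotation_z_rad: float, translation) -> np.ndarray:
    """Build a 4x4 homogeneous transform: rotation about z, then translation."""
    c, s = np.cos(rotation_z_rad), np.sin(rotation_z_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def invert_pose(T: np.ndarray) -> np.ndarray:
    """Inverse of a rigid transform, using R^T instead of a general matrix inverse."""
    R, t = T[:3, :3], T[:3, 3]
    T_inv = np.eye(4)
    T_inv[:3, :3] = R.T
    T_inv[:3, 3] = -R.T @ t
    return T_inv


def object_in_marker_frame(T_cam_marker: np.ndarray, T_cam_obj: np.ndarray) -> np.ndarray:
    """Express a camera-frame object pose in the frame of an environment-fixed marker."""
    return invert_pose(T_cam_marker) @ T_cam_obj


# Toy check: two cameras at different places see the same marker and object.
T_marker_obj_true = make_pose(0.3, [0.10, 0.05, 0.0])
for T_cam_marker in (make_pose(0.0, [0.0, 0.0, 0.8]), make_pose(1.2, [0.2, -0.1, 0.6])):
    T_cam_obj = T_cam_marker @ T_marker_obj_true  # what a perfect pose estimator would report
    recovered = object_in_marker_frame(T_cam_marker, T_cam_obj)
    print(np.allclose(recovered, T_marker_obj_true))
```

With noisy real estimates, the per-camera results would disagree slightly, and that disagreement is what a refinement step has to reconcile.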

Data augmentation, specifically employing techniques such as Object Material Swap and Background Swap, is critical for enhancing the robustness and generalization capabilities of machine learning models used in robotic manipulation. Object Material Swap involves altering the visual texture and appearance of objects within the training dataset, exposing the model to variations in surface properties without changing the object’s geometry. Similarly, Background Swap modifies the surrounding environment in the training images, increasing the model’s invariance to changes in scene context. These methods artificially expand the size and diversity of the training dataset, mitigating overfitting and improving the model’s ability to perform reliably in unseen environments and with novel object appearances.
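The two swaps can be illustrated on a single rendered frame with a per-pixel object mask, which a simulator can provide directly. The sketch below works in image space. The source does not say whether RoboPaint applies its swaps this way or by changing materials and backgrounds in the simulated scene before rendering. The function names and the luminance-times-tint recolouring are hypothetical choices.

```python
import numpy as np


def background_swap(image: np.ndarray, fg_mask: np.ndarray, new_background: np.ndarray) -> np.ndarray:
    """Keep pixels where fg_mask is True and take everything else from new_background."""
    if image.shape != new_background.shape:
        raise ValueError("image and background must have the same shape")
    return np.where(fg_mask[..., None], image, new_background)


def material_swap(image: np.ndarray, object_mask: np.ndarray, tint_rgb, strength: float = 1.0) -> np.ndarray:
    """Recolour only the object's pixels, keeping shading via per-pixel luminance."""
    out = image.astype(np.float32)
    luminance = out.mean(axis=-1, keepdims=True) / 255.0              # shading proxy in [0, 1]
    recoloured = luminance * np.asarray(tint_rgb, dtype=np.float32)   # new base colour x shading
    blended = (1.0 - strength) * out + strength * recoloured
    out = np.where(object_mask[..., None], blended, out)
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


# Usage: swap the background, then give the object a new "material".
rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(4, 4, 3)).astype(np.uint8)
obj_mask = np.zeros((4, 4), dtype=bool)
obj_mask[1:3, 1:3] = True
new_bg = rng.integers(0, 256, size=(4, 4, 3)).astype(np.uint8)
augmented = material_swap(background_swap(frame, obj_mask, new_bg), obj_mask,
                          tint_rgb=(40, 90, 200), strength=0.7)
print(augmented.shape, augmented.dtype)
```

Neither function touches geometry: the mask, and therefore the object’s silhouette and position, stay fixed while appearance and context vary.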

Unlocking True Potential: Validation and Impact of VLA Models

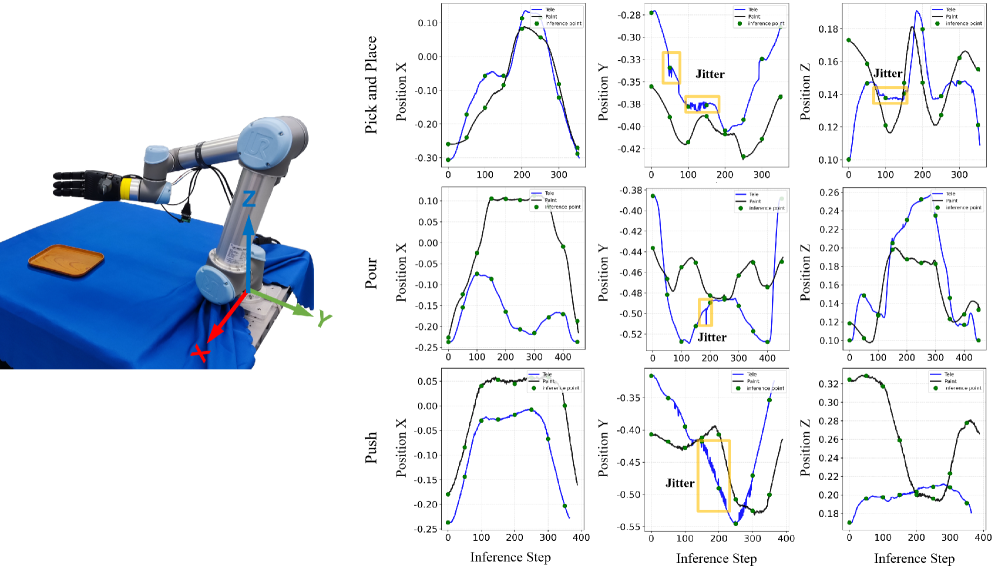

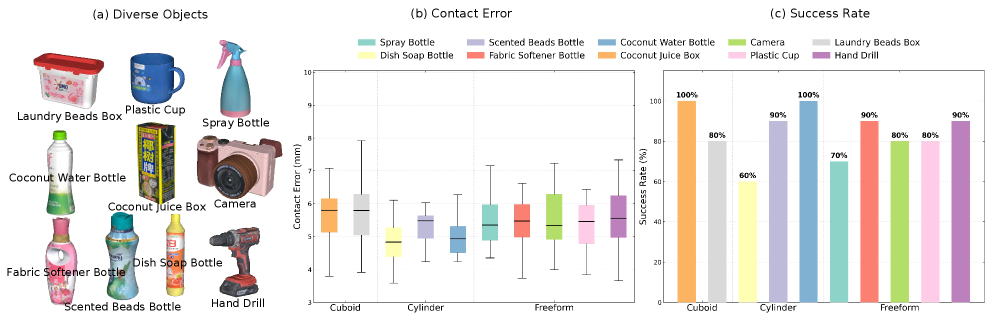

Vision-Language-Action (VLA) models trained on data generated by the RoboPaint pipeline achieved an 80% average success rate on complex manipulation tasks, including pick-and-place, pushing, and pouring. This result indicates that robots can learn to perform intricate actions reliably from data derived from human demonstrations rather than from teleoperation. Training robots efficiently on synthetic data offers a cost-effective and scalable path toward more adaptable systems capable of handling a diverse range of real-world challenges.

Dex-Tactile retargeting, a core component of this research, maps human contacts onto the robot precisely in simulation, with an average contact error of only 3.86 mm. This level of accuracy means the robot can reproduce where a human made contact with an object, which is essential for replicating the nuanced tactile interactions behind human skill. As a result, complex manipulation tasks that previously required painstaking manual programming can be learned by observing human demonstrations and faithfully retargeting them onto the robot, paving the way for more intuitive and adaptable robotic systems and closer human-robot collaboration.
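The article does not spell out how contact error is computed. One plausible definition, assumed here purely for illustration, is the mean Euclidean distance between each human contact point and the corresponding contact point of the retargeted robot hand. A sketch under that assumption, with made-up coordinates that are not results from the paper:

```python
import math


def mean_contact_error_mm(human_contacts_m, robot_contacts_m):
    """Mean Euclidean distance (in mm) between paired contact points given in metres."""
    if len(human_contacts_m) != len(robot_contacts_m) or not human_contacts_m:
        raise ValueError("need the same, non-zero number of paired contacts")
    total = sum(math.dist(h, r) for h, r in zip(human_contacts_m, robot_contacts_m))
    return 1000.0 * total / len(human_contacts_m)


# Made-up example: two contacts, robot offsets of 3 mm and 4 mm.
human = [(0.100, 0.020, 0.050), (0.100, -0.020, 0.050)]
robot = [(0.103, 0.020, 0.050), (0.100, -0.020, 0.054)]
print(round(mean_contact_error_mm(human, robot), 2))
```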

A key demonstration of the system’s practicality is that its output transfers to the physical world: the Real-World Replay Success Rate, obtained by replaying the pipeline’s generated demonstrations on a physical robot, reached 84% across a diverse set of objects. This figure indicates that the data produced in simulation is not merely tuned to the simulated environment but remains executable amid the complexities and variations of real-world conditions. Such transfer is a critical step toward deploying adaptable and reliable robotic systems beyond controlled laboratory settings, and suggests the potential to substantially reduce the time and resources needed to implement robotic solutions in practical applications.

Building robust robotic manipulation policies traditionally requires extensive datasets collected through painstaking teleoperation, a process that is both time-consuming and resource-intensive. RoboPaint speeds up data collection by up to 5.33× compared with conventional teleoperation. The speedup comes from automated data generation, which lets researchers train and refine robot control algorithms more quickly. Development timelines shorten accordingly, supporting a more agile, iterative approach to robotics research and making it practical to explore a wider range of complex manipulation tasks.

The advances demonstrated here, through validated VLA models and Dex-Tactile Retargeting, go beyond conventional approaches to robotic control. Prior methods often relied on painstakingly hand-engineered solutions or required extensive real-world data collection, processes that are time-consuming and generalize poorly to new scenarios. This work instead uses simulated environments and automated data generation to reach benchmarks that include an 84% Real-World Replay Success Rate. As a result, developing more adaptable and intelligent robotic systems becomes less dependent on traditional programming or large amounts of teleoperated real-world data, opening the door to applications that demand greater dexterity, precision, and autonomy.

The pursuit within RoboPaint isn’t simply about replicating human action, but about questioning the boundaries of robotic perception and control. It posits a system where limitations aren’t dead ends, but invitations to innovate. This echoes Tim Berners-Lee’s observation: “The Web is more a social creation than a technical one.” The work deftly blends real and simulated data, mirroring the Web’s collaborative and evolving nature. Just as the Web grew through user contributions, RoboPaint uses human demonstration as a foundational layer, building a robust dataset for Vision-Language-Action models. The researchers don’t merely aim for sim-to-real transfer; they architect a dynamic system, open to refinement and adaptation, recognizing that the true potential lies in the interplay between the digital and physical realms, a concept deeply resonant with the Web’s own origins and continued development.

Beyond the Brushstroke

The construction of ‘RoboPaint’, a pipeline for translating human gesture into robotic action, reveals a fundamental truth: the most effective exploits of robotic systems begin not with a defined goal, but with a questioning of the sensory input itself. This work establishes a foundation for imitation, yet sidesteps the deeper challenge of robotic originality. The fidelity achieved through 3D Gaussian Splatting and tactile retargeting is impressive, but it merely replicates what is already known. The true frontier lies in enabling robots to interpret ambiguous or incomplete demonstrations, to extrapolate beyond the dataset, and to discover novel solutions, even unintended ones.

Current limitations lie not in the mechanics of manipulation, but in the constraints of the learned action space. A robot proficient at the demonstrated pick-and-place, pushing, and pouring tasks will still struggle with sculpting, or assembling, or even intentionally making a mess. The learned policies, for all the pipeline’s ingenuity, remain tethered to the specific tasks for which they were trained. Future work must address this rigidity, perhaps by exploring meta-learning approaches or by incorporating intrinsic motivation, a robotic equivalent of curiosity, to drive exploration and adaptation.

Every exploit starts with a question, not with intent. This principle holds true for robotics. RoboPaint offers a powerful tool for transferring human skill, but the ultimate measure of success will not be how flawlessly a robot can reproduce a masterpiece, but how readily it can dismantle, deconstruct, and redefine the very notion of creation.

Original article: https://arxiv.org/pdf/2602.05325.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-06 15:17