Author: Denis Avetisyan

As artificial intelligence permeates education, we must critically examine its impact beyond test scores and consider the broader effects on students’ cognitive development, autonomy, and well-being.

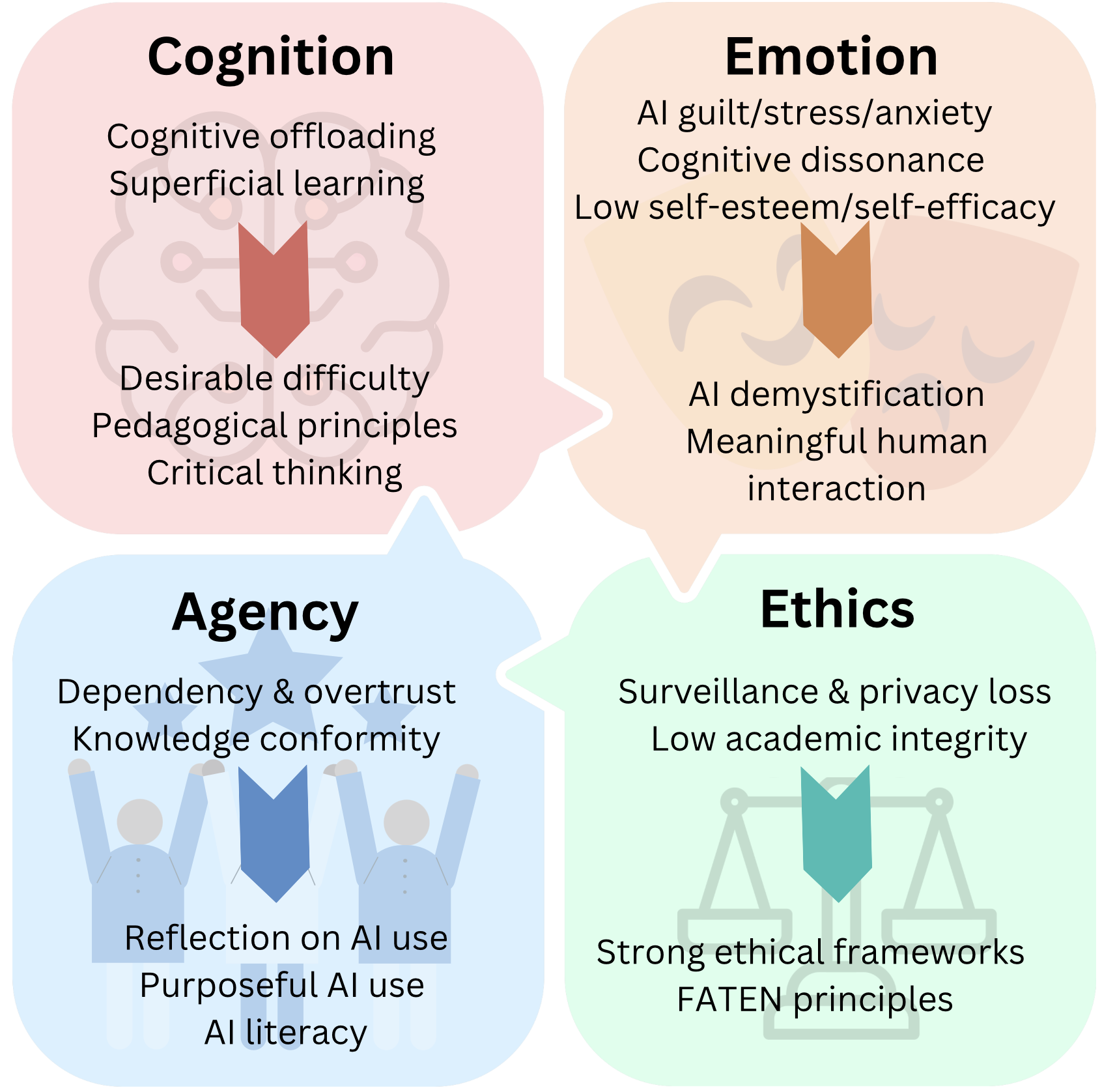

This review assesses the potential risks and benefits of AI in education, focusing on cognition, agency, emotion, and ethical considerations for a future of AI literacy.

While artificial intelligence promises to personalize and streamline education, its uncritical adoption risks unintended consequences extending beyond mere academic achievement. This paper, ‘AI in Education Beyond Learning Outcomes: Cognition, Agency, Emotion, and Ethics’, offers an integrative framework examining how AI impacts not only cognitive development, but also learner agency, emotional well-being, and ethical considerations. The analysis reveals that AI-driven cognitive offloading, diminished autonomy, and surveillance practices can mutually reinforce one another, potentially undermining critical thinking and civic engagement. Can we design and govern AI systems to truly safeguard the social and civic purposes of education, fostering both effective learning and a resilient, informed citizenry?

The Evolving Landscape of Learning: Promise and Peril

The integration of artificial intelligence into education holds the potential to revolutionize learning experiences, offering tailored support and individualized pathways previously unattainable at scale. However, this shift challenges long-held pedagogical principles centered on active recall, independent problem-solving, and the cultivation of intrinsic motivation. Traditional teaching methods often prioritize the process of learning – grappling with concepts, making mistakes, and refining understanding – while AI-powered tools can readily provide answers or complete tasks, potentially circumventing these crucial cognitive steps. This presents educators with a fundamental dilemma: how to harness the benefits of AI – such as immediate feedback and access to vast knowledge resources – without inadvertently fostering a passive learning style or diminishing students’ capacity for deep, conceptual understanding. Successfully navigating this new landscape requires a thoughtful re-evaluation of assessment methods, curriculum design, and the very definition of what it means to be an educated individual in the age of intelligent machines.

Intelligent Tutoring Systems have shown promise in bolstering educational outcomes, yet the widespread availability of tools like ChatGPT presents a complex challenge to traditional learning environments. Current data indicates that over one-third of young adults in the United States are regularly utilizing these AI platforms, not necessarily for learning assistance, but for direct content generation. This ease of access raises significant concerns regarding academic honesty and, crucially, the development of essential critical thinking skills. The potential for students to outsource cognitive processes to AI, while offering short-term convenience, may ultimately impede their ability to analyze information, formulate arguments, and solve problems independently – skills vital for success beyond the classroom.

The increasing accessibility of artificial intelligence tools, such as ChatGPT, presents a notable risk of cognitive offloading, where individuals rely on external systems to perform tasks that previously demanded internal cognitive effort. Recent data points in this direction: nearly half of young adults begin academic papers and projects with the assistance of AI. While convenient, this dependence may hinder the development of crucial skills like critical thinking, problem-solving, and deep comprehension. The concern isn’t simply about completing assignments, but about long-term cognitive consequences: as individuals outsource the very processes necessary for intellectual growth and independent learning, their capacity for complex reasoning and innovative thought may diminish.

Agency and Adaptation: Cultivating the Learner’s Role

Effective learning is fundamentally predicated on students actively constructing their own understanding, a principle central to both constructivism and active learning methodologies. This approach moves away from passive reception of information towards engagement in processes like exploration, experimentation, and reflection. Students build knowledge by connecting new information to existing frameworks, formulating hypotheses, and testing them through practice. Active learning environments prioritize student participation – through discussions, problem-solving activities, and project-based work – fostering critical thinking and deeper comprehension compared to traditional lecture-based models. This contrasts with behaviorist models where learning is seen as a response to external stimuli; instead, constructivist and active learning approaches emphasize internal cognitive processes and the learner’s role in shaping their own knowledge.

Scaffolding, in an educational context, refers to a variety of instructional techniques used to provide temporary support to students as they learn new concepts or skills. This support is intentionally designed to be reduced as learners gain proficiency, ultimately fostering independent problem-solving capabilities. Effective scaffolding might include providing hints, breaking down complex tasks into smaller, manageable steps, offering templates or graphic organizers, or modeling desired behaviors. The core principle is to work within the learner’s “zone of proximal development” – the gap between what a learner can accomplish independently and what they can achieve with guidance – and to systematically close that gap by withdrawing support as competence grows, thereby promoting self-sufficiency and deeper understanding.

Generative AI tools offer potential for supporting self-regulated learning through the delivery of personalized feedback and adaptive educational resources. However, implementation requires careful consideration to mitigate the risk of fostering learner dependency. Current usage patterns indicate significant adoption, with approximately 48% of young adults utilizing platforms like ChatGPT for text summarization. This widespread use necessitates a deliberate approach to AI integration, ensuring that these tools are employed to augment, rather than supplant, core cognitive processes and maintain student agency in knowledge construction.

AI-supported learning tools should be designed to augment, rather than substitute, core cognitive functions such as critical thinking, problem-solving, and creative synthesis. Effective implementation prioritizes maintaining student agency by ensuring learners actively engage in the knowledge construction process, utilizing AI as a resource for feedback, personalized content, and adaptive challenges. This approach avoids fostering dependency on AI-generated outputs and instead empowers students to retain control over their learning pathways, develop metacognitive skills, and ultimately take ownership of their educational journey. The goal is to leverage AI’s capabilities to facilitate deeper understanding and skill development, not to bypass the essential cognitive work required for genuine learning.

The Subtle Costs: Assessing Learner Wellbeing in the Age of AI

The readily available assistance offered by artificial intelligence tools can foster an ‘Illusion of Fluency’ in learners, wherein the perceived ease of task completion overshadows the cognitive effort required for genuine knowledge acquisition. This phenomenon occurs because AI can generate outputs that appear to demonstrate understanding without the learner actively engaging in the processes of critical thinking, problem-solving, and knowledge construction. Consequently, learners may prioritize utilizing AI for quick solutions over investing in deliberate practice and sustained effort – key components of deep and durable learning – potentially hindering long-term retention and transfer of knowledge.

Technostress and digital fatigue represent significant challenges to learner wellbeing and academic success. Prolonged exposure to digital learning environments can lead to physiological and psychological strain, manifesting as anxiety, reduced concentration, and emotional exhaustion. Research indicates that these stressors negatively correlate with self-efficacy – a learner’s belief in their ability to succeed – and intrinsic motivation, leading to decreased engagement with course materials. Consequently, diminished motivation and self-belief can directly hinder academic performance, resulting in lower grades and reduced knowledge retention. These effects are exacerbated by the always-on culture often promoted by digital learning platforms, preventing adequate recovery and contributing to chronic fatigue.

AI Guilt manifests as a specific form of discomfort experienced by learners who perceive a conflict between utilizing AI tools and maintaining academic integrity or personal authenticity. This discomfort arises when the ease of AI-assisted task completion clashes with deeply held values regarding original work and demonstrating personal understanding. Research indicates that learners experiencing AI Guilt may exhibit reduced confidence in their abilities and a diminished sense of agency over their learning process, potentially leading to decreased motivation and performance. The sensation is distinct from plagiarism concerns, focusing instead on the internal conflict between leveraging AI for efficiency and upholding principles of genuine intellectual effort.

Effective mitigation of risks associated with AI in learning environments necessitates a dual focus on cultivating learner intrinsic motivation and fostering a healthy relationship with technology. Current data indicates a generally positive reception of AI support; a global survey of university students reveals that 63% find timely AI assistance valuable. However, simultaneous concerns regarding data privacy are prevalent, with 61% of the same student population expressing these reservations. This indicates a need for balanced implementation strategies that leverage the benefits of AI while proactively addressing privacy concerns and promoting learner agency, rather than solely relying on the convenience of automated tools.

A Model for Empathetic AI: Introducing Maike

Maike utilizes the Socratic Method, an inquiry-based approach to learning, by responding to student input with clarifying questions rather than direct answers. This technique compels learners to actively construct their own understanding of a topic through reasoned argument and self-evaluation. The chatbot’s architecture is designed to identify knowledge gaps and formulate questions that prompt students to examine their assumptions, analyze evidence, and refine their thinking. By prioritizing guided discovery over rote memorization, Maike aims to cultivate deep comprehension and foster independent thought. It also preserves user privacy through data minimization techniques.
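To make the question-first pattern concrete, here is a minimal sketch of how a tutor of this kind might turn student input into a clarifying question while storing as little as possible. It is not Maike’s actual implementation, which the source does not describe at this level. The names `SocraticTutor`, `QUESTION_TEMPLATES`, `minimize`, and `classify` are hypothetical, the question templates are invented for illustration, and redacting email addresses stands in for whatever data minimization a real system would apply.

```python
import re
from dataclasses import dataclass, field

# Hypothetical question templates; the source does not list Maike's prompts.
QUESTION_TEMPLATES = {
    "question": "What do you already know about '{topic}', and where does your understanding stop?",
    "short": "Can you say more about what you mean by '{topic}'?",
    "claim": "What evidence would support or challenge the statement '{topic}'?",
}

# Simple pattern for email addresses (illustrative, not exhaustive).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def minimize(text: str) -> str:
    """Stand-in for data minimization: redact email addresses up front."""
    return EMAIL_RE.sub("[redacted]", text).strip()


def classify(text: str) -> str:
    """Crude heuristic for the kind of input the student gave."""
    if text.endswith("?"):
        return "question"
    if len(text.split()) < 4:
        return "short"
    return "claim"


@dataclass
class SocraticTutor:
    # Only the kind of each turn is retained, never the student's words.
    turn_kinds: list = field(default_factory=list)

    def reply(self, student_input: str) -> str:
        """Answer with a clarifying question instead of a direct answer."""
        text = minimize(student_input)
        kind = classify(text)
        self.turn_kinds.append(kind)
        topic = text.rstrip("?.! ")
        return QUESTION_TEMPLATES[kind].format(topic=topic)


tutor = SocraticTutor()
print(tutor.reply("Why is the sky blue?"))
print(tutor.reply("Plants only grow when the moon is full."))
```

A production system would replace the keyword heuristic in `classify` with a model that detects knowledge gaps, but the shape stays the same: the reply is always a question, and the stored state is deliberately thin.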

Maike is designed to cultivate student agency and self-reflection as core components of the learning process. This is achieved by prompting students to actively question information, analyze their own reasoning, and articulate their perspectives. The system avoids direct provision of answers, instead facilitating a process of guided discovery where students construct understanding through iterative self-assessment and critical thinking. By emphasizing these skills, Maike aims to equip learners with the capacity to independently evaluate information sources, identify biases, and formulate well-reasoned, informed opinions – abilities crucial for navigating increasingly complex information landscapes.

The implementation of the Socratic method within Maike’s design actively reduces the potential for intellectual conformity by prompting learners to critically examine underlying assumptions. Rather than passively receiving information, users are guided through a series of questions that necessitate independent thought and justification of beliefs. This process encourages the questioning of established norms and the formulation of original perspectives, thereby fostering intellectual curiosity and a proactive approach to knowledge acquisition. By prioritizing reasoned argumentation and self-reflection, Maike aims to cultivate learners who are less susceptible to accepting information without critical evaluation.

Maike’s architectural design serves as a replicable model for constructing AI-driven educational tools centered on holistic learner development. This blueprint emphasizes features beyond simple knowledge transfer, incorporating elements that actively support student agency and self-directed learning. Specifically, the system prioritizes features that foster critical thinking skills, encourage ongoing self-assessment, and build intrinsic motivation. By centering design around these principles, Maike demonstrates a pathway for creating AI learning experiences intended to cultivate not only academic competence but also a sustained, positive relationship with learning throughout a user’s life.

The exploration of AI’s influence on education inevitably confronts the tension between progress and preservation. This study rightly highlights the potential for cognitive offloading to diminish fundamental skills, a process akin to structural decay within a complex system. As Andrey Kolmogorov observed, “The most important things are those that are difficult to express.” This rings true when considering the subtle erosion of agency and critical thinking – qualities not easily quantified by learning outcomes, yet vital for a resilient society. The paper’s call for thoughtful design and governance represents an attempt to slow entropy, to ensure these systems age gracefully rather than collapsing under their own complexity. The challenge lies in recognizing what remains unsaid, the intangible aspects of learning that AI must not displace.

What Lies Ahead?

The exploration of artificial intelligence within education, as this paper suggests, isn’t a quest for better learning outcomes – those are, at best, transient indicators. The true challenge resides in understanding how these systems reshape the very processes of cognition, agency, and ethical reasoning. It is not errors in algorithms that will ultimately define the trajectory, but the gradual erosion of skills as reliance deepens. Systems age not because of errors, but because time is inevitable; offloading cognitive work isn’t progress if the underlying capacity atrophies.

Future research must move beyond the measurement of ‘impact’ and confront the more fundamental question of what it means to learn in an age of readily available, artificially generated knowledge. The development of ‘AI literacy’ feels less like a solution and more like a palliative, a temporary delaying action against a shifting baseline of competence. Sometimes stability is just a delay of disaster.

The ethical considerations, naturally, will not remain static. As generative AI becomes more sophisticated, the boundaries of authorship, originality, and intellectual property will blur further, demanding a continuous reassessment of pedagogical practices. The field needs to focus less on controlling AI and more on understanding its influence – not as a tool, but as an environmental force, subtly altering the landscape of the mind.

Original article: https://arxiv.org/pdf/2602.04598.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 13:54