Author: Denis Avetisyan

Researchers have developed a novel robotic platform that combines soft robotics, artificial intelligence, and puppetry principles to create more engaging and emotionally resonant human-robot interactions.

![The system establishes a closed-loop interaction where human vocal input is processed by integrated software and a large language model to generate dynamic gestural responses from a robotic platform, thereby influencing subsequent human behavior and completing a cycle of affective feedback driven by [latex] \text{voice} \rightarrow \text{gesture} \rightarrow \text{response} [/latex].](https://arxiv.org/html/2602.04787v1/Figures/Affective_Expression_Loop.jpg)

PuppetAI integrates cable-driven robotics with large language model-driven emotion analysis to enable customizable and tactile-rich expressive gestures.

Creating truly engaging and nuanced human-robot interaction remains a challenge due to limitations in robot expressiveness and customization. The paper “PuppetAI: A Customizable Platform for Designing Tactile-Rich Affective Robot Interaction” introduces a modular system that uses cable-driven soft robotics and large language models to enable finer gestural control and emotional responsiveness. By decoupling the software layers for perception, affective modeling, and actuation, PuppetAI facilitates the design of highly specific, tactile-rich interactions. Could this platform unlock more natural and empathetic connections between humans and social robots, paving the way for truly intuitive collaborative experiences?

The Architecture of Empathy: Bridging the Communication Gap

Conventional robotic systems often struggle to facilitate seamless interactions with humans due to limitations in their communicative abilities. While proficient at executing programmed tasks, these robots typically lack the capacity to interpret subtle human cues – facial expressions, tone of voice, body language – leading to frequent misinterpretations and, consequently, user frustration. This communication deficit isn’t simply a matter of adding speech; it’s a deeper issue of lacking the expressive range necessary to convey intent, acknowledge understanding, or signal potential errors. The result is an interaction that feels mechanical and transactional, hindering true collaboration and potentially eroding trust, as humans struggle to reconcile the robot’s actions with their own expectations of social communication.

The future of human-robot collaboration hinges on a critical advancement: emotional intelligence. Current robotic systems excel at executing pre-programmed tasks, but lack the ability to perceive, interpret, and appropriately respond to the subtle emotional signals inherent in human communication. Truly collaborative robots require more than just functional performance; they must be capable of recognizing facial expressions, vocal tones, and even physiological cues to understand a user’s intent, frustration, or approval. This necessitates integrating advanced sensor technology and sophisticated artificial intelligence algorithms that allow robots to not only detect emotional states, but also to modulate their own behavior – through verbal responses, body language, or task adjustments – in a way that fosters trust, enhances communication, and ultimately improves the efficacy of human-robot teams.

Meeting this need calls for a reimagining of design principles that moves past a sole focus on efficiency and utility. Historically, robotic development has centered on what a machine can do, but attention must increasingly shift to how it communicates its actions and intentions. This means integrating expressive capabilities – nuanced body language, subtle vocalizations, and adaptable facial displays – directly into the core architecture of robots. Such features aren’t merely aesthetic additions; they are critical for establishing shared understanding with humans, mitigating ambiguity, and fostering trust. A robot capable of signaling its internal state – confirming task completion, indicating uncertainty, or expressing a need for clarification – becomes a collaborative partner rather than a tool, paving the way for seamless human-robot interaction in complex environments.

Decoding Affective Signals: The Expression Loop Architecture

The Affective Expression Loop is a closed-loop architecture designed to translate human vocalizations into corresponding robotic gestures. The system receives audio input, processes it to identify emotional cues, and then generates appropriate motor commands for a robotic platform. The loop’s design emphasizes real-time processing to minimize latency between human expression and robotic response, facilitating a more naturalistic interaction. This bidirectional communication – human vocalization triggering a robotic gesture, which may in turn influence subsequent human vocalization – forms the core of the research, with the goal of establishing a responsive and emotionally aware robotic interface.
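One pass through such a loop can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function `run_loop_once`, the gesture names, and the idea of passing the analysis and actuation stages in as callables are all assumptions made for the example.

```python
from typing import Callable, Dict


def run_loop_once(
    audio: bytes,
    analyze: Callable[[bytes], str],
    gestures: Dict[str, str],
    act: Callable[[str], None],
    default_gesture: str = "idle",
) -> str:
    """Run one voice -> gesture pass and return the gesture that was performed.

    `analyze` stands in for the emotion-analysis stage and `act` for the
    actuation stage; both are hypothetical placeholders.
    """
    emotion = analyze(audio)                         # perception + affective modeling
    gesture = gestures.get(emotion, default_gesture)  # emotion label -> gesture name
    act(gesture)                                     # hand the gesture to the robot layer
    return gesture


# Usage with stub stages (all names are illustrative only).
performed = []
chosen = run_loop_once(
    b"",
    analyze=lambda _audio: "happiness",
    gestures={"happiness": "wave", "sadness": "slump"},
    act=performed.append,
)
```

Keeping the stages as injected callables mirrors the decoupling of perception, affective modeling, and actuation that the article describes, so any one stage can be swapped without touching the others.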

LLM-based Emotion Analysis forms the core of the Affective Expression Loop by processing acoustic features of speech to determine the emotional state of the speaker. This process utilizes Large Language Models trained on extensive datasets of vocalizations labeled with corresponding emotional categories – including but not limited to happiness, sadness, anger, and neutrality. The models identify subtle vocal nuances such as pitch, tone, speech rate, and intensity variations, converting these acoustic features into quantifiable emotional scores. These scores are then translated into discrete emotional labels or continuous values representing the intensity of specific emotions, ultimately generating actionable signals that drive robotic expression and facilitate nuanced human-robot interaction.
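The step from emotional scores to a discrete label plus an intensity value can be illustrated as follows. This is a hedged sketch: the function name `pick_emotion`, the normalization scheme, and the 0.4 threshold are assumptions for the example and are not taken from the paper. Scores are assumed to be non-negative.

```python
from typing import Dict, Tuple


def pick_emotion(scores: Dict[str, float], threshold: float = 0.4) -> Tuple[str, float]:
    """Return (label, intensity) for the strongest emotion.

    Scores are normalized to sum to 1. If the strongest share is below
    `threshold`, the label falls back to "neutral".
    """
    total = sum(scores.values())
    if not scores or total <= 0:
        return "neutral", 0.0
    normalized = {label: value / total for label, value in scores.items()}
    label, intensity = max(normalized.items(), key=lambda item: item[1])
    if intensity < threshold:
        return "neutral", intensity
    return label, intensity


label, intensity = pick_emotion({"happiness": 3.0, "sadness": 1.0})
```

The discrete label can select which gesture to play, while the continuous intensity can scale how large or fast that gesture is.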

The system’s reliability in assessing emotional content is significantly enhanced through the implementation of the SenseVoiceSmall model for vocal input processing. This model is specifically designed for robustness against variations in speech patterns, background noise, and recording quality, ensuring accurate feature extraction even in non-ideal conditions. SenseVoiceSmall employs a streamlined architecture optimized for real-time performance without compromising accuracy in identifying key acoustic features indicative of emotional states. The resulting data provides a consistent and dependable foundation for the LLM-based emotion analysis component, minimizing errors introduced by imperfect vocal input and maximizing the overall fidelity of emotional assessment.

Directly linking human vocal input to robotic expression is intended to facilitate more natural and effective communication between humans and robots. This approach bypasses the need for complex programming of pre-defined responses, allowing the robot to react in real-time to the emotional tone of the speaker. By mirroring vocal cues – such as pitch, cadence, and intensity – through corresponding robotic gestures and movements, the system aims to create a sense of empathy and understanding. This responsiveness is hypothesized to increase user engagement and improve the perceived intuitiveness of human-robot interaction, ultimately leading to more seamless collaboration and communication.

PuppetAI: A Platform for Expressive Robotic Gestures

PuppetAI is a modular soft robotic platform developed to facilitate expressive, non-verbal communication through robotic gestures. The design prioritizes adaptability and nuanced movement, allowing for the replication of subtle human expressions. Modularity is achieved through segmented construction, enabling configuration variations and potential scaling of the robotic structure. The platform is intended for applications requiring compelling and relatable robotic interaction, focusing on communication beyond traditional verbal methods. This is accomplished through a focus on bio-inspired design principles and materials, resulting in a robot capable of fluid and lifelike motions.

PuppetAI’s core mechanics rely on cable-driven continuum robotics, enabling a high degree of flexibility and precise control over the robot’s morphology. This approach utilizes cables running internally through a flexible, continuously deformable structure, allowing movement to be achieved by selectively actuating these cables. The resulting deformations are not limited to discrete joints, but rather flow continuously along the robot’s body, mirroring the fluid and nuanced motions characteristic of traditional puppetry. This is achieved through the tensioning and relaxing of individual cables, which pull on specific sections of the deformable structure to create bends and curves, offering a wide range of possible poses and gestures.
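To make the cable actuation concrete, the sketch below uses the common planar constant-curvature approximation for continuum robots, under which a cable routed at a fixed offset from the neutral axis shortens by the offset times the bend angle. Both the approximation and the `cable_offset_mm` parameter are assumptions for illustration; the article does not give the cable offset or state which kinematic model the authors use.

```python
import math


def cable_length_change(bend_deg: float, cable_offset_mm: float) -> float:
    """Shortening (mm) of a cable on the inside of a planar constant-curvature bend.

    With the neutral axis at radius R, the inner cable runs at radius
    R - offset, so its arc is shorter by offset * theta (theta in radians).
    """
    return cable_offset_mm * math.radians(bend_deg)


# Example: a hypothetical 10 mm offset and a 90 degree bend.
pull_mm = cable_length_change(90.0, 10.0)
```

Under this model the required cable pull depends only on the bend angle and the offset, not on the length of the deformable section.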

The PuppetAI platform utilizes elastic ropes integrated into its cable-driven continuum robot design to streamline control and impart realistic motion characteristics. These ropes provide a natural restoring force, passively returning the robot’s segments to a neutral position when not actively driven by the control system. This passive compliance reduces the computational load required for precise positioning and allows for more fluid, organic movements. By offsetting the need for continuous active control to counteract gravity or maintain posture, the elastic ropes contribute to a more energy-efficient system and facilitate the replication of subtle, nuanced gestures.

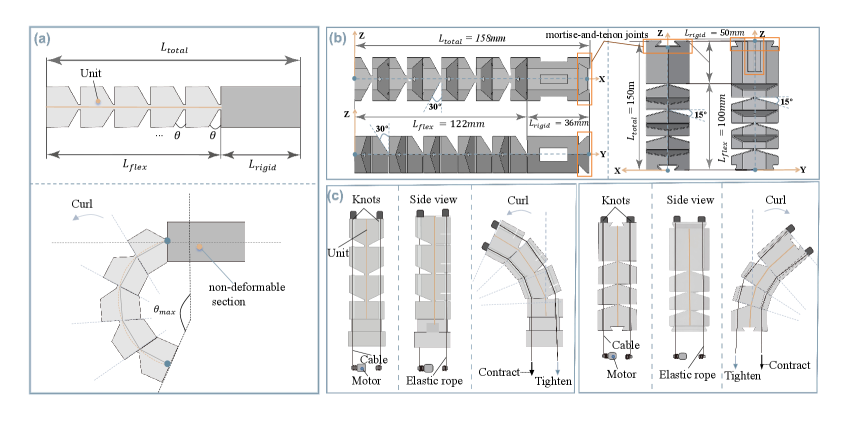

The PuppetAI robot’s articulation is achieved through the strategic implementation of cutting units within both the arm and body segments. The arm exhibits a total bending range of 150°, facilitated by five individual cutting units, each contributing 30° of movement. Similarly, the body is capable of a 45° bending range, realized through the use of three cutting units, each providing 15° of articulation. This segmented approach to actuation allows for precise control and a wide range of expressive postures.
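The bending ranges above follow directly from the number of cutting units and the per-unit angle. The short sketch below reproduces that arithmetic; the `Segment` class and the assumption that a commanded bend is shared evenly across units are illustrative choices, not details from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    name: str
    cutting_units: int
    degrees_per_unit: float

    @property
    def total_bend_deg(self) -> float:
        """Total bending range: units times per-unit angle."""
        return self.cutting_units * self.degrees_per_unit


def per_unit_share(segment: Segment, bend_deg: float) -> float:
    """Bend each unit contributes, assuming the bend is spread evenly."""
    if abs(bend_deg) > segment.total_bend_deg:
        raise ValueError(f"{segment.name}: bend exceeds {segment.total_bend_deg} degrees")
    return bend_deg / segment.cutting_units


ARM = Segment("arm", cutting_units=5, degrees_per_unit=30.0)   # 5 x 30 = 150 degrees
BODY = Segment("body", cutting_units=3, degrees_per_unit=15.0)  # 3 x 15 = 45 degrees
```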

Cable routing within the PuppetAI platform is specifically designed to facilitate consistent deformation across the robotic structure. The arm utilizes deformable sections measuring 122 mm in length, coupled with a 36 mm non-deformable segment, to enable a range of motion while maintaining structural integrity. Similarly, the body incorporates 100 mm deformable sections paired with a non-deformable section. This segmented design, achieved through precise cable pathways, ensures coordinated bending and prevents localized stress concentrations during operation, contributing to fluid and realistic gestural expression.
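As a rough illustration of what these dimensions imply, the sketch below estimates where the end of the arm's 122 mm deformable section lands for a given bend. It assumes a planar constant-curvature arc, which is a common simplification for continuum robots but is not confirmed by the source, and it ignores the 36 mm non-deformable segment because the article does not say where that segment sits.

```python
import math
from typing import Tuple

ARM_DEFORMABLE_MM = 122.0  # deformable arm length given in the text


def tip_position(length_mm: float, bend_deg: float) -> Tuple[float, float]:
    """Return (sideways, forward) tip offset in mm for a constant-curvature arc.

    The section starts at the origin pointing "forward"; bending curls it
    sideways along a circular arc of fixed length.
    """
    theta = math.radians(bend_deg)
    if abs(theta) < 1e-9:
        return 0.0, length_mm  # straight section
    radius = length_mm / theta
    return radius * (1.0 - math.cos(theta)), radius * math.sin(theta)


sideways, forward = tip_position(ARM_DEFORMABLE_MM, 90.0)
```

At a 90° bend the two offsets are equal, since the arc is a quarter circle whose radius is the section length divided by π/2.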

From Perception to Action: Orchestrating Empathetic Response

The culmination of this research lies in a fully integrated system where emotional data directly fuels robotic response. By connecting the ‘Affective Expression Loop’ – responsible for recognizing and interpreting emotional cues – with the ‘PuppetAI’ platform, a continuous cycle of perception and action is established. This closed-loop architecture allows the robot to not simply react to stimuli, but to dynamically adjust its behavior based on the perceived emotional state of its environment. The system effectively creates a feedback mechanism; the robot’s actions are informed by emotional input, and the resulting changes in the environment are then re-evaluated, refining future responses and fostering a more nuanced and believable interaction. This continuous processing enables the robot to exhibit what appears to be empathetic behavior, moving beyond pre-programmed routines to engage in a responsive and seemingly intelligent manner.

The robot’s ability to express emotion through movement isn’t simply a matter of triggering individual gestures; it requires a carefully orchestrated sequence. An Action Sequence Scheduler serves as the central nervous system for these expressions, meticulously managing the timing, order, and blending of multiple robotic movements. This scheduler doesn’t just string actions together; it anticipates transitions, resolving potential conflicts and ensuring a fluid, coherent performance. By prioritizing smooth kinematics and dynamic stability, the system avoids jerky or unnatural motions, instead delivering expressive gestures that convincingly convey the intended emotional state. The scheduler utilizes a hierarchical approach, breaking down complex expressions into smaller, manageable sub-sequences, and dynamically adjusting parameters based on the detected emotional input and the robot’s physical capabilities.
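The timing and blending role of such a scheduler can be sketched with keyframes and linear interpolation. This is a simplified stand-in, not the paper's scheduler: the `Keyframe` type, the joint names, and the choice of linear blending are all assumptions for the example.

```python
from dataclasses import dataclass
from typing import Dict, List

Pose = Dict[str, float]  # joint name -> bend angle in degrees (hypothetical)


@dataclass
class Keyframe:
    time_s: float
    pose: Pose


def sample_pose(keyframes: List[Keyframe], t: float) -> Pose:
    """Linearly blend between the two keyframes that surround time t."""
    if not keyframes:
        raise ValueError("at least one keyframe is required")
    frames = sorted(keyframes, key=lambda k: k.time_s)
    if t <= frames[0].time_s:
        return dict(frames[0].pose)
    if t >= frames[-1].time_s:
        return dict(frames[-1].pose)
    for start, end in zip(frames, frames[1:]):
        if start.time_s <= t <= end.time_s:
            span = end.time_s - start.time_s
            w = 0.0 if span == 0 else (t - start.time_s) / span
            # Joints missing from the next keyframe simply hold their value.
            return {
                joint: (1 - w) * angle + w * end.pose.get(joint, angle)
                for joint, angle in start.pose.items()
            }
    return dict(frames[-1].pose)


wave = [Keyframe(0.0, {"arm": 0.0}), Keyframe(2.0, {"arm": 90.0})]
midway = sample_pose(wave, 1.0)
```

Sampling the sequence at a fixed control rate yields a stream of intermediate poses, which is one simple way to avoid abrupt jumps between gestures.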

The robot’s ability to execute nuanced emotional displays hinges on a sophisticated system of low-level actuation control. This crucial component bridges the gap between abstract commands – such as ‘express sadness’ or ‘initiate a comforting gesture’ – and the physical movements of the robotic platform. Rather than simply triggering pre-programmed motions, this control system meticulously manages individual motor actions, accounting for factors like velocity, acceleration, and force. It dynamically adjusts these parameters to achieve fluid, lifelike movements, preventing jerky or unnatural robotic behavior. Through precise control of each actuator, the system enables the robot to subtly modulate facial expressions, posture, and gestures, resulting in a more convincing and emotionally resonant performance. This detailed motor control is essential for translating high-level emotional intent into tangible, observable actions.
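One simple way to keep motion from being jerky is to cap how fast the commanded position and velocity may change on each control tick. The sketch below shows that idea only; the function `limited_step` and its limits are hypothetical, it is not a full trajectory planner (it does not plan braking, so it can overshoot the target slightly), and the paper's actual controller may differ.

```python
from typing import Tuple


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def limited_step(
    pos: float,
    vel: float,
    target: float,
    max_vel: float,
    max_acc: float,
    dt: float,
) -> Tuple[float, float]:
    """Advance one control tick toward `target` with capped velocity and acceleration."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    # Velocity that would reach the target in one tick, capped to the speed limit.
    desired_vel = clamp((target - pos) / dt, -max_vel, max_vel)
    # Change velocity no faster than the acceleration limit allows.
    vel += clamp(desired_vel - vel, -max_acc * dt, max_acc * dt)
    pos += vel * dt
    return pos, vel


# First tick from rest toward a far target (units are illustrative).
pos, vel = limited_step(0.0, 0.0, 100.0, max_vel=10.0, max_acc=20.0, dt=0.1)
```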

The robot’s ability to perceive and react to its environment hinges on a sophisticated auditory processing system. Integrating voice activity detection and keyword spotting allows the platform to move beyond simply hearing sound to actively understanding spoken cues. This isn’t merely about recognizing pre-programmed commands; the system identifies when speech is present, isolates relevant keywords, and interprets their meaning within the ongoing conversation. Consequently, the robot can differentiate between general conversation and direct instructions, or even discern emotional tone from vocal inflections, enabling contextually appropriate responses – from offering a sympathetic acknowledgment to initiating a requested action. This nuanced auditory perception is crucial for creating a more natural and effective human-robot interaction, allowing the robot to move beyond rigid programming and demonstrate genuine responsiveness.
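The two auditory stages can be illustrated with deliberately simple stand-ins: an energy threshold for voice activity detection and word matching on a transcript for keyword spotting. Both are assumptions chosen for brevity; the article does not specify the detectors used, and a production system would typically rely on trained models. The threshold value and function names are hypothetical.

```python
import math
from typing import Iterable, List, Sequence


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square energy of one audio frame (samples in [-1, 1])."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def detect_voice(frames: Iterable[Sequence[float]], threshold: float = 0.02) -> List[bool]:
    """Mark each frame as speech when its energy reaches the threshold."""
    return [frame_rms(frame) >= threshold for frame in frames]


def spot_keywords(transcript: str, keywords: Iterable[str]) -> List[str]:
    """Return the keywords that appear as whole words in the transcript."""
    words = {word.strip(".,!?;:") for word in transcript.lower().split()}
    return [keyword for keyword in keywords if keyword.lower() in words]


activity = detect_voice([[0.0, 0.0, 0.0, 0.0], [0.5, -0.5, 0.5, -0.5]])
hits = spot_keywords("Hello, puppet!", ["puppet", "stop"])
```

Gating the heavier emotion analysis on voice activity means the rest of the pipeline only runs when someone is actually speaking.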

The development of PuppetAI exemplifies a holistic approach to robotic interaction, mirroring the interconnectedness of systems. The platform’s integration of soft robotics, large language models, and puppetry-inspired gestures isn’t merely additive; it’s a deliberate orchestration of components designed to elicit nuanced emotional responses. As Marvin Minsky observed, “Common sense is the collection of things everyone knows, but no one can explain.” PuppetAI attempts to bridge this gap by embodying ‘common sense’ interaction – gestures and expressions that, while intuitively understood, require complex engineering to replicate. The system’s modularity and focus on tactile feedback reveal an understanding that behavior arises from structure, and that altering one element necessitates consideration of the whole.

Future Directions

The presented work, while demonstrating a functional integration of disparate technologies, merely scratches the surface of what is possible with truly expressive robotic systems. The current architecture, reliant on a layered approach – perception via large language models informing actuation through cable-driven mechanisms – inherently introduces latency and potential for misinterpretation. A more holistic design would necessitate a fundamental rethinking of robotic ‘anatomy’, moving beyond the imitation of human musculature towards principles of embodied cognition where sensing and action are inextricably linked.

Further investigation must address the limitations imposed by the puppetry paradigm itself. While offering a degree of intuitive control, it risks becoming a performative constraint rather than a genuine avenue for emotional communication. The challenge lies not in replicating human expression, but in discovering novel forms of robotic affect, ones that are legible and meaningful within the unique constraints of a non-human form.

Ultimately, the true test of this platform, or any like it, will not be its technical sophistication, but its capacity to foster genuine connection. The pursuit of ‘affective computing’ too often prioritizes the simulation of emotion over the cultivation of empathy. A more fruitful path lies in designing systems that are not merely responsive, but resilient – capable of adapting to the subtle, often illogical, nuances of human interaction, and of forging relationships built on mutual understanding, not mimicry.

Original article: https://arxiv.org/pdf/2602.04787.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Robots That React: Teaching Machines to Hear and Act

2026-02-05 11:57