Author: Denis Avetisyan

A new approach combines intuitive design tools and movement analysis to empower creators to craft compelling and natural robotic motions.

This review details a framework integrating physical interaction, a custom remote controller, Blender software, and Laban Movement Analysis for designing expressive robotic behavior.

As robots transition from performing purely functional tasks to inhabiting shared human environments, a critical gap emerges between robotic capability and the need for intuitively communicative movement. The paper, ‘Shaping Expressiveness in Robotics: The Role of Design Tools in Crafting Embodied Robot Movements’, details an integrated design pedagogy and accompanying toolbox, including a custom remote controller and Blender-based animation software, to facilitate the creation of expressive robotic arm motions. By grounding the design process in principles of Laban Movement Analysis, the authors demonstrate how designers can bridge the divide between human intent and robotic execution, yielding more engaging and naturalistic behaviors. Could this approach unlock a new generation of robots capable of truly resonant and meaningful interactions with people?

The Erosion of Rigid Motion: Towards Expressive Robotics

For decades, robotic engineering has centered on achieving precise task completion, prioritizing efficiency and repeatability above all else. This focus has inadvertently resulted in movements that, while technically proficient, often appear rigid and jerky and lack the subtle nuances inherent in natural human motion. Consequently, interpreting a robot’s intentions becomes challenging; a lack of ‘expressiveness’ means observers struggle to predict upcoming actions or understand the goals driving those actions. This disconnect isn’t merely an aesthetic concern; it hinders effective human-robot collaboration, creating a barrier to seamless interaction and potentially fostering distrust as actions appear unpredictable or even threatening. The pursuit of purely functional robotics, therefore, has created a communication gap that must be addressed to unlock the true potential of these machines.

Successful collaboration between humans and robots hinges on more than simply achieving a desired outcome; it necessitates a nuanced understanding of communication through movement. Research demonstrates that humans instinctively interpret subtle cues in physical expression, and this expectation extends to robotic partners. When robots exhibit motions that are not only efficient but also expressive – conveying information about their internal state, anticipated actions, or even ‘emotional’ intent – humans respond with increased trust, comfort, and a greater willingness to cooperate. This is because expressive movements allow people to predict robotic behavior, interpret its goals, and ultimately, build a more intuitive and natural working relationship, moving beyond a purely transactional exchange towards genuine interaction.

The future of robotics hinges on a shift in design philosophy, moving beyond purely functional movements toward a more nuanced form of communication. Current robotic systems, while capable of precise actions, often lack the subtle cues – the slight hesitations, anticipatory gestures, and variable speeds – that humans instinctively use to interpret intent. Researchers are now exploring how to integrate principles of human kinesiology and nonverbal communication into robotic control systems, allowing robots to not simply do tasks, but to express their intentions. This involves developing algorithms that modulate robotic movements to convey information about planned actions, internal states, and even simulated emotions, fostering a more intuitive and collaborative interaction with humans. Ultimately, this new paradigm aims to create robots that are not just tools, but social partners capable of seamless and meaningful communication.

Embodied Design: A Kinesthetic Approach to Robotic Interaction

Movement-Centric Design Pedagogy fundamentally alters the design process by prioritizing direct physical engagement with robotic systems. Rather than relying on purely digital modeling or abstract programming, this approach necessitates designers physically manipulating and experiencing robot movements as a core component of development. This hands-on methodology places embodied interaction – the reciprocal relationship between a physical form and human perception – at the center of design decisions, informing the creation of robot motions and behaviors. The intention is to move beyond solely functional robot operation and instead focus on designing for expressive and intuitive interaction through physical exploration.

Direct physical engagement with robotic systems is central to understanding their communicative capabilities. Designers employing this methodology move beyond abstract modeling and directly manipulate a robot’s actuators, observing the resulting motions and iteratively refining them. This hands-on approach allows for an intuitive grasp of how specific movements are perceived, facilitating the creation of nuanced expressions and avoiding unintended communication. By experiencing the physical effort, velocity, and resulting forces associated with each motion, designers can better calibrate the robot’s movements to achieve desired communicative outcomes and ensure the robot’s behavior is interpretable by human observers.

Prioritizing physical interaction in robot motion design directly addresses the challenges of translating abstract programming parameters into perceptible and meaningful actions. Designers who physically manipulate a robot – through direct teaching, kinesthetic guidance, or teleoperation – develop an embodied understanding of how specific movements feel to an observer. This experiential knowledge allows for the refinement of motion profiles beyond purely computational optimization, enabling the creation of trajectories that are more easily interpreted and predicted by humans. Consequently, robot movements become more intuitive, reducing cognitive load for users and increasing engagement through perceived naturalness and responsiveness. This iterative, physically-grounded design process is demonstrably more effective in generating motions that elicit desired emotional or behavioral responses from human observers than approaches relying solely on simulation or pre-programmed sequences.

Embodied interaction, as the foundational principle for movement-centric design, posits that effective robot motion is directly linked to how humans perceive and interpret those movements. This is achieved by aligning robot kinematics and dynamics with human perceptual and cognitive systems; successful interaction requires the robot to move in ways that are predictable, understandable, and intuitively meaningful to a human observer. Specifically, this involves considering factors like velocity profiles, acceleration limits, and the use of anticipatory movements – mirroring how humans naturally move – to foster a sense of connection and facilitate seamless interaction. The goal is not simply to achieve functional movement, but to create motions that are readily interpreted as intentional and communicative, thereby enhancing the user experience and reducing cognitive load.
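To make the idea of a velocity profile concrete, the sketch below uses a minimum-jerk profile, a quintic blend commonly used as a simple model of smooth point-to-point motion. It is an illustration chosen here, not a method taken from the paper; the function names and the single-joint setup are assumptions.

```python
def minimum_jerk(q_start, q_end, duration, t):
    """Joint position at time t for a minimum-jerk move from q_start to q_end."""
    # Normalize time to [0, 1]; outside the move the joint holds its endpoint.
    s = min(max(t / duration, 0.0), 1.0)
    # Quintic blend with zero velocity and zero acceleration at both ends,
    # so the motion eases in and out instead of starting abruptly.
    blend = 10 * s**3 - 15 * s**4 + 6 * s**5
    return q_start + (q_end - q_start) * blend


def peak_velocity(q_start, q_end, duration):
    """Peak speed of the profile, reached at the midpoint of the move."""
    # The derivative of the blend at s = 0.5 is 1.875.
    return 1.875 * abs(q_end - q_start) / duration
```

Checking `peak_velocity` against a joint's speed limit before playback is one simple way to relate an animated gesture to the hardware's constraints; shortening `duration` makes the same gesture read as more sudden.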

From Digital Sculpting to Physical Manifestation: A Comprehensive Toolkit

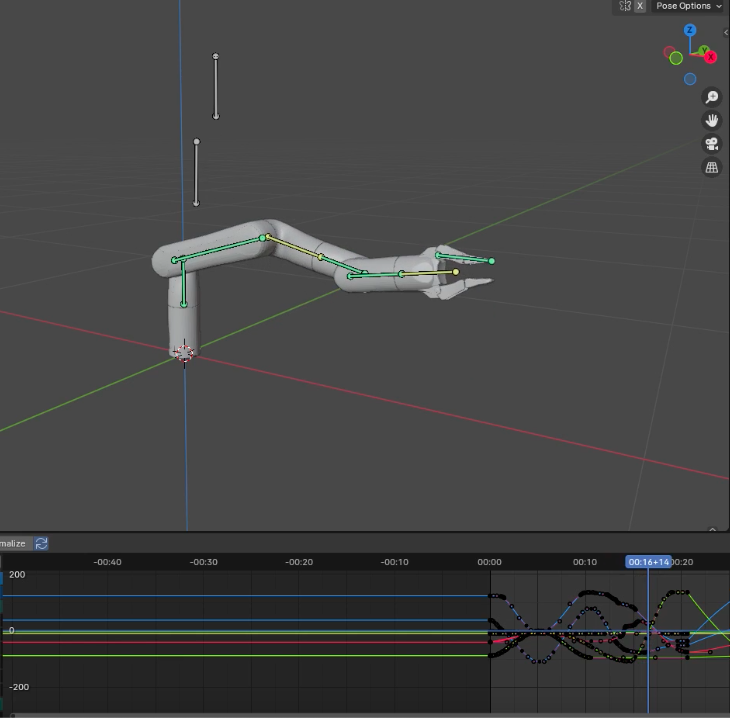

Blender provides a comprehensive environment for the creation and iterative refinement of robotic motion sequences. Its keyframe animation tools allow designers to specify the position, rotation, and velocity of robot joints over time with high precision, down to individual frames. The software supports complex kinematic calculations, enabling the visualization of robot trajectories and the identification of potential collisions or singularities before implementation. Furthermore, Blender’s modifiers and constraints facilitate the creation of smooth and natural movements, and its Python API allows for programmatic control and automation of animation tasks, streamlining the design process for robotic applications.
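As a rough illustration of what keyframing amounts to numerically, the sketch below samples a set of joint-angle keyframes once per frame to produce a timed trajectory. It is plain Python and does not use Blender's API; linear interpolation stands in for Blender's richer interpolation modes, and the function names and data layout are assumptions made for this example.

```python
from bisect import bisect_right


def sample_keyframes(keyframes, frame):
    """Linearly interpolate joint angles (radians) at a given frame.

    keyframes: list of (frame_number, [angle per joint]), sorted by frame_number.
    """
    frames = [f for f, _ in keyframes]
    # Hold the first or last pose outside the keyed range.
    if frame <= frames[0]:
        return list(keyframes[0][1])
    if frame >= frames[-1]:
        return list(keyframes[-1][1])
    # Find the pair of keyframes that brackets the requested frame.
    i = bisect_right(frames, frame)
    (f0, a0), (f1, a1) = keyframes[i - 1], keyframes[i]
    w = (frame - f0) / (f1 - f0)
    return [x + w * (y - x) for x, y in zip(a0, a1)]


def bake_trajectory(keyframes, fps=24):
    """Return one (time_in_seconds, joint_angles) sample per frame."""
    first, last = keyframes[0][0], keyframes[-1][0]
    return [
        ((f - first) / fps, sample_keyframes(keyframes, f))
        for f in range(first, last + 1)
    ]
```

For example, `bake_trajectory([(1, [0.0, 0.0]), (25, [1.2, -0.6])])` yields 25 samples spanning one second at the default 24 frames per second.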

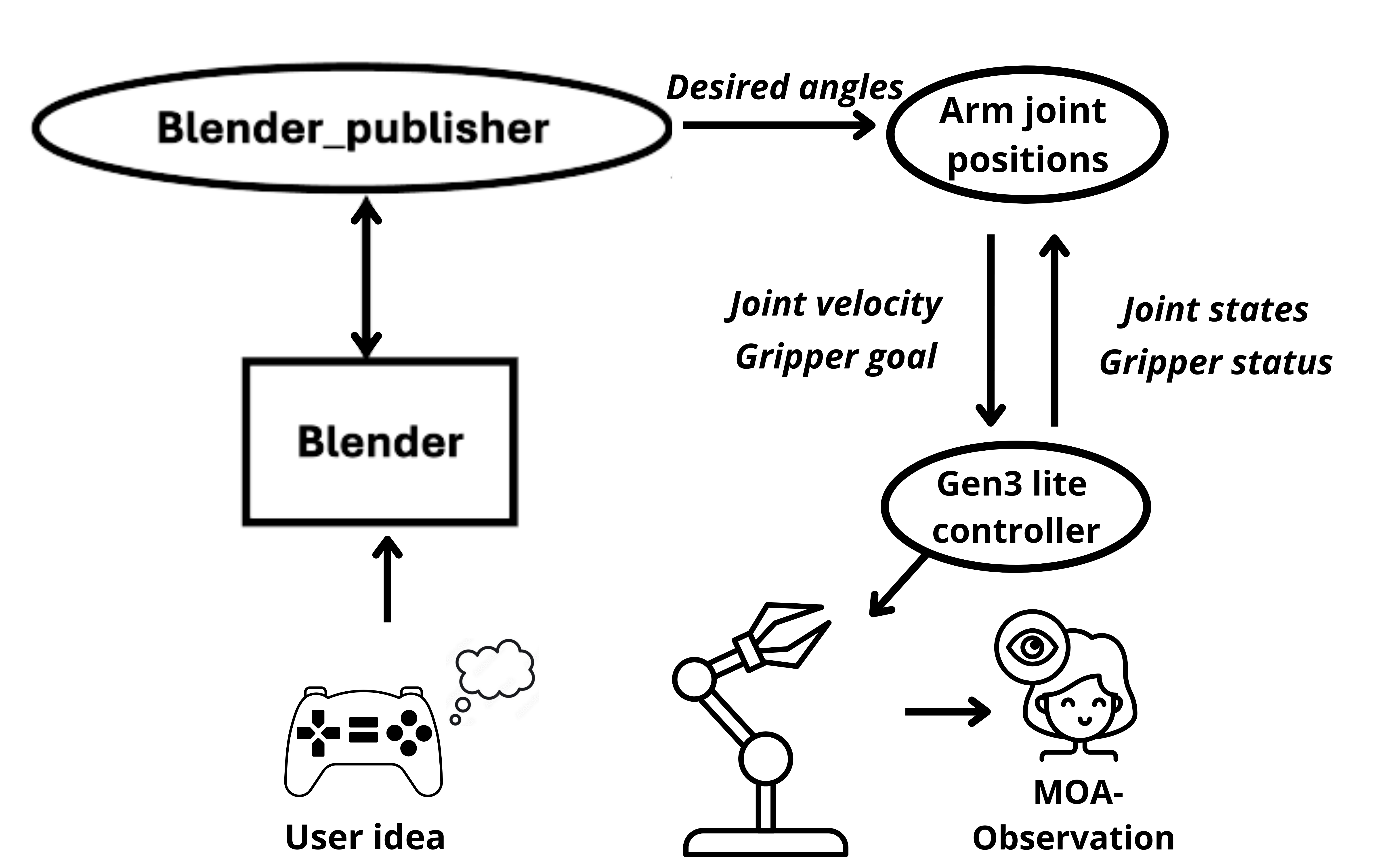

Communication between Blender and the Kinova Gen3Lite robotic arm relies on ZeroMQ in conjunction with the Robot Operating System (ROS). ZeroMQ is a high-performance asynchronous messaging library that handles data transfer between the two systems. ROS provides the framework for structuring this communication, defining message types and services for transmitting animation data – including joint angles and trajectories – from Blender to the Kinova arm. Specifically, Blender can publish these trajectories as ROS messages, which the arm’s control software subscribes to and interprets as movement commands. This integration allows for real-time data exchange, enabling direct translation of animated designs into physical robot actions without requiring intermediate file conversions or manual reprogramming.
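The article does not give the message layout used between Blender and the arm, so the following is only a sketch of the general pattern: one trajectory waypoint is packed into bytes that a messaging layer such as ZeroMQ could carry, then unpacked on the receiving side. The JSON layout, the joint names, and the six-joint assumption are all hypothetical, and no ZeroMQ or ROS calls are made here.

```python
import json

# Hypothetical joint names for a six-joint arm; the real driver may differ.
JOINT_NAMES = [f"joint_{i}" for i in range(1, 7)]


def encode_waypoint(time_s, angles_rad):
    """Pack one trajectory waypoint into UTF-8 JSON bytes."""
    if len(angles_rad) != len(JOINT_NAMES):
        raise ValueError(f"expected {len(JOINT_NAMES)} joint angles")
    message = {"t": time_s, "joints": dict(zip(JOINT_NAMES, angles_rad))}
    return json.dumps(message).encode("utf-8")


def decode_waypoint(payload):
    """Unpack bytes produced by encode_waypoint into (time, angles)."""
    message = json.loads(payload.decode("utf-8"))
    # Read joints by name so the order never depends on dictionary ordering.
    return message["t"], [message["joints"][name] for name in JOINT_NAMES]
```

Keying angles by joint name rather than by position makes a mismatch between the animation rig and the robot easier to detect on the receiving side.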

The integration of Blender animation with robotic control systems establishes a workflow where digitally created movements directly inform robot actions. This process begins with designing trajectories in Blender, which are then converted into a series of commands understood by the Kinova Gen3Lite arm via the ZeroMQ protocol and ROS. This capability bypasses traditional robot programming methods – often requiring specialized code – and instead allows designers to iterate on movement sequences visually and rapidly deploy them to the physical robot for testing. Consequently, the time required for prototyping and refining robotic tasks is significantly reduced, enabling faster development cycles and more efficient exploration of potential solutions.

The Proportional-Derivative (PD) controller is implemented to minimize the error between the desired trajectory, generated from animation software, and the actual position of the Kinova Gen3Lite robotic arm. The proportional term provides output proportional to the current error, while the derivative term reacts to the rate of change of the error. This combination serves to dampen oscillations and prevent overshoot, resulting in smoother and more accurate tracking of the desired movements. The PD controller’s parameters – proportional and derivative gains – are tuned to optimize performance based on the arm’s dynamics and the specific trajectory being executed, ensuring stable and precise motion control. Specifically, the derivative component anticipates future error, allowing the controller to preemptively correct the robot’s path and maintain accuracy even during rapid movements.
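The behavior described above can be sketched with a small simulation. This is a toy model under stated assumptions, not the controller used on the Kinova arm: a single joint with unit inertia and no friction or gravity, illustrative gains, and a finite-difference estimate of the error derivative.

```python
class PDController:
    """Proportional-derivative controller for one joint."""

    def __init__(self, kp, kd):
        self.kp = kp  # proportional gain: reacts to the current error
        self.kd = kd  # derivative gain: reacts to how fast the error changes
        self.prev_error = None

    def update(self, target, measured, dt):
        error = target - measured
        # Finite-difference derivative; zero on the first call.
        if self.prev_error is None:
            d_error = 0.0
        else:
            d_error = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.kd * d_error


def simulate(target=1.0, kp=100.0, kd=20.0, dt=0.001, steps=3000):
    """Drive a unit-inertia joint toward target; return the position history."""
    controller = PDController(kp, kd)
    position, velocity = 0.0, 0.0
    history = []
    for _ in range(steps):
        torque = controller.update(target, position, dt)
        velocity += torque * dt  # unit inertia: acceleration equals torque
        position += velocity * dt
        history.append(position)
    return history
```

With these illustrative values, `kd` equals `2 * sqrt(kp)`, which is critical damping for a unit-inertia joint; lowering `kd` in this toy model makes the joint overshoot and oscillate before settling.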

Echoes of Life: Design Approaches Inspired by Biology and Expertise

The creation of truly expressive robotic movements often benefits from looking to the natural world. Biomimetic strategies and zoo-anthropomorphic approaches – essentially, learning from biology and animal behavior – offer a rich source of inspiration for roboticists. By studying how creatures move – the subtle nuances of a cheetah’s sprint, the fluid grace of a jellyfish, or even the purposeful gestures of primates – designers can imbue robots with a sense of life and intention. This isn’t simply copying movements; it’s understanding the underlying principles of efficient, adaptable, and communicative motion found in living organisms and translating those principles into robotic control systems. The result is not merely functional movement, but movement that conveys meaning, personality, and believability, enhancing the robot’s ability to interact with – and be understood by – humans.

Laban Movement Analysis provides a systematic vocabulary for deconstructing and articulating the subtleties of human and animal movement, extending beyond simple kinematics to capture qualities like flow, weight, and emotional expression. This framework, developed by Rudolf Laban, allows designers to analyze existing movements – be it a bird’s flight or a dancer’s gesture – and then recreate or adapt those qualities in robotic systems. By representing movement not just as a sequence of positions, but as a dynamic interplay of effort, space, and shape, Laban Movement Analysis helps designers move beyond purely functional robotic motions and instead craft movements that are nuanced, intentional, and convey a sense of naturalness and expressiveness. This approach fosters a deeper understanding of how movement communicates meaning, enabling the creation of robots capable of more compelling and believable interactions.

The burgeoning field of artificial intelligence is now capable of generating remarkably fluid and realistic robot movements, thanks to recent progress in Large Language Models. These LLMs, traditionally employed for natural language processing, are demonstrating an unexpected aptitude for motion synthesis, producing animations that rival the quality achieved by skilled professional animators. By training on extensive datasets of human and animal movement, these models learn the subtle nuances of timing, posture, and dynamics that contribute to believable and expressive motion. This capability opens new avenues for rapidly prototyping and refining robot behaviors, potentially streamlining the design process and enabling more intuitive and engaging interactions between robots and humans.

The capacity for nuanced control over complex robotic movements was significantly enhanced through the implementation of a customized PlayStation 4 remote controller. This familiar interface allowed participants, even those without prior experience in motion design or movement analysis, to intuitively explore and refine generated movements. By leveraging the established ergonomics and responsiveness of the PS4 controller, the system bypassed the need for specialized input devices or extensive training, fostering a more direct and engaging interaction with the robot’s kinematics. This approach not only facilitated a smoother workflow but also enabled a broader range of individuals to contribute to the creative process of robotic animation, proving that accessible control schemes are key to unlocking the full potential of expressive robot design.
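The article does not describe how controller inputs were mapped to the robot, so the following is a hypothetical sketch of one common scheme: analog stick axes in the range -1 to 1 are passed through a deadzone and scaled into joint velocity commands. The axis names, the axis-to-joint assignment, and the speed limit are all assumptions.

```python
# Hypothetical assignment of stick axes to joint indices.
AXIS_TO_JOINT = {"left_x": 0, "left_y": 1, "right_x": 2, "right_y": 3}


def apply_deadzone(value, deadzone=0.1):
    """Ignore small stick offsets and rescale the rest back to [-1, 1]."""
    if abs(value) < deadzone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - deadzone) / (1.0 - deadzone)


def sticks_to_joint_velocities(axes, max_speed=0.5, n_joints=6):
    """Convert stick readings into joint velocity commands (rad/s)."""
    velocities = [0.0] * n_joints
    for axis, joint in AXIS_TO_JOINT.items():
        # Missing axes are treated as centered sticks.
        velocities[joint] = max_speed * apply_deadzone(axes.get(axis, 0.0))
    return velocities
```

The deadzone keeps a resting thumb or slight stick drift from moving the arm, which matters when the people driving the robot are novices.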

The efficacy of this design methodology was tested with a cohort of twenty-one participants, all possessing engineering expertise but lacking any formal training in motion design or movement analysis. This deliberate selection criterion served to highlight the approach’s accessibility and ease of use, demonstrating that compelling and expressive robotic movements could be generated even by individuals without specialized artistic backgrounds. The study’s results indicated that participants were able to use the system effectively, suggesting that the tools and techniques developed are intuitive and readily adaptable for a broad range of users within technical fields – potentially expanding the creative possibilities in robotics beyond the purview of dedicated animators and movement specialists.

Navigating the Spectrum of Control: Joint Control vs. Cartesian Planning

Joint-space planning gives robotic systems a high degree of maneuverability by directly controlling each individual joint. This approach bypasses the need to define a path in terms of end-effector position, instead focusing on the angles and velocities of each motor. Consequently, robots employing joint-space planning can execute remarkably intricate movements, including those that exploit redundancy (multiple joint configurations that reach the same end position) and subtle, nuanced actions that would be difficult or impossible with simpler methods. The ability to finely tune each joint allows for precise control over posture, orientation, and the coordination of complex, multi-degree-of-freedom motions, making it well suited for tasks demanding high precision and dexterity, such as delicate assembly, surgical procedures, or artistic expression.

Cartesian-space planning prioritizes direct control over the robot’s end-effector – its ‘hand’ – by specifying desired positions and orientations in three-dimensional space. This approach significantly streamlines trajectory planning; instead of calculating individual joint angles, the system focuses on guiding the end-effector along a defined path. However, this simplification comes with a trade-off: the resulting movements may lack the nuanced complexity achievable through precise joint control. Certain orientations or intricate maneuvers, easily executed by coordinating individual joints, can become difficult or impossible to realize solely through end-effector positioning, potentially restricting the robot’s range of motion and expressive capabilities. This limitation arises because the system must internally translate the desired Cartesian path into corresponding joint angles, and not all configurations are reachable or feasible without compromising smoothness or stability.
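The translation from a Cartesian target to joint angles can be illustrated with a two-link planar arm, a deliberately simplified stand-in for the six-joint arm discussed here. The link lengths and function names are assumptions. The sketch shows two points from the paragraph above: one end-effector position can correspond to more than one joint configuration, and some targets have no solution at all.

```python
import math


def forward_kinematics(theta1, theta2, l1=0.3, l2=0.25):
    """End-effector (x, y) of a two-link planar arm for given joint angles."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=0.3, l2=0.25, flip=False):
    """Joint angles reaching (x, y), or None if the point is unreachable.

    flip selects the second of the two mirror-image elbow configurations.
    """
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0:
        return None  # target lies outside the reachable workspace
    s2 = math.sqrt(1.0 - c2 * c2)
    if flip:
        s2 = -s2
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2
```

A Cartesian planner has to pick one of the two configurations for every point on the path, whereas a designer working in joint space chooses the posture directly, which is part of why the two approaches differ in expressive range.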

Effective robotic motion planning hinges on a fundamental design trade-off: whether to prioritize precise control over individual joints or to focus on the end-effector’s overall path. Selecting the appropriate methodology – joint-space or Cartesian-space planning – isn’t a matter of superiority, but of suitability to the application’s needs. Joint-space control excels in scenarios demanding nuanced movements and dexterity, while Cartesian planning streamlines trajectory generation for tasks prioritizing speed and simplicity. Designers must carefully consider these competing priorities; a complex manipulation task might necessitate the fine-grained control of joint-space, whereas a rapid pick-and-place operation could benefit significantly from the efficiency of Cartesian control. Ultimately, a thorough understanding of this trade-off empowers developers to optimize robotic performance and achieve desired outcomes with greater precision and efficiency.

Current robotic motion planning often forces a choice between the precision of joint-space control and the intuitive simplicity of Cartesian-space planning. However, emerging research prioritizes the development of hybrid methodologies designed to synergistically combine the advantages of both approaches. These systems aim to leverage the nuanced control offered by manipulating individual joint angles while retaining the ease of defining trajectories in terms of end-effector position and orientation. Such integration promises to unlock more versatile and adaptable robotic movements, potentially enabling robots to perform complex tasks with greater efficiency and responsiveness – a crucial step toward more sophisticated automation in dynamic environments. The anticipated outcome is a planning framework that doesn’t simply choose between control types, but intelligently allocates them based on the specific requirements of each motion segment.

The design choices between joint control and Cartesian planning were investigated through a collaborative workshop involving eight teams, each composed of two to three members. This team-based approach fostered discussion and allowed participants to collectively explore the strengths and limitations of each planning methodology. By working in small groups, individuals were encouraged to share insights, critique approaches, and build upon each other’s ideas, leading to a more comprehensive understanding of the trade-offs inherent in robot motion design. The collaborative environment surfaced nuanced perspectives that might have been missed through individual exploration, and highlighted the importance of considering diverse viewpoints in the development of robotic systems.

A dedicated workshop period of 3.5 hours gave participants time to build a working understanding of robotic motion planning. This timeframe allowed the teams not only to become familiar with the available tools but also to explore the nuances of both joint-space and Cartesian-space control. The extended duration facilitated a hands-on approach, enabling participants to move beyond theoretical understanding and directly experience how different planning methodologies affect the expressiveness and complexity of robotic movements, which in turn informed their design choices.

The pursuit of expressive robotics, as detailed in this work, reveals a fascinating interplay between design and decay. Systems, even those as complex as embodied robots, are not static entities but rather evolve within the constraints of their creation and interaction. The presented toolbox – integrating physical interaction, a custom remote controller, and Blender – seeks to shape this evolution, allowing for nuanced control over robotic movement guided by principles of Laban Movement Analysis. As Linus Torvalds once stated, “Talk is cheap. Show me the code.” This sentiment aptly reflects the approach outlined in the paper; theoretical frameworks are valuable, but true understanding and progress emerge from the practical implementation and iterative refinement of these design tools, acknowledging that even the most carefully crafted systems will eventually age and require adaptation.

The Trajectory of Expression

The presented work, in its careful orchestration of physical interaction, digital tools, and movement analysis, reveals not a solution, but a sharpening of the central problem. Expressive robotics isn’t about achieving expression, but about understanding its decay – the inevitable loss of nuance as intention translates through mechanical systems. Each imperfect trajectory, each subtly missed cue, is a moment of truth in the timeline of interaction, a testament to the inherent friction between the digital and the embodied. The tools presented are, therefore, less about creation and more about precisely documenting this erosion.

Future work will inevitably focus on increasing fidelity – finer sensors, more responsive actuators, more sophisticated algorithms. However, this pursuit risks obscuring a crucial point: perfect replication of human movement isn’t the goal, nor is it even possible. The value lies in recognizing – and perhaps even embracing – the unique qualities that emerge from the limitations of the robotic form. The real challenge isn’t building a robot that moves like a human, but designing one whose ‘imperfections’ are meaningfully expressive.

Ultimately, this field will be defined not by the tools it creates, but by the questions it asks. The current focus on design tools is a necessary stage, but the long-term trajectory requires a shift toward understanding the temporal dynamics of interaction – how meaning shifts and degrades over time, and how these changes shape the experience of being with a machine. Technical debt, in this context, isn’t simply a coding problem; it’s the past’s mortgage paid by the present, a constant reminder of the compromises inherent in bringing intention into physical form.

Original article: https://arxiv.org/pdf/2602.04137.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 06:58