Author: Denis Avetisyan

New research challenges the idea that humans apply a double standard when interacting with robots, suggesting a surprisingly balanced moral assessment.

An empirical study finds no evidence of ethical asymmetry in human evaluations of positive and negative actions directed towards robots.

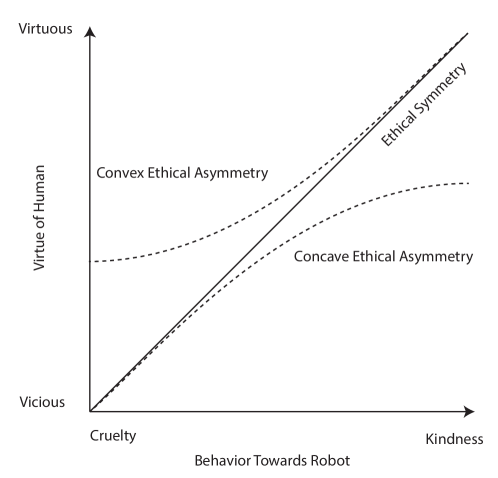

Despite robots’ growing integration into daily life, the ethical considerations surrounding human interaction with them remain contested, particularly regarding asymmetrical moral responses. This study, ‘Ethical Asymmetry in Human-Robot Interaction – An Empirical Test of Sparrow’s Hypothesis’, empirically investigated the proposition that negative actions toward robots are judged more harshly than equivalent positive actions. Results from a mixed experimental design revealed a symmetrical, rather than asymmetrical, relationship between the perceived moral permissibility of an action and the assessed virtues, challenging Sparrow’s initial hypothesis. How will a deeper understanding of these symmetrical responses shape the development of ethical guidelines for increasingly sophisticated human-robot collaborations?

Beyond Simple Rules: The Limits of Traditional Ethics in HRI

Conventional ethical systems, such as deontology and consequentialism, frequently struggle when applied to the complexities of human-robot interaction (HRI). Deontology, with its emphasis on strict rules and duties, can prove inflexible in situations requiring contextual judgment, while consequentialism, focused solely on maximizing positive outcomes, risks overlooking the importance of intent and moral character. These frameworks often treat actions as isolated events, failing to account for the ongoing development of trust and rapport crucial in HRI. Consequently, a reliance on rules or outcomes alone can lead to interactions that, while technically ‘ethical’ by these standards, feel impersonal, untrustworthy, or even manipulative, ultimately hindering the potential for beneficial collaboration between humans and robots.

Rather than prescribing actions based on rigid rules or projected outcomes, virtue ethics proposes a framework centered on the development of moral character in both humans and robots. This approach posits that ethical behavior arises from cultivating virtues – traits like compassion, honesty, and fairness – within an agent. For robotics, this means moving beyond simply programming robots to do the right thing, and instead focusing on designing systems that embody virtuous characteristics. Such a foundation offers greater adaptability to complex, unpredictable situations, as virtuous agents can apply their understanding of moral principles to novel scenarios, rather than being limited by pre-defined rules. By prioritizing character alongside capability, this ethical framework aims to foster trust and ensure that increasingly autonomous robots integrate seamlessly and beneficially into human society.

As robots transition from specialized tools to consistent companions and collaborators, the ethical landscape necessitates a move beyond simple rule-following or outcome-based assessments. The increasing integration of these agents into the very fabric of daily life – assisting in caregiving, education, and even emotional support – demands a profound consideration of moral character, not just in human-robot interaction, but potentially within the robots themselves. This isn’t merely about preventing harm; it’s about fostering trust, encouraging prosocial behaviors, and navigating the complex subtleties of social dynamics. A nuanced understanding of how character is perceived, expressed, and responded to becomes paramount, as the success of these interactions hinges on more than just functional correctness; it requires a shared understanding of virtuous conduct and reliable moral standing.

Successfully integrating robots into daily life hinges not merely on technical proficiency, but on establishing genuine trust, and that trust is profoundly affected by anticipating and mitigating unintended consequences. Current ethical guidelines often prove insufficient when robots navigate complex social situations, leaving room for unforeseen harms or eroded confidence. A nuanced understanding of how robots appear to act – their perceived intentions and moral character – is therefore critical; even a technically flawless robot can damage trust through seemingly insensitive or inappropriate behavior. Building robots capable of recognizing and responding to subtle social cues, and designing interactions that consistently demonstrate beneficial intent, is not simply about avoiding negative outcomes, but about proactively fostering positive relationships and solidifying human acceptance of increasingly autonomous machines.

Measuring Moral Character: The Cardinal Virtue Questionnaire

A questionnaire was developed to evaluate human perceptions of the four cardinal virtues – prudence, justice, temperance, and courage – specifically as they relate to interactions with robotic agents. This instrument was adapted from existing validated scales measuring these virtues in social contexts, but modified to focus on scenarios involving robots performing both beneficial and harmful actions. The questionnaire presents participants with hypothetical situations and asks them to rate the extent to which a robot’s response demonstrates each of the four virtues, providing a quantifiable assessment of perceived virtuous behavior in human-robot interaction.

The Questionnaire on Cardinal Virtues is a measurement tool designed to assess human perceptions of virtuous behavior exhibited by robots. It functions by presenting scenarios involving both positive and negative actions performed by a robotic agent, followed by questions gauging the extent to which the robot’s response reflects the cardinal virtues of prudence, justice, temperance, and courage. Responses are quantified using a Likert scale, enabling a numerical assessment of perceived virtuousness in human-robot interaction contexts. This allows researchers to move beyond qualitative observations and establish a quantifiable metric for evaluating robotic behavior from an ethical standpoint.

The reliability of the Questionnaire on Cardinal Virtues was assessed using PVS measurement, which demonstrated high internal consistency across all four virtue scales. Cronbach’s Alpha ranged from 0.961 to 0.971 across the prudence, justice, temperance, and courage scales. These values indicate that the items within each scale covary strongly, suggesting the questionnaire provides a dependable measure of perceived virtuous responses in human-robot interactions. High reliability is a precondition for validity rather than proof of it, but it supports the use of the questionnaire as a tool for quantifying the cardinal virtues.
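To make the reliability figure concrete, here is a minimal sketch of how Cronbach’s alpha is conventionally computed from item-level ratings. The function name `cronbach_alpha` and the `ratings` data are hypothetical illustrations, not the study’s code or data; the formula itself is the standard one, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores).

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one scale.

    responses: list of rows, one per participant; each row holds that
    participant's ratings on the k items of the scale.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item (column) across participants.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each participant's summed scale score.
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 7-point Likert ratings: 5 participants x 4 items.
ratings = [
    [6, 7, 6, 7],
    [2, 1, 2, 2],
    [4, 4, 5, 4],
    [7, 6, 7, 7],
    [3, 3, 2, 3],
]
alpha = cronbach_alpha(ratings)
print(f"alpha = {alpha:.3f}")
```

Values this close to 1 arise when participants who rate one item highly rate the other items in the scale highly too, which is the pattern the reported range of 0.961 to 0.971 implies.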

The study utilized an online experimental design to facilitate data collection from a diverse participant pool and maintain rigorous control over experimental variables. This approach involved presenting scenarios involving human-robot interactions to participants via a web-based platform. Utilizing an online environment allowed for recruitment beyond geographical limitations, yielding a larger and more representative sample size than would be feasible with traditional laboratory-based experiments. Furthermore, the platform enabled standardized presentation of stimuli and automated recording of responses, minimizing potential experimenter bias and ensuring consistency in data acquisition. This controlled environment was crucial for isolating the effects of specific variables related to perceptions of cardinal virtues in human-robot interaction.

Testing the Symmetry of Moral Judgment: Sparrow’s Hypothesis Under Scrutiny

The research was predicated on Sparrow’s Ethical Asymmetry Hypothesis, which posits a disproportionate negative reaction to harming robots compared to a positive reaction to benefiting them. Specifically, the hypothesis under test was that a given action causing harm to a robot would elicit a stronger condemnation than the equivalent action providing benefit would elicit praise. This expectation stems from the perceived lack of reciprocal moral obligation towards non-sentient entities, suggesting a lower threshold for negative moral judgment when robots are the recipients of harmful acts. The study aimed to empirically test whether negative interactions with robots are indeed subject to a greater degree of moral scrutiny than positive ones, potentially revealing an asymmetry in human moral assessment of human-robot interactions.

To investigate moral responses to human-robot interaction, a questionnaire was adapted and paired with specifically designed stimuli. These stimuli depicted scenarios involving human actions directed towards robots, systematically varying the valence of those actions. Positive actions – such as providing assistance or expressing gratitude – were presented alongside negative actions – including instances of neglect or mild harm. The intent was to create a controlled set of conditions allowing for the measurement of differing moral judgements based solely on the action’s valence, independent of the severity of the action itself. This manipulation formed the basis for assessing whether condemnation of negative acts would be stronger than praise for comparable positive acts.

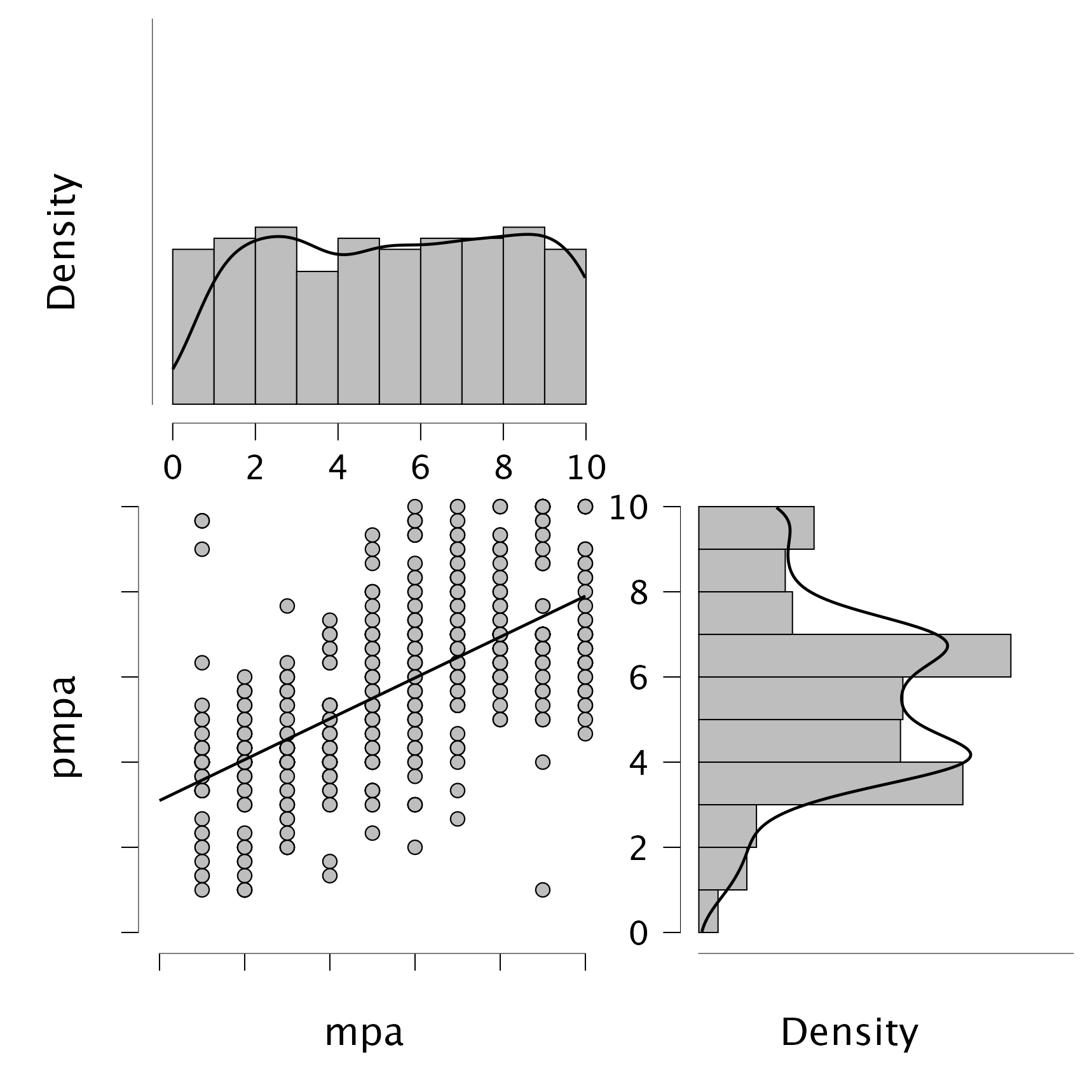

The manipulation check confirmed the efficacy of the stimuli in eliciting differential perceptions of moral permissibility. Analysis indicated that the designed scenarios successfully induced varying levels of acceptance for actions performed towards robots, accounting for 42.5% of the variance observed in participants’ Perceived Moral Permissibility of Action (PMPA) scores. This result demonstrates a statistically significant relationship between the manipulated action valence and the degree to which participants considered the actions morally justifiable, validating the stimuli as a reliable tool for further investigation into moral assessment in human-robot interaction.
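The text does not say which statistic produced the 42.5% figure. One common way to express "variance accounted for" by a manipulated factor is eta squared, the ratio of between-condition to total sum of squares. The sketch below assumes that statistic; the function name `eta_squared` and the scores are hypothetical, not the study’s data.

```python
from statistics import mean


def eta_squared(groups):
    """Share of total variance explained by group membership.

    groups: list of lists, one list of scores per experimental condition.
    """
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    ss_total = sum((x - grand_mean) ** 2 for x in all_scores)
    if ss_total == 0:
        raise ValueError("scores have no variance")
    # Between-groups sum of squares: group size times squared distance
    # of each group mean from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    return ss_between / ss_total


# Hypothetical permissibility ratings for two valence conditions.
positive_actions = [6, 5, 7, 6]
negative_actions = [2, 3, 1, 2]
effect = eta_squared([positive_actions, negative_actions])
print(f"eta squared = {effect:.3f}")
```

A value of 0.425 under this reading would mean that a little under half of the spread in PMPA scores is attributable to whether the depicted action was positive or negative.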

Statistical analysis of participant responses, utilizing Factor Analysis, indicated a symmetrical relationship between perceived virtue and moral permissibility when evaluating human-robot interactions. The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy was 0.984, exceeding the generally accepted threshold of 0.7 and confirming the data’s suitability for factor analysis. This finding directly challenges Sparrow’s Ethical Asymmetry Hypothesis, which posited a greater condemnation of negative actions towards robots than praise for positive actions; instead, the data demonstrate that increases in perceived virtue correlate with proportionally similar increases in moral permissibility, and vice versa, resulting in symmetrical curves.
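For readers unfamiliar with the KMO measure, the following sketch shows the standard overall formula: the sum of squared off-diagonal correlations divided by that sum plus the sum of squared off-diagonal partial correlations, with partial correlations taken from the inverse of the correlation matrix. The helper names (`pearson`, `invert`, `kmo`) and the item data are hypothetical and written in plain Python for illustration; in practice a statistics package would be used.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * c for v, c in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def kmo(columns):
    """Overall KMO; columns is a list of item score lists."""
    k = len(columns)
    corr = [[pearson(columns[i], columns[j]) for j in range(k)]
            for i in range(k)]
    inv = invert(corr)
    r2 = p2 = 0.0
    for i in range(k):
        for j in range(k):
            if i != j:
                # Partial correlation of items i and j given all others.
                partial = -inv[i][j] / sqrt(inv[i][i] * inv[j][j])
                r2 += corr[i][j] ** 2
                p2 += partial ** 2
    return r2 / (r2 + p2)


# Hypothetical ratings: 3 items, 6 participants.
items = [
    [1, 2, 3, 4, 5, 6],
    [2, 1, 4, 3, 6, 5],
    [1, 3, 2, 5, 4, 6],
]
kmo_value = kmo(items)
print(f"KMO = {kmo_value:.3f}")
```

KMO approaches 1 when the partial correlations are small relative to the raw correlations, meaning the items share common variance that factors can capture; that is the situation a value of 0.984 describes.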

Beyond Damage Control: Implications for Ethical HRI Design

The study’s examination of how people morally assess actions directed at robots reveals a surprising symmetry in how positive and negative behaviors are perceived. Contrary to the expectation that harming a robot would elicit stronger condemnation than benefiting one elicits praise, the results indicate a relatively balanced response. This suggests that designers need not solely focus on minimizing potential damage when developing robots; instead, a holistic approach prioritizing both beneficial contributions and the prevention of negative outcomes is warranted. The findings challenge the assumption that mitigating harm should be the dominant ethical consideration, implying that fostering positive interactions is equally crucial for building trustworthy human-robot relationships. Ultimately, this symmetry underscores the importance of a nuanced ethical framework that considers the full spectrum of behavior in human-robot interaction, rather than solely prioritizing damage control.

The observed symmetry in human responses, where negative actions are not condemned significantly more than positive actions are praised, underscores a critical need for proactive ethical frameworks in human-robot interaction. This isn’t an argument for diminished safety precautions, but rather a call for equally diligent consideration of both potential harms and benefits. Robust safety mechanisms are essential to prevent detrimental interactions, yet ethical guidelines must also address the responsible implementation of beneficial actions, ensuring fairness, transparency, and the avoidance of unintended consequences. A comprehensive approach to ethical HRI requires anticipating not only how to mitigate risks, but also how to cultivate trustworthy relationships founded on reciprocal respect and well-being, demanding a forward-looking strategy that governs both the prevention of harm and the responsible delivery of assistance.

The assessment of robot actions, and the attribution of moral standing to them, is not universally consistent; therefore, future investigations must account for the significant role of cultural and individual differences. Studies indicate that ethical judgments in human-robot interaction (HRI) are shaped by pre-existing cultural norms regarding agency, intentionality, and social responsibility, meaning a robot’s behavior perceived as benevolent in one culture might be viewed with suspicion in another. Beyond broad cultural trends, individual variations – encompassing personality traits, prior experiences with technology, and personal values – further modulate these assessments. A deeper understanding of these nuanced perspectives is critical for developing HRI systems that align with diverse ethical expectations, avoid unintentional offense, and foster genuinely trustworthy relationships across a global user base. This necessitates moving beyond standardized ethical frameworks to embrace more adaptable and personalized approaches to robot design and behavior.

Integrating virtue ethics into the design of human-robot interactions offers a pathway towards cultivating relationships built on trust, respect, and mutual benefit. This approach moves beyond simply avoiding harm, instead proactively embedding qualities like honesty, compassion, and fairness into a robot’s operational framework. By considering how a virtuous agent would act, designers can create robots that not only perform tasks effectively, but also inspire confidence and encourage positive social interactions. This isn’t merely about programming rules, but about fostering a design philosophy where the robot’s actions consistently demonstrate moral character, leading to more meaningful and enduring connections with humans and promoting a collaborative, rather than transactional, dynamic. Ultimately, prioritizing virtue in design anticipates and encourages ethical behavior, shaping robots as partners capable of enriching human lives.

The study’s finding, a symmetrical rather than asymmetrical response to actions directed at robots, feels predictably human. It suggests that even when dealing with artificial entities, the core principles of moral evaluation remain stubbornly consistent. As Andrey Kolmogorov observed, “The most important things are often the most obvious.” Perhaps the lack of observed asymmetry isn’t a surprising anomaly, but a simple affirmation of how humans apply (or fail to apply) ethical considerations, regardless of the target. This mirrors the inherent tension in system design; elegant theories about human-robot interaction will inevitably confront the messy reality of deployment, revealing that virtue assessment, even in these novel contexts, remains firmly rooted in established cognitive biases. Everything optimized will one day be optimized back.

So, What Breaks Next?

The absence of demonstrated asymmetry in human judgment of robotic interactions (that is, humans don’t appear to disproportionately condemn negative acts against robots) is… predictably anticlimactic. The initial hypothesis posited a moral shielding of sorts for artificial agents, a notion that now seems optimistic, even naive. Perhaps humans are simply consistent in their tolerance for appalling behavior, regardless of the target’s silicon-based anatomy. One suspects production (in this case, increasingly realistic and integrated robots) will eventually provide a definitive answer. Until then, the field continues to refine its ability to state the obvious.

Future work will undoubtedly explore increasingly nuanced scenarios: robotic deception, betrayal, the infliction of digital suffering. Each iteration will reveal further limitations in current ethical frameworks, exposing the tendency to retrofit human moralities onto entities that may not be subject to them. The challenge isn’t building ethical AI; it’s acknowledging that humans will find a way to be disappointed by it, no matter how cleverly programmed.

It’s a comforting truth, really. Everything new is old again, just renamed and still broken. The quest for robotic ethics, therefore, feels less like innovation and more like an elaborate exercise in documenting inevitable failure. One anticipates a wealth of papers detailing how things went wrong, long after the robots have stopped caring, if they ever did.

Original article: https://arxiv.org/pdf/2602.02745.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 02:07