Author: Denis Avetisyan

As robots become increasingly autonomous, ensuring their software is rigorously tested is paramount, and this review examines the current state of robotics testing practices.

This paper maps robotics testing to established software engineering standards, identifying critical gaps in automation, coverage, and bridging the simulation-to-reality divide.

Despite increasing reliance on robotic systems in critical applications, ensuring their reliability remains a significant challenge due to the complexities of real-world interaction and autonomous operation. This paper, ‘Before Autonomy Takes Control: Software Testing in Robotics’, presents a mapping study of 247 robotics testing publications, relating current practices to established software testing theory. Our analysis reveals a gap between traditional software testing standards and the unique demands of robotics, particularly regarding systematic test automation and bridging the simulation-to-reality gap. What novel approaches are needed to comprehensively validate and verify the safety and robustness of increasingly autonomous robotic systems?

The Inevitable Complexity of Real-World Robotics

Robotic systems, unlike conventional software, operate within the unpredictable realm of the physical world, demanding a testing paradigm that transcends code-based verification. Traditional software testing focuses on logical correctness, but a robot’s reliability hinges on its ability to navigate imperfect sensors, react to unforeseen environmental changes, and maintain stable physical interactions. This introduces complexities such as calibration errors, mechanical wear, and the sheer variability of real-world objects – factors absent in purely digital systems. Consequently, evaluating a robot requires not only confirming its algorithms but also validating its physical performance through extensive simulations and, crucially, real-world trials that expose it to a diverse range of conditions and potential failure modes. The challenge lies in creating test scenarios that adequately capture this physical complexity and ensure safe, dependable operation.

Because the physical environment is continuous and often unpredictable, evaluation must cover a robot’s ability to perceive, react, and adapt across a broad spectrum of real-world scenarios, not just the correctness of its code. In practice, that means pairing simulated environments with extensive physical trials under diverse conditions – varying lighting, unexpected obstacles, and imperfect surfaces – to assess robustness. Complementary approaches, including randomized motion planning, adversarial testing with deliberately challenging inputs, and long-duration deployment studies, help uncover edge cases and establish reliable performance across operational contexts. The complexity of these interactions calls for holistic testing strategies that prioritize adaptability and resilience in the face of environmental uncertainty.

The potential for unpredictable behavior in robotic systems underscores a critical deficiency in current testing practices. A comprehensive review of the field reveals a significant gap in systematic approaches to validation, meaning that many robots are deployed with inadequately assessed performance in real-world scenarios. This lack of rigorous testing isn’t merely a matter of inconvenience; it directly translates to potential safety hazards for humans interacting with these machines and substantial delays in bringing beneficial robotic technologies to market. Addressing this requires moving beyond traditional software verification to encompass the complexities of physical interaction, environmental variability, and long-term operational reliability, which in turn calls for the development and adoption of robust, standardized testing methodologies across the robotics community.

A Layered Approach: Because Nothing Ever Works the First Time

Simulation-based testing forms the initial phase of robotic system validation due to its capacity for rapid iteration and comprehensive scenario coverage. This approach leverages virtual environments to execute a large number of tests, enabling the identification of design flaws and control logic errors before physical implementation. By manipulating variables and conditions within the simulation, developers can assess system behavior under a wide range of inputs and edge cases, significantly reducing development time and cost. The speed of simulation allows for repeated testing and refinement of algorithms and hardware configurations, focusing on areas where potential issues are identified prior to resource-intensive physical prototyping and field trials.
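The parameter sweep described above can be sketched in a few lines. This is a minimal illustration, assuming a toy one-dimensional braking model in place of a real simulator; `simulate_braking`, `sweep`, and the parameter values are hypothetical and do not come from the paper.

```python
import itertools

def simulate_braking(speed, friction, reaction_delay, obstacle_distance):
    """Toy stand-in for a simulator: return the stopping margin in metres.

    A negative margin means the simulated robot reached the obstacle.
    """
    g = 9.81  # gravitational acceleration, m/s^2
    travelled_before_braking = speed * reaction_delay
    braking_distance = speed ** 2 / (2 * friction * g)
    return obstacle_distance - (travelled_before_braking + braking_distance)

def sweep(speeds, frictions, delays, obstacle_distance=2.0):
    """Run every parameter combination and collect the failing scenarios."""
    failures = []
    for speed, friction, delay in itertools.product(speeds, frictions, delays):
        margin = simulate_braking(speed, friction, delay, obstacle_distance)
        if margin < 0:
            failures.append({"speed": speed, "friction": friction,
                             "delay": delay, "margin": margin})
    return failures

# Illustrative values only: 3 speeds x 2 friction levels x 2 delays = 12 runs.
failures = sweep(speeds=[0.5, 1.0, 2.0], frictions=[0.2, 0.8], delays=[0.1, 0.5])
for case in failures:
    print(case)
```

With these values, the only failing combination is the fastest speed on the low-friction surface with the long reaction delay, which is the kind of corner a sweep is meant to surface before any hardware is involved.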

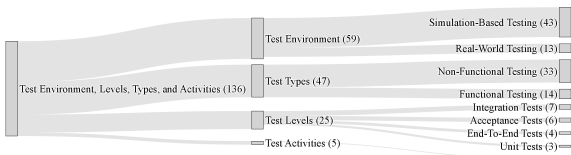

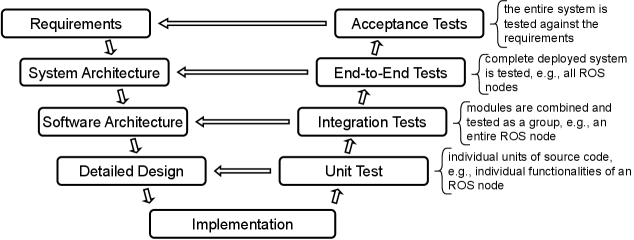

While simulation-based testing is prevalent in initial robotics development, a systematic review of current literature reveals a notable gap in the consistent application of foundational testing methodologies. Specifically, `UnitTesting` – verifying individual software modules – `IntegrationTesting` – confirming the correct interaction between components – and `SystemLevelTesting` – evaluating the complete integrated system – are underutilized despite their proven effectiveness in software engineering. This limited adoption suggests a potential reliance on broader system testing without sufficient verification of core functionalities and component interactions, potentially increasing debugging time and the risk of undetected errors in complex robotic systems.
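To make the three levels concrete, here is a small sketch using Python's standard `unittest` module. The controller, its parameters, and the test names are invented for illustration; the paper does not prescribe this structure.

```python
import unittest

def clamp_velocity(v, v_max):
    """Module under unit test: limit a commanded velocity to [-v_max, v_max]."""
    return max(-v_max, min(v_max, v))

class ProportionalController:
    """Second module: turn a position error into a clamped velocity command."""
    def __init__(self, gain, v_max):
        self.gain = gain
        self.v_max = v_max

    def command(self, target, position):
        return clamp_velocity(self.gain * (target - position), self.v_max)

def drive_to(controller, target, position=0.0, dt=0.1, steps=200):
    """Whole (toy) system: integrate the commanded velocity over time."""
    for _ in range(steps):
        position += controller.command(target, position) * dt
    return position

class UnitTests(unittest.TestCase):
    """Unit level: one function in isolation."""
    def test_clamp_limits_both_directions(self):
        self.assertEqual(clamp_velocity(5.0, 1.0), 1.0)
        self.assertEqual(clamp_velocity(-5.0, 1.0), -1.0)
        self.assertEqual(clamp_velocity(0.3, 1.0), 0.3)

class IntegrationTests(unittest.TestCase):
    """Integration level: the controller and the clamp working together."""
    def test_controller_output_respects_limit(self):
        controller = ProportionalController(gain=2.0, v_max=1.0)
        self.assertEqual(controller.command(target=10.0, position=0.0), 1.0)

class SystemTests(unittest.TestCase):
    """System level: the closed loop reaches its goal."""
    def test_robot_reaches_target(self):
        controller = ProportionalController(gain=2.0, v_max=1.0)
        self.assertAlmostEqual(drive_to(controller, target=3.0), 3.0, places=3)
```

Saved to a file, these can be run with `python -m unittest <file>`. A failure at the unit level points at one function, while a failure only at the system level points at the interaction, which is the debugging advantage the layered approach is meant to provide.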

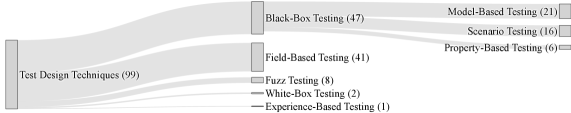

Field-based testing represents a crucial validation step following simulation, subjecting the robotic system to the unpredictable variables present in its intended operational environment. This phase assesses performance metrics – including navigation accuracy, manipulation success rates, and sensor reliability – against real-world disturbances such as variable lighting, uneven terrain, and dynamic obstacles. Data collected during field tests informs refinement of both software algorithms and hardware configurations, identifying discrepancies between simulated and actual behavior, and allowing for iterative improvements to system robustness and overall performance. Unforeseen circumstances encountered during field testing frequently necessitate modifications to the robot’s control logic or physical design, highlighting limitations of the initial simulation parameters and providing valuable data for future iterations.

The Inevitable Disconnect: Simulation Lies, But It Gives You a Starting Point

The discrepancy between robotic system performance in simulation and the physical world, termed the `SimulationRealityGap`, poses a substantial challenge to robust robotic development and deployment. This gap arises from several key limitations inherent in simulation environments. Imperfect models of physical phenomena – including friction, contact forces, and material properties – fail to accurately replicate real-world dynamics. Furthermore, simulated sensors are subject to idealized noise profiles that do not reflect the complexities of physical sensor data, and actuators are often modeled without accounting for real-world constraints, backlash, or non-linearities. Consequently, control policies and algorithms validated in simulation may exhibit degraded or unpredictable behavior when transferred to a physical robot, necessitating extensive real-world testing and iterative refinement.

Field-based testing is essential for robotic system validation because simulations, while capable of covering a wide range of scenarios, inherently deviate from real-world conditions due to model inaccuracies and unmodeled disturbances. These discrepancies manifest as performance differences between the simulated environment and actual deployment, necessitating evaluation in the target operational environment. Field testing allows for the identification of these systemic errors, providing data to refine simulation parameters, validate control algorithms against real-world physics, and ultimately improve the reliability and robustness of robotic systems before widespread deployment. This process reveals limitations not apparent in simulation, such as the impact of unpredictable environmental factors or the nuances of sensor behavior in non-ideal conditions.
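One simple way to put a number on the difference between simulated and field behavior is a root-mean-square error over matched samples. The sketch below assumes both trajectories are sampled at the same instants; the function names, the tolerance, and the position values are placeholders for illustration, not measurements from the paper.

```python
import math

def rmse(simulated, measured):
    """Root-mean-square error between two equally sampled trajectories."""
    if len(simulated) != len(measured):
        raise ValueError("trajectories must have the same number of samples")
    squared = [(s - m) ** 2 for s, m in zip(simulated, measured)]
    return math.sqrt(sum(squared) / len(squared))

def exceeds_tolerance(simulated, measured, tolerance):
    """True when the sim-to-field discrepancy is larger than the tolerance."""
    return rmse(simulated, measured) > tolerance

# Placeholder positions in metres along one axis, one value per time step.
sim_positions = [0.0, 0.10, 0.20, 0.30, 0.40]
field_positions = [0.0, 0.09, 0.17, 0.26, 0.34]

gap = rmse(sim_positions, field_positions)
print(f"sim-to-field RMSE: {gap:.4f} m")
print("needs model refinement:", exceeds_tolerance(sim_positions, field_positions, 0.02))
```

A single scalar hides where the divergence happens, so in practice it would likely be tracked per scenario and per metric; the point here is only that the gap can be measured and fed back into the simulation parameters.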

Mitigating the `SimulationRealityGap` relies on techniques such as domain randomization, which trains robots across a wide distribution of simulated parameters to increase robustness to unforeseen real-world variations, and sensor calibration, which reduces systematic errors in sensor readings. Current research indicates that these methods improve the transfer of behavior from simulation to real-world deployment. However, a lack of standardized procedures for implementing domain randomization and sensor calibration hinders comparability of results and impedes widespread adoption; this review highlights the need for improved standardization in these areas to make robotic system development more reliable and reproducible.
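Both techniques are easy to sketch in miniature. The example below assumes uniform sampling for domain randomization and a linear (scale plus offset) sensor error model for calibration; the parameter names, ranges, and synthetic sensor readings are hypothetical, and real systems may need richer distributions and error models.

```python
import random

# Hypothetical parameter ranges; real ranges depend on the robot and simulator.
PARAMETER_RANGES = {
    "friction": (0.4, 1.0),
    "payload_kg": (0.0, 2.0),
    "sensor_noise_std": (0.0, 0.05),
}

def sample_domain(rng, ranges=PARAMETER_RANGES):
    """Domain randomization: draw one set of simulation parameters uniformly."""
    return {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}

def fit_linear_calibration(raw, reference):
    """Least-squares fit of reference ~= scale * raw + offset."""
    n = len(raw)
    mean_raw = sum(raw) / n
    mean_ref = sum(reference) / n
    cov = sum((x - mean_raw) * (y - mean_ref) for x, y in zip(raw, reference))
    var = sum((x - mean_raw) ** 2 for x in raw)
    scale = cov / var
    offset = mean_ref - scale * mean_raw
    return scale, offset

rng = random.Random(0)  # fixed seed so the sampled domains can be reproduced
domains = [sample_domain(rng) for _ in range(3)]

# Synthetic readings from a sensor that reads 2% high with a 0.1 offset.
reference = [0.0, 1.0, 2.0, 3.0]
raw = [1.02 * r + 0.1 for r in reference]
scale, offset = fit_linear_calibration(raw, reference)
corrected = [scale * x + offset for x in raw]
```

Recording the seed and the ranges alongside the results is one small step toward the comparability the review finds lacking, since two teams can only compare outcomes if they know which distribution each one sampled from.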

Standardization and Automation: Because Chaos Isn’t a Business Model

The implementation of standardized testing processes is fundamental to building reliable robotic systems, as it establishes a predictable framework throughout the entire testing lifecycle. These processes move beyond ad-hoc evaluations by defining clear protocols for test design, execution, and reporting, ensuring that tests are consistently applied and interpreted. This consistency is not merely about uniformity; it allows for meaningful comparisons between tests conducted at different times or by different teams, enabling the identification of trends and regressions with greater accuracy. Crucially, standardization facilitates traceability – a documented path from system requirements to specific test cases and results – which is essential for verification, validation, and ultimately, demonstrating the robot’s adherence to specified performance criteria and safety standards.
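Traceability, in its simplest form, is a mapping from requirement IDs to the test cases that claim to verify them. The sketch below assumes each test result carries the IDs it covers; `CaseResult`, the helper functions, and the `REQ-`/`T-` identifiers are made up for illustration.

```python
from dataclasses import dataclass

@dataclass
class CaseResult:
    test_id: str
    requirement_ids: tuple  # requirement IDs this test claims to verify
    passed: bool

def build_traceability(requirement_ids, results):
    """Map each requirement ID to the test results that reference it."""
    matrix = {req_id: [] for req_id in requirement_ids}
    for result in results:
        for req_id in result.requirement_ids:
            if req_id not in matrix:
                raise KeyError(
                    f"{result.test_id} references unknown requirement {req_id}")
            matrix[req_id].append(result)
    return matrix

def untested(matrix):
    """Requirements with no test pointing at them."""
    return sorted(req for req, cases in matrix.items() if not cases)

def failing(matrix):
    """Requirements with at least one failed test."""
    return sorted(req for req, cases in matrix.items()
                  if any(not case.passed for case in cases))

requirements = ["REQ-1", "REQ-2", "REQ-3"]
results = [
    CaseResult("T-01", ("REQ-1",), passed=True),
    CaseResult("T-02", ("REQ-1", "REQ-2"), passed=False),
]
matrix = build_traceability(requirements, results)
print("untested:", untested(matrix))
print("failing:", failing(matrix))
```

Rejecting tests that reference unknown requirements is a deliberate choice in this sketch: it keeps the documented path honest in both directions, so a renamed or deleted requirement cannot silently leave orphaned tests behind.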

Test automation fundamentally alters the pace and scope of robotic system validation. By employing specialized tools and scripting languages, developers can execute a significantly larger volume of tests in a fraction of the time required for manual procedures. This acceleration isn’t simply about speed; it unlocks the potential for vastly expanded test coverage, allowing for the exploration of a wider range of operating conditions and edge cases. Automated tests can repeatedly and consistently verify functionality, identify subtle regressions introduced by code changes, and ultimately, contribute to a more robust and dependable robotic system. The efficiency gained through automation enables iterative development cycles, facilitating faster prototyping and quicker refinement of designs, while also freeing up human testers to focus on more complex and nuanced evaluations.

Rigorous verification of robotic systems hinges on automated tests informed by a well-defined `SystemRequirementsSpecification`. These tests don’t merely confirm functionality; they provide a systematic means of ensuring the robot consistently meets its intended design goals. By automating the testing process and linking it directly to documented requirements, developers can efficiently identify deviations from the specification, including regressions (behavior that previously met a requirement but no longer does after a change) that might otherwise go unnoticed. This proactive approach allows for rapid correction of errors, ultimately enhancing the robot’s performance, safety, and dependability throughout its operational lifespan. More frequent testing cycles, in turn, provide a higher degree of confidence in the system’s reliability and accelerate the development process.
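Linking automated checks to a specification can be as plain as a table of thresholds keyed by requirement ID. The following sketch assumes each requirement reduces to one measured metric and one comparison; the requirement IDs, metric names, and numbers are hypothetical. It also flags requirements that passed in a baseline run but fail in the current one.

```python
import operator

# Hypothetical specification: requirement ID -> (metric, comparison, threshold).
SPEC = {
    "REQ-NAV-1": ("position_error_m", operator.le, 0.05),
    "REQ-GRASP-1": ("grasp_success_rate", operator.ge, 0.95),
    "REQ-STOP-1": ("stop_latency_s", operator.le, 0.20),
}

def evaluate(metrics, spec=SPEC):
    """Return {requirement ID: True/False} for one set of measured metrics."""
    return {req: compare(metrics[name], limit)
            for req, (name, compare, limit) in spec.items()}

def newly_failing(baseline, current):
    """Requirements that passed in the baseline run but fail in the current run."""
    return sorted(req for req, ok in current.items()
                  if not ok and baseline.get(req, False))

# Placeholder measurements from two automated runs.
baseline_metrics = {"position_error_m": 0.03, "grasp_success_rate": 0.97,
                    "stop_latency_s": 0.15}
current_metrics = {"position_error_m": 0.04, "grasp_success_rate": 0.93,
                   "stop_latency_s": 0.15}

baseline = evaluate(baseline_metrics)
current = evaluate(current_metrics)
print("newly failing:", newly_failing(baseline, current))
```

Here the navigation error got worse but stayed within its limit, so only the grasp requirement is reported. Keeping the thresholds in data rather than scattered through test code makes it easier to audit the tests against the written specification.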

A robot’s dependability is fundamentally linked to the thoroughness of its testing, specifically the degree to which tests exercise the system’s code, requirements, and operating conditions, a measure known as test coverage. Greater test coverage, efficiently achieved through test automation, builds stronger confidence in the robot’s reliability by revealing potential flaws and ensuring adherence to specified requirements. However, a recent systematic review of the field indicates a critical gap: the absence of universally adopted standards for testing procedures and coverage metrics. This lack of standardization not only impedes consistent evaluation of robotic systems but also significantly hinders the reproducibility of research findings, slowing overall progress and creating challenges for validating advancements in robotics.
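Since no standard coverage metric exists for robotics, any concrete definition is a choice. One possible definition, sketched below, is scenario coverage: the fraction of all combinations of operating conditions that at least one test has exercised. The condition dimensions and executed scenarios are illustrative assumptions.

```python
import itertools

def scenario_coverage(dimensions, executed):
    """Fraction of all condition combinations exercised by at least one test.

    Returns the coverage ratio and the sorted list of untested combinations.
    """
    all_combinations = set(itertools.product(*dimensions.values()))
    covered = all_combinations & set(executed)
    missing = sorted(all_combinations - covered)
    return len(covered) / len(all_combinations), missing

# Hypothetical operating conditions: 2 x 2 x 2 = 8 combinations.
dimensions = {
    "lighting": ["bright", "dim"],
    "terrain": ["flat", "uneven"],
    "obstacles": ["static", "dynamic"],
}
executed = [
    ("bright", "flat", "static"),
    ("bright", "flat", "dynamic"),
    ("dim", "flat", "static"),
    ("dim", "uneven", "dynamic"),
    ("bright", "flat", "static"),  # repeated run, counted once
]

coverage, missing = scenario_coverage(dimensions, executed)
print(f"scenario coverage: {coverage:.0%}")
for combo in missing:
    print("untested:", combo)
```

The full combination count grows multiplicatively with every added dimension, so a real suite would probably settle for pairwise or risk-weighted coverage instead. Whatever the definition, reporting it explicitly is what makes results comparable across teams.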

The pursuit of fully autonomous systems in robotics inevitably encounters the limitations of testing. This paper diligently charts the evolution of testing practices, revealing a familiar pattern: what begins as innovative automation often devolves into complex, brittle infrastructure. It’s a cycle observed across decades of software development. As the article highlights, bridging the simulation-to-reality gap remains a persistent challenge, a testament to the inherent unpredictability of real-world deployments. Linus Torvalds aptly observed, “Most programmers think that if their code works, it must be right.” The irony isn’t lost; elegant simulations offer a comforting illusion, but production, as always, will find a way to expose the flaws, demanding a pragmatic acceptance of ongoing maintenance and adaptation.

What’s Next?

The pursuit of comprehensive robotics testing, as this review demonstrates, inevitably circles back to the oldest problem in computing: managing complexity. Each layer of abstraction, each attempt to optimize for specific scenarios, adds to the surface area for unexpected failures. The drive toward full automation will undoubtedly yield gains in regression testing, but it also promises a future where edge cases are discovered not in controlled environments, but by increasingly autonomous systems in the wild. Every optimization will one day be optimized back, and the simulation-to-reality gap will not be bridged, precisely; it will be continually, expensively, and creatively circumvented.

The mapping of established software testing principles onto robotics reveals less a deficiency in methodology and more a fundamental difference in scale. Verification becomes less about proving the absence of bugs and more about accepting a defined rate of failure. System-level testing, therefore, isn’t about achieving absolute coverage (an impossibility in a continuously interacting world) but about establishing confidence intervals. Architecture isn’t a diagram; it’s a compromise that survived deployment.
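To show what a confidence interval on a failure rate looks like in practice, here is a sketch using the Wilson score interval, one common choice for binomial proportions. It assumes trials are independent and identically distributed, which field trials often are not; the trial counts are illustrative.

```python
import math

def wilson_interval(failures, trials, z=1.96):
    """Wilson score interval for a failure probability (z=1.96 is about 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= failures <= trials:
        raise ValueError("failures must be between 0 and trials")
    p_hat = failures / trials
    denominator = 1 + z ** 2 / trials
    centre = (p_hat + z ** 2 / (2 * trials)) / denominator
    half_width = (z * math.sqrt(p_hat * (1 - p_hat) / trials
                                + z ** 2 / (4 * trials ** 2))) / denominator
    return max(0.0, centre - half_width), min(1.0, centre + half_width)

# Illustrative counts: no observed failures in 100 trials, then 5 in 100.
for failures in (0, 5):
    low, high = wilson_interval(failures, 100)
    print(f"{failures}/100 failures -> [{low:.4f}, {high:.4f}]")
```

Under these assumptions, a clean run of 100 trials still leaves an upper bound of roughly 3.7% on the failure rate. A spotless test log is evidence about a rate, not proof of absence, which is the shift in mindset the paragraph above describes.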

The field doesn’t refactor code-it resuscitates hope. Future work will likely focus not on creating perfect simulations, but on developing robust, adaptive testing frameworks that can learn from real-world failures, and on embracing the inherent uncertainty of physical systems. The real challenge isn’t building robots that don’t fail, but building systems that fail gracefully, and that can be safely and efficiently restarted.

Original article: https://arxiv.org/pdf/2602.02293.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-03 14:39