Author: Denis Avetisyan

As AI systems become increasingly pervasive, a robust mechanism for independent assurance is critical to ensure their safety, fairness, and alignment with societal values.

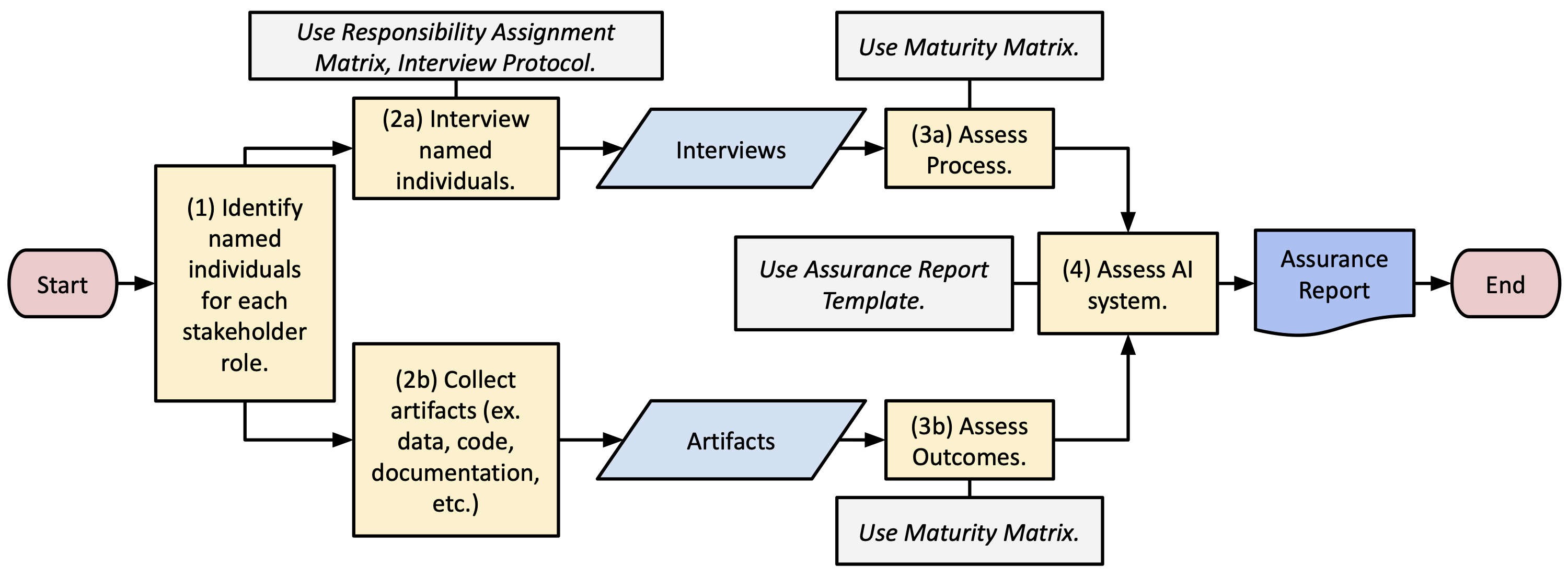

This paper details the design, prototyping, and initial testing of a third-party AI assurance framework focused on comprehensive risk management and outcome-based evaluation.

Despite growing recognition of the need for responsible AI, current evaluation frameworks often lack comprehensive, end-to-end approaches that address both development processes and real-world outcomes. This paper, ‘Toward Third-Party Assurance of AI Systems: Design Requirements, Prototype, and Early Testing’, introduces a novel third-party assurance framework designed to bridge this gap, emphasizing credible, actionable evaluation throughout the AI lifecycle. By detailing a prototype (including responsibility matrices, stakeholder interview protocols, and a maturity assessment), the authors demonstrate its usability across diverse organizational contexts and its effectiveness in identifying issues specific to individual AI systems. Can this framework pave the way for more trustworthy and aligned AI deployments, ultimately fostering greater public confidence in these increasingly pervasive technologies?

The Inevitable Cascade: Understanding AI’s Expanding Risk Surface

The increasing integration of artificial intelligence into the foundational systems of modern life – encompassing power grids, financial markets, healthcare delivery, and transportation networks – fundamentally alters the nature of AI failures. No longer are algorithmic errors simply frustrating inconveniences; they represent escalating potential hazards with far-reaching consequences. A malfunctioning AI in a recommendation engine might lead to lost revenue, but a failure within an automated air traffic control system, or an AI-driven emergency response platform, could directly endanger lives and compromise public safety. This shift demands a proactive and rigorous approach to AI safety, recognizing that the stakes have moved beyond performance metrics to encompass systemic resilience and the prevention of catastrophic outcomes as these technologies become inextricably linked to essential services.

Contemporary assessments of artificial intelligence frequently prioritize achieving high scores on specific tasks, creating a skewed perception of overall system reliability. This narrow focus on performance metrics – such as accuracy or speed – often overlooks critical vulnerabilities related to unintended consequences, bias amplification, or susceptibility to adversarial attacks. Consequently, AI systems might excel in controlled environments but falter unpredictably when deployed in real-world scenarios with complex, unforeseen interactions. This disconnect between benchmark performance and real-world robustness poses significant risks, particularly as AI increasingly integrates into safety-critical applications, demanding a more holistic evaluation that encompasses systemic risks and ethical implications beyond simple performance scores.

To realize the benefits of artificial intelligence safely, assurance must shift toward a comprehensive, lifecycle-focused approach. This means moving beyond testing an AI system’s performance on specific tasks to continuous evaluation throughout its existence: from initial design and development, through deployment and ongoing operation, to retirement. Such an approach acknowledges that AI systems are not static; their behavior can change as they are retrained or encounter new data and changing environments. By proactively identifying and mitigating risks throughout this lifecycle, including technical failures, ethical biases, and unintended consequences, developers and organizations can build justified trust in AI’s reliability and safety. That trust, in turn, is what enables wider adoption and more impactful applications in critical sectors such as healthcare, finance, and infrastructure.

Despite a proliferation of ethical guidelines and governance frameworks for artificial intelligence, a significant gap persists between stated principles and practical implementation. Many organizations struggle to move beyond aspirational statements, lacking the concrete tools and methodologies needed to operationalize AI safety and fairness. Current frameworks frequently outline what should be achieved – transparency, accountability, bias mitigation – but offer limited guidance on how to achieve these goals throughout the entire AI lifecycle, from data collection and model training to deployment and monitoring. This disconnect hinders responsible AI development, leaving organizations vulnerable to unintended consequences and eroding public trust. Bridging this gap requires a shift towards actionable frameworks, incorporating standardized testing procedures, robust auditing mechanisms, and clear metrics for evaluating AI system performance beyond simple accuracy rates.

The Architecture of Oversight: A Lifecycle Framework for AI Assurance

The AI Assurance Framework structures evaluation of AI systems across the complete lifecycle, encompassing planning and design, data acquisition and preparation, model development and training, deployment, and ongoing monitoring and maintenance. This lifecycle approach necessitates assessment at each stage, considering inputs, processes, and outputs to identify potential risks and ensure adherence to pre-defined quality and ethical standards. Specifically, evaluation isn’t limited to final performance metrics; instead, it incorporates iterative checks on data quality, model bias, system robustness, and operational security throughout the entire process. The framework’s holistic nature allows for early detection of issues, reducing the likelihood of costly rework or deployment failures and fostering continuous improvement.
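To make the stage-by-stage idea concrete, here is a minimal Python sketch, assuming each lifecycle stage is assessed through simple pass/fail checks on its inputs, processes, and outputs. The stage names follow the list above; the check names, the `StageAssessment` class, and the `earliest_failing_stage` helper are hypothetical illustrations, not part of the paper's prototype.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Lifecycle stages in the order the framework lists them. The check names used
# below are hypothetical placeholders, not criteria taken from the paper.
STAGES = (
    "planning_and_design",
    "data_acquisition_and_preparation",
    "model_development_and_training",
    "deployment",
    "monitoring_and_maintenance",
)


@dataclass
class StageAssessment:
    stage: str
    # Each mapping records check name -> whether it passed, for one facet of the stage.
    inputs: dict[str, bool] = field(default_factory=dict)
    processes: dict[str, bool] = field(default_factory=dict)
    outputs: dict[str, bool] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.stage not in STAGES:
            raise ValueError(f"unknown lifecycle stage: {self.stage}")

    def failed_checks(self) -> list[str]:
        """Return 'facet:check' labels for every check that did not pass."""
        failures = []
        for facet in ("inputs", "processes", "outputs"):
            for name, passed in getattr(self, facet).items():
                if not passed:
                    failures.append(f"{facet}:{name}")
        return failures


def earliest_failing_stage(assessments: list[StageAssessment]) -> str | None:
    """Return the first stage, in lifecycle order, with any failed check."""
    by_stage = {a.stage: a for a in assessments}
    for stage in STAGES:
        assessment = by_stage.get(stage)
        if assessment is not None and assessment.failed_checks():
            return stage
    return None


# Example: a data-stage output check fails before the deployment check does.
assessments = [
    StageAssessment("planning_and_design", processes={"use_case_documented": True}),
    StageAssessment(
        "data_acquisition_and_preparation",
        inputs={"provenance_recorded": True},
        outputs={"missing_rate_below_threshold": False},
    ),
    StageAssessment("deployment", processes={"rollback_plan": False}),
]
blocking_stage = earliest_failing_stage(assessments)
```

Returning the earliest failing stage, rather than a single pass/fail verdict, mirrors the framework's emphasis on catching issues before downstream stages depend on them.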

The AI Assurance Framework prioritizes preemptive risk management throughout the AI system lifecycle. This proactive stance centers on identifying potential failure points – encompassing data quality issues, model biases, security vulnerabilities, and operational limitations – during the design, development, and testing phases. By implementing mitigation strategies before deployment, organizations aim to reduce the likelihood of adverse outcomes and associated costs. This contrasts with reactive approaches that address issues only after they manifest in a deployed system, which often require costly remediation and can damage stakeholder trust. Early risk identification allows for iterative refinement and validation, increasing the robustness and reliability of the AI system.
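A risk register is one common way to operationalize preemptive risk management. The sketch below assumes a 1 to 5 likelihood and impact scale, scores risks with the familiar likelihood × impact product, and treats unmitigated risks at or above a threshold (12 here, chosen arbitrarily) as blocking deployment. None of these scales or thresholds come from the paper; they are placeholders an organization would calibrate for itself.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    description: str
    category: str         # e.g. "data", "bias", "security", "operational"
    likelihood: int       # assumed scale: 1 (rare) .. 5 (almost certain)
    impact: int           # assumed scale: 1 (negligible) .. 5 (severe)
    mitigated: bool = False

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be in 1..5, got {value}")

    @property
    def score(self) -> int:
        # Common likelihood x impact heuristic; the paper does not prescribe it.
        return self.likelihood * self.impact


def deployment_blockers(register: list[Risk], threshold: int = 12) -> list[Risk]:
    """Unmitigated risks scoring at or above `threshold`, highest score first."""
    open_risks = [r for r in register if not r.mitigated and r.score >= threshold]
    return sorted(open_risks, key=lambda r: r.score, reverse=True)


register = [
    Risk("Training data under-represents rural applicants", "bias", 4, 4),
    Risk("Inference endpoint lacks rate limiting", "security", 3, 3),
    Risk("Label noise in legacy records", "data", 4, 4, mitigated=True),
    Risk("No rollback procedure for bad releases", "operational", 3, 5),
]
blockers = deployment_blockers(register)
```

Here the security risk (score 9) falls below the threshold and the data risk is already mitigated, so only the bias and operational risks would hold up release.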

The AI Assurance Framework’s dual assessment methodology evaluates AI systems through both process and outcome-based criteria. Process assessment examines the development lifecycle – data sourcing, model training, testing, and deployment – to verify adherence to established standards and identify potential risks in how the AI system is built. Complementing this, outcome assessment focuses on the AI system’s performance and impact, measuring what the system achieves in real-world application. This combined approach ensures comprehensive evaluation, addressing not only the functionality and accuracy of the AI, but also the integrity and reliability of the procedures used to create it, thereby facilitating continuous improvement and responsible AI deployment.
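The dual assessment can be illustrated with a small scoring function. This is a hypothetical sketch, assuming process adherence is recorded as pass/fail checks and outcomes as (observed, target) pairs where higher values are better; combining the two with `min` is an illustrative design choice, not the paper's scoring rule.

```python
from __future__ import annotations


def dual_assessment(
    process_checks: dict[str, bool],
    outcome_metrics: dict[str, tuple[float, float]],
) -> dict[str, object]:
    """Score process adherence and outcomes separately, then combine them.

    process_checks:  check name -> whether the development step met the standard.
    outcome_metrics: metric name -> (observed value, target); higher is assumed better.
    """
    if not process_checks or not outcome_metrics:
        raise ValueError("both process checks and outcome metrics are required")
    outcome_met = {name: value >= target for name, (value, target) in outcome_metrics.items()}
    process_score = sum(process_checks.values()) / len(process_checks)
    outcome_score = sum(outcome_met.values()) / len(outcome_met)
    return {
        "process_score": process_score,
        "outcome_score": outcome_score,
        # The minimum means a clean process cannot mask poor outcomes,
        # and good outcomes cannot mask an unsound process.
        "overall": min(process_score, outcome_score),
        "process_gaps": sorted(k for k, ok in process_checks.items() if not ok),
        "outcome_gaps": sorted(k for k, ok in outcome_met.items() if not ok),
    }


result = dual_assessment(
    process_checks={
        "data_sourcing_documented": True,
        "training_reproducible": True,
        "pre_release_testing": True,
        "deployment_signoff": False,
    },
    outcome_metrics={
        "accuracy": (0.91, 0.90),
        "worst_group_recall": (0.72, 0.80),
    },
)
```

Reporting both scores and their gaps, rather than only the combined number, keeps process and outcome findings separately actionable.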

Effective implementation of the AI Assurance Framework necessitates clearly defined roles and responsibilities for all participating parties. This includes designating individuals or teams accountable for specific assurance activities – such as data validation, model testing, and risk assessment – at each stage of the AI lifecycle. Stakeholder involvement, extending beyond technical teams to include legal, ethical, and business representatives, is crucial for ensuring comprehensive evaluation and alignment with organizational policies. A matrix detailing roles, responsibilities, and required approvals should be established and maintained throughout the process, facilitating communication and accountability. This collaborative approach minimizes ambiguity and ensures that assurance activities are appropriately resourced and integrated into the overall AI development and deployment workflow.
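A roles-and-responsibilities matrix is easy to check mechanically for obvious gaps. The sketch below assumes each assurance activity records an owner, its approvers, and the stakeholder groups involved; the four required groups (technical, legal, ethics, business) come from the paragraph above, while the `Assignment` structure and `matrix_gaps` function are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Stakeholder groups the framework says should be involved, beyond technical teams.
REQUIRED_GROUPS = frozenset({"technical", "legal", "ethics", "business"})


@dataclass
class Assignment:
    activity: str          # e.g. "data validation", "model testing"
    stage: str             # lifecycle stage the activity belongs to
    owner: str | None      # accountable individual or team
    groups: set[str] = field(default_factory=set)       # stakeholder groups involved
    approvers: list[str] = field(default_factory=list)  # required sign-offs


def matrix_gaps(assignments: list[Assignment]) -> list[str]:
    """List activities lacking an owner or approver, then uncovered groups."""
    problems = []
    for a in assignments:
        if not a.owner:
            problems.append(f"{a.stage}/{a.activity}: no owner")
        if not a.approvers:
            problems.append(f"{a.stage}/{a.activity}: no approver")
    covered = set().union(*(a.groups for a in assignments))
    for group in sorted(REQUIRED_GROUPS - covered):
        problems.append(f"stakeholder group not involved: {group}")
    return problems


gaps = matrix_gaps([
    Assignment("data validation", "data", "data-engineering", {"technical"}, ["ml-lead"]),
    Assignment("risk assessment", "design", None, {"legal", "business"}),
])
```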

Demonstrating Efficacy: Validating the Framework in Practice

Early validation of the framework involved deploying pilot applications in controlled environments and conducting interviews with subject matter experts. These pilot implementations allowed for the assessment of the framework’s practical usability and the identification of potential challenges in real-world scenarios. Expert interviews provided qualitative feedback on the framework’s design, functionality, and clarity, highlighting areas where refinement could improve its effectiveness and user experience. Data collected from both pilot applications and expert interviews was systematically analyzed to inform iterative improvements to the framework before broader deployment, ensuring alignment with user needs and technical requirements.

Data Quality Assessment (DQA) is a fundamental process for establishing confidence in AI system outputs. DQA involves evaluating data for accuracy, completeness, consistency, timeliness, validity, and uniqueness. Common DQA techniques include statistical analysis to identify outliers and anomalies, data profiling to understand data characteristics, and the implementation of data validation rules. Specifically, assessment focuses on identifying and mitigating issues such as missing values, incorrect data types, and inconsistencies across datasets. Rigorous DQA is essential because flawed or biased data directly impacts model performance, leading to inaccurate predictions and potentially harmful outcomes; therefore, it’s a prerequisite for reliable AI system training and evaluation.
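The following stdlib-only Python sketch shows how a few of these checks might look over a list of flat records: completeness per field, duplicate keys (uniqueness), rule-based validity, and outliers flagged with Tukey's 1.5 × IQR fences. Timeliness and cross-dataset consistency are left out for brevity, and the function name, rule format, and sample records are hypothetical.

```python
from __future__ import annotations

import statistics
from typing import Any, Callable


def assess_quality(
    records: list[dict[str, Any]],
    key: str,
    rules: dict[str, tuple[str, Callable[[Any], bool]]],
    numeric_field: str,
) -> dict[str, Any]:
    """Run a few basic data quality checks over a list of flat records."""
    if not records:
        raise ValueError("no records to assess")
    report: dict[str, Any] = {}

    # Completeness: share of records where each field is present and not None.
    fields = sorted({name for r in records for name in r})
    report["completeness"] = {
        name: sum(r.get(name) is not None for r in records) / len(records) for name in fields
    }

    # Uniqueness: key values that occur more than once.
    seen, duplicates = set(), set()
    for r in records:
        k = r.get(key)
        if k in seen:
            duplicates.add(k)
        seen.add(k)
    report["duplicate_keys"] = sorted(duplicates)

    # Validity: row indices failing each rule (missing values are left to completeness).
    report["invalid"] = {
        rule: [i for i, r in enumerate(records) if r.get(column) is not None and not check(r[column])]
        for rule, (column, check) in rules.items()
    }

    # Outliers: numeric values outside Tukey's 1.5 * IQR fences.
    values = [r[numeric_field] for r in records if isinstance(r.get(numeric_field), (int, float))]
    report["outliers"] = []
    if len(values) >= 2:
        q1, _, q3 = statistics.quantiles(values, n=4)
        spread = 1.5 * (q3 - q1)
        report["outliers"] = [v for v in values if v < q1 - spread or v > q3 + spread]
    return report


records = [
    {"id": 1, "age": 34, "email": "a@example.com"},
    {"id": 2, "age": 29, "email": None},
    {"id": 3, "age": 41, "email": "c@example.com"},
    {"id": 3, "age": 38, "email": "d@example.com"},
    {"id": 5, "age": 35, "email": "e-example.com"},
    {"id": 6, "age": 240, "email": "f@example.com"},
    {"id": 7, "age": 31, "email": "g@example.com"},
    {"id": 8, "age": 33, "email": "h@example.com"},
]
rules = {
    "age_in_range": ("age", lambda v: 0 <= v <= 120),
    "email_has_at": ("email", lambda v: "@" in v),
}
report = assess_quality(records, key="id", rules=rules, numeric_field="age")
```

On this sample, the report flags the missing email, the duplicated `id` 3, the malformed email, and the implausible age of 240 (both as a rule violation and as a statistical outlier).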

While quantitative model performance evaluation – utilizing metrics such as accuracy, precision, recall, and F1-score – provides insight into a specific AI system’s capabilities, a comprehensive assessment necessitates integration with systemic analysis. This broader evaluation considers the AI system within its operational context, examining factors beyond predictive power, including data provenance, potential biases in training data, fairness implications across different demographic groups, and the robustness of the system to adversarial attacks or distributional shifts. Systemic analysis also encompasses an evaluation of the entire AI lifecycle, from data collection and labeling to model deployment, monitoring, and ongoing maintenance, to identify potential vulnerabilities and ensure long-term reliability and responsible use.
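As a sketch of why aggregate metrics need systemic context, the code below computes accuracy, precision, recall, and F1 per demographic group and reports the largest recall gap between groups (the difference in true positive rates, one common fairness check). The data is synthetic and the helper names are hypothetical; a production audit would more likely rely on an established metrics library.

```python
from __future__ import annotations

from collections import defaultdict


def binary_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for 0/1 labels (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("labels and predictions must be non-empty and equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision, "recall": recall, "f1": f1}


def metrics_by_group(y_true: list[int], y_pred: list[int], groups: list[str]):
    """Per-group metrics plus the largest recall gap between any two groups."""
    indices: dict[str, list[int]] = defaultdict(list)
    for i, g in enumerate(groups):
        indices[g].append(i)
    per_group = {
        g: binary_metrics([y_true[i] for i in ix], [y_pred[i] for i in ix])
        for g, ix in indices.items()
    }
    recalls = [m["recall"] for m in per_group.values()]
    return per_group, max(recalls) - min(recalls)


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
overall = binary_metrics(y_true, y_pred)
per_group, recall_gap = metrics_by_group(y_true, y_pred, groups)
```

In this toy example both groups have the same accuracy (0.75), yet recall is 1.0 for group A and 0.5 for group B, a disparity that an accuracy-only evaluation would miss.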

Effectiveness Assessment of the framework utilizes a multi-faceted approach to determine its capacity to detect deficiencies within AI systems and facilitate positive change. This assessment incorporates quantitative metrics such as the number of identified issues, resolution time, and reduction in error rates, alongside qualitative data gathered through user feedback and expert review. The process focuses on evaluating the framework’s ability to pinpoint root causes of AI system failures, suggest actionable improvements, and ultimately contribute to enhanced performance, reliability, and trustworthiness. Data is collected both during initial implementation and through ongoing monitoring to track the sustained impact of the framework on AI system quality.
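The quantitative side of an effectiveness assessment might be summarized as follows. This is a hypothetical sketch assuming issues are logged with open and resolution dates and that error rates are measured before and after remediation; the paper does not specify these data structures or formulas.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from statistics import median


@dataclass(frozen=True)
class Issue:
    title: str
    opened: date
    resolved: date | None = None  # None while the issue is still open


def effectiveness_summary(
    issues: list[Issue], baseline_error_rate: float, current_error_rate: float
) -> dict[str, float | int | None]:
    """Issue counts, median time to resolution, and relative error reduction."""
    if baseline_error_rate <= 0:
        raise ValueError("baseline error rate must be positive")
    days_to_resolve = [(i.resolved - i.opened).days for i in issues if i.resolved is not None]
    return {
        "issues_identified": len(issues),
        "issues_resolved": len(days_to_resolve),
        "median_days_to_resolve": median(days_to_resolve) if days_to_resolve else None,
        # Relative reduction: 0.25 means the error rate fell by a quarter.
        "relative_error_reduction": (baseline_error_rate - current_error_rate) / baseline_error_rate,
    }


issues = [
    Issue("Missing consent records", date(2025, 1, 1), date(2025, 1, 11)),
    Issue("Stale drift thresholds", date(2025, 1, 5), date(2025, 1, 9)),
    Issue("Undocumented feature pipeline", date(2025, 2, 1)),
]
summary = effectiveness_summary(issues, baseline_error_rate=0.20, current_error_rate=0.15)
```

The median is used for resolution time because a single long-running issue would otherwise dominate a mean; the qualitative feedback the paragraph mentions would sit alongside these numbers rather than be folded into them.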

Translating Assurance into Action: Reports, Maturity, and Accountability

The Assurance Report serves as a cornerstone for understanding an AI system’s performance, delivering a concise yet comprehensive overview of evaluation results. Rather than simply declaring success or failure, it details both the demonstrable strengths and the critical weaknesses identified during the assessment. By setting out where the AI excels (for example, in predictive accuracy or efficiency) alongside areas requiring improvement (such as bias detection or data security), the report enables stakeholders to prioritize remediation. It therefore serves as the basis for a targeted plan to improve the AI system’s reliability, fairness, and overall effectiveness, not merely as a list of findings. Because it translates technical details into understandable insights, the report supports informed decision-making and continuous improvement around the AI system.
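A report generator can enforce this structure by separating strengths from weaknesses and ordering weaknesses by severity so remediation can be prioritized. The severity scale, `Finding` fields, and output layout in this sketch are assumptions for illustration, not the format of the paper's assurance report.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumed severity scale; a lower rank means more urgent.
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass(frozen=True)
class Finding:
    area: str               # e.g. "accuracy", "fairness", "security"
    kind: str               # "strength" or "weakness"
    summary: str
    severity: str = "low"   # only meaningful for weaknesses

    def __post_init__(self) -> None:
        if self.kind not in ("strength", "weakness"):
            raise ValueError(f"unknown finding kind: {self.kind}")
        if self.severity not in SEVERITY_RANK:
            raise ValueError(f"unknown severity: {self.severity}")


def render_report(system: str, findings: list[Finding]) -> str:
    """Plain-text summary: strengths first, then weaknesses by severity."""
    strengths = [f for f in findings if f.kind == "strength"]
    weaknesses = sorted(
        (f for f in findings if f.kind == "weakness"),
        key=lambda f: SEVERITY_RANK[f.severity],
    )
    lines = [f"Assurance report: {system}", "", "Strengths:"]
    if strengths:
        lines += [f"  - [{f.area}] {f.summary}" for f in strengths]
    else:
        lines.append("  (none recorded)")
    lines += ["", "Weaknesses (highest priority first):"]
    if weaknesses:
        lines += [f"  - {f.severity.upper()} [{f.area}] {f.summary}" for f in weaknesses]
    else:
        lines.append("  (none recorded)")
    return "\n".join(lines)


report = render_report(
    "loan-screening-model",
    [
        Finding("accuracy", "strength", "Meets accuracy target on held-out data"),
        Finding("security", "weakness", "No access logging on inference API", "medium"),
        Finding("fairness", "weakness", "Recall gap between applicant groups", "high"),
    ],
)
```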

A robust Maturity Matrix serves as a crucial benchmark for evaluating an organization’s AI capabilities, moving beyond subjective impressions to a structured, repeatable score of current processes and resulting outcomes. It is not merely a snapshot: the Matrix is designed for longitudinal tracking, allowing organizations to chart progress as they refine their AI implementations. Typically structured across defined levels, from initial, ad-hoc approaches to fully optimized and governed systems, the Matrix assesses key dimensions such as data quality, model development rigor, deployment procedures, and ongoing monitoring. By reapplying the Matrix consistently, organizations gain quantifiable insight into the effectiveness of improvement initiatives, identify persistent gaps, and demonstrate a sustained commitment to responsible AI development and deployment. The resulting scores support evidence-based decisions about where to invest in risk mitigation and how to maximize the value derived from artificial intelligence.
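The longitudinal use of a maturity matrix can be sketched as a comparison of two assessments of the same organization. The five level names (borrowed loosely from capability maturity models), the four dimensions taken from the paragraph above, and the target level of 3 are all assumptions; the paper's actual matrix may use different levels and criteria.

```python
from __future__ import annotations

from statistics import mean

# Assumed five-level scale, loosely in the style of capability maturity models.
LEVELS = {1: "initial", 2: "repeatable", 3: "defined", 4: "managed", 5: "optimized"}
DIMENSIONS = ("data_quality", "model_development", "deployment", "monitoring")


def validate(scores: dict[str, int]) -> None:
    missing = set(DIMENSIONS) - set(scores)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    for dim in DIMENSIONS:
        if scores[dim] not in LEVELS:
            raise ValueError(f"{dim}: level must be 1..5, got {scores[dim]}")


def maturity_progress(
    before: dict[str, int], after: dict[str, int], target: int = 3
) -> dict[str, object]:
    """Compare two maturity assessments taken at different times."""
    validate(before)
    validate(after)
    return {
        "overall_before": mean(before[d] for d in DIMENSIONS),
        "overall_after": mean(after[d] for d in DIMENSIONS),
        "deltas": {d: after[d] - before[d] for d in DIMENSIONS},
        # Dimensions below target in both assessments, i.e. gaps that persisted.
        "persistent_gaps": [d for d in DIMENSIONS if before[d] < target and after[d] < target],
        "levels_after": {d: LEVELS[after[d]] for d in DIMENSIONS},
    }


before = {"data_quality": 2, "model_development": 3, "deployment": 1, "monitoring": 1}
after = {"data_quality": 3, "model_development": 3, "deployment": 2, "monitoring": 1}
progress = maturity_progress(before, after)
```

Tracking per-dimension deltas rather than only the overall average makes it visible when an improving headline score hides a dimension, such as monitoring here, that has not moved at all.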

A robust Responsibility Assignment Matrix is central to operationalizing AI assurance, moving beyond identification of improvements to sustained, accountable action. This matrix explicitly defines who is responsible, accountable, consulted, and informed for each recommendation stemming from an AI evaluation and for the ongoing maintenance of assurance protocols. By clearly delineating roles – whether it’s implementing a model monitoring system, addressing data bias, or ensuring regulatory compliance – the matrix prevents diffusion of responsibility and fosters ownership. This structured approach minimizes ambiguity, streamlines workflows, and enables effective tracking of progress, ultimately building a culture where AI governance isn’t a one-time exercise, but an embedded organizational practice. The result is a system that proactively addresses risks and maintains the integrity and reliability of AI systems over time.
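Because RACI matrices follow a simple convention (typically exactly one Accountable party and at least one Responsible party per task), they can be checked automatically. The sketch below assumes one code per role and cell and does not handle combined codes such as "A/R"; the function name and example recommendations are hypothetical.

```python
from __future__ import annotations

VALID_CODES = {"R", "A", "C", "I"}  # Responsible, Accountable, Consulted, Informed


def raci_violations(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Check each recommendation's row of role -> RACI code assignments."""
    problems = []
    for item, row in matrix.items():
        codes = list(row.values())
        unknown = sorted(set(codes) - VALID_CODES)
        if unknown:
            problems.append(f"{item}: unknown codes {unknown}")
        accountable = codes.count("A")
        if accountable != 1:
            problems.append(f"{item}: expected exactly one 'A', found {accountable}")
        if "R" not in codes:
            problems.append(f"{item}: no 'R' assigned")
    return problems


violations = raci_violations({
    "Deploy drift monitoring": {"ML engineering": "R", "Head of data science": "A", "Compliance": "I"},
    "Mitigate subgroup recall gap": {"ML engineering": "R", "Ethics board": "C"},
    "Document regulatory compliance": {"Legal": "A", "Compliance": "A"},
})
```

Requiring exactly one Accountable party is what prevents the diffusion of responsibility the paragraph describes: the second and third recommendations would both be flagged before any work is assigned.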

Independent validation of an AI assurance framework through third-party assessment is increasingly vital for fostering confidence and accountability. This process involves engaging external experts to rigorously evaluate not only the framework’s implementation, verifying that processes are followed as designed, but also the resulting outcomes, ensuring they align with stated objectives and ethical guidelines. Such impartial scrutiny reduces the conflicts of interest inherent in self-assessment and offers an independent perspective on the AI system’s performance, robustness, and fairness. The resulting assurance report, backed by an independent entity, then serves as a powerful communication tool, demonstrating a commitment to responsible AI practices to stakeholders, regulators, and the public, thereby building trust and enabling broader adoption.

The pursuit of AI assurance, as detailed in this work, reveals a fundamental truth about complex systems: they are not built, but cultivated. Much like a garden, an AI system’s health isn’t determined solely by initial design, but by ongoing evaluation and adaptation. Ada Lovelace observed that “the Analytical Engine has no pretensions whatever to originate anything.” This echoes the paper’s emphasis on outcome evaluation – assessing what the AI system actually does, rather than simply verifying the processes used to create it. The framework proposed isn’t about imposing rigid control, but establishing conditions for resilience and forgiveness between components, allowing the system to evolve and maintain alignment over time. A mature system acknowledges that failures will occur, and prioritizes the ability to learn and adapt from them.

What’s Next?

This work, aiming to formalize assurance of artificial intelligence, inevitably highlights the futility of seeking guarantees in complex systems. The proposed framework, with its maturity matrix and risk assessments, is not a destination but a temporary stabilization point. Each measured metric, each audited process, is a prophecy of the failure modes it doesn’t detect. Long stability, after all, is the sign of a hidden disaster, not a secure foundation. The true challenge isn’t building better checklists, but accepting that these systems will evolve into unexpected shapes.

Future efforts shouldn’t focus on perfecting evaluation – that’s merely rearranging the deck chairs – but on understanding the dynamics of failure. The field needs to move beyond assessing alignment with current intentions, and begin modeling the likely deviations that will emerge as these systems interact with increasingly complex environments. The question isn’t “is this AI behaving as intended?” but “what unintended behaviors are likely to arise, and what systemic vulnerabilities will amplify them?”

Ultimately, the pursuit of ‘trustworthy AI’ may be a category error. Systems don’t become trustworthy; they become understood, and even then, that understanding is provisional. The value lies not in eliminating risk, but in building ecosystems capable of absorbing and adapting to inevitable change. The goal isn’t a perfect audit, but a resilient capacity for continuous recalibration.

Original article: https://arxiv.org/pdf/2601.22424.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-02 20:10