Author: Denis Avetisyan

A new framework enhances human-robot teamwork by predicting user intent and dynamically adjusting assistance levels in real time.

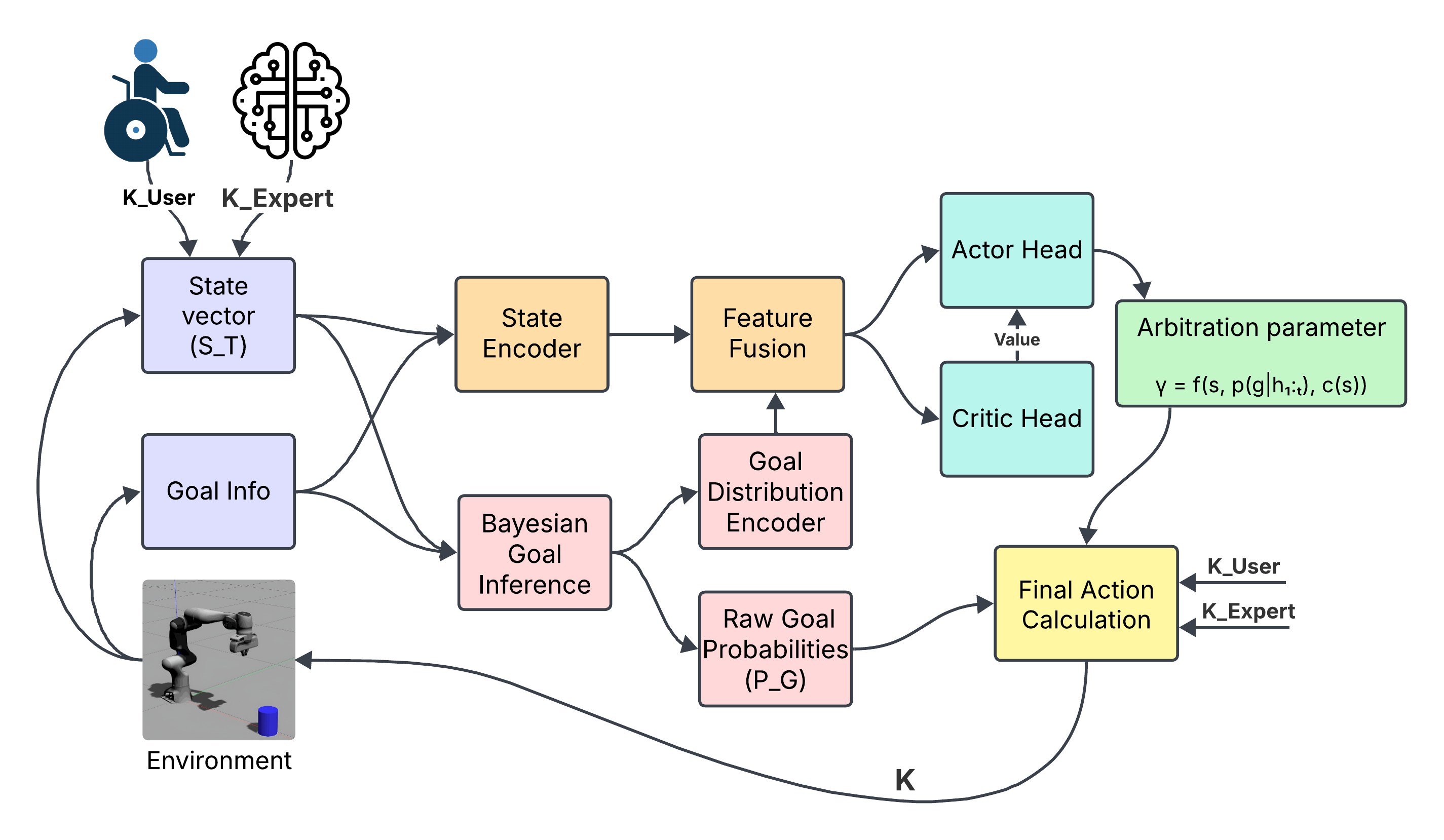

![A shared autonomy framework adaptively blends human kinematic input [latex]K_{user}[/latex] with an expert policy’s kinematic input [latex]K_{expert}[/latex], governed by the parameter γ, to dynamically negotiate control and achieve nuanced, collaborative movement.](https://arxiv.org/html/2601.23285v1/Figures/General_Formulation.png)

This paper introduces BRACE, an end-to-end learning approach to shared autonomy that minimizes regret and maximizes collaborative performance through full belief conditioning.

Effective shared autonomy requires resolving the tension between providing helpful assistance and respecting user agency, yet current approaches often treat intent inference and assistance arbitration as separate problems. This work introduces a novel framework, BRACE, detailed in ‘End-to-end Optimization of Belief and Policy Learning in Shared Autonomy Paradigms’, which addresses this limitation through end-to-end learning and full belief conditioning. Our results demonstrate that integrating probabilistic goal beliefs into assistance policies yields substantial reductions in expected regret and significant improvements in task efficiency across diverse robotic domains. Could this integrated optimization approach unlock truly intuitive and collaborative human-robot interactions in increasingly complex, ambiguous environments?

Decoding Intent: The Challenge of Shared Control

Conventional robotic systems frequently encounter difficulties when tasked with subtle interactions alongside humans, a challenge particularly pronounced in assistive applications. These robots are often engineered for precise, pre-programmed motions, leaving them ill-equipped to handle the inherent ambiguity and unpredictability of human behavior. Unlike industrial robots operating in structured environments, assistive robots must navigate dynamic, real-world settings and respond to intentions communicated through imprecise gestures, incomplete commands, or even subtle physiological signals. This mismatch between robotic precision and human fluidity often results in awkward, inefficient, or even unsafe interactions, highlighting the need for more adaptable and intuitive robotic designs that prioritize seamless collaboration rather than rigid automation.

Achieving truly collaborative robotics hinges on a machine’s ability to anticipate what a human partner wants to accomplish, yet this presents a significant challenge due to the inherent unpredictability of human behavior. Unlike pre-programmed robots operating in static environments, systems designed for shared autonomy must constantly contend with the nuances of human intention, which is rarely expressed with robotic precision. Individuals exhibit substantial variability in how they perform tasks – speed, force, trajectory all differ – and often communicate goals implicitly, through gestures or subtle cues. Robust intent inference, therefore, requires algorithms capable of navigating this uncertainty, building probabilistic models of human action, and adapting in real time to deviations from expected patterns. Without such sophistication, a robot risks misinterpreting a user’s desires, leading to inefficient collaboration or, in safety-critical applications, potentially hazardous outcomes.

Many current robotic systems designed for collaboration with humans operate on pre-programmed assumptions about how people will act, employing simplified models of user behavior to predict intentions and guide actions. However, this approach frequently falls short, as human movement and decision-making are inherently complex and unpredictable. These limitations can lead to suboptimal performance – the robot might misinterpret a user’s desired path or react slowly to changes in intent – but more critically, they introduce potential safety concerns. A robot operating on a flawed understanding of human behavior could initiate unintended movements, create collisions, or fail to provide necessary assistance when a user requires it, highlighting the need for more robust and adaptable models of human-robot interaction.

BRACE: Embracing Uncertainty as a Design Principle

BRACE employs belief conditioning to represent uncertainty regarding a user’s ultimate goal as a probability distribution. This distribution is not static; it is continuously updated using Bayesian inference as the system observes user inputs. By maintaining a range of likely goals, rather than committing to a single hypothesis, BRACE enhances its ability to handle ambiguous or incomplete requests. This probabilistic approach allows the framework to anticipate potential user needs and provide assistance even when the user’s intent is not explicitly stated, leading to more robust performance in dynamic and unpredictable interaction scenarios.

The BayesianInferenceModule operates by continuously updating a probability distribution representing the user’s likely intent. It does so by applying Bayes’ Theorem, treating observed user inputs as evidence and combining them with prior knowledge about typical user goals and behaviors. Specifically, the posterior probability of each candidate intent is proportional to the likelihood of the observed inputs given that intent, multiplied by the prior probability of that intent; the results are then normalized so that they sum to one. Each posterior becomes the prior for the next observation, so the system refines its estimate of the user’s needs with every input, adapting to ambiguous or incomplete input and improving the accuracy of goal prediction.
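
The recursive update described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function names, the goal layout, and in particular the likelihood model (inputs that point toward a goal are exponentially more probable, with a hypothetical sharpness parameter `beta`) are assumptions made for the example.

```python
import math

def update_belief(prior, likelihoods):
    """One Bayes step: posterior(g) is proportional to likelihood(g) * prior(g)."""
    unnormalized = [p * l for p, l in zip(prior, likelihoods)]
    total = sum(unnormalized)
    if total == 0.0:
        # The evidence rules out every goal; keep the prior unchanged.
        return list(prior)
    return [u / total for u in unnormalized]

def input_likelihood(user_dir, position, goal, beta=5.0):
    """Assumed likelihood model: higher when the input points toward the goal."""
    gx, gy = goal[0] - position[0], goal[1] - position[1]
    norm_g = math.hypot(gx, gy) or 1.0
    norm_u = math.hypot(user_dir[0], user_dir[1]) or 1.0
    cosine = (gx * user_dir[0] + gy * user_dir[1]) / (norm_g * norm_u)
    return math.exp(beta * cosine)

# Three hypothetical goals around the origin, starting from a uniform prior.
goals = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]
belief = [1.0 / 3.0] * 3
position = (0.0, 0.0)

# Each observed input direction acts as evidence; the posterior becomes the next prior.
for user_dir in [(1.0, 0.2), (1.0, 0.1), (1.0, 0.0)]:
    likelihoods = [input_likelihood(user_dir, position, g) for g in goals]
    belief = update_belief(belief, likelihoods)

print([round(b, 3) for b in belief])
```

After three inputs that all point roughly toward the first goal, nearly all of the probability mass sits on that goal, while the other two retain a small nonzero probability.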

BRACE facilitates assistance by dynamically assessing the probability of various user goals, moving beyond pre-defined responses. This belief-conditioned approach enables the system to prioritize support based on inferred intentions, resulting in a more adaptable and effective user experience. Empirical evaluation demonstrates a performance improvement of up to 13.1% in scenarios characterized by high uncertainty regarding the user’s objective, indicating the framework’s capacity to navigate ambiguous requests and maintain functionality when explicit instructions are limited.

![In the Fetch Pick & Place task, the BRACE assistance policy leverages goal uncertainty and environmental context to generate smoother, more direct trajectories [latex] (f) [/latex] with reduced hesitation, resulting in faster and safer task completion compared to IDA [latex] (d) [/latex] and DQN [latex] (e) [/latex], as demonstrated by decreasing belief entropy and dynamically peaking assistance during critical phases.](https://arxiv.org/html/2601.23285v1/Figures/BRACE3.png)

Refining Assistance: Quantifying and Mitigating Uncertainty

The AssistanceArbitrationPolicy functions as the core decision-making component within the BRACE system, translating the output of the BayesianInferenceModule into an assistance level. The policy does not operate on a single predicted intent; it conditions on the full probability distribution over candidate goals produced by the module and adjusts the degree of assistance to the confidence that distribution expresses. When the belief concentrates on one goal, the policy can assist more strongly, because the expert’s action is likely to match what the user wants. When the belief is spread across several goals, it assists more conservatively and defers to the user’s input, since a confident intervention toward the wrong goal is costly. This adaptive approach lets BRACE tailor its support to the situation at hand, assisting where it is likely to help and holding back where it is likely to interfere.
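
To make the arbitration step concrete, here is a small sketch of blending user and expert inputs with a parameter γ. Several things here are assumptions rather than the paper's method: BRACE learns its arbitration policy end to end, whereas `assistance_level` below is a hand-written heuristic that raises γ as belief entropy falls (the pattern the Fetch Pick & Place figure describes); the convex blending rule, the cap `gamma_max`, and all function names are likewise illustrative.

```python
import math

def belief_entropy(belief):
    """Shannon entropy (in nats) of a discrete goal belief."""
    return -sum(p * math.log(p) for p in belief if p > 0.0)

def assistance_level(belief, gamma_max=0.9):
    """Heuristic stand-in for a learned policy: gamma shrinks as entropy grows."""
    if len(belief) < 2:
        return gamma_max
    normalized = belief_entropy(belief) / math.log(len(belief))
    return max(0.0, gamma_max * (1.0 - normalized))

def expected_expert_input(belief, expert_inputs):
    """Belief-weighted average of the expert's input for each candidate goal."""
    dims = len(expert_inputs[0])
    return [sum(b * k[d] for b, k in zip(belief, expert_inputs)) for d in range(dims)]

def blend(k_user, k_expert, gamma):
    """Convex combination: gamma = 0 is pure user control, gamma = 1 is pure expert."""
    return [(1.0 - gamma) * u + gamma * e for u, e in zip(k_user, k_expert)]

# Hypothetical belief over three goals and the expert's input toward each one.
belief = [0.8, 0.15, 0.05]
expert_inputs = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
k_user = [0.6, 0.4]

gamma = assistance_level(belief)
k_expert = expected_expert_input(belief, expert_inputs)
command = blend(k_user, k_expert, gamma)
print(round(gamma, 3), [round(c, 3) for c in command])
```

Because the expert input is averaged over the whole belief rather than taken from the single most likely goal, a diffuse belief both lowers γ and softens the expert's suggestion.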

The BayesianInferenceModule within BRACE employs an AutoRegressiveModel to forecast subsequent user actions based on observed behavior. This predictive capability is coupled with MinimalJerkTrajectory planning, a method prioritizing smooth and continuous movements by minimizing changes in acceleration. The integration of these two components allows BRACE to not only anticipate user intent, but also to execute assistance that feels natural and avoids abrupt or jarring interventions. Specifically, MinimalJerkTrajectory calculates a path that minimizes the integral of the squared jerk – the rate of change of acceleration – ensuring a comfortable and predictable experience for the user.
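
For a point-to-point motion that starts and ends at rest, the minimum-jerk criterion has a well-known closed-form solution, a fifth-order polynomial in normalized time. The sketch below evaluates that polynomial in one dimension; how BRACE actually integrates such trajectories is not specified here, so the function name and the rest-to-rest boundary conditions are assumptions.

```python
def minimum_jerk(x0, xf, duration, t):
    """Position at time t on the rest-to-rest minimum-jerk path from x0 to xf.

    Uses the classic quintic 10*tau^3 - 15*tau^4 + 6*tau^5, which has zero
    velocity and zero acceleration at both endpoints.
    """
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time, clamped to [0, 1]
    shape = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * shape

# Sample a 2-second reach from 0.0 to 1.0 at five evenly spaced instants.
samples = [minimum_jerk(0.0, 1.0, 2.0, 0.5 * i) for i in range(5)]
print([round(s, 4) for s in samples])
```

The path starts and ends slowly and moves fastest at the midpoint, which is what makes the resulting assistance feel smooth rather than abrupt.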

BRACE improves upon traditional robotic assistance systems that rely on Maximum a Posteriori (MAP) estimation of user intent by using the complete probability distribution over possible user goals. MAP estimation selects the single most likely goal and discards how confident that choice is, creating a failure point whenever the prediction is wrong. By considering the entire distribution, BRACE quantifies prediction uncertainty and adapts assistance accordingly, reducing the risk of intervention errors. Performance evaluations report 24.5% faster task completion in high-uncertainty scenarios compared with systems that rely solely on MAP selection.
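
A toy calculation shows why committing to the MAP estimate can be costly when the belief is nearly tied. The setup is an assumption made purely for illustration: goals lie on a line, the system picks a target point, and regret is the squared distance from that target to the true goal. This is not the regret definition used in the paper.

```python
def expected_regret(target, goals, belief):
    """Belief-weighted squared distance from the chosen target to each goal."""
    return sum(b * (target - g) ** 2 for g, b in zip(goals, belief))

# Two hypothetical goals with an almost evenly split belief.
goals = [-1.0, 1.0]
belief = [0.55, 0.45]

# MAP commits fully to the most probable goal.
map_target = goals[belief.index(max(belief))]

# Using the full belief, the target that minimizes expected squared distance
# is the belief-weighted mean of the goals.
mean_target = sum(b * g for g, b in zip(goals, belief))

map_regret = expected_regret(map_target, goals, belief)
full_regret = expected_regret(mean_target, goals, belief)
print(f"MAP: {map_regret:.3f}, full belief: {full_regret:.3f}")
```

With a 55/45 split, committing to the slightly more likely goal incurs a large penalty in the 45% of cases where it is wrong, while the belief-aware choice hedges until more evidence arrives. As the belief sharpens, the two choices converge.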

Beyond the Algorithm: Expanding Accessibility and Efficiency

The BRACE framework distinguishes itself through broad compatibility with a range of input devices, notably including consumer-grade options like the DualSenseController and specialized devices such as the ForceSensingJoystick. This adaptability isn’t merely technical; it unlocks significant potential in assistive technology applications. Individuals with limited motor skills can leverage familiar gaming controllers for interaction, while the precision of force-sensing joysticks enables nuanced control for tasks requiring fine motor movements. This versatility means BRACE isn’t confined to laboratory settings or bespoke hardware; it can be readily deployed in real-world scenarios, offering a customizable and accessible interface for a diverse population and promoting wider adoption of assistive technologies.

The BRACE framework demonstrates a remarkable capacity for efficient learning through the strategic implementation of TransferLearning and CurriculumLearning. This approach allows the system to leverage knowledge gained from previously mastered tasks, significantly accelerating its adaptation to new challenges. Notably, BRACE achieves 90% of its ultimate performance level using only 27% of the trainable parameters typically required for such complex systems. This substantial reduction in computational demand not only streamlines the learning process but also opens possibilities for deployment on resource-constrained platforms, making advanced assistive technologies more accessible and practical.

The BRACE framework incorporates GradientNormalization as a crucial component for robust and efficient machine learning. This technique actively manages the gradients during training, preventing them from becoming excessively large or vanishingly small. These are the exploding and vanishing gradient problems, which can respectively destabilize or stall learning. By consistently rescaling the gradients, the framework maintains a stable learning trajectory, allowing the model to converge more rapidly and reliably. This accelerates training and helps ensure that the model’s trainable parameters continue to receive useful updates, ultimately leading to a more effective and adaptable system.
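
One common way to rescale gradients is to normalize them to a fixed global norm before each parameter update. The sketch below shows that idea on plain Python lists; it is a generic illustration, and the exact normalization scheme BRACE uses may differ. The function names and the `target_norm` and `eps` parameters are assumptions.

```python
import math

def global_norm(grads):
    """Euclidean norm taken over every gradient entry in every layer."""
    return math.sqrt(sum(g * g for layer in grads for g in layer))

def normalize_gradients(grads, target_norm=1.0, eps=1e-12):
    """Rescale all gradients by one shared factor so their global norm is about target_norm."""
    scale = target_norm / (global_norm(grads) + eps)  # eps guards against division by zero
    return [[g * scale for g in layer] for layer in grads]

# Hypothetical per-layer gradients: one very large set and one very small set.
large = [[300.0, 400.0], [0.0]]
small = [[3e-6, 4e-6], [0.0]]

for grads in (large, small):
    rescaled = normalize_gradients(grads)
    print(round(global_norm(grads), 8), "->", round(global_norm(rescaled), 4))
```

Because a single scale factor is shared across layers, the direction of the update is preserved; only its magnitude is brought into a predictable range.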

![Qualitative evaluation of planar cursor control reveals that BRACE achieves significantly higher subjective ratings across seven dimensions, including ease of use and efficiency, compared to unassisted control, IDA, DQN, and manual-γ control [latex](p < 0.05, p < 0.01)[/latex].](https://arxiv.org/html/2601.23285v1/Figures/Spider.png)

The pursuit of seamless human-robot collaboration, as detailed in this work, echoes a fundamental tenet of system understanding: probing boundaries to reveal underlying structure. BRACE, with its focus on full belief conditioning and end-to-end learning, doesn’t simply accept the interface between human and machine; it actively tests it, refining assistance strategies to minimize regret. This mirrors Edsger W. Dijkstra’s insight: “It is not sufficient to show that something works; one must show why it works.” The framework isn’t merely demonstrating improved performance; it’s striving to illuminate the principles governing effective shared autonomy, dissecting the complex interplay between intent inference and assistive control to build a truly robust and predictable collaborative system.

Beyond Assistance: The Ghosts in the Machine

The framework presented here, while demonstrating a reduction in quantifiable regret, merely scratches the surface of the true challenge in shared autonomy. It optimizes for assistance, but doesn’t address the inevitable instances where optimized action becomes interference. Consider a skilled surgeon, momentarily guided by an assistive robot – the system’s “help” is valuable until it anticipates an intended, but non-optimal, maneuver – a deliberate, nuanced adjustment the robot’s regret-minimizing algorithm deems “incorrect”. True collaboration isn’t about eliminating error, but navigating it. The system, as it stands, learns what humans should do, not what they will do – a crucial distinction revealed only through persistent, real-world failure.

Future work must move beyond belief conditioning as a passive observation of human intent and embrace active ‘probing’ – subtly testing the boundaries of the operator’s control to refine the model of their decision-making process. This requires a shift from minimizing regret to maximizing ‘informative friction’ – deliberately introducing small, measurable challenges to elicit a more complete understanding of the human partner.

Ultimately, the quest for seamless shared autonomy isn’t about building machines that anticipate needs, but about building systems robust enough to misunderstand them – systems that can learn more from a frustrated correction than from a perfectly predicted action. The imperfections, it seems, are where the intelligence resides.

Original article: https://arxiv.org/pdf/2601.23285.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-02 20:06