Author: Denis Avetisyan

This review examines the core limitations holding back fully autonomous vehicles and explores how emerging AI techniques are poised to unlock the next level of driving intelligence.

Analyzing the challenges of situation awareness and modularity, and evaluating the potential of end-to-end learning and foundation models for achieving Level 5 autonomy.

Despite significant progress, fully autonomous driving is still held back by the complexity of real-world environments and by limitations in current system architectures. This paper, ‘Toward Fully Autonomous Driving: AI, Challenges, Opportunities, and Needs’, analyzes these hurdles, particularly situation awareness and the semantic gap, and explores emerging artificial intelligence approaches such as end-to-end learning and foundation models as potential solutions. Our investigation reveals that transitioning to higher levels of autonomy necessitates a re-evaluation of modularity and a focus on robust, adaptable AI systems. What further innovations in AI and system design will be critical to realizing the full potential of self-driving vehicles?

The Shifting Sands of Awareness: Situational Understanding in Autonomous Systems

The pursuit of genuinely autonomous vehicles extends far beyond simply responding to present conditions. While current systems excel at recognizing traffic lights or braking for pedestrians, true autonomy necessitates a holistic understanding of the surrounding world. This involves interpreting not just what is happening, but also why it is happening, and, crucially, anticipating what might happen next. A vehicle operating with mere stimulus-response capabilities is fundamentally limited in complex environments, prone to errors when faced with unusual or unpredictable scenarios. It is this ability to build a comprehensive mental model of the environment – to move beyond reaction and towards proactive, informed decision-making – that defines the next generation of self-driving technology and unlocks the potential for safe, reliable, and truly independent operation.

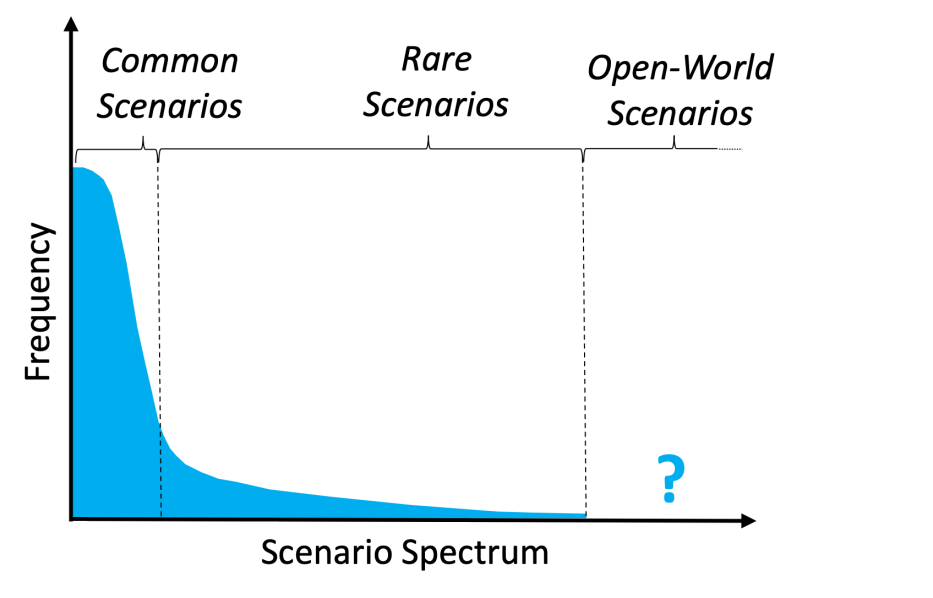

Situation Awareness, crucial for fully autonomous systems, isn’t a single process but a tiered understanding of the environment. It begins with perception, the gathering of raw data through sensors – identifying objects like pedestrians, vehicles, or lane markings. This data then moves to comprehension, where the system integrates the information, interpreting what each element means – recognizing a pedestrian is about to cross the street, for example. Finally, projection utilizes this understanding to predict future states – anticipating where that pedestrian will be in a few seconds, allowing the vehicle to proactively adjust its course. This layered approach, progressing from sensing to understanding to anticipating, provides the robust environmental model necessary for safe and effective navigation in dynamic real-world conditions.
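
The three levels described above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the `Detection` fields, the lane half-width of 1.75 m, the 2-second horizon, and the constant-velocity projection are hypothetical choices, not details taken from the paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Detection:
    """Level 1 (perception): one object reported by the sensors (hypothetical fields)."""
    kind: str   # e.g. "pedestrian"
    x: float    # distance ahead of the vehicle, metres
    y: float    # lateral offset from the lane centre, metres
    vx: float   # longitudinal velocity, m/s
    vy: float   # lateral velocity, m/s


def comprehend(det: Detection, lane_half_width: float = 1.75) -> str:
    """Level 2 (comprehension): interpret what the detection means for the ego lane."""
    if abs(det.y) <= lane_half_width:
        return "in_lane"
    # Lateral position and lateral velocity have opposite signs: |y| is shrinking.
    if det.y * det.vy < 0:
        return "approaching_lane"
    return "clear"


def project(det: Detection, horizon_s: float) -> Tuple[float, float]:
    """Level 3 (projection): constant-velocity estimate of the future position."""
    return (det.x + det.vx * horizon_s, det.y + det.vy * horizon_s)


def assess(det: Detection, horizon_s: float = 2.0, lane_half_width: float = 1.75) -> dict:
    """Run comprehension and projection on one perceived object."""
    future_x, future_y = project(det, horizon_s)
    return {
        "meaning": comprehend(det, lane_half_width),
        "future_position": (future_x, future_y),
        "conflict_expected": abs(future_y) <= lane_half_width,
    }


# A pedestrian 3 m to the side of the lane centre, walking toward the lane.
pedestrian = Detection("pedestrian", x=20.0, y=3.0, vx=0.0, vy=-1.2)
result = assess(pedestrian)
print(result["meaning"], result["conflict_expected"])
```

Here the pedestrian is outside the lane at the current instant, so a purely reactive rule would not trigger, but the projection places them inside the lane within the horizon. That gap between present state and projected state is what the third level is meant to capture.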

The ability of autonomous vehicles to operate safely hinges on a capacity that extends beyond mere reaction time; it requires anticipating potential hazards and proactively adjusting to dynamic conditions. Without robust situational awareness, these vehicles encounter significant difficulties in complex scenarios, often leading to hesitant maneuvers or, critically, to failures in hazard avoidance. A lack of comprehensive environmental understanding can lead to more near-miss collisions and a diminished capacity to handle unpredictable events such as sudden pedestrian movements or adverse weather conditions. This deficiency isn’t simply a matter of sensor limitations; it’s a fundamental challenge in translating raw data into meaningful predictions about the future state of the environment, which impedes the vehicle’s ability to navigate safely and efficiently.

![Endsley’s model of situation awareness, as detailed in [85], outlines a process for dynamic decision-making.](https://arxiv.org/html/2601.22927v1/img/SA_Model.png)

The Engine of Autonomy: Perceiving, Predicting, and Planning

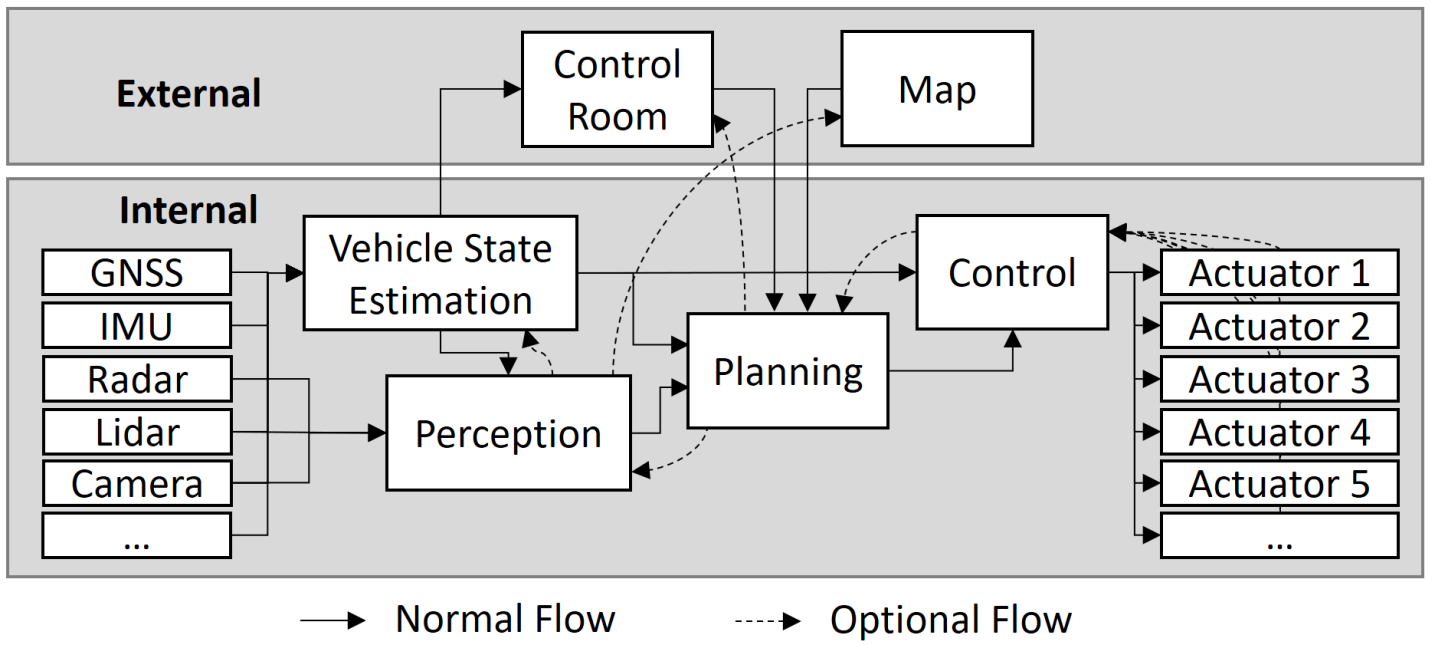

Artificial Intelligence (AI) is fundamental to the operation of contemporary autonomous systems, providing the computational framework for key functionalities. Specifically, AI algorithms enable perception – the processing of sensor data to build a representation of the surrounding environment – and prediction, which estimates future states of dynamic elements within that environment, including other vehicles, pedestrians, and changing conditions. These perceptions and predictions are then utilized by planning algorithms to determine a safe and efficient trajectory for the autonomous system. The integration of these three functions – perception, prediction, and planning – represents a core architecture in the development of self-driving vehicles, robotics, and other autonomous applications.

Vehicle perception systems utilize sensor data – including LiDAR, radar, and cameras – to construct a dynamic representation of the surrounding environment, identifying objects, classifying them, and determining their positions and velocities. This environmental understanding then feeds into the prediction module, which estimates the future trajectories of detected agents – such as pedestrians, cyclists, and other vehicles – as well as anticipates changes in static elements like traffic signals. Accurate prediction is crucial, as it allows the autonomous system to proactively respond to potential hazards and plan safe, efficient maneuvers; prediction algorithms commonly employ techniques like recurrent neural networks and physics-based modeling to forecast behavior over a defined time horizon.
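
As a rough illustration of the physics-based end of the prediction spectrum mentioned above, the sketch below rolls agents forward under a constant-velocity assumption and reports the smallest predicted separation. The state layout, the 3-second horizon, and the 0.5-second step are assumptions made for this example; learned predictors such as recurrent networks would replace `predict_constant_velocity` in practice.

```python
import math
from typing import List, Tuple

State = Tuple[float, float, float, float]  # x, y, vx, vy (metres, m/s)
Point = Tuple[float, float]


def predict_constant_velocity(state: State, horizon_s: float, dt: float) -> List[Point]:
    """Forecast future positions assuming the agent keeps its current velocity."""
    x, y, vx, vy = state
    steps = int(round(horizon_s / dt))
    return [(x + vx * k * dt, y + vy * k * dt) for k in range(1, steps + 1)]


def min_separation(traj_a: List[Point], traj_b: List[Point]) -> float:
    """Smallest distance between two trajectories sampled at the same time steps."""
    return min(
        math.hypot(ax - bx, ay - by)
        for (ax, ay), (bx, by) in zip(traj_a, traj_b)
    )


ego = (0.0, 0.0, 10.0, 0.0)        # ego vehicle driving straight at 10 m/s
cyclist = (30.0, 4.0, 0.0, -1.0)   # cyclist 30 m ahead, drifting toward the ego path

ego_traj = predict_constant_velocity(ego, horizon_s=3.0, dt=0.5)
cyclist_traj = predict_constant_velocity(cyclist, horizon_s=3.0, dt=0.5)
gap = min_separation(ego_traj, cyclist_traj)
print(f"minimum predicted gap: {gap:.2f} m")
```

A planner could compare `gap` against a safety margin to decide whether the current course needs to change.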

Autonomous vehicle planning uses perception outputs and behavioral predictions to determine a safe and efficient trajectory. To manage the complexity of these systems, a modular software architecture is commonly employed. The paper surveys four prominent architectural approaches: interpretable end-to-end (E2E) systems, which prioritize transparency; integrated motion prediction and planning, which couples these functions for real-time responsiveness; differentiable prediction-to-control architectures, which enable gradient-based optimization of the entire pipeline; and modular E2E planning, which combines the benefits of modularity with the efficiency of end-to-end learning.
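
To make the planning step concrete, here is a deliberately simple sketch of a planner that consumes a predicted obstacle trajectory and picks a target speed. It is not one of the four surveyed architectures; the candidate speeds, the 2 m safety margin, the straight-line ego rollout, and the function names are all assumptions made for illustration.

```python
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def rollout(speed: float, horizon_s: float, dt: float) -> List[Point]:
    """Ego positions when driving straight along y = 0 at a constant speed."""
    steps = int(round(horizon_s / dt))
    return [(speed * k * dt, 0.0) for k in range(1, steps + 1)]


def plan_speed(
    candidate_speeds: Sequence[float],
    predicted_obstacle: List[Point],
    desired_speed: float,
    safety_margin_m: float = 2.0,
    horizon_s: float = 3.0,
    dt: float = 0.5,
) -> Optional[float]:
    """Return the feasible candidate closest to the desired speed, or None."""
    best_speed, best_cost = None, math.inf
    for speed in candidate_speeds:
        ego_traj = rollout(speed, horizon_s, dt)
        gap = min(
            math.hypot(ex - ox, ey - oy)
            for (ex, ey), (ox, oy) in zip(ego_traj, predicted_obstacle)
        )
        if gap < safety_margin_m:
            continue  # candidate violates the safety margin, discard it
        cost = abs(desired_speed - speed)  # prefer staying near the desired speed
        if cost < best_cost:
            best_speed, best_cost = speed, cost
    return best_speed


# Predicted positions of a cyclist drifting toward the ego path (one per 0.5 s step).
obstacle = [(30.0, 4.0 - 0.5 * k) for k in range(1, 7)]
chosen = plan_speed([0.0, 5.0, 10.0], obstacle, desired_speed=10.0)
print(chosen)
```

Holding 10 m/s would bring the ego vehicle within 1 m of the cyclist at the end of the horizon, so that candidate is rejected and the planner falls back to the next-closest speed. A production planner would optimize over full trajectories and account for prediction uncertainty rather than a single forecast.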

Beyond Brute Force: Learning with Limited Data

Traditional supervised learning typically requires a large labeled dataset for each specific task, and acquiring and labeling such datasets is expensive and time-consuming. Few-shot and zero-shot learning techniques are increasingly used to mitigate this limitation. Few-shot learning enables a model to adapt to a new task or class from a small number of examples, often fewer than ten, while zero-shot learning aims to recognize classes for which no explicit training examples exist, relying instead on descriptions or attributes of the unseen classes.

Both approaches extrapolate from prior knowledge and learned representations, using techniques such as meta-learning, transfer learning, and large-scale pre-trained models. This reduces the dependency on extensive labeled data and increases the adaptability of AI systems in real-world applications where data acquisition is costly or impractical.
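
One common way to illustrate the distinction is a nearest-prototype classifier. In the sketch below, few-shot classes get a prototype by averaging three examples each, while a zero-shot class gets its prototype directly from a described attribute vector. The two-dimensional "length, width" features and all numeric values are hypothetical; real systems would use learned embeddings rather than hand-picked measurements.

```python
import math
from typing import Dict, List


def centroid(vectors: List[List[float]]) -> List[float]:
    """Average a handful of example vectors into a single class prototype."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def nearest(query: List[float], prototypes: Dict[str, List[float]]) -> str:
    """Assign the query to the class whose prototype is closest."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))


# Few-shot: three labeled examples per class (length, width in metres).
support = {
    "car": [[4.5, 1.8], [4.2, 1.7], [4.8, 1.9]],
    "bicycle": [[1.8, 0.6], [1.7, 0.5], [1.9, 0.6]],
}
prototypes = {label: centroid(examples) for label, examples in support.items()}
few_shot_label = nearest([4.4, 1.8], prototypes)

# Zero-shot: no truck examples at all, only a description of its attributes.
prototypes_with_truck = dict(prototypes)
prototypes_with_truck["truck"] = [12.0, 2.5]
zero_shot_label = nearest([11.0, 2.4], prototypes_with_truck)

print(few_shot_label, zero_shot_label)
```

The classifier itself never changes; only the source of the prototype differs, which is why both settings are often discussed together.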

Foundation models, typically large neural networks pre-trained on massive datasets, establish a robust knowledge base transferable to downstream tasks. This pre-training allows for effective adaptation with limited task-specific data. Meta-learning, or “learning to learn,” complements this by training models not just to perform tasks, but to quickly adapt to new, unseen tasks. This is achieved by exposing the model to a distribution of learning tasks during training, enabling it to extract generalizable learning strategies and improve its sample efficiency when confronted with novel situations requiring minimal further training. The combination of these techniques significantly enhances a model’s ability to generalize and perform well in data-scarce environments.
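
The "learning to learn" idea can be sketched on a toy problem. The example below uses a Reptile-style first-order update, chosen here purely for brevity; the source does not name a specific meta-learning algorithm. Each task is a one-parameter regression `y = slope * x` with the slope drawn from [1, 3], and the learning rates, step counts, and seed are all assumptions.

```python
import random

XS = [-1.0, -0.5, 0.5, 1.0]  # fixed inputs shared by every task
MEAN_X2 = sum(x * x for x in XS) / len(XS)


def task_loss(w: float, slope: float) -> float:
    """Mean squared error of the model y = w * x on the task y = slope * x."""
    return sum((w * x - slope * x) ** 2 for x in XS) / len(XS)


def adapt(w: float, slope: float, steps: int, lr: float) -> float:
    """Inner loop: a few gradient-descent steps on a single task."""
    for _ in range(steps):
        grad = 2.0 * (w - slope) * MEAN_X2  # d/dw of task_loss
        w -= lr * grad
    return w


def reptile(meta_iters: int = 500, inner_steps: int = 5, inner_lr: float = 0.3,
            meta_lr: float = 0.05, seed: int = 0) -> float:
    """Outer loop: nudge the shared initialization toward each task's adapted weight."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(meta_iters):
        slope = rng.uniform(1.0, 3.0)           # sample a task
        w_task = adapt(w, slope, inner_steps, inner_lr)
        w += meta_lr * (w_task - w)             # Reptile-style meta-update
    return w


w_meta = reptile()

# Compare one adaptation step on a new task from the meta-learned start vs. from zero.
new_slope = 2.5
loss_meta = task_loss(adapt(w_meta, new_slope, steps=1, lr=0.3), new_slope)
loss_scratch = task_loss(adapt(0.0, new_slope, steps=1, lr=0.3), new_slope)
print(w_meta, loss_meta, loss_scratch)
```

The meta-learned initialization settles near the middle of the task distribution, so a single gradient step on a new task leaves a smaller error than the same step taken from an uninformed start. That is the sample-efficiency benefit in miniature.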

![Architectural approaches to autonomous driving (AD) stacks generally align with the situation awareness (SA) model described in [85].](https://arxiv.org/html/2601.22927v1/img/Overview.png)

Deconstructing Complexity: Modular Interfaces and Semantic Representation

Modular end-to-end architectures decompose complex autonomous system tasks into a series of interconnected modules, each responsible for a specific function. These systems utilize token-based interfaces, where information is exchanged using discrete, symbolic tokens representing concepts or objects, rather than raw sensor data. This approach enhances robustness by isolating failures within individual modules and facilitating easier debugging and modification. Flexibility is achieved through the ability to swap or reconfigure modules without requiring extensive retraining of the entire system. Tokenization also allows for abstraction, enabling modules to operate on conceptual representations and reducing the need for precise data synchronization between components. This modularity and abstraction contribute to improved scalability and adaptability in dynamic environments.
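
A token-based interface can be pictured as two modules that share only a small vocabulary. The sketch below is hypothetical throughout: the `Token` vocabulary, the thresholds, and the rule-based `perceive` and `plan` functions stand in for learned modules and are not taken from the paper.

```python
from enum import IntEnum
from typing import Dict, List


class Token(IntEnum):
    """Hypothetical shared vocabulary; only these IDs cross the module boundary."""
    PEDESTRIAN_AHEAD = 0
    LEAD_VEHICLE_SLOW = 1
    LANE_CLEAR = 2


def perceive(raw_scene: Dict[str, float]) -> List[int]:
    """Perception module: reduce raw measurements to discrete token IDs."""
    tokens = []
    if raw_scene.get("pedestrian_distance_m", float("inf")) < 30.0:
        tokens.append(Token.PEDESTRIAN_AHEAD)
    if raw_scene.get("lead_vehicle_speed_mps", float("inf")) < 5.0:
        tokens.append(Token.LEAD_VEHICLE_SLOW)
    if not tokens:
        tokens.append(Token.LANE_CLEAR)
    return [int(t) for t in tokens]


def plan(token_ids: List[int]) -> str:
    """Planning module: sees tokens only, never the raw sensor values."""
    tokens = {Token(t) for t in token_ids}
    if Token.PEDESTRIAN_AHEAD in tokens:
        return "brake"
    if Token.LEAD_VEHICLE_SLOW in tokens:
        return "follow"
    return "cruise"


action = plan(perceive({"pedestrian_distance_m": 12.0}))
print(action)
```

Because `plan` depends only on the token vocabulary, `perceive` could be swapped for a different implementation without touching the planner, as long as both agree on what each token ID means.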

Semantic representation in modular autonomous systems facilitates data exchange based on the interpreted meaning of information, rather than the transmission of raw sensor data or token IDs. This approach uses formalized knowledge and ontologies to ascribe meaning to data, allowing modules to understand the intent and context of incoming information. By focusing on meaning, semantic interfaces reduce ambiguity and the need for each module to re-interpret data extensively, which improves system robustness and reduces the errors that arise from misinterpreted input. This contrasts with token-based systems, which require explicit mapping and can be brittle to changes in environment or task definition.

Latent representations function as a compressed, lower-dimensional encoding of input data, significantly reducing the computational burden associated with processing high-dimensional raw data. This compression is achieved through techniques like autoencoders or dimensionality reduction algorithms, allowing for more efficient data transmission and processing within a modular system. Complementing this, attention mechanisms dynamically prioritize relevant features within the latent space, focusing computational resources on the most informative elements and mitigating the impact of noise or irrelevant data. This paper presents a comparative analysis of interface types – Semantic, Latent, Query-based, and Token-based – evaluating their respective strengths and weaknesses in the context of autonomous system architectures, with specific consideration given to the efficiency gains realized through latent representations and attention-based prioritization.
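
The attention-based prioritization described above can be illustrated with scaled dot-product attention over a few latent vectors. The latent values and the query are made up for this example, and the latents are used as both keys and values for simplicity; producing the latents themselves (for instance with an autoencoder) is outside the scope of the sketch.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query: List[float], keys: List[List[float]],
           values: List[List[float]]) -> Tuple[List[float], List[float]]:
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Three hypothetical 2-D latent vectors, e.g. one per detected object.
latents = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
query = [4.0, 0.0]  # the downstream module "asks" about the first latent dimension

weights, summary = attend(query, latents, latents)
print([round(w, 3) for w in weights], [round(s, 3) for s in summary])
```

Latents aligned with the query receive most of the weight, so the summary passed downstream is dominated by the elements most relevant to the request while the unrelated latent contributes very little.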

Towards True Autonomy: Adaptability and the Future of Mobility

The next generation of autonomous vehicles isn’t simply about improved sensors or faster processors; it’s a fundamental shift towards adaptability fueled by sophisticated artificial intelligence and innovative system design. Current autonomous systems often struggle with unforeseen circumstances, but researchers are now integrating advanced AI learning techniques – including reinforcement learning and generative adversarial networks – with modular vehicle architectures. This combination allows vehicles to learn from simulated and real-world experiences, rapidly adjusting to new environments and challenges. The modular design ensures that individual components – perception, planning, control – can be updated or replaced without overhauling the entire system, fostering continuous improvement and resilience. Ultimately, this synergistic approach promises vehicles capable of navigating complex, unpredictable scenarios with a level of intelligence previously unattainable, paving the way for truly driverless transportation.

Autonomous vehicles are steadily evolving beyond pre-programmed responses to encompass genuine adaptability in unpredictable environments. Current research focuses on equipping these systems with the capacity to not simply react to novel situations, but to reason through them – identifying unfamiliar elements, predicting potential outcomes, and selecting the safest course of action. This isn’t achieved through exhaustive pre-planning for every conceivable scenario, but through advanced machine learning algorithms that allow the vehicle to generalize from past experiences and apply that knowledge to new, unencountered events. The result is a projected increase in both safety – minimizing accidents caused by unforeseen circumstances – and reliability, enabling consistent performance across a wider range of driving conditions and ultimately fostering greater public trust in self-driving technology.

The advancement of autonomous vehicle technology extends far beyond simply replacing human drivers; it promises a fundamental reshaping of modern life. Streamlined logistics networks, capable of operating around the clock with increased efficiency, will redefine supply chains and reduce costs for businesses and consumers alike. Simultaneously, transportation itself will undergo a revolution, offering accessible and affordable mobility to a wider range of people, including those unable to drive. This shift necessitates proactive urban planning, envisioning cities designed around optimized traffic flow, reduced parking needs, and innovative public transport solutions. Ultimately, these interconnected developments have the potential to unlock significant economic growth, improve quality of life, and redefine the very fabric of how people live and work within increasingly connected urban and rural environments.

The pursuit of fully autonomous driving, as detailed in the analysis, reveals a fundamental tension between system complexity and graceful degradation. Current modular architectures, while offering a degree of interpretability, often struggle with unforeseen circumstances, and the number of such circumstances grows as operating conditions become more varied. This echoes a sentiment attributed to Alan Turing: “There is no escaping the fact that the machine will eventually have to be able to do everything a human can do.” The ambition isn’t merely to replicate driving tasks, but to instill a robust form of situation awareness – a capacity for adaptation that anticipates and accommodates the inevitable ‘erosion’ of perfect conditions. The study suggests foundation models represent a step towards imbuing systems with such resilience, allowing them to navigate the inherent imperfections of the real world as conditions change over time.

The Road Ahead

The pursuit of fully autonomous driving, as this analysis demonstrates, isn’t a problem of achieving perception, but of managing inevitable perceptual decay. Systems will invariably encounter states outside their training distributions; novelty is the only constant. The semantic gap, far from being closed by larger datasets, is merely obscured, a temporary reduction in error before the next unforeseen circumstance. Uptime is not progress; it’s simply the period before the system’s inherent limitations manifest.

The shift toward end-to-end learning and foundation models represents a re-evaluation of architectural principles, a tacit acknowledgement that hand-engineered modularity is an attempt to impose order on a fundamentally chaotic world. Yet these approaches are not without their own latency, the tax every request must pay for generalized representation. The question isn’t whether these systems will fail, but when, and how gracefully they will degrade.

Future work must address not simply performance metrics, but the articulation of acceptable failure modes. Stability is an illusion cached by time; research should prioritize systems that minimize harm during inevitable breakdowns, rather than striving for an unattainable perfection. The goal, ultimately, isn’t to eliminate risk, but to manage its propagation.

Original article: https://arxiv.org/pdf/2601.22927.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-02 08:07