Author: Denis Avetisyan

New research explores how providing feedback specifically on an agent’s reasoning process can dramatically improve its ability to solve complex tasks and utilize tools effectively.

This paper introduces Agent-RRM, a reasoning reward model, and Reagent, a scheme for integrating multi-dimensional supervision to enhance reasoning and tool-use in large language model-powered agents.

While agentic reinforcement learning has shown promise in complex reasoning and tool use, current reliance on sparse, outcome-based rewards often hinders optimal training due to a lack of nuanced feedback. This work, ‘Exploring Reasoning Reward Model for Agents’, addresses this limitation by introducing Agent-RRM, a multi-faceted reward model providing structured signals-explicit reasoning traces, focused critiques, and overall performance scores-to guide agent learning. Through systematic evaluation of integration strategies, we demonstrate that leveraging this multi-dimensional feedback, particularly with the Reagent-U scheme, yields substantial performance gains-achieving 43.7% on GAIA and 46.2% on WebWalkerQA-raising the question of how such reasoning-focused rewards can further unlock the potential of large language model-powered agents.

Beyond the Limits of Traditional Agentic Systems

Despite recent advancements, current agentic Reinforcement Learning (RL) systems frequently encounter limitations when tasked with complex reasoning or adapting to novel situations. These systems, often reliant on extensive training data and monolithic model architectures, demonstrate a tendency to overfit to specific training environments, hindering their ability to generalize effectively. While proficient at mastering tasks within familiar parameters, performance degrades substantially when confronted with scenarios differing even slightly from those encountered during training. This fragility stems from a lack of robust, abstract reasoning capabilities; agents often excel at pattern recognition within the training distribution but struggle to extrapolate learned knowledge to truly unseen challenges, effectively limiting their real-world applicability and necessitating continuous retraining for even minor environmental shifts.

Current agentic reinforcement learning systems frequently depend on large, monolithic models-single, expansive neural networks-to process information and make decisions. While initially effective, this approach presents significant limitations when confronted with dynamic and unpredictable environments. The sheer size of these models necessitates extensive training data and computational resources, hindering their ability to quickly adapt to novel situations or generalize beyond the scope of their training. Moreover, updating a monolithic model requires retraining the entire network, a process that is both time-consuming and inefficient. This inflexibility restricts the agent’s capacity to learn continuously and respond effectively to changing conditions, ultimately limiting performance in real-world applications where environments are rarely static and predictability is low. The reliance on a single, all-encompassing model thus creates a bottleneck, impeding both the speed and efficiency of learning in complex and evolving scenarios.

Traditional reinforcement learning often relies on scalar rewards to guide agent behavior, but this simplistic feedback mechanism presents a significant bottleneck for complex tasks. Nuance is lost when an agent receives only a single number indicating success or failure; it struggles to discern why an action was good or bad, hindering efficient learning and generalization. Researchers are increasingly exploring methods to provide richer, more informative feedback, such as critiques that highlight specific aspects of an agent’s performance, or demonstrations that showcase desired behaviors. These approaches aim to move beyond merely signaling what to do, and instead communicate how to improve, allowing agents to learn more effectively from limited experience and navigate increasingly complex environments. The challenge lies in designing feedback signals that are both informative enough to guide learning and compact enough to be efficiently processed by the agent.

Reagent: A Framework for Nuanced Feedback

Reagent is a learning framework that moves beyond traditional scalar reward signals by incorporating rich textual critiques directly into the agent’s learning process. This integration allows for the conveyance of more nuanced feedback than simple positive or negative reinforcement. The framework is designed to process both quantitative rewards and qualitative, natural language feedback, enabling agents to understand why an action was good or bad, not just that it was. This combination aims to improve learning efficiency and allow agents to develop more complex behaviors by leveraging detailed explanations of their performance.

Integrating detailed textual critiques alongside scalar rewards enables more effective agent learning by providing explanatory signals that clarify why an action was good or bad. This contrasts with traditional reward-only reinforcement learning, where agents must infer the rationale behind a reward through trial and error. By directly receiving explanations, agents can more efficiently identify and correct suboptimal behaviors, accelerating the learning process and potentially leading to improved generalization. The ability to learn from textual feedback allows for the transmission of complex strategies and nuanced reasoning that would be difficult or impossible to encode solely through reward functions.

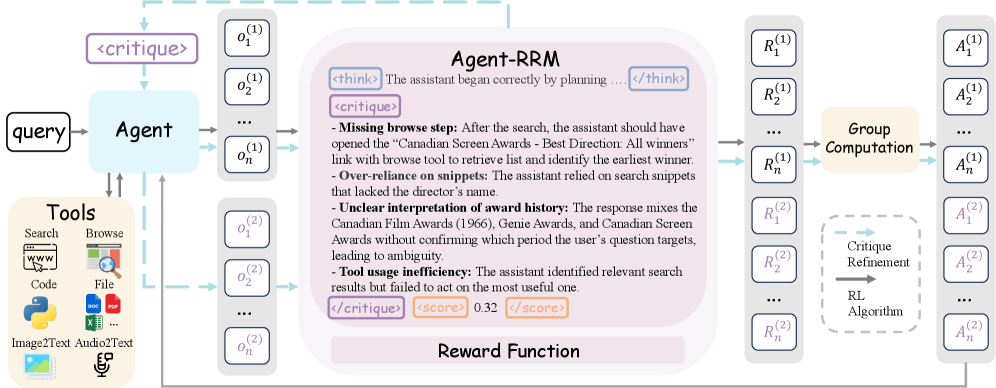

The Reagent framework incorporates three architectural variants – Reagent-C, Reagent-R, and Reagent-U – each designed to integrate scalar rewards and textual critiques via distinct methods. Reagent-C employs a contrastive learning approach, maximizing the similarity between agent behavior and positive critique embeddings while minimizing similarity with negative critiques. Reagent-R utilizes reinforcement learning to directly optimize a policy based on rewards derived from critique relevance; this variant treats critique relevance as an intrinsic reward signal. Finally, Reagent-U unifies both approaches by simultaneously applying contrastive learning and reinforcement learning, allowing the agent to benefit from both discriminative feedback and reward-based optimization.

Agent-RRM: Detailed Assessments for Refined Performance

Agent-RRM functions as a core feedback mechanism by analyzing agent trajectories and producing detailed assessments. This analysis results in three primary outputs: reasoning traces which document the logical steps taken during the agent’s process; critiques, offering specific points of strength and weakness in the trajectory; and holistic quality scores, providing a single, aggregated metric representing overall performance. These outputs collectively move beyond simple reward values by offering a more granular and interpretable signal, enabling more effective learning and refinement of agent behavior. The system’s ability to generate these varied assessments is central to its utility in complex environments.

Agent-RRM augments traditional rule-based reward systems by incorporating model-based signals derived from its reasoning traces. Rule-based rewards, while straightforward to implement, often lack the specificity to guide complex behaviors. Model-based signals, generated by analyzing the agent’s decision-making process, provide a more detailed assessment of performance beyond simple success or failure. This combination increases the granularity of the feedback loop, offering agents more informative signals for learning and refinement; instead of merely indicating what was done incorrectly, Agent-RRM can suggest why, thereby accelerating the learning process and improving overall performance.

Reagent-R and Reagent-U architectures critically depend on Agent-RRM for enhanced performance. These variants are specifically designed to utilize the detailed feedback generated by Agent-RRM – reasoning traces, critiques, and quality scores – as supplementary reward signals. This integration moves beyond traditional rule-based rewards, providing a more granular and informative basis for agent learning and trajectory optimization. Without Agent-RRM, Reagent-R and Reagent-U would be limited to less nuanced reward structures, hindering their ability to effectively refine agent behavior and achieve optimal results.

Empirical Validation: A Leap in Complex Reasoning

Recent experimentation reveals that the Reagent framework, when integrated with the Agent-RRM architecture, consistently surpasses existing performance levels across a suite of challenging benchmarks. Rigorous testing on datasets including GAIA, WebWalkerQA, AIME24, and GSM8K demonstrates Reagent’s capacity for complex reasoning and information retrieval. This achievement isn’t merely incremental; the system establishes new state-of-the-art results, signifying a substantial leap in agent-based AI capabilities and offering promising avenues for future development in automated problem-solving and knowledge acquisition.

The Reagent-U agent demonstrably elevates performance across challenging benchmarks, achieving a new state-of-the-art result of 43.7% on the GAIA benchmark and 46.2% on WebWalkerQA. These scores represent a significant advancement in the field, indicating the agent’s enhanced ability to navigate complex reasoning tasks and extract relevant information. The benchmarks, designed to assess an agent’s capabilities in areas like information retrieval and multi-step problem solving, highlight Reagent-U’s proficiency in processing nuanced queries and delivering accurate, contextually-aware responses. This level of performance underscores the potential of the Reagent framework to drive further innovation in artificial intelligence and automated reasoning systems.

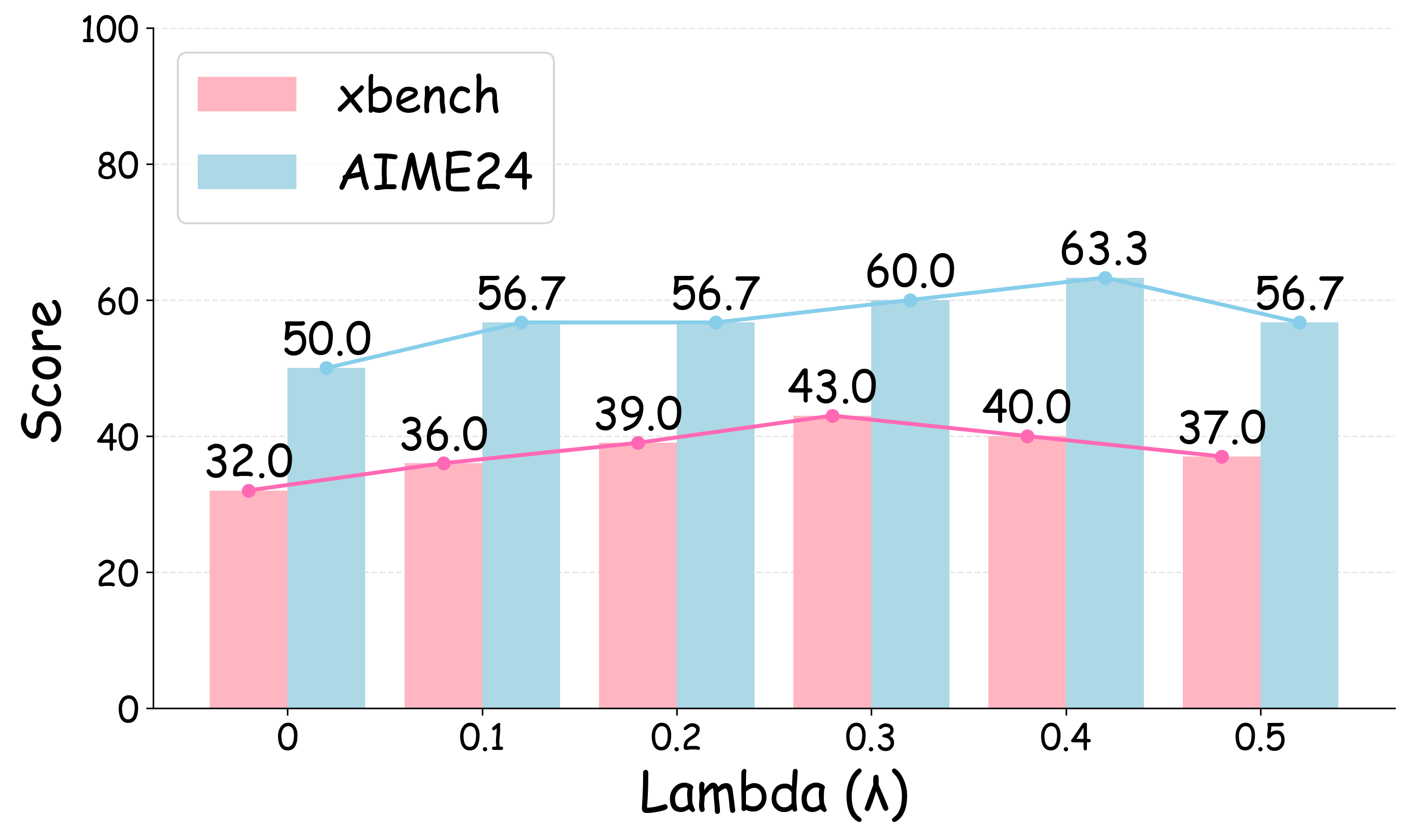

The Reagent-U agent demonstrates a powerful capacity for information acquisition and processing through the strategic utilization of external tools. By integrating capabilities like DeepSeek-Chat and the Bing Search API, the agent effectively navigates and synthesizes information from diverse sources to enhance its problem-solving abilities. This approach yields significant performance gains, evidenced by a 76.8% success rate on the Bamboogle benchmark and a 60.0% rate on AIME24, showcasing its aptitude for tackling complex tasks that demand both knowledge retrieval and reasoning capabilities.

To ensure the validity and reproducibility of performance metrics, the evaluation pipeline incorporates Qwen2.5-72B-Instruct, a large language model employed for automated assessment. This approach moves beyond simple metric-based comparisons by leveraging the model’s capacity for nuanced judgment and contextual understanding. By utilizing Qwen2.5-72B-Instruct, researchers can obtain more robust and reliable results, mitigating potential biases inherent in manual evaluations or simpler automated systems. The model’s capacity to assess the quality of generated responses across diverse benchmarks-including GAIA, WebWalkerQA, and others-provides a consistent and objective standard for measuring the capabilities of Reagent and Agent-RRM.

Towards Truly Adaptive and Robust Agents

Future iterations of this agent architecture will prioritize the integration of Whisper-large-v3, a sophisticated speech recognition system, to unlock the capacity for auditory input processing. This advancement will enable agents to directly interpret spoken commands and engage in more naturalistic dialogues with users, moving beyond text-based interactions. By leveraging Whisper-large-v3’s robust capabilities in transcribing diverse accents, background noise, and varying speech patterns, the agent promises heightened adaptability in real-world environments. The incorporation of audio processing is not merely about adding a new input modality; it fundamentally alters the agent’s potential for complex task execution, allowing it to respond to nuanced spoken requests and seamlessly integrate into human-centered applications.

Current agent training often struggles with sample efficiency and generalization to unseen scenarios. Researchers are actively investigating Group Relative Policy Optimization (GRPO) as a potential solution, a technique that reframes the reinforcement learning process by focusing on relative progress within a group of policies. This approach encourages more stable and efficient learning, as the agent assesses its performance not in absolute terms, but compared to its peers, fostering a more nuanced understanding of optimal behavior. By prioritizing relative improvement, GRPO aims to accelerate the training of complex agents, enabling them to master challenging tasks with fewer interactions and ultimately exhibit greater robustness in dynamic, real-world environments.

The development of agents capable of continuous learning and adaptation represents a significant leap towards truly intelligent systems. Unlike traditional artificial intelligence designed for specific, static tasks, this research establishes a foundation for agents that can refine their skills and strategies through ongoing interaction with dynamic, unpredictable environments. This ability to learn in situ-to improve performance based on real-world feedback-is crucial for deployment in complex scenarios where pre-programming every possible contingency is impossible. The implications extend beyond task completion; these agents promise increased robustness, resilience to unexpected events, and the potential to generalize knowledge across diverse situations, ultimately mirroring the adaptability observed in biological intelligence.

The pursuit of robust agentic systems, as detailed in this work, necessitates a holistic understanding of feedback mechanisms. Every simplification in reward design carries a cost, and every clever trick introduces potential risks – a principle keenly understood by Carl Friedrich Gauss. He once stated, “If other people would think differently about things, they would have thought of them themselves.” This sentiment resonates with the Agent-RRM approach; the model doesn’t simply optimize for a single reward signal but integrates multi-dimensional feedback, acknowledging the complexity inherent in true reasoning and tool-use. By considering various facets of an agent’s performance-beyond merely task completion-the system aims for a more nuanced and reliable form of intelligence, mirroring the interconnectedness Gauss valued in mathematical principles.

Future Directions

The introduction of Agent-RRM and Reagent represents a pragmatic step toward imbuing agents with more robust reasoning capabilities. However, the current paradigm largely treats reasoning as a discrete component, separable from the agent’s core operational logic. A more holistic approach-one where reasoning is the operation, not merely a pre- or post-processing step-may prove critical. Documentation captures structure, but behavior emerges through interaction; the model’s true limitations will become apparent only when subjected to genuinely novel, unpredictable scenarios.

The multi-dimensional feedback scheme, while promising, relies on carefully engineered reward signals. The artifice inherent in these signals raises questions about scalability and generalization. True intelligence doesn’t require explicit scoring; it adapts and thrives within complex, often ambiguous environments. Future work should explore methods for agents to autonomously derive intrinsic reward signals based on internal consistency and predictive accuracy, rather than external validation.

Ultimately, the pursuit of reasoning in agents is not merely a technical challenge, but a philosophical one. The ability to articulate why an action was taken, versus simply that it was taken, speaks to a deeper level of understanding. While Agent-RRM and Reagent offer a valuable toolkit, the path toward genuinely intelligent agents requires a continued reevaluation of what constitutes “reasoning” itself, and how it manifests in complex, embodied systems.

Original article: https://arxiv.org/pdf/2601.22154.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Heartopia Book Writing Guide: How to write and publish books

- Gold Rate Forecast

- Battlestar Galactica Brought Dark Sci-Fi Back to TV

- January 29 Update Patch Notes

- Genshin Impact Version 6.3 Stygian Onslaught Guide: Boss Mechanism, Best Teams, and Tips

- Learning by Association: Smarter AI Through Human-Like Conditioning

- Mining Research for New Scientific Insights

- Robots That React: Teaching Machines to Hear and Act

- Arknights: Endfield Weapons Tier List

- Davina McCall showcases her gorgeous figure in a green leather jumpsuit as she puts on a love-up display with husband Michael Douglas at star-studded London Chamber Orchestra bash

2026-02-01 00:17