Author: Denis Avetisyan

A new framework moves beyond hand-crafted designs to automatically create intelligent agents by learning directly from experience.

ReCreate introduces an experience-driven approach to automated domain agent generation, leveraging reinforcement learning and scaffold optimization.

While large language model agents are rapidly transforming automation, building effective domain-specific agents remains labor-intensive and reliant on manual design. This paper introduces ReCreate: Reasoning and Creating Domain Agents Driven by Experience, a novel framework that overcomes this limitation by automatically creating and adapting agents through systematic learning from interaction histories. ReCreate uniquely leverages an “agent-as-optimizer” paradigm, extracting actionable insights from both successes and failures to refine agent behavior and generalize reusable patterns. Could this experience-driven approach unlock a new era of truly autonomous, adaptable agents capable of tackling increasingly complex real-world tasks?

The Inevitable Limits of Hand-Crafted Intelligence

Historically, crafting agents capable of navigating complex environments has demanded substantial, hands-on effort from skilled practitioners. This process isn’t simply about programming logic; it requires deep domain expertise to anticipate challenges and carefully define the agent’s behaviors. Experts must map out possible scenarios, establish appropriate responses, and then rigorously test and refine the agent’s performance, a cycle that can take months or even years of dedicated work. These agents are not assembled automatically but shaped through a careful, iterative process in which human insight guides every crucial decision, making scalability a persistent obstacle and limiting the range of problems that can be addressed effectively.

Despite the recent advancements afforded by large language models, creating adaptable agents remains a considerable challenge. These models, while proficient at generating human-like text, often exhibit brittle behavior when confronted with scenarios differing even slightly from their training data. Consequently, significant human intervention is frequently necessary to refine agent performance, correct errors, and ensure reliable operation within dynamic, real-world environments. This refinement process isn’t merely tweaking parameters; it often demands substantial re-engineering of prompts, the addition of explicit rules, or even the implementation of entirely new modules to address unforeseen limitations. The need for ongoing human oversight therefore represents a key bottleneck, preventing the widespread deployment of truly autonomous agents capable of independent learning and robust performance across diverse and unpredictable situations.

The extensive manual effort required to build and refine agents presents a fundamental bottleneck to widespread adoption and advanced capabilities. Each new domain or task necessitates bespoke scaffolding – painstakingly crafted rules, examples, and reward functions – effectively limiting the number of agents that can be deployed. This reliance on human intervention not only restricts scalability but also prevents the emergence of true autonomy; agents remain tethered to their initial programming, unable to independently generalize or adapt to unforeseen circumstances. Consequently, the full potential of domain-specific agents – systems capable of tackling complex, real-world problems without constant human oversight – remains largely unrealized, hindering progress in fields ranging from automated scientific discovery to personalized education.

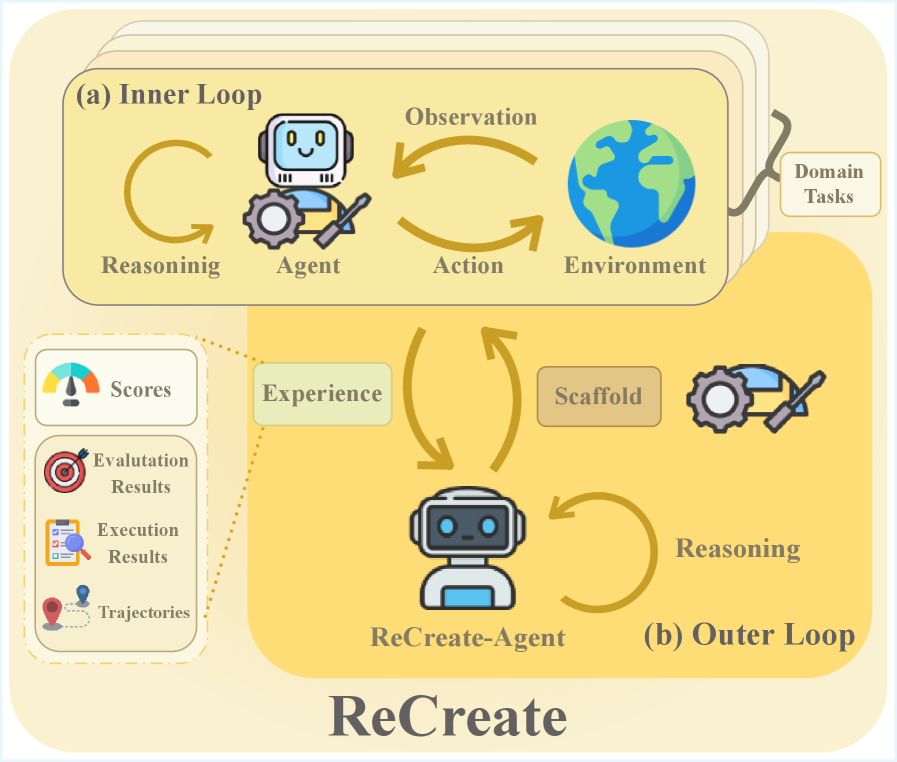

ReCreate: Cultivating Agents Through Experience

The ReCreate framework automates domain agent creation through iterative learning from interaction data. This automation substantially reduces the need for manual agent configuration and tuning: instead of having their behavior explicitly programmed, ReCreate agents adapt based on their experiences. The system continuously analyzes interactions, identifies performance patterns, and refines how the agent operates accordingly. As a result, agents capable of handling complex tasks within specific domains can be deployed quickly, with little upfront engineering effort and low ongoing maintenance. The framework is designed to operate without extensive human oversight in defining or modifying agent functionality.

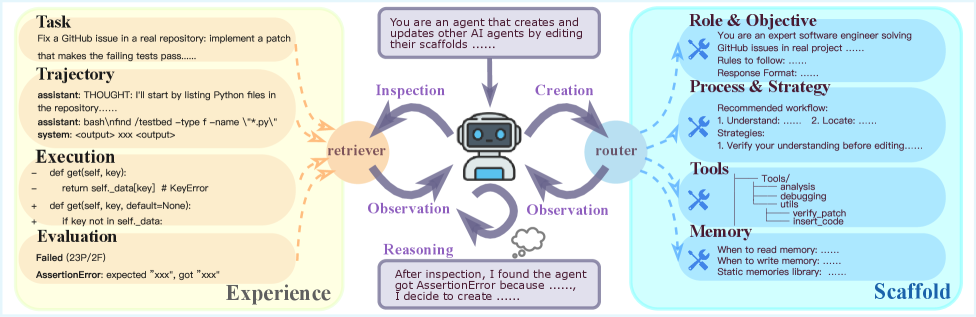

The ReCreate framework utilizes Large Language Model (LLM)-based agents as its core building blocks, but differentiates itself through the introduction of a dynamic ‘Agent Scaffold’. This scaffold is an intermediary layer positioned between the LLM and the agent’s environment, explicitly defining the agent’s permissible actions, available tools, and overall behavioral constraints. Unlike traditional LLM agents where these parameters are largely static, the ReCreate scaffold is mutable; its structure and content are subject to modification based on observed performance data and accumulated interaction experience, enabling adaptation and improved functionality over time. This separation of the LLM’s generative capabilities from the explicitly defined scaffold allows for targeted refinement of agent behavior without requiring retraining of the underlying language model.
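To make this separation concrete, here is a minimal Python sketch of a scaffold as a mutable object sitting between a language model and its environment. The class `AgentScaffold`, its fields, the `execute` helper, and the tool names are all hypothetical illustrations, not ReCreate’s actual data model; the only point shown is that changing the agent’s behavior means editing the scaffold while the model itself stays untouched.

```python
from dataclasses import dataclass, field


@dataclass
class AgentScaffold:
    """Hypothetical scaffold: the mutable layer between the LLM and its environment."""
    system_prompt: str
    allowed_tools: set[str] = field(default_factory=set)
    constraints: list[str] = field(default_factory=list)

    def render_prompt(self, task: str) -> str:
        # Combine the scaffold's guidance with the task; this text is what the
        # (unchanged) language model would receive.
        tools = ", ".join(sorted(self.allowed_tools))
        rules = "\n".join(f"- {c}" for c in self.constraints)
        return f"{self.system_prompt}\nTools: {tools}\nRules:\n{rules}\nTask: {task}"

    def permits(self, action: str) -> bool:
        # Gate every action the model proposes against the scaffold's tool list.
        return action in self.allowed_tools


def execute(scaffold: AgentScaffold, proposed_action: str) -> str:
    # The environment only ever sees actions the scaffold allows.
    if not scaffold.permits(proposed_action):
        return f"rejected: {proposed_action}"
    return f"ran: {proposed_action}"


scaffold = AgentScaffold(
    system_prompt="You are a repository-repair agent.",
    allowed_tools={"read_file", "run_tests"},
    constraints=["Run tests before submitting a patch."],
)
print(execute(scaffold, "apply_patch"))  # rejected: apply_patch

# Adapting the agent means editing the scaffold, not retraining the model.
scaffold.allowed_tools.add("apply_patch")
print(execute(scaffold, "apply_patch"))  # ran: apply_patch
```

Keeping the scaffold as plain data is what makes it easy for an automated process to inspect and rewrite it later.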

ReCreate’s central innovation is treating the agent scaffold as an adjustable component. Whereas traditional agent designs fix their behavioral definitions in advance, ReCreate’s scaffold evolves through analysis of interaction experience, the data gathered as the agent performs tasks. This accumulated data informs iterative modifications to the scaffold, allowing the agent to refine its capabilities and adapt to changing demands without explicit reprogramming. The framework monitors performance, identifies areas for improvement, and updates the scaffold automatically, yielding a self-improving system.

The Agent as Optimizer: A Cycle of Self-Refinement

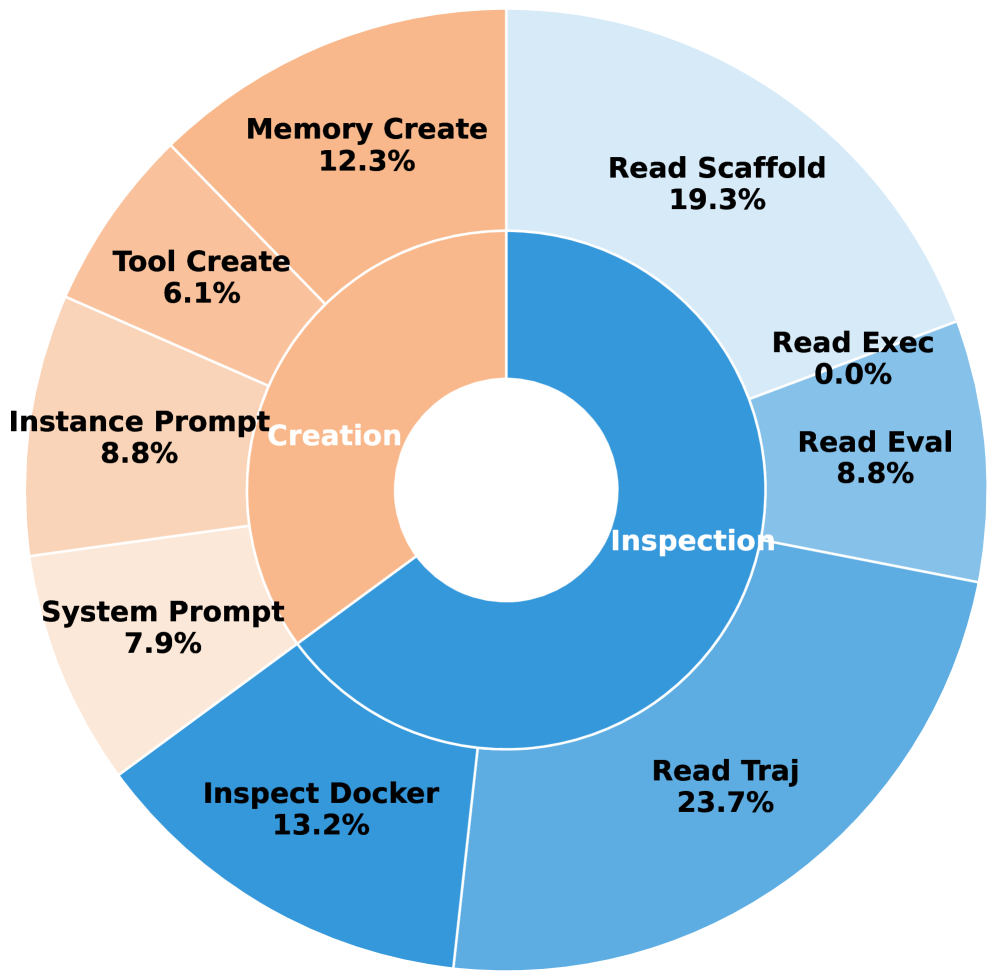

The ReCreate framework implements an ‘Agent-as-optimizer’ paradigm by enabling the agent to self-evaluate performance based on execution data. This involves actively monitoring outcomes, identifying areas of deficiency in its current approach, and subsequently modifying its internal ‘scaffold’ – the set of tools, strategies, and knowledge used to generate solutions. This scaffold is not static; the agent dynamically adjusts it based on observed performance, effectively creating a closed-loop system for continuous improvement. The agent’s capacity for self-inspection and adaptation distinguishes it from traditional approaches and allows for ongoing refinement of its problem-solving capabilities.
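The closed loop described above can be sketched as follows, under heavy simplifying assumptions: `run` stands in for executing a task with the current scaffold, and `propose_fix` stands in for the agent inspecting its own failures. In ReCreate that inspection is performed by an LLM agent; here it is a toy rule. `Outcome`, `Scaffold`, `optimize`, and the toy functions are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Outcome:
    task: str
    success: bool
    error: str = ""


@dataclass
class Scaffold:
    instructions: list[str] = field(default_factory=list)


def optimize(
    scaffold: Scaffold,
    tasks: list[str],
    run: Callable[[Scaffold, str], Outcome],
    propose_fix: Callable[[list[Outcome]], Optional[str]],
    rounds: int = 3,
) -> list[float]:
    """Closed loop: execute, self-evaluate, edit the scaffold, repeat.

    Returns the success rate observed in each round.
    """
    history = []
    for _ in range(rounds):
        outcomes = [run(scaffold, t) for t in tasks]
        history.append(sum(o.success for o in outcomes) / len(outcomes))
        failures = [o for o in outcomes if not o.success]
        if not failures:
            break  # nothing left to diagnose
        fix = propose_fix(failures)  # self-inspection of failed executions
        if fix and fix not in scaffold.instructions:
            scaffold.instructions.append(fix)  # modify the scaffold in place
    return history


# Toy stand-ins (hypothetical): the "hard" task fails with an ImportError
# until the scaffold tells the agent to check imports first.
def toy_run(s: Scaffold, task: str) -> Outcome:
    if task == "easy" or "check imports first" in s.instructions:
        return Outcome(task, True)
    return Outcome(task, False, "ImportError")


def toy_fix(failures: list[Outcome]) -> Optional[str]:
    if any(f.error == "ImportError" for f in failures):
        return "check imports first"
    return None


s = Scaffold()
print(optimize(s, ["easy", "hard"], toy_run, toy_fix))  # [0.5, 1.0]
print(s.instructions)  # ['check imports first']
```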

The Reasoning-Creation Synergy Pipeline in ReCreate functions as a closed-loop system for iterative refinement. Execution evidence, generated during task completion, is analyzed to identify performance bottlenecks and areas for improvement. This analysis then directly informs the creation of specific scaffold updates – modifications to the agent’s internal knowledge representation and problem-solving strategies. These updates are not random; they are precisely mapped to the observed execution evidence, ensuring targeted improvements. The resulting modified scaffold is then utilized in subsequent task attempts, completing the loop and enabling continuous adaptation and performance gains.
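A minimal sketch of the evidence-to-update mapping follows, assuming a simple rule table stands in for the LLM’s reasoning step. The `RULES` patterns, the `instructions`/`tools` scaffold sections, and the `reason`/`create` function names are hypothetical. What the sketch illustrates is that every update carries the piece of evidence that justified it, so edits stay targeted rather than random.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScaffoldUpdate:
    section: str   # which part of the scaffold to change, e.g. "instructions" or "tools"
    content: str   # the new instruction or tool name
    evidence: str  # the trace line that justified this update


# Hypothetical diagnosis table standing in for LLM reasoning:
# evidence pattern -> (scaffold section, content to add)
RULES = {
    "command not found": ("tools", "install_dependency"),
    "timeout": ("instructions", "Run the narrowest test subset first."),
    "patch does not apply": ("instructions", "Re-read the file before editing it."),
}


def reason(trace: list[str]) -> list[ScaffoldUpdate]:
    """Reasoning step: map each piece of execution evidence to a targeted update."""
    updates = []
    for line in trace:
        lowered = line.lower()
        for pattern, (section, content) in RULES.items():
            if pattern in lowered:
                updates.append(ScaffoldUpdate(section, content, evidence=line))
    return updates


def create(scaffold: dict[str, list[str]], updates: list[ScaffoldUpdate]) -> dict[str, list[str]]:
    """Creation step: apply updates to a copy of the scaffold, skipping duplicates."""
    new = {k: list(v) for k, v in scaffold.items()}
    for u in updates:
        bucket = new.setdefault(u.section, [])
        if u.content not in bucket:
            bucket.append(u.content)
    return new


trace = ["$ pytest", "bash: pytest: command not found", "Error: patch does not apply"]
updates = reason(trace)
new_scaffold = create({"instructions": []}, updates)
print(new_scaffold)
# {'instructions': ['Re-read the file before editing it.'], 'tools': ['install_dependency']}
```

The modified scaffold returned by `create` is what the next task attempt would use, closing the loop.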

Experience Storage and Retrieval within ReCreate utilizes a dedicated memory system to retain data derived from agent execution. This system logs performance metrics, reasoning traces, and creation outcomes, forming a searchable repository of past interactions. The stored experiences are not simply archived; they are indexed and retrieved based on similarity to current states, allowing the agent to identify relevant past successes and failures. This enables the agent to apply previously learned strategies to new situations and refine its scaffold based on accumulated insights, driving continuous adaptation and improved performance over time. The efficiency of this retrieval process is critical for scaling the agent’s learning capabilities and maintaining responsiveness in dynamic environments.
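The storage-and-retrieval idea can be illustrated with a small in-memory store. This sketch assumes bag-of-words cosine similarity over task descriptions as the similarity measure; a production system would more plausibly use learned embeddings, and nothing here reflects ReCreate’s actual memory format. `Experience`, `ExperienceMemory`, and their fields are hypothetical names.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Experience:
    state: str     # description of the task or situation
    trace: str     # condensed reasoning trace or outcome note
    success: bool


def vectorize(text: str) -> Counter:
    # Bag-of-words term counts (a stand-in for a learned embedding).
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ExperienceMemory:
    def __init__(self) -> None:
        self._items: list[tuple[Counter, Experience]] = []

    def store(self, exp: Experience) -> None:
        # Index each experience by the vector of its state description.
        self._items.append((vectorize(exp.state), exp))

    def retrieve(self, query: str, k: int = 2) -> list[Experience]:
        # Return the k stored experiences most similar to the current state,
        # successes and failures alike.
        q = vectorize(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [exp for _, exp in ranked[:k]]


mem = ExperienceMemory()
mem.store(Experience("fix failing unit test in parser module", "added missing import", True))
mem.store(Experience("clean csv dataset with missing values", "dropped rows with NaN", False))
mem.store(Experience("fix flaky integration test", "added a retry wrapper", True))

best = mem.retrieve("fix failing test in lexer module", k=1)[0]
print(best.trace, best.success)  # added missing import True
```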

Scaling Adaptability: The Emergence of Autonomous Skill

The ReCreate framework distinguishes itself through a hierarchical update mechanism that transcends simple, case-by-case learning. Instead of merely memorizing successful actions for specific scenarios, the system aggregates numerous instance-level refinements – small improvements gleaned from individual interactions – into generalized, reusable patterns applicable across a broader domain. This process effectively distills experience into transferable knowledge, allowing the agent to adapt to novel situations more efficiently. By identifying and consolidating common strategies, ReCreate achieves a level of generalization beyond typical reinforcement learning approaches, fostering robust performance even when faced with previously unseen challenges and ultimately enabling continuous, autonomous skill development.
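One simple way to read the hierarchical update is as support counting: a refinement proposed for a single instance stays local, while one that recurs across several distinct instances is promoted to a domain-level pattern. The sketch below assumes exact string matching after normalization and a hypothetical `min_support` threshold; ReCreate’s actual aggregation is presumably LLM-driven and more nuanced.

```python
from collections import defaultdict


def promote_patterns(
    instance_refinements: dict[str, list[str]],
    min_support: int = 2,
) -> list[str]:
    """Promote refinements that recur across distinct task instances.

    `instance_refinements` maps a task-instance id to the refinements
    proposed while solving that instance.
    """
    support: dict[str, set[str]] = defaultdict(set)
    for instance_id, refinements in instance_refinements.items():
        for r in refinements:
            # Normalize so trivially different phrasings count as one pattern.
            support[r.strip().lower()].add(instance_id)
    # Keep patterns seen in at least `min_support` different instances,
    # most widely supported first (ties broken alphabetically for determinism).
    promoted = [p for p, ids in support.items() if len(ids) >= min_support]
    return sorted(promoted, key=lambda p: (-len(support[p]), p))


refinements = {
    "task-1": ["Run tests before submitting", "Check import paths"],
    "task-2": ["run tests before submitting"],
    "task-3": ["Check import paths", "Run tests before submitting", "Pin numpy version"],
}
print(promote_patterns(refinements))
# ['run tests before submitting', 'check import paths']
```

Counting distinct instances, rather than raw occurrences, keeps one noisy task from inflating a pattern’s apparent generality.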

ReCreate also supports autonomous skill refinement. Unlike traditional agents that require periodic retraining on labeled data, the system learns continuously by aggregating its own experiences and identifying patterns for improvement. This iterative process lets the agent progressively improve on existing tasks and even develop new strategies without external guidance. In effect, the agent learns from its own interactions, revising its scaffold and refining its decision-making to improve outcomes over time, a crucial step toward self-improving AI that can adapt to dynamic and unpredictable environments.

Beyond initial skill development, ReCreate fosters agent self-improvement through both tool learning and memory evolution. This goes beyond refining existing techniques: the system enables agents to discover new tools on their own and integrate them into their skill set, expanding their problem-solving capacity. At the same time, ReCreate lets the agent’s memory evolve, prioritizing the retention of successful strategies and discarding less effective ones, a process loosely analogous to biological learning. By acquiring new capabilities and refining its knowledge base autonomously, the agent adapts continuously, moving beyond pre-programmed responses toward self-directed improvement and sustained performance in novel situations.
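Memory evolution of this kind can be approximated by tracking how often each stored strategy leads to success and pruning the ones that keep failing. The thresholds `min_trials` and `min_rate`, and the class names, are hypothetical; the sketch covers only the retain/discard side of memory evolution, not tool discovery.

```python
from dataclasses import dataclass


@dataclass
class StrategyStats:
    successes: int = 0
    trials: int = 0

    @property
    def success_rate(self) -> float:
        return self.successes / self.trials if self.trials else 0.0


class EvolvingMemory:
    def __init__(self, min_trials: int = 3, min_rate: float = 0.4) -> None:
        self.min_trials = min_trials  # evidence required before judging a strategy
        self.min_rate = min_rate      # hypothetical retention threshold
        self.stats: dict[str, StrategyStats] = {}

    def record(self, strategy: str, success: bool) -> None:
        # Update the running tally for a strategy after each use.
        s = self.stats.setdefault(strategy, StrategyStats())
        s.trials += 1
        s.successes += int(success)

    def evolve(self) -> list[str]:
        """Drop strategies that have had enough trials and still underperform."""
        dropped = [
            name for name, s in self.stats.items()
            if s.trials >= self.min_trials and s.success_rate < self.min_rate
        ]
        for name in dropped:
            del self.stats[name]
        return dropped


mem = EvolvingMemory()
for ok in [True, True, False]:
    mem.record("reproduce bug first", ok)
for ok in [False, False, False]:
    mem.record("edit blindly", ok)
mem.record("ask for logs", False)  # only one trial so far: kept for now

print(mem.evolve())        # ['edit blindly']
print(sorted(mem.stats))   # ['ask for logs', 'reproduce bug first']
```

Requiring a minimum number of trials before pruning avoids discarding a strategy on the strength of a single unlucky failure.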

Automating Intelligence: The Inevitable Shift in Agent Creation

The advent of ReCreate signals a fundamental shift in how intelligent agents are brought into existence, moving beyond the historically labor-intensive process of manual design and programming. Instead of explicitly coding agent behaviors, this novel framework prioritizes experiential learning, allowing agents to develop and refine their capabilities through interaction with a defined environment. This self-evolving paradigm fosters a degree of autonomy previously unattainable, enabling agents to adapt to unforeseen challenges and optimize performance without constant human intervention. The implications are considerable; ReCreate doesn’t simply build agents, it cultivates them, potentially unlocking a new era of truly intelligent and versatile systems capable of tackling increasingly complex tasks with minimal oversight and continuous improvement.

ReCreate fundamentally alters agent creation by prioritizing learning through experience over traditional, manual design specifications. Instead of painstakingly programming an agent’s responses to every conceivable situation, this approach allows the agent to independently explore its environment and refine its behavior based on the outcomes of its actions. This experiential learning fosters a remarkable adaptability, enabling agents to navigate complex and unpredictable scenarios with minimal human intervention. Consequently, the agent doesn’t simply execute pre-defined instructions; it learns how to solve problems, improving its performance over time and effectively handling tasks that would otherwise require constant human oversight or reprogramming. This shift unlocks the potential for truly autonomous agents capable of operating effectively in dynamic real-world applications.

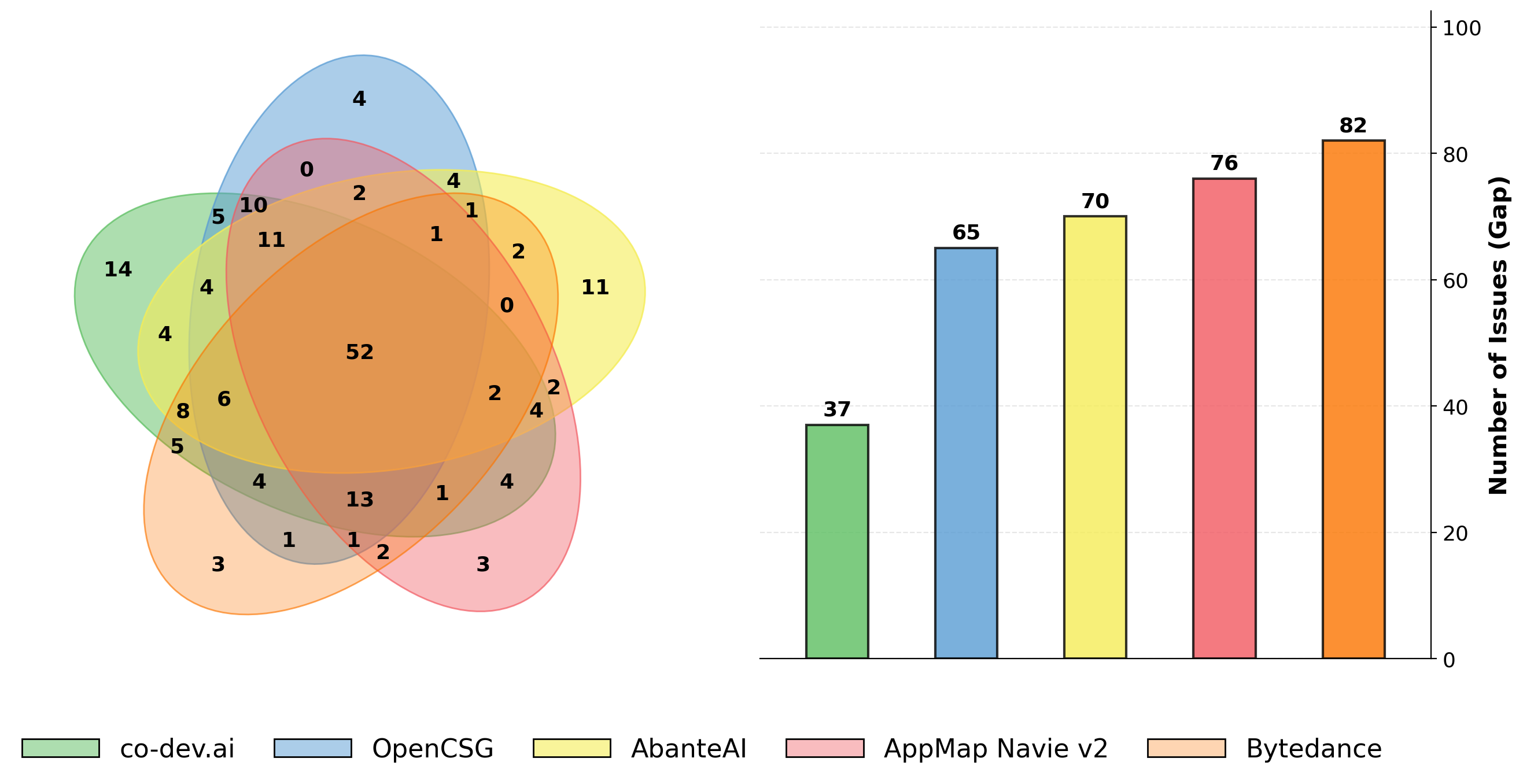

The advent of automated agent creation through experience-driven learning promises substantial advances across Software Engineering and Data Science. By automating core development and deployment processes, this methodology improves problem-solving performance, achieving up to a 37% improvement in resolved issues compared with conventionally designed agents. Reported experiments also show cost reductions of 36% to 82% compared with ADAS (Automated Design of Agentic Systems), an existing approach to automatically generating agents, suggesting a path toward more efficient and scalable intelligent systems. This efficiency is not merely incremental; it signals a potential paradigm shift, allowing developers to focus on higher-level strategic challenges rather than the intricacies of manual agent construction and maintenance.
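Assuming the 36% to 82% figures are relative reductions in cost against the ADAS baseline (the exact cost metric, for example cost per task or per run, is not specified here), the arithmetic is simply the saved cost divided by the baseline cost. The costs in the usage lines are invented round numbers chosen only to reproduce the two endpoints of the reported range.

```python
def relative_reduction(baseline_cost: float, new_cost: float) -> float:
    """Fraction of the baseline cost saved: (baseline - new) / baseline."""
    if baseline_cost <= 0:
        raise ValueError("baseline cost must be positive")
    return (baseline_cost - new_cost) / baseline_cost


# Invented costs chosen to hit the two ends of the reported range.
print(relative_reduction(25.0, 16.0))  # 0.36
print(relative_reduction(50.0, 9.0))   # 0.82
```

Read the other way, a 36% reduction means the new approach costs 64% of the baseline, and an 82% reduction means it costs 18%.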

The pursuit of automated agent generation, as exemplified by ReCreate, inevitably courts increasing systemic dependency. The framework learns and optimizes through experience, yet each iteration solidifies a complex web of interactions, a growing reliance on past choices. This echoes Dijkstra’s observation: “In the long run, every program gets rewritten.” ReCreate, in its continuous learning, doesn’t so much build agents as cultivate a sprawling ecosystem of learned behaviors. The system’s future stability isn’t guaranteed by initial design, but by its capacity to adapt within an ever-more-intricate, self-created dependency network. The very act of scaffold optimization, while initially simplifying the creation process, ultimately contributes to this systemic entanglement.

What Lies Ahead?

The pursuit of automated agent creation, as exemplified by frameworks like ReCreate, inevitably shifts the locus of effort. It is no longer merely about designing better scaffolds – those temporary structures, so confidently erected, yet so quickly revealed as insufficient. The true challenge resides in cultivating systems that learn to discard them. Experience-driven optimization, while promising, merely trades one brittle compromise for another: the rigidity of manual design for the unpredictability of emergent behavior. The illusion of control persists, even as the underlying complexity increases.

One anticipates a future less concerned with “intelligent” agents and more with resilient ones. Systems that don’t strive for optimal performance, but for continued existence within a dynamic, and frequently hostile, environment. The focus will likely drift from reinforcement learning, a quest for reward, toward mechanisms of self-preservation and adaptation. Technologies change, dependencies remain; the accumulation of interaction experience will prove less valuable than the capacity to gracefully accommodate unforeseen consequences.

The ultimate irony, of course, is that in striving to automate creation, the field will rediscover the fundamental limitations of automation itself. Architecture isn’t structure – it’s a compromise frozen in time. The agents of tomorrow will not be built, but grown, and their success will be measured not by what they achieve, but by how well they endure.

Original article: https://arxiv.org/pdf/2601.11100.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-19 21:22