Author: Denis Avetisyan

New research explores how conversational AI can help users critically evaluate information found during online searches.

This review investigates the design of AI copilots aimed at boosting digital literacy and mitigating the spread of misinformation through enhanced source evaluation.

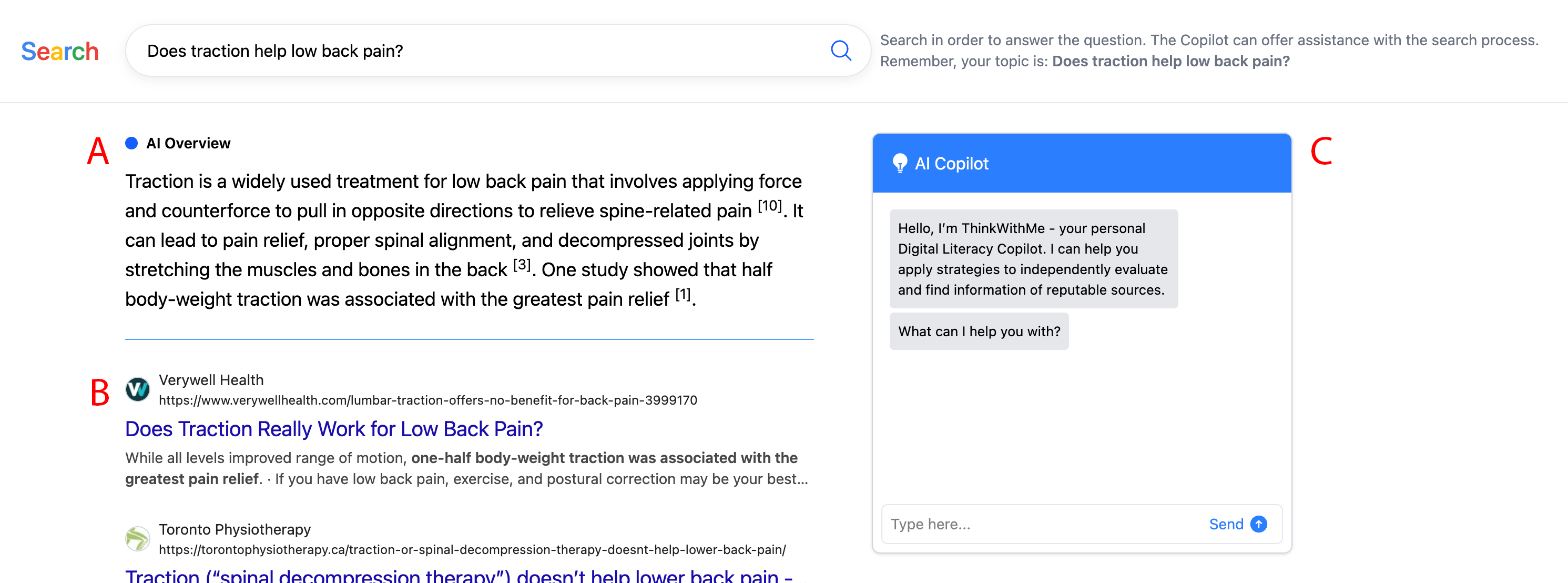

While generative AI promises to revolutionize information seeking, its tendency to provide fluent answers risks undermining critical evaluation and independent thought. This paper, “‘Can You Tell Me?’: Designing Copilots to Support Human Judgement in Online Information Seeking”, investigates an LLM-based conversational copilot designed to scaffold information evaluation rather than simply provide answers. Results from a randomized controlled trial reveal that, although users engaged deeply with the copilot and demonstrated metacognitive reflection, improvements in answer correctness were limited by a trade-off between chat engagement and broader source exploration. How can pedagogical copilots be designed to simultaneously foster digital literacy and meet users’ demands for efficient information access?

The Shifting Landscape of Knowledge Acquisition

Contemporary information access is fundamentally shaped by the dominance of search engines and accelerating advances in generative AI. These technologies have dramatically altered how individuals locate and synthesize knowledge, shifting from actively browsing numerous sources to receiving concise, algorithmically curated responses. The convenience offered by these systems is undeniable, yet it simultaneously fosters a reliance on automated processes for knowledge discovery, effectively mediating the relationship between a user and the vast expanse of online information. This transition isn’t merely about speed; it represents a paradigm shift where the presentation of information – and therefore, the shaping of understanding – is increasingly delegated to complex algorithms and large language models, redefining the very act of inquiry.

Search engines and generative AI, while revolutionizing information access, are fundamentally shaped by the data they ingest, inheriting and often amplifying existing societal biases present on the web. This susceptibility to bias isn’t merely a technical glitch; it represents a systemic challenge where algorithms can perpetuate skewed perspectives on various topics, ranging from gender and race to political ideologies and cultural norms. Consequently, search results and AI-generated summaries may not reflect a neutral or comprehensive view, potentially reinforcing stereotypes, suppressing minority viewpoints, or even promoting misinformation as a function of biased training data. This impacts user understanding not by presenting falsehoods outright, but by subtly shaping perceptions through the selective presentation of information, creating an echo chamber effect and hindering critical evaluation of diverse perspectives.

The integration of AI Overviews into search engines introduces a critical shift in information access, presenting users with directly synthesized answers rather than lists of sources for independent evaluation. Recent studies demonstrate a concerning trend: reliance on these AI-generated summaries significantly diminishes answer correctness compared to traditional search methods. This decline stems from the potential for inaccuracies within the AI’s synthesis – errors or biases that bypass the user’s typical fact-checking process. Because the AI presents a seemingly definitive answer, users are less likely to critically assess the information or seek corroborating evidence, accepting the provided overview at face value and, consequently, increasing the risk of misinformation acceptance. This poses a fundamental challenge to established models of information seeking, where users actively engage with sources to construct their understanding.

Cultivating Critical Evaluation: A Necessary Adaptation

Historically, information literacy instruction has centered on techniques such as source triangulation and lateral reading – verifying information by consulting multiple, independent sources. However, contemporary search behaviors, particularly those facilitated by algorithmic search engines and AI-powered overviews, frequently prioritize speed and convenience. This emphasis on rapid information access often circumvents the deliberate, multi-source verification processes traditionally taught, as users may accept the first presented result or a summarized overview without further investigation. Consequently, established information literacy skills are frequently underutilized in modern information-seeking contexts, creating vulnerabilities to misinformation and biased content.

Cultivating effective information evaluation necessitates the development of dual competencies: the ability to locate relevant information and the capacity to critically assess its validity. This process is significantly enhanced through targeted skill-building, often referred to as “Boosting,” which focuses on specific areas of weakness in a user’s evaluation capabilities. Unlike broad-based information literacy training, Boosting concentrates on practical application and aims to improve the user’s ability to identify biases, assess source credibility, and recognize logical fallacies within information encountered. This targeted approach facilitates more efficient learning and demonstrable improvements in critical assessment skills, enabling users to move beyond simply finding information to actively evaluating its trustworthiness and relevance.

Adherence to established Digital Literacy Principles is essential when designing information systems intended to support user evaluation of claims and bias detection. Recent research indicates that while conversational copilot interventions do not demonstrably improve the factual correctness of answers provided, they can lessen the detrimental effects of biased information presented in AI-generated overviews. This suggests that system design focused on facilitating critical thinking and source evaluation, rather than solely focusing on answer accuracy, represents a valuable approach to mitigating the spread of misinformation and the amplification of bias in automated information environments.

The Conversational Copilot: A System for Guided Inquiry

The Conversational Copilot utilizes Large Language Models (LLMs) and Generative AI (GenAI) to deliver interactive support throughout the information seeking process. These models enable the system to understand user queries and respond with tailored guidance, moving beyond simple keyword-based search. Specifically, the LLM component facilitates natural language processing to interpret user intent, while GenAI capabilities allow the Copilot to dynamically generate prompts and suggestions designed to encourage critical evaluation of sources. This combination allows for a conversational interface that actively assists users in navigating information landscapes and verifying the validity of claims.

The Conversational Copilot actively promotes information evaluation techniques by guiding users through Lateral Reading and fostering Click Restraint. Lateral Reading involves opening new browser tabs to investigate the source of information, author credibility, and potential biases, rather than deeply reading the initially presented content. Click Restraint encourages users to pause before clicking on search results, prompting them to consider the potential validity of the source based on preview information and to avoid accepting the first result as definitive. This approach is designed to shift user behavior away from passive consumption and towards active verification of claims and source evaluation before forming conclusions.
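As an illustration only, the guidance described above could be expressed as instructions handed to an LLM. The sketch below assembles such a prompt as plain text. Every name in it (`SearchResult`, `build_scaffolding_prompt`, the hint wording) is hypothetical and not taken from the paper, and no actual model call is made.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SearchResult:
    """A search result as the user sees it (hypothetical structure)."""
    title: str
    url: str
    snippet: str


# Illustrative wording only; the study's actual prompts are not given in this article.
LATERAL_READING_HINT = (
    "Encourage the user to open a new tab and check who is behind a source "
    "before reading it in depth."
)
CLICK_RESTRAINT_HINT = (
    "Encourage the user to compare the result previews before clicking, "
    "and not to treat the first result as definitive."
)


def build_scaffolding_prompt(question: str, results: List[SearchResult]) -> str:
    """Assemble instructions for a copilot that guides evaluation instead of answering."""
    lines = [
        "You are a copilot that supports source evaluation.",
        "Do not state the answer to the user's question.",
        LATERAL_READING_HINT,
        CLICK_RESTRAINT_HINT,
        f"User question: {question}",
        "Search results shown to the user:",
    ]
    # Number the results so the model can refer to them by position.
    for index, result in enumerate(results, start=1):
        lines.append(f"{index}. {result.title} ({result.url}): {result.snippet}")
    return "\n".join(lines)


example_results = [
    SearchResult("Health agency FAQ", "https://example.org/faq", "Official guidance on the topic."),
    SearchResult("Personal blog post", "https://example.com/post", "One person's experience."),
]
example_prompt = build_scaffolding_prompt("Is remedy X effective?", example_results)
```

The design choice worth noting is that the instruction not to answer sits alongside the two evaluation hints, so the model's role is framed as scaffolding from the outset.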

The Conversational Copilot aims to reduce the acceptance of incorrect information by prompting users to verify claims and explore alternative viewpoints during information seeking. User interaction data indicates that users completed an average of 5.35 conversational turns with the Copilot during the evaluation process, suggesting substantive engagement with the verification prompts. Users also made 42.4% fewer page visits than with standard search. This may indicate a more focused evaluation process, but it also reflects the trade-off between chat engagement and broader source exploration that limited improvements in answer correctness.
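To make the two engagement figures concrete, the sketch below shows how an average turn count and a relative reduction in page visits are conventionally computed. The session values are invented for illustration and are not the study's data. That the reported reduction was computed from condition means in exactly this way is an assumption.

```python
from statistics import mean


def percent_reduction(baseline: float, treatment: float) -> float:
    """Relative reduction of `treatment` versus `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - treatment) / baseline


# Hypothetical per-session logs, NOT values from the paper.
copilot_turns = [4, 6, 5, 7]       # conversational turns per copilot session
baseline_visits = [10, 8, 12]      # page visits per standard-search session
copilot_visits = [6, 5, 7]         # page visits per copilot session

avg_turns = mean(copilot_turns)
visit_reduction = percent_reduction(mean(baseline_visits), mean(copilot_visits))

print(f"average turns: {avg_turns}")
print(f"reduction in page visits: {visit_reduction:.1f}%")
```

A relative reduction like this says only that fewer pages were opened. Whether that reflects more focused evaluation or less exploration has to be judged against answer correctness.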

Beyond Information Retrieval: Empowering Informed Judgments

This system transcends conventional fact-checking by adopting a comprehensive framework for evaluating information, with broad applicability extending to crucial areas like medical research and patient care. Rather than simply verifying claims, it encourages users to consider the broader context, source credibility, and potential biases within the information they encounter. This holistic approach moves beyond identifying falsehoods to cultivating a more discerning mindset, enabling individuals to critically assess the reliability and validity of information across diverse domains. The framework’s adaptability positions it as a versatile tool for promoting informed decision-making, not just in response to specific queries, but as an ongoing process of intellectual engagement with the world.

The system doesn’t simply present information; it gently guides users towards more thorough evaluation of sources, employing techniques reminiscent of behavioral ‘nudges’. Rather than overt correction, the copilot encourages deeper engagement with the original material – prompting consideration of author credibility, potential biases, and supporting evidence. This subtle influence doesn’t dictate conclusions, but rather cultivates a more critical mindset, prompting users to actively synthesize information instead of passively accepting it. By encouraging this thoughtful approach, the system aims to move beyond merely identifying misinformation and instead empower individuals to become discerning consumers of information, fostering a habit of careful scrutiny that extends beyond the immediate task.

The system offers more than just a reactive defense against false information; it actively cultivates a more robust approach to evaluating evidence. Studies demonstrate this proactive support strengthens the basis for sound judgment, particularly within the increasingly complicated digital world. Crucially, the copilot maintains comparable levels of accuracy to standard search methods, even when confronted with skewed or biased summaries generated by artificial intelligence. This resilience suggests a valuable capacity to safeguard the integrity of information gathering and preserve reliable results amidst the proliferation of potentially misleading content, bolstering confidence in the decision-making process.

The research highlights a critical interplay between user agency and system support in navigating complex information landscapes. This echoes Donald Davies’ sentiment: “If a design feels clever, it’s probably fragile.” The conversational copilot, while aiming to boost digital literacy and mitigate misinformation, must avoid becoming overly prescriptive. A system that prioritizes thorough source exploration, allowing users to actively engage with and evaluate information, proves more robust than one relying on ‘clever’ shortcuts. The study’s findings suggest that a fragile design, one that oversteps in guiding user judgment, ultimately undermines the very skills it intends to foster, emphasizing the need for elegant simplicity in supporting human information seeking.

Where Do We Go From Here?

This work suggests that interventions aimed at bolstering human judgement in online spaces are not simply about presenting information, but about shaping the process of seeking it. The copilot’s success hinges on a delicate balance: sufficient engagement to prompt critical reflection, yet not so much as to curtail independent exploration of sources. Systems break along invisible boundaries – if a user is nudged too forcefully, the system itself becomes the sole authority, eroding the very skills it intends to cultivate. The observed dependence on copilot ‘boosting’ reveals a critical vulnerability; reliance on external validation, even from a seemingly benevolent AI, can mask underlying deficits in source assessment.

Future research must move beyond assessing whether a copilot works and focus on understanding how it alters a user’s cognitive framework over time. Longitudinal studies are needed to determine whether the benefits observed in controlled settings translate to sustained improvement in real-world information evaluation. Furthermore, the assumption of a singular ‘information seeker’ is overly simplistic. The copilot’s effectiveness will undoubtedly vary based on pre-existing levels of digital literacy, cognitive biases, and individual learning styles.

Ultimately, the challenge lies in designing systems that acknowledge their own limitations. A truly effective copilot will not merely flag misinformation, but will model the process of critical inquiry, encouraging users to question, verify, and ultimately, to trust their own informed judgement. Structure dictates behavior, and a system built on transparency and self-awareness will be far more resilient – and far more valuable – than one that promises easy answers.

Original article: https://arxiv.org/pdf/2601.11284.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 21:21