Author: Denis Avetisyan

New research reveals how automated bots participate in open-source projects and subtly influence the emotional tone of developer interactions.

Analysis of bot activity in open-source development shows distinct participation patterns and a positive impact on reducing uncertainty and increasing expressions of appreciation in human communication.

While collaborative open-source development relies increasingly on automated bots, their nuanced impact on human interaction remains poorly understood. This research, ‘Patterns of Bot Participation and Emotional Influence in Open-Source Development’, investigates bot behavior and emotional effects within the Ethereum ecosystem, revealing participation patterns that differ by type of contribution: pull requests versus issues. We find that even a small percentage of bots not only respond more quickly to code changes but also subtly shift the emotional tone of developer discussions, fostering gratitude and optimism while reducing confusion. Could strategically designed bots therefore play a key role in cultivating more positive and productive open-source communities?

The Evolving Landscape of Collaborative Code Development

Open source projects now function as intricate collaborations between human developers and automated bots, fundamentally reshaping the software creation process. These bots aren’t simply tools; they are active participants, consistently executing tasks ranging from code formatting and documentation updates to complex issue triage and pull request merging. This dynamic creates a unique collaborative landscape where the speed and scalability of automated systems are interwoven with the creativity and problem-solving abilities of human contributors. Consequently, the health and progress of many open source initiatives are increasingly dependent on the seamless interplay between these two forces, demanding a new understanding of collaborative software development where code is co-authored by both people and machines.

The operational backbone of modern open source projects is increasingly supported by automated bots that perform essential, yet often tedious, tasks. These digital collaborators aren’t simply automating code commits; they are proactively safeguarding project integrity through continuous security scanning, identifying vulnerabilities before they can be exploited. Simultaneously, bots expertly manage complex dependency networks, ensuring that projects remain compatible and stable as external libraries evolve. Crucially, they also streamline the continuous integration and delivery (CI/CD) pipeline, automatically building, testing, and deploying code changes – a process that dramatically accelerates development cycles and enables faster iteration. This shift towards automated workflows isn’t about replacing human developers, but rather freeing them to focus on more complex problem-solving and innovative design, ultimately fostering a more robust and rapidly evolving open source landscape.

The pervasive integration of automated bots within open source development necessitates a thorough understanding of their operational patterns and potential consequences. These bots, while designed to streamline processes such as code review, issue triage, and automated testing, exert a substantial influence on project velocity and overall code quality. Analyses of bot activity reveal that seemingly benign actions – like automatic dependency updates or pull request labeling – can inadvertently introduce regressions, create merge conflicts, or disproportionately burden human contributors. Consequently, monitoring bot behavior, identifying anomalous patterns, and establishing clear governance policies are now crucial for maintaining the health and sustainability of open source ecosystems, ensuring that automation enhances, rather than hinders, collaborative development efforts.

Discerning Automated Contributors: A Multifaceted Approach

A reliable bot detection framework is critical for accurate account categorization within open source repositories due to the increasing prevalence of automated accounts. These frameworks move beyond simple rule-based systems by analyzing complex patterns in user activity, allowing for differentiation between legitimate human contributors and automated bots. Accurate categorization is essential for maintaining data integrity, providing meaningful metrics about repository health, and ensuring efficient collaboration. Without a robust framework, metrics such as contributor counts and issue resolution times can be skewed by bot activity, hindering effective project management and community analysis.

The bot detection framework analyzes account behavior specifically within the context of ‘Issues’ and ‘Pull Requests’ to identify automated accounts. This analysis focuses on patterns in activity such as the frequency of comments, the types of actions performed (e.g., opening, closing, labeling), and the consistency of these actions over time. Examination of interactions with these repository features allows the framework to differentiate between typical human contributions and the repetitive, often rule-based, actions characteristic of bots. The framework doesn’t rely on a single indicator, but rather a combination of these behavioral patterns to categorize accounts.

Following manual validation, the bot detection framework achieves a precision of 27.9% and a recall of 89.5%. In other words, it captures most actual bots (89.5%), but only about 28% of the accounts it flags turn out to be bots, so flagged accounts require manual confirmation to eliminate false positives. Analysis of bot behavior revealed a median response time of 34.6 minutes to comments, the same figure reported for the median human response time (34.6 minutes). This similarity suggests that, in certain interactions, bot activity can be difficult to distinguish from human activity based solely on temporal characteristics.
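To make the two figures concrete, here is a minimal sketch of how precision and recall are computed from validation counts. The counts are hypothetical, chosen only so the arithmetic lands near the reported percentages; they are not the study's data, and `precision_recall` is an illustrative helper rather than part of the authors' framework.

```python
def precision_recall(true_positives: int, false_positives: int, false_negatives: int) -> tuple[float, float]:
    """Return (precision, recall) from confusion counts."""
    flagged = true_positives + false_positives       # accounts the detector labeled as bots
    actual_bots = true_positives + false_negatives   # accounts that really are bots
    precision = true_positives / flagged if flagged else 0.0
    recall = true_positives / actual_bots if actual_bots else 0.0
    return precision, recall


# Hypothetical counts (NOT from the paper): 61 accounts flagged, 17 confirmed
# as bots after manual review, and 2 real bots that the detector missed.
precision, recall = precision_recall(true_positives=17, false_positives=44, false_negatives=2)
print(f"precision={precision:.3f} recall={recall:.3f}")
```

A detector tuned this way favors catching nearly every bot at the cost of many false alarms, which is why a manual confirmation step follows it.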

Unveiling Emotional Signatures: Bots Versus Humans

Emotion Classification leverages computational linguistics and machine learning to determine the emotional tone expressed in text. Current implementations utilize pre-trained models, such as RoBERTa-GoEmotions, which has been specifically trained on a large dataset of text labeled with emotional categories. This model analyzes textual input, identifying patterns and linguistic cues associated with emotions like joy, sadness, anger, and fear. The output is typically a probability distribution across these emotion categories, allowing for a quantitative assessment of the emotional content present in a given contribution, be it from a human or an automated bot account. This technique allows researchers to compare the emotional profiles of different user groups and identify potential differences in online communication patterns.

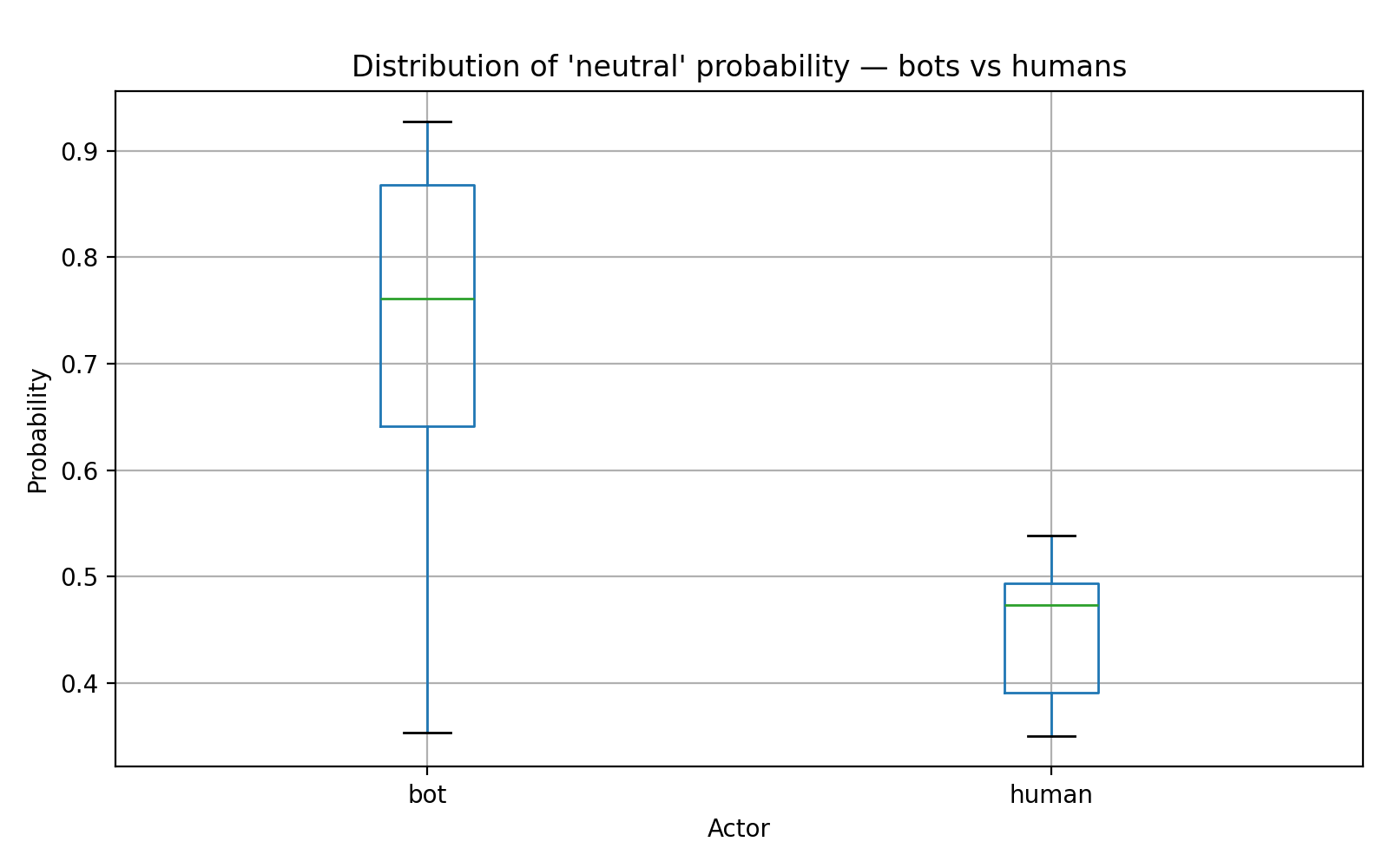

Quantitative analysis of online contributions demonstrates a marked difference in emotional expression between automated accounts (bots) and human users. Specifically, bot comments consistently exhibit a higher degree of neutrality, registering a neutrality score of 0.76. In contrast, human comments display a significantly lower neutrality score of 0.47. This 0.29 difference indicates that bots, on average, convey less emotional content in their textual output compared to human users, suggesting a key distinction in their communicative patterns and a tendency towards emotionally flat language.
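As an illustration of how such group-level scores could be derived, the sketch below averages a per-comment "neutral" score by author type. The record layout, field names, and values are assumptions made for the example; the study's actual aggregation procedure is not described here.

```python
from statistics import mean

# Hypothetical per-comment classifier outputs (NOT the study's data).
# Each record holds the author type and the score assigned to the "neutral" label.
comments = [
    {"author_type": "bot", "neutral": 0.80},
    {"author_type": "bot", "neutral": 0.72},
    {"author_type": "human", "neutral": 0.50},
    {"author_type": "human", "neutral": 0.44},
]


def mean_neutrality(records, author_type):
    """Average the 'neutral' score over all comments written by one author type."""
    return mean(r["neutral"] for r in records if r["author_type"] == author_type)


bot_neutrality = mean_neutrality(comments, "bot")
human_neutrality = mean_neutrality(comments, "human")
gap = bot_neutrality - human_neutrality
print(f"bots={bot_neutrality:.2f} humans={human_neutrality:.2f} gap={gap:.2f}")
```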

Statistical significance of emotional distribution differences between bot and human contributions was assessed using non-parametric tests. The Mann-Whitney U test, employed to compare ranked data, determined that the distributions of emotional scores differed significantly (p < 0.001). Complementing this, the Kolmogorov-Smirnov test, which evaluates the maximum distance between the cumulative distribution functions of two samples, further confirmed a statistically significant difference (p < 0.001). These tests collectively provide quantitative evidence that bots and humans exhibit demonstrably different patterns of emotional expression in online text, beyond mere observation.
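The two test statistics can be sketched in a few lines of plain Python. This is a simplified illustration on made-up scores: it computes only the statistics, not the p-values, and in practice a library such as SciPy (`scipy.stats.mannwhitneyu`, `scipy.stats.ks_2samp`) would supply both. The sample values and function names are assumptions for the example.

```python
from bisect import bisect_right


def mann_whitney_u(xs, ys):
    """U statistic for xs: count of (x, y) pairs with x > y, with ties counted as 0.5."""
    u = 0.0
    for x in xs:
        for y in ys:
            if x > y:
                u += 1.0
            elif x == y:
                u += 0.5
    return u


def ks_statistic(xs, ys):
    """Largest absolute gap between the empirical CDFs of the two samples."""
    sx, sy = sorted(xs), sorted(ys)
    gap = 0.0
    for value in sx + sy:
        cdf_x = bisect_right(sx, value) / len(sx)  # share of xs <= value
        cdf_y = bisect_right(sy, value) / len(sy)  # share of ys <= value
        gap = max(gap, abs(cdf_x - cdf_y))
    return gap


# Hypothetical neutrality scores (NOT the study's data).
bot_scores = [0.81, 0.77, 0.74, 0.79, 0.70]
human_scores = [0.52, 0.40, 0.47, 0.61, 0.35]

u_bots = mann_whitney_u(bot_scores, human_scores)
d_max = ks_statistic(bot_scores, human_scores)
print(u_bots, d_max)
```

Because every hypothetical bot score exceeds every human score, U reaches its maximum (the product of the two sample sizes) and the KS distance reaches 1; real data would fall somewhere in between.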

Decoding Behavioral Correlations and Their Implications

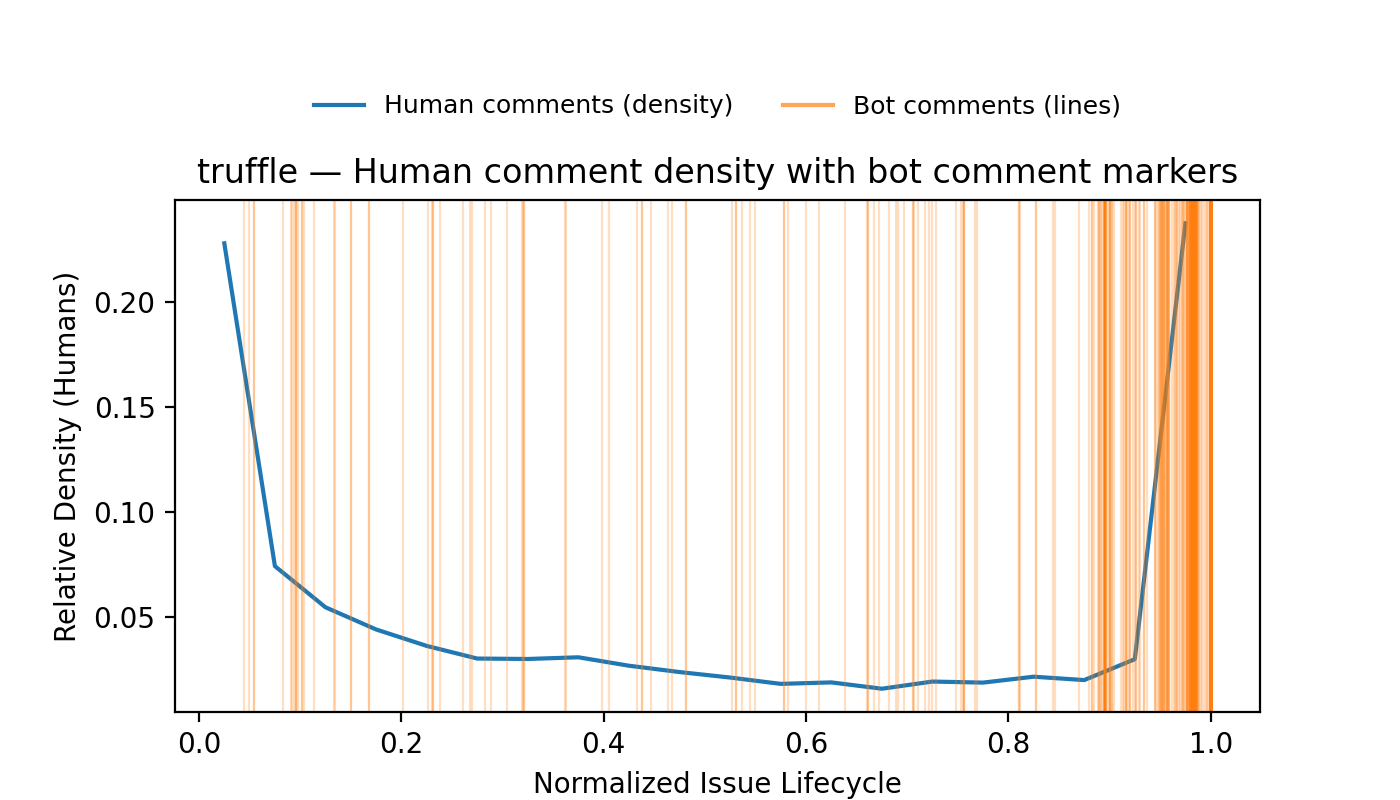

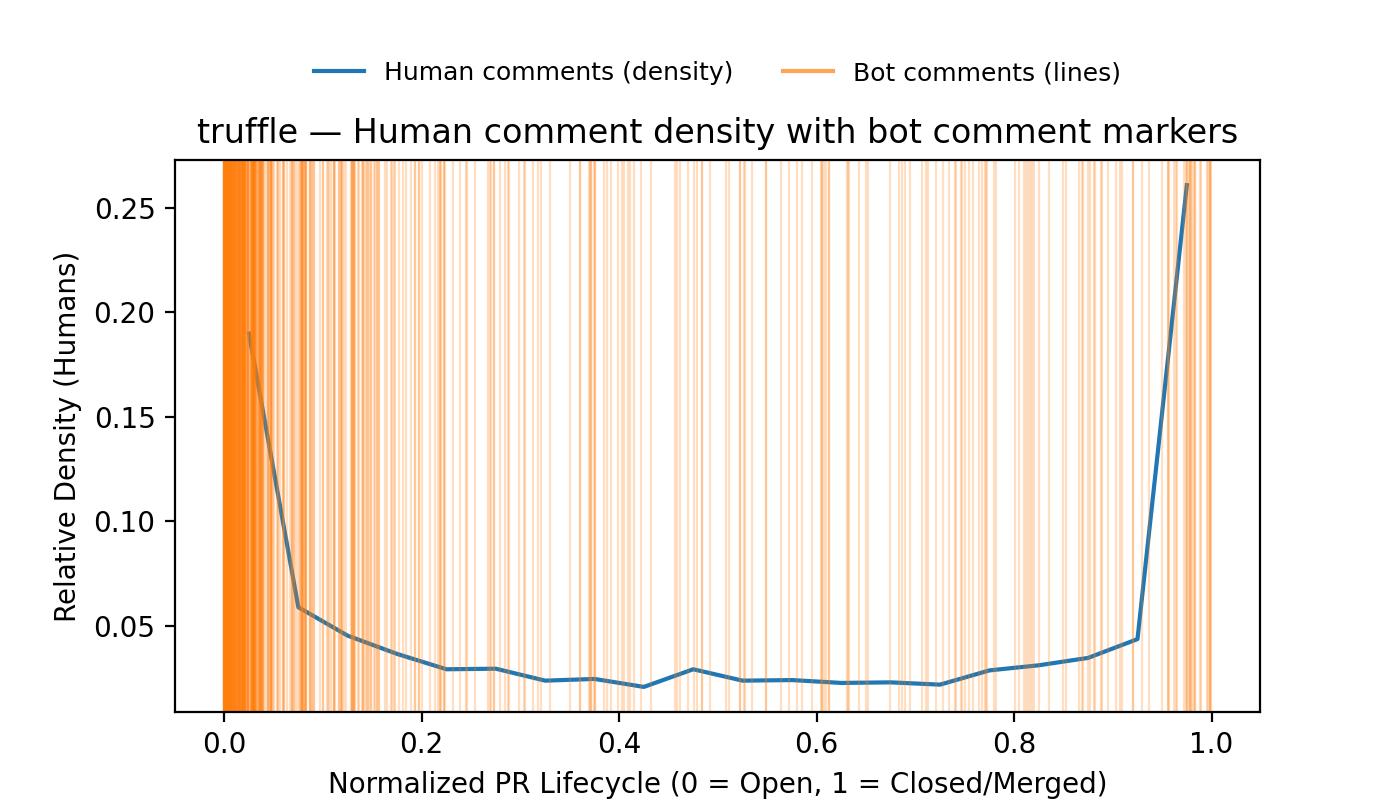

Analysis reveals a notable correlation between a bot’s level of emotional expression and the specific stages of software development it engages with, namely the ‘Issue Lifecycle’ and ‘Pull Request Lifecycle’. Bots don’t exhibit a broad range of emotions; rather, emotional cues – or the lack thereof – appear most prominently during critical junctures in the collaborative process. These patterns suggest that bots aren’t simply executing tasks, but are also, subtly, influencing the emotional tone of interactions surrounding bug reports, feature requests, and code contributions. Further investigation using metrics like sentiment analysis indicates that even minimal emotional signaling from bots can have a measurable impact on how human contributors perceive and respond to automated interventions, highlighting the potential for designing bots that foster more positive and productive collaborative environments.

Analysis of automated bot communication within software development platforms reveals a consistent pattern of neutral or minimal emotional expression. These bots, designed to facilitate tasks like code review and issue management, largely avoid language indicative of positive or negative sentiment. This tendency, while functionally efficient, potentially shapes the perceived collaborative environment for human contributors. The absence of emotional cues – even subtle acknowledgements or expressions of support – could lead to a perception of impersonal interaction, potentially diminishing feelings of rapport or shared purpose within the development team. Further investigation is needed to determine the long-term effects of this communication style on team dynamics and overall project success, but the initial findings suggest a noteworthy difference between human-to-human and human-to-bot emotional exchanges.

Analysis of human responses to bot interventions within software development workflows reveals a notable shift in expressed sentiment. Specifically, comments appearing after a bot’s action show a 42.1% increase in expressions of gratitude, coupled with a 19.2% reduction in indicators of confusion. This suggests that bots, when effectively integrated, can positively influence the collaborative environment and contributor experience. To quantify how far the emotional distributions of human and bot communication diverge, the researchers employed the Jensen-Shannon Divergence (JSD), a symmetric, bounded measure of the distance between two probability distributions. The findings indicate that, despite bots typically exhibiting neutral emotional tones, their contributions facilitate clearer communication and foster a more appreciative response from human collaborators.
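For readers unfamiliar with the metric, the following is a minimal sketch of the Jensen-Shannon Divergence between two discrete distributions, using base-2 logarithms so the result lies between 0 (identical) and 1 (no overlap). The four-category emotion distributions are hypothetical and serve only to exercise the function.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence D(p || q) in bits; terms with p_i == 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jensen_shannon_divergence(p, q):
    """Average KL divergence of p and q from their midpoint distribution m."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical distributions over (neutral, gratitude, confusion, other).
# These are illustrative values, NOT the study's measurements.
bot_emotions = [0.76, 0.10, 0.04, 0.10]
human_emotions = [0.47, 0.20, 0.15, 0.18]

jsd = jensen_shannon_divergence(bot_emotions, human_emotions)
print(f"JSD = {jsd:.4f} bits")
```

Unlike plain KL divergence, JSD is symmetric and stays finite even when one distribution assigns zero probability to a category, which makes it convenient for comparing sparse emotion profiles.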

Towards Empathetic Automation: A Vision for the Future

Investigations into artificial emotional intelligence suggest that bots capable of displaying appropriately nuanced responses have the potential to significantly enhance collaborative settings. Current research focuses not simply on detecting human emotion, but on formulating automated reactions that are contextually relevant and promote positive interactions. This involves exploring computational models of empathy, allowing bots to tailor their communication style – perhaps offering encouragement when a contributor faces difficulty, or expressing acknowledgement of successful contributions. Ultimately, the goal is to move beyond purely transactional bot interactions and cultivate a more supportive and productive environment, potentially unlocking greater creativity and efficiency within collaborative projects like those found in the Ethereum ecosystem and beyond.

Researchers investigating the effects of emotionally-aware bots on collaborative projects can quantify changes in human engagement using the Wilcoxon Signed-Rank Test. This non-parametric statistical method compares related samples: in this case, a contributor’s engagement before and after interaction with a bot exhibiting varying degrees of emotional intelligence. The test assesses whether observed differences in engagement scores are statistically significant without assuming normally distributed data, which matters because engagement metrics such as edit counts or time spent are rarely normally distributed. By analyzing paired data (for example, time spent contributing, number of edits, or positive feedback given), the Wilcoxon Signed-Rank Test provides a robust means of determining whether emotionally-aware bots genuinely foster a more positive and productive collaborative environment, offering valuable insights for the development of empathetic automation within complex systems like the Ethereum ecosystem.
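A minimal sketch of the signed-rank statistic follows, assuming paired before/after engagement counts per contributor. The data are invented for illustration, the function returns only the rank sums (no p-value), and `wilcoxon_signed_rank` is a hypothetical helper; a statistics library would normally handle the significance calculation.

```python
def wilcoxon_signed_rank(before, after):
    """Return (W_plus, W_minus): rank sums of positive and negative paired differences.

    Zero differences are dropped; tied absolute differences share an average rank.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ordered = sorted(diffs, key=abs)
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        # Extend j over the run of differences with the same absolute value.
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus


# Hypothetical weekly edit counts per contributor (NOT real data).
edits_before = [3, 5, 2, 4, 6]
edits_after = [5, 6, 2, 3, 9]

w_plus, w_minus = wilcoxon_signed_rank(edits_before, edits_after)
print(w_plus, w_minus)
```

The smaller of the two rank sums is the value usually compared against the test's critical values; a large imbalance between them indicates a consistent shift in one direction.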

The potential for truly collaborative automation hinges on a nuanced understanding of how bots interact with humans, not just as tools, but as social entities. Research indicates that the effectiveness of automated systems within complex environments, such as the Ethereum ecosystem, is deeply intertwined with how bot behavior is perceived and whether appropriate emotional cues are conveyed. By carefully analyzing the dynamics of collaboration (how contributions are made, conflicts are resolved, and trust is established), developers can design bots capable of adapting their responses to foster more positive and productive interactions. This goes beyond simply mimicking human emotion; it requires a system that understands the impact of its actions on human contributors, paving the way for automated agents that are not only efficient, but genuinely empathetic and collaborative partners in a widening range of applications.

The study of bot participation in open-source development reveals a fascinating interplay between automated agents and human contributors. The research highlights how bots, though generally neutral in emotional expression, can subtly shift the overall emotional tone of developer communication, particularly by fostering clarity and reducing ambiguity – a critical component of successful collaboration. This aligns with Grace Hopper’s observation that, “It’s easier to ask forgiveness than it is to get permission.” Bots, by proactively addressing issues and clarifying uncertainties, effectively circumvent potential roadblocks and maintain project momentum. The research demonstrates that structure dictates behavior; bots, by providing consistent and predictable responses, influence the flow of communication and contribute to a more positive collaborative environment. Good architecture is invisible until it breaks, and only then is the true cost of decisions visible.

The Road Ahead

This investigation into automated participation reveals a predictable asymmetry: bots engage differentially with the structured demands of pull requests versus the more ambiguous terrain of issue discussions. This isn’t surprising; a system optimizes for what it can measure. The observation that bots exhibit a comparatively neutral emotional tone, while simultaneously nudging human communication towards positivity, hints at a fundamental principle. Every new dependency – here, the reliance on automated contributors – is the hidden cost of freedom. It demands a recalibration of the entire communicative ecosystem.

Future work must address the limitations of current emotion detection algorithms within the specialized context of technical discourse. Nuance is easily lost in translation, and the reduction of ‘uncertainty’, as measured by these tools, may simply reflect a chilling effect on genuine debate. A more holistic approach requires analyzing not only what is communicated, but also how the structure of the platform itself encourages or discourages certain emotional responses.

Ultimately, the question isn’t whether bots can influence emotional tone, but whether they can participate in the creation of truly resilient, adaptive systems. Such systems aren’t built on positivity alone, but on the capacity to navigate complexity, embrace disagreement, and learn from failure – qualities that, as yet, remain distinctly human.

Original article: https://arxiv.org/pdf/2601.11138.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 19:33