Author: Denis Avetisyan

Researchers have developed a novel approach to building conversational agents that intelligently assess and address gaps in user knowledge to deliver truly helpful assistance.

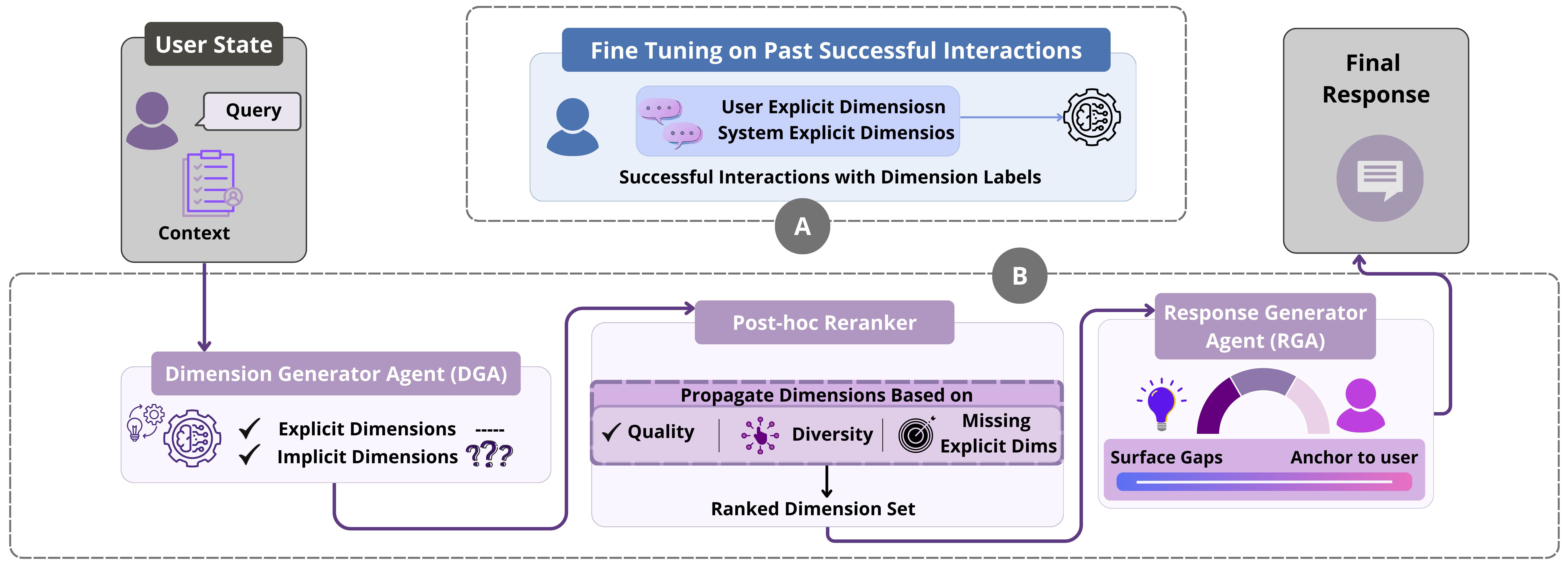

This review introduces the PROPER framework, a dimension-based system for calibrated proactivity and knowledge gap navigation in large language model agents.

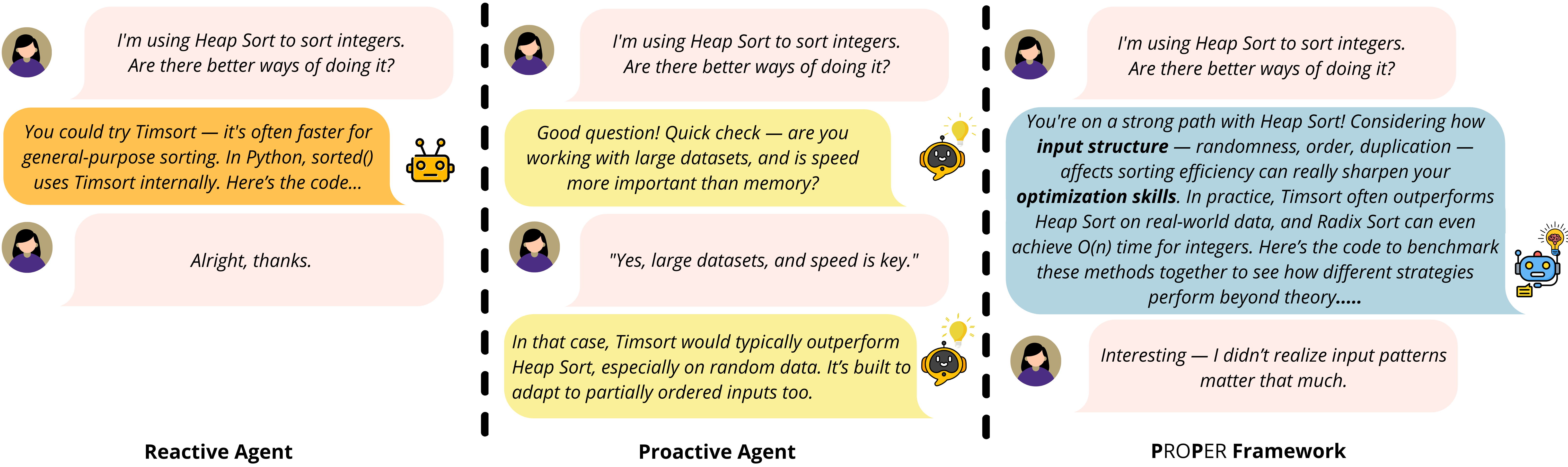

While most language assistants await explicit requests, critical user needs often remain unaddressed, a paradox this work directly tackles. In ‘The PROPER Approach to Proactivity: Benchmarking and Advancing Knowledge Gap Navigation’, the authors introduce ProPer, a two-agent architecture designed to proactively navigate these unmet needs by explicitly modeling underlying knowledge gaps. The framework achieves substantial gains in quality and initiative appropriateness across multiple domains by balancing explicit requests with dynamically generated, implicit dimensions relevant to the user’s task. Could this calibrated proactivity represent a key step toward truly intelligent and helpful conversational agents?

The Limits of Reactive Assistance

Contemporary language models demonstrate remarkable proficiency in fulfilling explicitly stated requests, effectively operating as advanced response systems. However, their capabilities are largely confined to the immediate query, revealing a significant limitation in proactively addressing underlying user needs. These models typically lack the capacity to infer unstated goals or anticipate future requirements, meaning assistance remains tethered to direct prompts. While adept at answering what is asked, they struggle with understanding why it is being asked, hindering their potential in complex, multi-stage interactions that demand contextual awareness and foresight. This reactive nature restricts their utility beyond simple information retrieval, preventing them from truly serving as helpful, intelligent assistants.

The current generation of language models, while proficient at fulfilling direct commands, often falters when faced with situations demanding foresight and understanding of the broader context. This limitation stems from a fundamentally reactive design; assistance is only offered in response to explicit prompts, hindering effectiveness in complex, dynamic scenarios. Consider tasks requiring sustained support, such as troubleshooting a multifaceted technical issue or navigating a lengthy creative project – these benefit significantly from anticipating potential roadblocks and offering guidance before a user explicitly requests it. Without this proactive capability, the models remain tools for immediate execution rather than true collaborative partners, diminishing their value in situations where nuanced understanding and contextual awareness are crucial for success.

The current paradigm of artificial intelligence largely centers on reactive assistance – systems respond effectively to direct prompts, but fall short when users require support beyond explicitly stated needs. Truly helpful AI demands a transition beyond this model, necessitating systems capable of predicting user intent and proactively offering relevant information or solutions. This isn’t simply about faster response times; it’s about shifting from answering questions as asked to understanding the underlying goal and offering assistance even before it’s requested. Such a capability requires sophisticated modeling of user context, encompassing past interactions, inferred goals, and potential future needs, ultimately transforming AI from a tool that reacts to one that anticipates and genuinely assists.

The development of genuinely helpful artificial intelligence hinges on a robust understanding of user intent, moving beyond simple responsiveness to anticipate underlying needs. Current systems primarily react to explicit requests, but a true shift requires models capable of inferring what a user wants to achieve, not just processing what they ask. This necessitates advanced techniques in natural language understanding, allowing AI to decipher context, recognize implicit goals, and even predict future actions. Successfully modeling user intent involves disentangling stated objectives from unstated assumptions, and recognizing the broader situation influencing the request – a capability crucial for providing truly proactive and useful assistance. The ability to accurately interpret intent will ultimately define the difference between an AI that merely responds and one that genuinely helps.

ProPer: A Modular Architecture for Proactive Assistance

ProPer addresses the shortcomings of traditional, purely reactive agent architectures, which operate solely on immediate inputs without internal state or predictive capabilities. These systems often struggle with complex tasks requiring contextual understanding or proactive behavior. ProPer distinguishes itself by introducing an internal architecture that moves beyond simple stimulus-response mechanisms. Instead of directly generating a response to each input, ProPer incorporates processes for internal knowledge representation and gap identification. This allows the agent to evaluate the sufficiency of its current information before acting, and to initiate information-seeking behavior independent of external prompts – a capability absent in purely reactive designs. This shift enables more robust and adaptable performance in dynamic and uncertain environments.

ProPer’s architecture distinguishes between knowledge gap identification and response generation as discrete processes. Traditional agent systems often integrate these functions, leading to responses directly triggered by input without internal assessment of informational needs. ProPer, however, first analyzes incoming data to determine what information is lacking to provide a complete or optimal response. This separation allows the agent to formulate requests for missing data before constructing a reply, resulting in more informed and contextually relevant assistance. Consequently, the agent can proactively seek clarification or additional information, facilitating a more nuanced and effective interaction beyond simple reactive behavior.

ProPer’s modular design separates knowledge gap identification from response generation, enabling proactive information retrieval. Unlike systems that only react to direct prompts, ProPer can independently assess its understanding of a given context and, when necessary, initiate searches for missing information. This is achieved by a dedicated module responsible for monitoring internal states and external inputs, flagging areas where knowledge is insufficient to formulate a complete or confident response. The agent then autonomously queries relevant sources, integrating the newly acquired information before generating an output, effectively anticipating user needs without explicit instruction.
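The separation described above can be illustrated with a minimal sketch. This is not the paper's implementation: the names `GapReport`, `identify_gaps`, `generate_response`, and the `DIMENSIONS` table are hypothetical, and a simple keyword check stands in for what would be a language model call in a real agent.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class GapReport:
    """Output of the gap-identification stage (hypothetical structure)."""
    explicit_request: str
    implicit_dimensions: List[str] = field(default_factory=list)


def identify_gaps(query: str, known_dimensions: Dict[str, List[str]]) -> GapReport:
    """Stage 1: list the task dimensions the user did not mention.

    A keyword check is used here as a stand-in for an LLM call.
    """
    lowered = query.lower()
    missing = [
        name
        for name, keywords in known_dimensions.items()
        if not any(keyword in lowered for keyword in keywords)
    ]
    return GapReport(explicit_request=query, implicit_dimensions=missing)


def generate_response(report: GapReport) -> str:
    """Stage 2: answer the explicit request, then surface the gaps."""
    lines = [f"Answer to: {report.explicit_request}"]
    for dimension in report.implicit_dimensions:
        lines.append(f"You may also want to consider: {dimension}")
    return "\n".join(lines)


# Hypothetical dimensions for a coding-help task (illustrative only).
DIMENSIONS = {
    "time complexity": ["complexity", "fast", "efficient"],
    "edge cases": ["edge case", "empty", "overflow"],
}

report = identify_gaps("How do I sort a list efficiently?", DIMENSIONS)
reply = generate_response(report)
print(reply)
```

Because the two stages only share the `GapReport` object, either one could be swapped out (for example, replacing the keyword check with a model call) without touching the other.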

ProPer’s Calibration mechanism governs assistance delivery by evaluating the agent’s confidence in its current knowledge state and the potential utility of offering information. This process utilizes a confidence score, derived from internal model assessment, to determine if a user would benefit from a proactive response. Calibration isn’t simply about avoiding interruptions; it dynamically adjusts the type of assistance offered – ranging from subtle hints to detailed explanations – based on the assessed gap between the user’s likely understanding and the agent’s knowledge. A low confidence score, coupled with a perceived user need, triggers assistance, while high confidence suppresses it, preventing unnecessary or redundant information delivery. This nuanced approach ensures interventions are both timely and relevant, maximizing user experience and minimizing disruption.
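As a rough sketch of the gating rule as stated in the paragraph above, the function below suppresses assistance when confidence is high or perceived need is low, and otherwise scales the form of assistance with the perceived need. The function name, the `Assistance` levels, and all three thresholds are assumptions chosen for illustration; the source does not give concrete values.

```python
from enum import Enum


class Assistance(Enum):
    NONE = "none"
    HINT = "hint"
    DETAILED = "detailed explanation"


def calibrate(
    confidence: float,
    user_need: float,
    confidence_cutoff: float = 0.7,  # hypothetical threshold
    need_cutoff: float = 0.3,        # hypothetical threshold
    detail_cutoff: float = 0.7,      # hypothetical threshold
) -> Assistance:
    """Decide whether, and how strongly, to offer proactive assistance.

    Both scores are expected in [0, 1]. High confidence or low perceived
    need suppresses assistance; otherwise the level grows with the need.
    """
    if not (0.0 <= confidence <= 1.0 and 0.0 <= user_need <= 1.0):
        raise ValueError("confidence and user_need must lie in [0, 1]")
    if confidence >= confidence_cutoff or user_need < need_cutoff:
        return Assistance.NONE
    if user_need >= detail_cutoff:
        return Assistance.DETAILED
    return Assistance.HINT


print(calibrate(confidence=0.2, user_need=0.5).value)
print(calibrate(confidence=0.9, user_need=0.9).value)
```

Keeping the thresholds as parameters makes it easy to tune how eager the agent is without changing the decision logic.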

ProPer in Action: Demonstrating Performance Across Domains

In the Medical domain, ProPer consistently delivers clinical insights that extend beyond the scope of the initial patient query. This functionality is achieved through its ability to proactively identify relevant medical concepts and relationships, allowing it to offer supplementary information and potential considerations not explicitly requested. Evaluations demonstrate ProPer’s capacity to synthesize information and generate responses that address underlying health concerns, contributing to a more comprehensive understanding of patient needs and supporting informed decision-making. Specifically, in multi-turn interactions, ProPer successfully indicated preferences in 11 out of 12 evaluated scenarios, signifying its proficiency in maintaining context and providing pertinent, extended clinical reasoning.

In the Code-Contests domain, ProPer facilitates developer workflows by offering assistance with complex programming challenges. This proactive support extends beyond simple code completion; ProPer analyzes problem statements and provides relevant code snippets, algorithmic suggestions, and debugging assistance. Evaluation indicates ProPer successfully identified user preferences in 9 out of 12 multi-turn interactions focused on coding problems, demonstrating an ability to anticipate developer needs and offer targeted support throughout the problem-solving process. This assistance aims to reduce development time and improve code quality for participants in coding contests and professional software development scenarios.

In the Recommendation domain, ProPer improves user experience by leveraging conversational history to predict user preferences and offer relevant item suggestions. This proactive approach extends beyond simply responding to explicit requests; ProPer analyzes preceding interactions to infer implicit needs and tailor recommendations accordingly. Testing demonstrates ProPer successfully identified user preferences in all 12 multi-turn conversations within the PWAB dataset, indicating a high degree of accuracy in anticipating desired items and enhancing the overall recommendation process.

Quantitative analysis reveals ProPer consistently outperforms both baseline Large Language Models (LLMs) and Chain-of-Thought prompting techniques across a diverse set of benchmark datasets. Specifically, ProPer achieves a higher mean score when evaluated on the Medical, Code-Contests, and PWAB (recommendation) datasets. This performance advantage isn’t isolated to specific tasks; the consistently elevated mean scores indicate a generalized improvement in ProPer’s ability to provide accurate and relevant responses across these disparate application areas, suggesting a robust and adaptable architecture.

Quantitative analysis across multiple datasets demonstrates ProPer’s consistent performance gains over base Large Language Models (LLMs). Specifically, ProPer achieved a superior outcome in 84% of evaluated instances. This metric was calculated by comparing ProPer’s responses to those of baseline LLMs across the Medical, Code-Contests, and PWAB domains. The consistently high percentage points to a repeatable advantage for ProPer in providing accurate and relevant assistance, regardless of the specific application area.
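A win rate of this kind is typically computed by pairing each candidate response with the baseline response to the same prompt and counting how often the candidate scores higher. The sketch below shows that calculation under stated assumptions: the `win_rate` function is hypothetical, ties are counted as non-wins (the source does not say how ties were handled), and the scores are toy values, not results from the paper.

```python
from typing import Sequence


def win_rate(candidate_scores: Sequence[float],
             baseline_scores: Sequence[float]) -> float:
    """Fraction of paired instances where the candidate scores strictly higher.

    Ties count as non-wins, which is an assumption of this sketch.
    """
    if len(candidate_scores) != len(baseline_scores):
        raise ValueError("score lists must be paired one-to-one")
    if not candidate_scores:
        raise ValueError("at least one paired instance is required")
    wins = sum(c > b for c, b in zip(candidate_scores, baseline_scores))
    return wins / len(candidate_scores)


# Toy scores for illustration only; these are not figures from the paper.
toy_candidate = [4, 5, 3, 4, 2]
toy_baseline = [3, 4, 3, 2, 3]
print(f"{win_rate(toy_candidate, toy_baseline):.0%}")
```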

Evaluations of ProPer’s performance in multi-turn conversational scenarios demonstrate a consistent ability to identify and incorporate user preferences. Across 12 interactions within the Medical domain, ProPer successfully indicated preference alignment in 11 cases. Performance in the Code-Contests domain yielded preference indication in 9 of 12 interactions, while the PWAB domain achieved full preference alignment in all 12 interactions. These results indicate ProPer’s capacity to maintain contextual understanding and tailor responses effectively over extended conversational exchanges.
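For readers who prefer rates to raw counts, the short calculation below converts the three reported counts into per-domain percentages and a pooled figure. The counts come from the paragraph above; the pooled rate is simple arithmetic on them and is not a number reported in the source.

```python
from fractions import Fraction

# (preferences indicated, interactions evaluated) as reported above.
results = {
    "Medical": (11, 12),
    "Code-Contests": (9, 12),
    "PWAB": (12, 12),
}

rates = {domain: Fraction(hits, total) for domain, (hits, total) in results.items()}

# Pooled rate over all 36 interactions (derived here, not reported in the source).
total_hits = sum(hits for hits, _ in results.values())
total_interactions = sum(total for _, total in results.values())
overall = Fraction(total_hits, total_interactions)

for domain, (hits, total) in results.items():
    print(f"{domain}: {hits}/{total} = {float(rates[domain]):.1%}")
print(f"Overall: {total_hits}/{total_interactions} = {float(overall):.1%}")
```

With only 12 interactions per domain, a single conversation moves a rate by more than eight percentage points, so differences between domains should be read with that granularity in mind.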

ProPer’s success across the Medical, Code-Contests, and Recommendation domains is attributed to its capacity for personalized assistance. This is evidenced by its consistently higher mean scores and outperformance of baseline Large Language Models (LLMs) and Chain-of-Thought prompting techniques across multiple datasets. Specifically, ProPer successfully identified user preferences in the vast majority of multi-turn interactions (11 out of 12 in the Medical domain, 9 out of 12 in the Code-Contests domain, and all 12 in the PWAB domain), indicating a robust ability to tailor responses to individual needs and maintain context throughout a conversation. This personalized approach extends beyond simply answering initial queries, enabling ProPer to provide proactive insights and relevant suggestions aligned with evolving user requirements.

Beyond Baseline: The Impact of ProPer’s Proactive Design

While Chain-of-Thought (CoT) prompting has proven valuable in guiding large language models through complex reasoning, its reliance on reactive knowledge – information accessed only when explicitly needed within the reasoning chain – presents limitations. This approach often struggles when a problem demands anticipating relevant information before it’s directly requested, hindering performance in scenarios requiring broader contextual understanding. CoT excels at processing information presented in the prompt, but lacks the capacity to proactively seek out and integrate external knowledge that might be crucial for a more complete or nuanced response. Consequently, its effectiveness diminishes when tackling tasks demanding foresight, background understanding, or the synthesis of information beyond the immediate scope of the query, highlighting a need for systems capable of anticipating informational needs.

Recent evaluations indicate that ProPer consistently achieves superior performance compared to traditional Chain-of-Thought (CoT) prompting across a diverse range of tasks. This improvement is largely attributed to ProPer’s innovative modular architecture, which separates knowledge retrieval from response generation. Unlike CoT, which generates reasoning steps and answers sequentially, ProPer proactively identifies and integrates relevant information before formulating a response. This decoupling allows the system to leverage a broader knowledge base and refine its reasoning process, resulting in more accurate and insightful outputs. The modularity also enhances adaptability, enabling ProPer to incorporate new knowledge sources and reasoning strategies with greater ease than its CoT counterparts, solidifying its position as a more robust and versatile approach to complex problem-solving.

Beyond simply achieving higher accuracy scores, ProPer distinguishes itself through the quality of its assistance, fostering more productive dialogues with users. Traditional language models often present information reactively, only addressing what is directly requested; ProPer, however, proactively anticipates potential user needs by integrating relevant knowledge before a question is fully formed. This anticipatory approach doesn’t just deliver answers, it constructs a more fluid and insightful conversational experience, offering clarifying details and related insights that enhance understanding. Consequently, interactions with ProPer feel less like interrogations and more like collaborative explorations, ultimately leading to more engaged users and a greater sense of helpfulness – a shift that moves beyond mere performance metrics to address the core goal of truly intelligent assistance.

Current approaches to artificial intelligence often conflate the processes of acquiring knowledge and formulating a response, limiting the potential for nuanced and insightful interactions. Recent findings indicate a significant advantage in systems that distinctly separate these functions; by first proactively identifying relevant information before constructing an answer, agents demonstrate a marked improvement in performance. This decoupling allows for a more thorough understanding of the context, enabling the generation of responses that are not only accurate but also more comprehensive and helpful. The ability to independently seek and integrate knowledge is increasingly recognized as a cornerstone of true intelligence, suggesting that this modular architecture represents a crucial advancement towards creating AI agents capable of genuine reasoning and problem-solving.

The pursuit of calibrated proactivity, as detailed in the PROPER framework, inherently demands a holistic understanding of system dependencies. Every new capability added to an LLM agent, every attempt to bridge a knowledge gap, introduces a new layer of complexity. As Barbara Liskov aptly stated, “It’s one of the things I’ve learned: that you have to be willing to change your mind.” This resonates deeply with the core tenet of PROPER, the need for continuous assessment and adaptation as the agent interacts with users and uncovers new informational voids. Ignoring these dependencies, striving for proactive assistance without acknowledging the hidden costs, risks creating a fragile system prone to unexpected behavior and ultimately undermines the agent’s effectiveness. The framework advocates a measured approach, acknowledging that true intelligence lies not in boundless ambition, but in elegant restraint and careful navigation of the known and unknown.

Where Do We Go From Here?

The PROPER framework, with its emphasis on explicit knowledge gap navigation, represents a step – a small one, admittedly – toward agents that don’t simply talk at users. It’s tempting to envision a future of seamless, anticipatory assistance. However, if the system looks clever, it’s probably fragile. The current iteration relies on a rather neat, dimension-based representation of knowledge, which feels… tidy. Nature rarely favors tidiness. Scaling this approach beyond carefully curated domains will necessitate a reckoning with the inherent messiness of real-world knowledge, and the limitations of any fixed dimensionality.

A significant challenge remains in defining, and more importantly, measuring, a ‘knowledge gap’ with sufficient granularity to drive genuinely useful proactivity. The calibration of initiative, the delicate balance between assistance and intrusion, isn’t merely a technical problem; it’s a question of situated cognition and trust. One suspects the most fruitful advancements will emerge not from more sophisticated algorithms, but from a deeper understanding of the human side of the interaction.

Ultimately, architecture is the art of choosing what to sacrifice. PROPER prioritizes explicit knowledge representation. Future work might explore the trade-offs between this explicitness and more implicit, learned models of user need. The pursuit of truly proactive agents is, perhaps, less about building a perfect mind-reader and more about accepting the inherent limitations of prediction, and designing systems that gracefully handle uncertainty.

Original article: https://arxiv.org/pdf/2601.09926.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-19 06:02