Author: Denis Avetisyan

A new framework, SyncTwin, enables robots to quickly create and maintain accurate digital representations of their surroundings for safe and efficient grasping of objects, even in challenging environments.

SyncTwin combines fast RGB-only 3D reconstruction with real-time synchronization to achieve robust sim-to-real transfer for robotic grasping and collision avoidance.

Despite advances in robotic manipulation, robust and safe grasping remains challenging in dynamic, visually occluded environments. This paper introduces SyncTwin: Fast Digital Twin Construction and Synchronization for Safe Robotic Grasping, a novel framework addressing this limitation through rapid 3D reconstruction and real-time synchronization. By combining RGB-only reconstruction with colored-ICP registration, SyncTwin enables collision-free motion planning in simulation and safe execution on real robots via a closed-loop system. Could this approach unlock more adaptable and reliable robotic systems capable of operating seamlessly in complex, real-world scenarios?

The Fragility of Embodied Intelligence

Conventional robotic systems often falter when confronted with the unpredictable nature of real-world environments. These machines typically depend on complete and static information, a luxury rarely afforded outside controlled laboratory settings. The reliance on pre-existing maps or the limitations of sensor ranges creates a fundamental fragility; an unanticipated obstacle, a moving person, or even a shifting shadow can disrupt operations and compromise safety. This difficulty stems from an inability to effectively process incomplete information and adapt to rapidly changing scenes, hindering the deployment of robots in dynamic spaces like homes, hospitals, or construction sites. Consequently, a robot’s capacity to function reliably diminishes significantly when faced with the messy, unscripted reality of the world around it, limiting its overall adaptability and usefulness.

Robotic systems designed with dependence on static, pre-mapped environments or constrained by limited sensor ranges exhibit significant vulnerabilities when confronted with real-world complexity. These systems often struggle with even minor deviations from their expected surroundings, encountering difficulties when faced with unforeseen obstacles or the presence of moving objects. This fragility arises because their operational parameters are tightly coupled to a specific, unchanging depiction of the world; any discrepancy between the map and reality can induce errors in path planning, manipulation, or even lead to complete operational failure. Consequently, robots operating under these constraints demonstrate a lack of robustness, hindering their deployment in dynamic and unpredictable settings where adaptability is paramount for reliable performance.

The pervasive challenge of partial observability significantly impedes a robot’s ability to function effectively in unpredictable environments. Unlike simulations or carefully controlled labs, real-world scenarios rarely present a complete picture; sensors have limited range, objects are often occluded from view, and dynamic elements introduce constant change. Consequently, reliable grasp planning becomes difficult, as a robot may attempt to secure an object that has moved or is obscured upon approach. Similarly, safe navigation is compromised when a robot bases its path on incomplete information, increasing the risk of collisions with unseen obstacles or moving agents. This fundamental limitation necessitates the development of robotic systems capable of reasoning under uncertainty, predicting future states, and adapting plans in real-time to overcome the inherent ambiguity of complex, real-world settings.

A fundamental limitation of current robotic systems lies in their difficulty maintaining a consistent and current model of the surrounding environment. These systems often struggle not because of sensor limitations alone, but because they fail to integrate perceptual data into a persistent understanding of the world. This disconnect between sensing and acting – the gap between perception and action – results in reactive behaviors rather than proactive planning. Without a continuously updated environmental representation, robots are easily disrupted by unexpected changes, hindering reliable navigation and manipulation. Effectively, the robot operates with a fragmented view, unable to predict or anticipate consequences, and thus compromising its ability to function robustly in dynamic, real-world scenarios. Bridging this gap requires moving beyond simple object detection to creating a cohesive, temporally consistent map that allows for informed decision-making and adaptable behavior.

The Dynamic Digital Twin: A Necessary Evolution

Traditional Digital Twin implementations typically create a static, point-in-time representation of a physical asset or environment. While valuable for certain applications, this approach proves inadequate when dealing with dynamic scenarios involving change over time. Real-world systems are rarely static; they evolve due to external factors, internal processes, or a combination thereof. A static Digital Twin lacks the ability to reflect these changes, leading to discrepancies between the virtual and physical realities and hindering its usefulness for real-time monitoring, predictive maintenance, or adaptive control. Consequently, a Digital Twin’s fidelity and value diminish rapidly in dynamic environments unless it can continuously update to mirror the current state of the physical world.

The Dynamic Digital Twin framework establishes a virtual environment that maintains continuous synchronization with its physical counterpart. This is achieved by consistently updating the virtual representation to reflect real-time changes occurring in the physical world. Unlike static digital twins which represent a single point in time, the Dynamic Digital Twin facilitates ongoing mirroring of state, allowing for analysis and prediction based on current conditions. The framework relies on rapid data acquisition and processing to minimize latency between physical events and their virtual representation, enabling applications requiring up-to-date insights and responsive control.
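The synchronization idea can be sketched as a loop that compares each observed object position with the twin's copy and writes back only the ones that drifted beyond a tolerance. This is a minimal sketch under stated assumptions: the `DigitalTwin` class, its `sync` method, and the 1 cm tolerance are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field
import math


@dataclass
class DigitalTwin:
    """Hypothetical twin state: object name -> (x, y, z) position in metres."""
    poses: dict = field(default_factory=dict)
    tolerance_m: float = 0.01  # assumed drift threshold, not from the paper

    def sync(self, observed: dict) -> list:
        """Update the twin from observed positions; return the names that changed."""
        changed = []
        for name, pos in observed.items():
            old = self.poses.get(name)
            # New objects and objects that moved beyond the tolerance are written back.
            if old is None or math.dist(old, pos) > self.tolerance_m:
                self.poses[name] = pos
                changed.append(name)
        return changed


twin = DigitalTwin()
first = twin.sync({"mug": (0.40, 0.10, 0.05), "box": (0.55, -0.20, 0.08)})
# The mug moves 5 cm; the box jitters by 1 mm, which is below the tolerance.
second = twin.sync({"mug": (0.45, 0.10, 0.05), "box": (0.551, -0.20, 0.08)})
print(first, second)
```

Filtering on a tolerance keeps sensor jitter from triggering replanning on every frame; a real system would compare full 6-DoF poses rather than positions alone.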

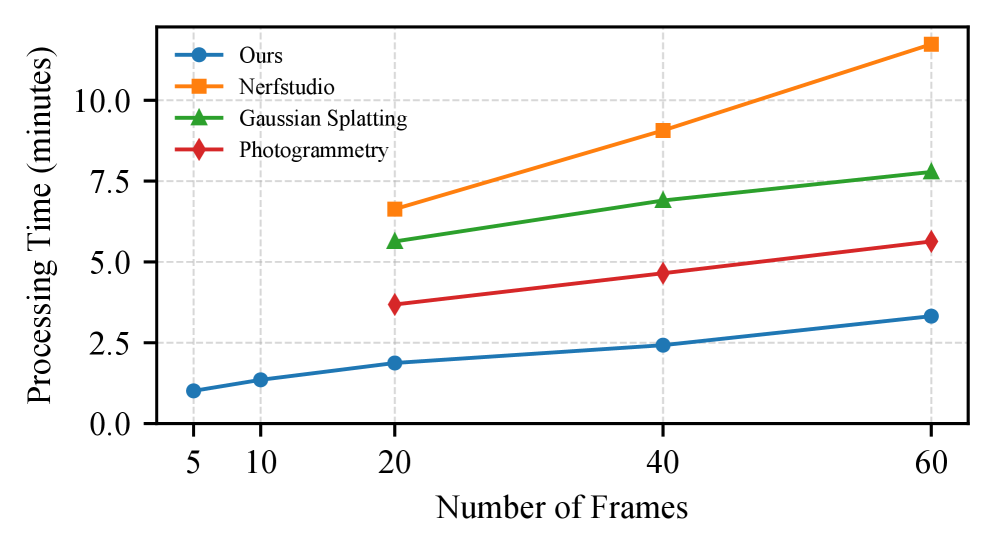

The Dynamic Digital Twin framework relies on accelerated, RGB-only scene reconstruction to create virtual environments rapidly. Current implementations achieve full scene reconstruction in 1-2 minutes from 5-10 images. This is a significant speed-up over conventional pipelines: traditional Photogrammetry, 3D Gaussian Splatting (3DGS), and NeRFstudio workflows typically require substantially longer processing times for comparable results. The accelerated reconstruction is complemented by Real-to-Sim Synchronization, which keeps the virtual environment consistent with the current state of the physical world.

The Dynamic Digital Twin facilitates robust decision-making in uncertain environments by integrating real-time perception with predictive modeling. This unification allows the system to not only accurately reflect the current state of the physical world – through continuous synchronization of reconstructed scenes – but also to forecast potential future states based on that data. By combining these capabilities, the framework can evaluate the consequences of different actions before they are implemented, enabling proactive and informed responses to changing conditions. This is particularly valuable in scenarios where complete information is unavailable or where unexpected events may occur, as the predictive element mitigates risk and optimizes outcomes based on the most current and projected data.

SyncTwin: Real-time Perception and Action – A Proof of Concept

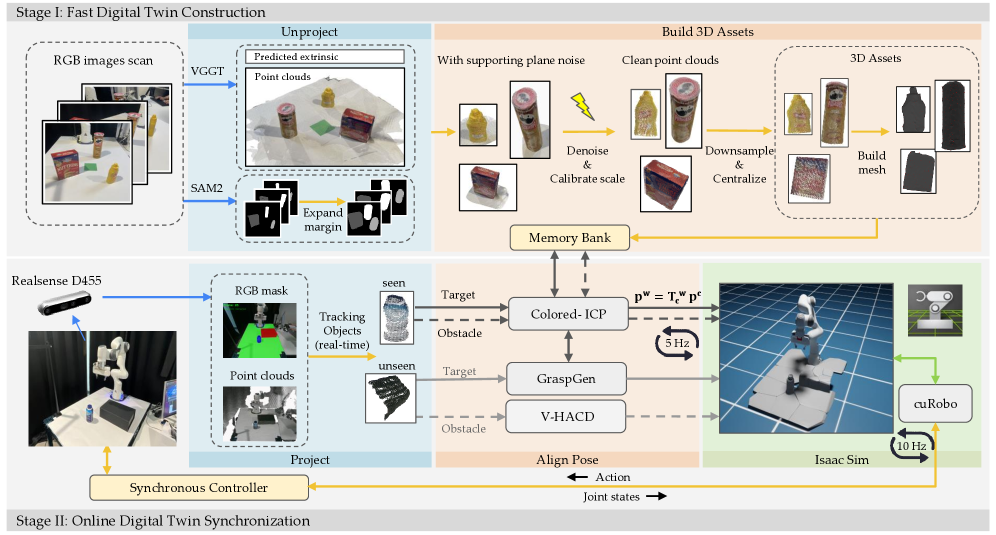

The SyncTwin framework achieves safe robotic grasping and navigation by integrating rapid, RGB-image-based 3D reconstruction with real-time synchronization of the physical robot and its digital twin. This synchronization allows for immediate feedback and adaptation to changes in the environment, effectively bridging the gap between perception and action. The system utilizes visual data alone – excluding depth sensors – to build and maintain a consistent digital representation of the surroundings, enabling the robot to plan and execute movements based on up-to-date information without reliance on potentially inaccurate or delayed data from other sensing modalities. This approach is critical for dynamic environments and applications requiring precise and responsive robotic control.

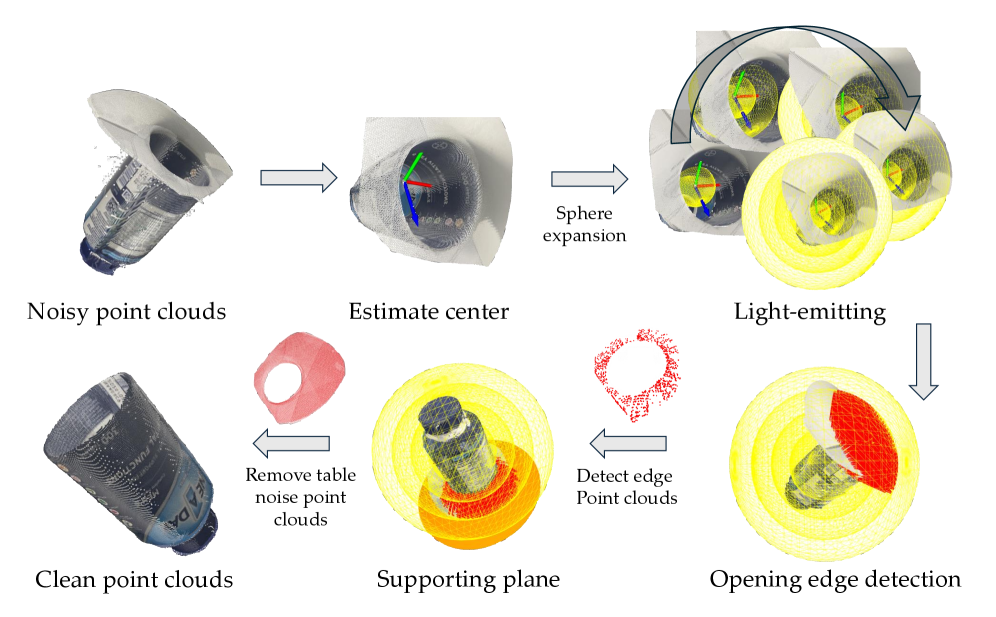

The SyncTwin framework utilizes a pipeline of established algorithms for real-time perception and action. Dense scene reconstruction is performed using VGGT, a technique for generating detailed 3D models from visual data. Alignment of the reconstructed scene with the robot’s environment is achieved through Colored-ICP, an iterative closest point algorithm that incorporates color information for improved accuracy. Finally, cuRobo MPC facilitates motion planning and execution, leveraging model predictive control on the GPU to enable rapid and precise robot movements based on the aligned digital twin.
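The alignment step can be illustrated with the closed-form rigid fit that classic point-to-point ICP solves on each iteration once correspondences are fixed. The sketch below uses NumPy and the Kabsch (SVD) solution on synthetic points. It is plain point-to-point alignment with known correspondences, not Colored-ICP itself, which also has to search for correspondences and adds a photometric (color) term. The function name `rigid_align` and the synthetic data are illustrative assumptions.

```python
import numpy as np


def rigid_align(source: np.ndarray, target: np.ndarray):
    """Least-squares rotation R and translation t with R @ source[i] + t ~= target[i].

    Both inputs are (N, 3) arrays whose rows are already matched one-to-one.
    """
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t


# Synthetic check: rotate a random cloud by 30 degrees about z and shift it.
rng = np.random.default_rng(0)
source = rng.uniform(-1.0, 1.0, size=(50, 3))
yaw = np.deg2rad(30.0)
R_true = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
                   [np.sin(yaw), np.cos(yaw), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.10, -0.05, 0.02])
target = source @ R_true.T + t_true

R_est, t_est = rigid_align(source, target)
max_error = np.abs(source @ R_est.T + t_est - target).max()
print(max_error)
```

With noise-free, correctly matched points the transform is recovered up to floating-point error; in practice the correspondences are unknown, which is why ICP variants alternate between matching and fitting.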

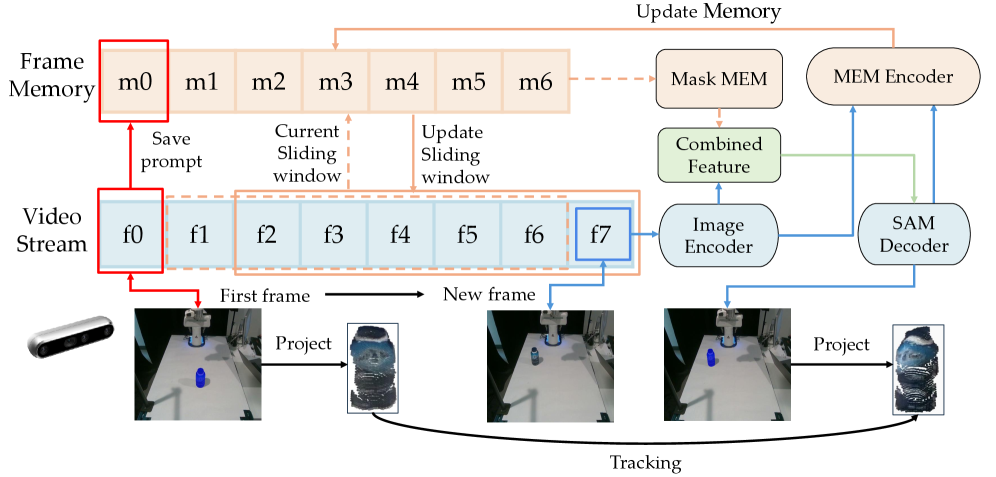

The SyncTwin framework utilizes two distinct tracking modules: SAM2 and SAM4D. SAM2 provides robust object tracking within the digital twin environment, maintaining consistent identification and pose estimation of observed objects. SAM4D specifically addresses the challenges presented by partial observations – instances where objects are temporarily obscured or only partially visible. It achieves this by accessing an ‘Object Memory Bank’, a repository of previously observed object characteristics, enabling the system to predict and maintain object identities even when current sensory data is incomplete. This allows for continued tracking and interaction with objects despite temporary visual limitations.
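The role of the memory bank can be sketched with a small data structure that returns the last confirmed state of an object whenever the current frame has no detection for it. This is a deliberately simplified illustration: the class and method names, the `max_age` cutoff, and the choice to store positions (rather than learned features) are all assumptions and do not describe how SAM2 or SAM4D work internally.

```python
from dataclasses import dataclass


@dataclass
class Track:
    position: tuple   # last confirmed (x, y, z)
    last_seen: int    # frame index of the last confirmed observation


class ObjectMemoryBank:
    """Hypothetical memory: keeps the last confirmed state of each object id."""

    def __init__(self, max_age: int = 30):
        self.max_age = max_age  # assumed: frames after which a memory is stale
        self.tracks = {}

    def update(self, frame: int, detections: dict) -> None:
        """Store every object detected in this frame."""
        for obj_id, position in detections.items():
            self.tracks[obj_id] = Track(position, frame)

    def query(self, frame: int, obj_id: str):
        """Return (position, source); source is 'observed', 'memory', or 'lost'."""
        track = self.tracks.get(obj_id)
        if track is None or frame - track.last_seen > self.max_age:
            return None, "lost"
        source = "observed" if track.last_seen == frame else "memory"
        return track.position, source


bank = ObjectMemoryBank(max_age=30)
bank.update(0, {"mug": (0.4, 0.1, 0.05)})
visible = bank.query(0, "mug")
bank.update(1, {})              # the mug is occluded in frame 1
occluded = bank.query(1, "mug")
stale = bank.query(40, "mug")   # too old to trust
print(visible, occluded, stale)
```

Returning the source alongside the position lets a planner treat remembered poses more conservatively than freshly observed ones.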

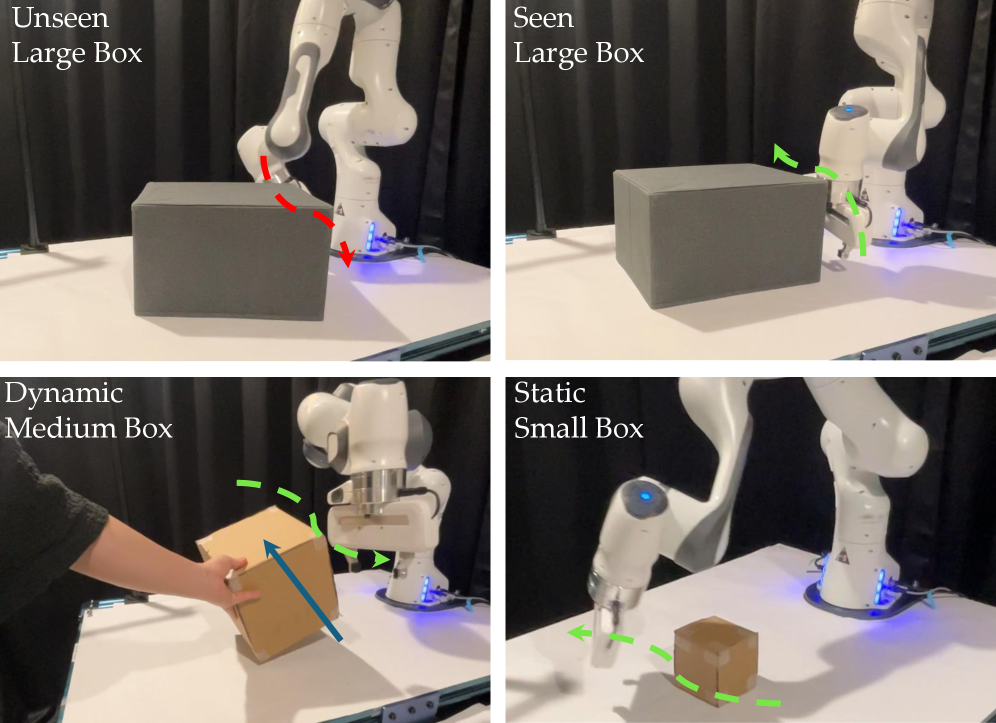

The SyncTwin framework’s integration with real-time perception capabilities enables dynamic adaptation to changing environmental conditions. This responsiveness yields a demonstrably higher obstacle avoidance success rate when compared to the NVBlox system. Furthermore, the utilization of complete asset meshes, facilitated by real-time perception, contributes to improved grasp success rates. The system continuously processes incoming sensor data to update the digital twin, allowing for immediate adjustments to planned trajectories and grasp configurations, thereby mitigating the impact of unforeseen obstacles or changes in object pose.
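Checking a planned trajectory against the twin can be sketched with simple geometry: approximate the gripper as a sphere, approximate each obstacle in the twin as an axis-aligned box, and test every waypoint. This is an illustrative stand-in rather than the paper's planner; the function names, the sphere radius, and the box dimensions are assumptions.

```python
def sphere_hits_box(center, radius, box_min, box_max) -> bool:
    """True if a sphere overlaps an axis-aligned box."""
    # Squared distance from the sphere centre to the closest point of the box.
    sq_dist = 0.0
    for c, lo, hi in zip(center, box_min, box_max):
        nearest = min(max(c, lo), hi)
        sq_dist += (c - nearest) ** 2
    return sq_dist <= radius ** 2


def first_collision(waypoints, radius, boxes):
    """Index of the first waypoint in collision, or None if every waypoint is clear."""
    for i, wp in enumerate(waypoints):
        if any(sphere_hits_box(wp, radius, lo, hi) for lo, hi in boxes):
            return i
    return None


# One obstacle from the (hypothetical) twin, given as (min corner, max corner) in metres.
boxes = [((0.30, -0.05, 0.00), (0.40, 0.05, 0.20))]
gripper_radius = 0.04

# A straight path at 10 cm height runs into the box; a path at 30 cm clears it.
straight = [(0.05 * k, 0.0, 0.10) for k in range(11)]
raised = [(0.05 * k, 0.0, 0.30) for k in range(11)]

hit_index = first_collision(straight, gripper_radius, boxes)
clear_index = first_collision(raised, gripper_radius, boxes)
print(hit_index, clear_index)
```

Testing only discrete waypoints can miss a collision between two samples, so a real planner would sample densely or sweep the volume between waypoints, and would re-run the check whenever the twin is updated.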

Enhanced Robustness and a Vision for the Future

SyncTwin achieves robust robotic navigation and manipulation by fusing immediate sensory input with a dynamic, virtual replica of the surrounding world. This system doesn’t simply react to what a robot sees; it anticipates and understands the environment through the continuously updated digital twin, which predicts object positions and potential obstacles. The real-time perception component feeds data into this virtual model, correcting discrepancies and refining its accuracy. This allows the robot to not only navigate complex spaces but also to reliably grasp objects, even in cluttered or changing conditions, as it’s operating with a predictive understanding of its surroundings rather than solely relying on instantaneous data. Essentially, SyncTwin provides the robot with a form of ‘situational awareness’ that dramatically improves its performance and adaptability.

SyncTwin’s safety profile is significantly bolstered by its capacity to integrate advanced obstacle avoidance techniques. By incorporating methodologies such as NVBlox and DRP, the framework moves beyond simple reactive collision prevention. NVBlox provides GPU-accelerated volumetric mapping, producing distance fields that a planner can query for collisions, while DRP (Dynamic Reachability Planning) anticipates potential hazards by predicting future states and proactively adjusting trajectories. This combined approach allows for more nuanced and reliable navigation in dynamic environments, reducing the risk of collisions and enabling robots to operate safely alongside humans and within complex, unstructured spaces. The result is a system capable of not just avoiding obstacles, but intelligently navigating around them, contributing to a more robust and dependable robotic presence.

Ongoing development centers on enriching the ‘Object Memory Bank’ beyond simple object recognition to encompass semantic understanding. This means robots will not just identify what an object is, but also what it’s used for and how it interacts with other objects in the environment. By associating objects with their functions and relationships, the system anticipates potential uses and adjusts behavior accordingly – for example, recognizing a cup not just as a cylindrical object, but as something intended for holding liquids, prompting careful manipulation. This leap toward contextual awareness promises a new level of robotic adaptability, allowing machines to navigate ambiguous situations and perform tasks with greater intelligence and efficiency in dynamic, real-world settings.

The development of SyncTwin carries substantial promise across diverse sectors, extending far beyond initial demonstrations. Autonomous warehouse operations stand to benefit from more reliable and adaptable robotic systems capable of navigating dynamic environments and efficiently managing inventory. Similarly, the framework opens possibilities for advanced in-home assistance robots, providing support with tasks ranging from simple object retrieval to more complex household management, all while prioritizing safety and user interaction. However, the implications are not limited to these areas; potential applications also include precision agriculture, disaster response, remote inspection of infrastructure, and even space exploration, suggesting a broad and transformative impact on how robots interact with and operate within the physical world.

The presented SyncTwin framework embodies a commitment to provable correctness, mirroring the ideals of robust algorithm design. The system’s emphasis on fast, RGB-only 3D reconstruction and real-time synchronization isn’t merely about achieving speed; it’s about establishing a reliable foundation for robotic manipulation, particularly in challenging scenarios involving dynamic environments and partial occlusion. As Barbara Liskov aptly stated, “Programs must be correct, not just work.” SyncTwin seeks to minimize reliance on heuristics – accepting that a working solution isn’t sufficient if it lacks the underlying mathematical purity necessary for consistent, safe operation. The framework prioritizes a demonstrably accurate digital representation, thereby reducing the risk of failures stemming from imperfect approximations.

What’s Next?

The presented framework, while demonstrably functional, operates within a constrained axiomatic space. The reliance on RGB-only reconstruction, while computationally efficient, introduces inherent ambiguity. A rigorous error analysis quantifying the impact of chromatic variance and illumination shifts on reconstruction fidelity remains conspicuously absent. Such analysis is not merely an exercise in refinement, but a prerequisite for establishing provable guarantees regarding grasp stability – a condition currently assessed through empirical demonstration, not mathematical certainty.

Furthermore, the notion of ‘real-time synchronization’ deserves critical examination. The paper tacitly assumes a deterministic relationship between the physical and digital realms. However, sensor noise and actuator imprecision invariably introduce phase shifts and distortions. A formal treatment of these asynchronous events – perhaps through Kalman filtering or other state estimation techniques – would elevate the system from a practical implementation to a theoretically sound construct.
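To make the Kalman filtering suggestion concrete, here is a minimal sketch of a scalar filter that smooths noisy measurements of a single coordinate of a stationary object. The constant-state model, the noise variances `q` and `r`, and the sample measurements are assumptions chosen for illustration; nothing here comes from the paper.

```python
def kalman_step(x, p, z, q=1e-4, r=1e-2):
    """One predict/update cycle of a scalar Kalman filter with a constant-state model.

    x, p: prior estimate and its variance; z: new measurement;
    q, r: assumed process and measurement noise variances.
    """
    p = p + q             # predict: state unchanged, uncertainty grows
    k = p / (p + r)       # Kalman gain: how much to trust the measurement
    x = x + k * (z - x)   # update: blend the prediction with the measurement
    p = (1.0 - k) * p     # posterior variance shrinks after the update
    return x, p


# Noisy readings of an object's x-coordinate, scattered around 0.50 m.
measurements = [0.52, 0.47, 0.51, 0.49, 0.50, 0.53, 0.48]

estimate, variance = 0.0, 1.0   # deliberately vague prior
for z in measurements:
    estimate, variance = kalman_step(estimate, variance, z)
print(estimate, variance)
```

The posterior variance gives the twin an explicit measure of how far each synchronized quantity can be trusted, which is the kind of information a formal treatment of synchronization error would build on.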

Ultimately, the true test lies not in achieving sim-to-real transfer, but in achieving provable equivalence. The current paradigm focuses on bridging the gap with increasingly sophisticated approximations. A more elegant solution would involve redefining the problem itself, formulating robotic manipulation as a purely mathematical operation, divorced from the messiness of physical instantiation. Only then can one speak of a truly robust and reliable system, built not on heuristics, but on the bedrock of logical necessity.

Original article: https://arxiv.org/pdf/2601.09920.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 04:27