Author: Denis Avetisyan

Researchers have developed a reinforcement learning framework that tackles the challenges of generating cohesive and well-structured scientific writing.

The OUTLINEFORGE system uses explicit state representations and hierarchical planning to improve long-horizon scientific text generation and establish a new benchmark for evaluation.

Despite advances in large language models, generating cohesive and factually sound scientific papers remains challenging due to limitations in long-range planning and structural consistency. This work introduces OUTLINEFORGE: Hierarchical Reinforcement Learning with Explicit States for Scientific Writing, a reinforcement learning framework that explicitly models document structure to improve scientific manuscript generation. By casting outline construction as a hierarchical planning problem with structured actions and a two-stage optimization procedure, the authors report consistent improvements over existing neural and LLM baselines, particularly in citation fidelity and long-range coherence. Could this approach pave the way for automated scientific writing tools capable of producing genuinely insightful and reliable research?

Deconstructing Coherence: The Illusion of Understanding

Contemporary long-form text generation systems, while proficient at producing grammatically correct sentences, frequently falter when tasked with constructing extended scientific arguments. The core issue isn’t simply generating more text, but ensuring that each sentence builds logically upon the last, creating a cohesive narrative that supports a central hypothesis or conclusion. Without this inherent coherence, scientific writing becomes a fragmented collection of statements, obscuring the underlying reasoning and potentially leading to misinterpretations. Current models often struggle with maintaining topical consistency over extended passages, introducing irrelevant details or abruptly shifting focus, thus compromising the accuracy and clarity essential for effective scientific communication. This deficiency highlights a critical gap between statistical language modeling and the nuanced, structured thinking required for rigorous scientific prose.

Despite the rapid advancements in large language models (LLMs), simply increasing their size does not automatically translate to effective scientific communication. While LLMs excel at identifying patterns and generating text that appears coherent, they often struggle with the subtle yet crucial aspects of nuanced reasoning and logical organization that define robust scientific writing. These models can produce text that is grammatically correct but lacks the tightly woven arguments, carefully constructed evidence chains, and precise conceptual framing essential for conveying complex scientific ideas accurately. The challenge lies not merely in generating words, but in structuring information in a way that reflects the rigorous, systematic thought processes inherent in scientific inquiry. That capacity is something scaling alone cannot guarantee, and it requires dedicated architectural and training innovations.

Generating comprehensive scientific papers demands more than simply stringing together relevant sentences; a significant hurdle lies in achieving both structural correctness and thorough information coverage. Current automated systems frequently struggle to synthesize information into a logically organized format, often omitting crucial details or presenting them in a disjointed manner. A truly effective system must not only identify pertinent research but also integrate it cohesively, adhering to established scientific conventions regarding sections like introduction, methods, results, and discussion. This requires a nuanced understanding of the relationships between different concepts and the ability to prioritize information, ensuring that the resulting paper provides a complete and accurate representation of the state of knowledge on a given topic – a feat that remains a considerable challenge in the field of automated scientific writing.

Automated scientific writing systems frequently stumble not on generating text itself, but on upholding the rigorous standards of academic integrity and organization. Maintaining consistent and accurate citations, a cornerstone of credible research, proves particularly difficult, as systems may misattribute findings or fail to adhere to specific stylistic guidelines like APA or MLA. Beyond citations, structural validity (ensuring the logical flow from introduction to methods, results, and discussion) is often compromised, resulting in papers that, while grammatically correct, lack the cohesive narrative expected of scientific literature. This oversight isn’t merely aesthetic; inconsistencies in structure and citation can undermine the perceived reliability of the research, highlighting a critical need for improved algorithms that prioritize not just content creation, but also the meticulous adherence to established academic conventions.

OutlineForge: A Hierarchical Decomposition of Thought

OutlineForge employs a hierarchical reinforcement learning (HRL) framework to generate scientific documents. This approach decomposes the document generation process into multiple levels of abstraction, allowing the system to learn and optimize both the high-level structure and the low-level content. The framework utilizes a state-action space that explicitly represents the document’s organizational hierarchy, enabling targeted modifications at various levels, from section headings to individual sentences. Through HRL, OutlineForge aims to overcome limitations of traditional generative models by learning a policy that maximizes a reward function based on document quality metrics, facilitating the evolution of coherent and accurate scientific writing.

OutlineForge represents document structure as a state-action space, where each state defines the current organization and content, and actions correspond to structural modifications – such as adding, deleting, or moving sections – and content refinements. This explicit modeling allows the system to perform targeted edits; rather than generating text sequentially, it can evaluate the impact of structural changes on overall document quality. The state space encodes hierarchical relationships, enabling actions to operate at different levels of granularity – from modifying individual sentences to rearranging entire chapters. Consequently, the reinforcement learning agent learns to navigate this space, optimizing for document properties like coherence and logical flow through iterative structural and content adjustments.
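To make the state-action idea concrete, here is a minimal sketch of an outline held as a tree, with add, delete, and move as structural actions. The `Section` class and the function names are hypothetical, chosen for illustration; the paper's actual state encoding and action set may differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Section:
    """One node in the outline tree (hypothetical representation)."""
    title: str
    children: List["Section"] = field(default_factory=list)


def add_section(parent: Section, title: str) -> Section:
    """Structural action: append a new child section under `parent`."""
    child = Section(title)
    parent.children.append(child)
    return child


def delete_section(parent: Section, index: int) -> Section:
    """Structural action: remove and return the child at `index`."""
    return parent.children.pop(index)


def move_section(src: Section, index: int, dst: Section) -> None:
    """Structural action: detach a child from `src` and attach it to `dst`."""
    dst.children.append(src.children.pop(index))


def flatten(node: Section, depth: int = 0) -> List[str]:
    """Render the current state as an indented list of titles."""
    lines = ["  " * depth + node.title]
    for child in node.children:
        lines.extend(flatten(child, depth + 1))
    return lines


# Build a small outline, then apply a targeted structural edit.
root = Section("Survey")
intro = add_section(root, "Introduction")
methods = add_section(root, "Methods")
add_section(intro, "Evaluation Metrics")  # deliberately misplaced
move_section(intro, 0, methods)           # relocate it under Methods
outline = flatten(root)
print("\n".join(outline))
```

Each action changes only one part of the tree, so an agent can compare the document before and after a single edit instead of regenerating the text from scratch.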

Value-based training within OutlineForge utilizes a reward function to assess the quality of generated document structures at multiple hierarchical levels – encompassing sections, paragraphs, and sentences. This process assigns numerical values to different organizational arrangements, with higher values indicating greater coherence and accuracy as defined by the training data. The framework then employs reinforcement learning algorithms to iteratively refine the generation policy, favoring actions that lead to higher cumulative rewards. Specifically, the value function estimates the expected long-term reward achievable from a given state (document structure), guiding the selection of actions that maximize both local and global document quality. This hierarchical approach allows for optimization at each level of granularity, ensuring that improvements at lower levels contribute to overall document coherence and factual correctness.
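The "expected long-term reward" mentioned above is usually computed as a discounted sum of per-step rewards. The sketch below shows that standard calculation, plus one simple way to fold section, paragraph, and sentence scores into a single step reward. The weights, the discount factor, and the function names are assumptions made for illustration, not values taken from the paper.

```python
from typing import List, Sequence


def combine_levels(section: float, paragraph: float, sentence: float,
                   weights: Sequence[float] = (0.5, 0.3, 0.2)) -> float:
    """Weighted sum of per-level quality scores (weights are hypothetical)."""
    w_sec, w_par, w_sen = weights
    return w_sec * section + w_par * paragraph + w_sen * sentence


def discounted_return(rewards: List[float], gamma: float = 0.9) -> float:
    """Sum of rewards where a reward t steps ahead is scaled by gamma**t."""
    total = 0.0
    # Walk backwards so each earlier step adds gamma times the later total.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Three editing steps with rewards 1.0, 0.0, 2.0 and gamma = 0.5:
# 1.0 + 0.5 * 0.0 + 0.25 * 2.0 = 1.5
example_return = discounted_return([1.0, 0.0, 2.0], gamma=0.5)
step_reward = combine_levels(section=0.8, paragraph=0.6, sentence=0.4)
```

A learned value function approximates this return for a given document state, which lets the agent prefer an edit that pays off several steps later over one with a larger immediate reward.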

Traditional scientific document generation methods primarily concentrate on content selection – determining what information to include based on a given prompt or dataset. OutlineForge diverges from this approach by explicitly modeling and optimizing document structure. This means the framework prioritizes the organization and arrangement of information – how that content is presented – as a distinct objective. By treating document hierarchy as a core element of the generation process, OutlineForge aims to improve coherence and readability beyond simply ensuring factual accuracy of included statements, focusing on the relational aspects of knowledge presentation rather than solely on knowledge representation itself.

Benchmarking with Automated Survey Generation: A Controlled Dissection

An automated survey generation task serves as a core benchmark for evaluating the proposed framework’s performance characteristics. This task provides a controlled experimental environment, isolating the system’s ability to produce structurally coherent and informative content without the confounding variables inherent in open-ended content creation or domain-specific knowledge requirements. By focusing on survey generation, the evaluation prioritizes logical organization, question formulation, and overall clarity, enabling a quantitative assessment of the framework’s capabilities in planning and content synthesis, independent of expertise in any particular scientific field. The task’s defined structure facilitates objective measurement of generated output quality, offering a consistent metric for comparing performance across different model sizes and configurations.

The automated survey generation benchmark is designed to evaluate a system’s capacity for coherent content creation irrespective of specialized knowledge. By focusing on the structure and informativeness of generated content, rather than requiring domain expertise, the evaluation isolates the model’s fundamental text generation capabilities. This methodology facilitates assessment of the framework’s ability to produce logically organized and meaningful outputs across a wide range of potential subjects, providing a generalized measure of performance unconstrained by the complexities of specific scientific fields or knowledge bases. The resulting data allows for direct comparison of different models based on their ability to construct well-formed, coherent text, independent of content accuracy within a particular discipline.

The benchmark utilizes compact Large Language Models (LLMs) – specifically Phi-3.8B, Gemma2-2B, and Qwen3-1.8B – for automated survey generation. These models were selected to assess performance with limited parameter counts. Evaluation of the generated surveys is performed using GPT-4o, which serves as a reference model to determine the quality and coherence of the output from the smaller LLMs. This evaluation process allows for a comparative analysis of survey generation capabilities across varying model sizes and architectures, focusing on the ability of compact models to produce structurally sound and informative content.

Empirical results indicate that the framework achieves superior survey generation quality with compact language models of only a few billion parameters. This performance surpasses that of larger, general-purpose models, indicating that strategic framework design and optimized prompting can yield more effective results than simply increasing model size. Specifically, the compact models (Phi-3.8B, Gemma2-2B, and Qwen3-1.8B) consistently outperformed larger models when evaluated using GPT-4o, suggesting a more efficient use of parameters for this targeted task. This outcome highlights the potential for deploying high-quality content generation systems on resource-constrained platforms.

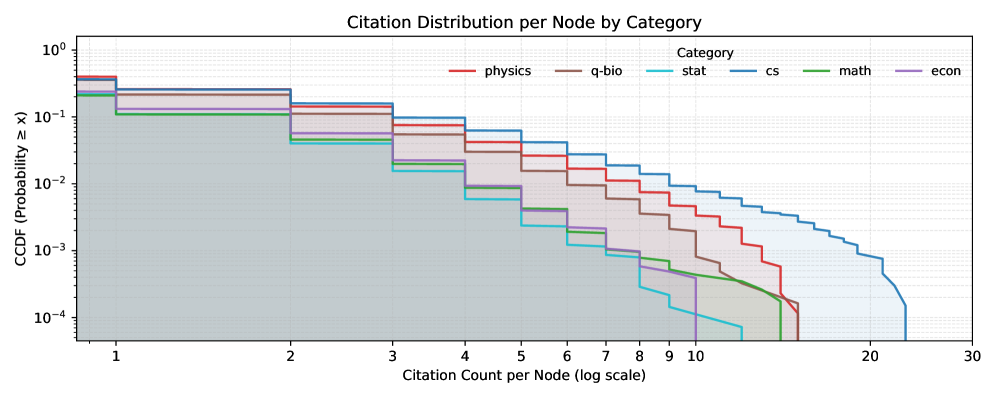

Analysis of article generation using arXiv data determined a planning horizon of 200-300 steps to be optimal for complete article construction. This finding is based on observed performance metrics during iterative content generation; extending the planning horizon beyond this range did not yield significant improvements in overall article quality. The data indicates that the initial 200-300 steps are critical for establishing core arguments and supporting evidence, after which further steps primarily contribute to refinement and detail rather than substantial content expansion. This established horizon served as a key parameter in evaluating the framework’s performance with compact language models.

Analysis of generated content demonstrates that citation relevance and density metrics reach a plateau after approximately 150 planning steps. This stabilization suggests that the initial phase of article generation – broad content expansion and foundational referencing – concludes within this timeframe. Subsequent steps beyond 150 primarily focus on refining existing content, ensuring coherence, and optimizing the relevance of citations rather than significantly expanding the overall scope or introducing entirely new references. The observed plateau indicates a transition from generative expansion to iterative refinement within the automated article generation process.
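One way to locate such a plateau is to scan a metric curve for the first point after which successive gains stay below a small tolerance. The sketch below does this on synthetic numbers invented purely for illustration; they are not results from the paper, and the `plateau_step` function, its window size, and its tolerance are all assumptions.

```python
from typing import List, Optional


def plateau_step(values: List[float], window: int = 3,
                 tol: float = 0.01) -> Optional[int]:
    """Return the first index whose next `window` gains are all below `tol`."""
    for start in range(len(values) - window):
        gains = [values[i + 1] - values[i] for i in range(start, start + window)]
        if all(abs(gain) < tol for gain in gains):
            return start
    return None


# Synthetic example: a metric sampled every 50 planning steps.
steps = [0, 50, 100, 150, 200, 250, 300]
metric = [0.10, 0.35, 0.55, 0.62, 0.625, 0.628, 0.63]  # made-up values

index = plateau_step(metric)
plateau_at = steps[index] if index is not None else None
print(plateau_at)
```

With a check like this, planning could stop expanding content once the curve flattens and spend the remaining steps on refinement.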

Extending Capabilities: Towards Autonomous Reasoning Systems

The foundational principles of OutlineForge, initially designed for hierarchical content generation, extend seamlessly into the realm of advanced agentic systems focused on structured reasoning and planning. By adapting the framework’s core mechanisms – iterative refinement, hierarchical decomposition, and goal-oriented synthesis – it becomes possible to construct agents capable of not merely generating text, but of actively reasoning about complex problems. These systems can break down high-level objectives into manageable sub-goals, explore potential solution pathways, and evaluate outcomes based on predefined criteria. This approach moves beyond simple pattern completion, enabling agents to formulate plans, anticipate challenges, and adapt their strategies – effectively mimicking a deliberate, structured thought process. The resulting agents demonstrate potential in fields requiring complex decision-making and strategic foresight, from automated scientific discovery to intricate logistical planning.

The capacity for complex problem-solving within agentic systems is significantly amplified by integrating frameworks such as ReAct and Tree-of-Thoughts with hierarchical reinforcement learning. ReAct facilitates an iterative process of reasoning and acting, allowing the agent to dynamically adjust its approach based on observations and feedback – essentially, thinking while doing. Simultaneously, Tree-of-Thoughts expands the search space for potential solutions by prompting the agent to explore multiple reasoning paths in parallel, rather than committing to a single line of thought. This combination moves beyond simple sequential decision-making, enabling the agent to systematically evaluate diverse strategies, assess their likelihood of success, and ultimately select the most promising course of action – a capability crucial for tackling complex scientific challenges requiring nuanced exploration and careful consideration of multiple hypotheses.

Chain-of-Thought prompting represents a significant advancement in artificial intelligence by encouraging large language models to externalize their internal reasoning steps. Rather than simply providing an answer, the system details the sequential logic employed to reach a conclusion, effectively creating a transparent audit trail. This detailed articulation isn’t merely for human comprehension; it allows for rigorous verification of the model’s thought process, identifying potential flaws in logic or reliance on spurious correlations. By explicitly outlining each step – from initial observation to final deduction – the system moves beyond a ‘black box’ approach, fostering greater trust and enabling targeted improvements in its reasoning capabilities. The result is a system capable of not only solving complex problems but also demonstrating how it arrived at the solution, a crucial step towards reliable and accountable artificial intelligence.

The convergence of hierarchical reinforcement learning with frameworks like ReAct and Tree-of-Thoughts presents a compelling pathway toward fully automated scientific writing. These systems aren’t simply compiling data; rather, they are capable of constructing nuanced arguments, mirroring the complex reasoning processes of human researchers. By leveraging Chain-of-Thought prompting, the system externalizes its thought process, offering a transparent audit trail of its conclusions and allowing for critical evaluation of its methodology. This synergy promises a future where comprehensive scientific reports and papers, complete with detailed justifications and supporting evidence, can be generated autonomously, accelerating the pace of discovery and potentially uncovering previously hidden connections within complex datasets.

The pursuit of automated scientific writing, as demonstrated by OutlineForge, inherently involves challenging established norms of composition. This framework doesn’t simply accept the rules of coherent argumentation; it dissects them via reinforcement learning, explicitly modeling state and reward to optimize for long-horizon planning. It’s a systematic dismantling and rebuilding of the writing process, mirroring a core principle of knowledge acquisition. As Blaise Pascal observed, “The eloquence of a man does not consist in what he says, but in the way he says it.” OutlineForge attempts to engineer that eloquence, breaking down the ‘how’ of scientific communication into quantifiable components and iteratively improving upon them. This approach, focused on structured credit assignment, suggests that even complex skills can be reverse-engineered and optimized through rigorous experimentation.

What’s Next?

The framework presented here, while demonstrating improved hierarchical planning in scientific writing, inevitably highlights the brittle nature of ‘scientific quality’ as a reward function. The benchmark established, however useful, remains a proxy – a cleverly engineered illusion of understanding. Future iterations must confront the core problem: can an agent truly reason about scientific validity, or merely mimic its stylistic hallmarks? The current approach excels at structural coherence, but the content itself remains largely derivative, a reshuffling of existing knowledge.

A critical limitation lies in the explicit state representation. While beneficial for credit assignment, it necessitates a pre-defined understanding of what constitutes a ‘scientific argument’ – a potentially circular dependency. The next step isn’t simply scaling the model, but exploring methods for emergent state discovery – allowing the agent to define its own metrics for ‘good’ science, even if those metrics initially appear nonsensical.

Ultimately, the best hack is understanding why it worked. Every patch, every refinement of the reward function, is a philosophical confession of imperfection. This work isn’t about automating scientific writing; it’s about reverse-engineering the cognitive processes underlying it, revealing the inherent messiness and ambiguity at the heart of knowledge creation. And that, perhaps, is a more valuable outcome than a perfectly polished paper.

Original article: https://arxiv.org/pdf/2601.09858.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-18 18:21