Author: Denis Avetisyan

New research details a system enabling artificial intelligence to effectively utilize tools and collaborate in complex, multi-agent environments.

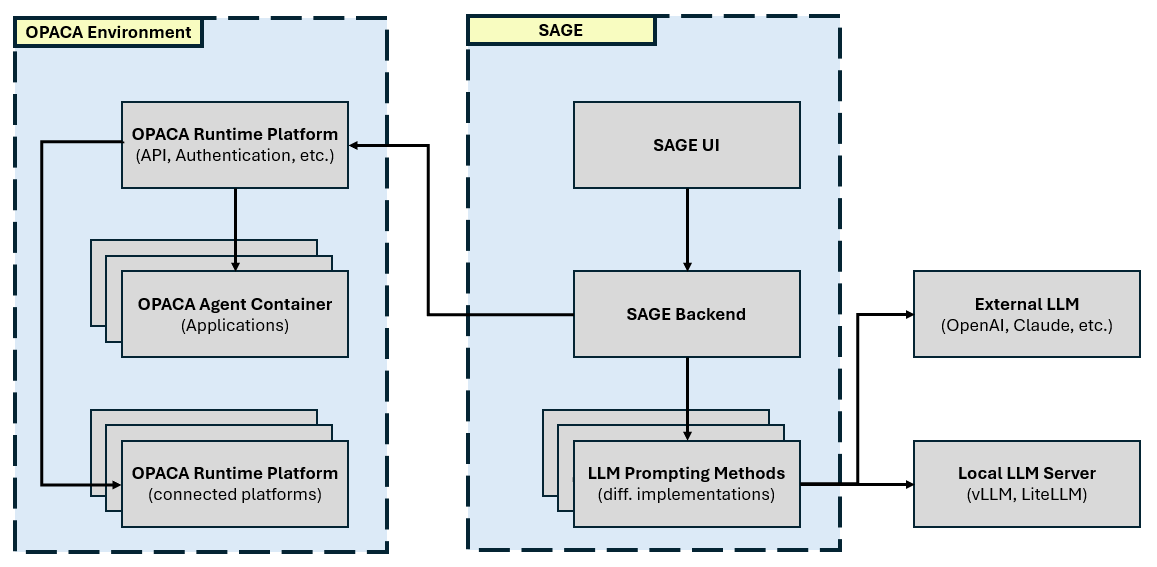

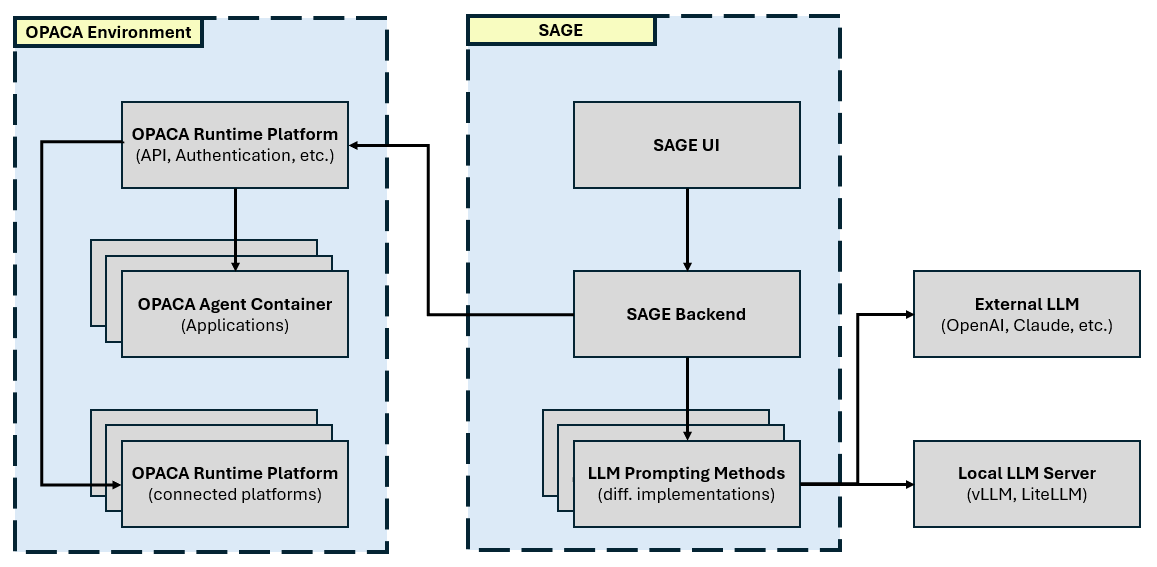

This paper introduces SAGE, a tool-augmented system leveraging the OPACA framework to demonstrate effective prompting strategies for LLM-powered task solving in scalable multi-agent systems.

While large language models excel at question answering, real-world utility demands dynamic access to tools for information retrieval and action, a challenge exacerbated by rapidly evolving software landscapes. This paper introduces SAGE: Tool-Augmented LLM Task Solving Strategies in Scalable Multi-Agent Environments, a system built on the OPACA framework to facilitate seamless tool integration and utilization by LLMs. We demonstrate that various agentic prompting strategies, orchestrated within SAGE, offer distinct strengths in complex task solving, enabling robust performance across a comprehensive benchmark of services. How can we further refine these strategies to unlock the full potential of LLMs in increasingly dynamic and scalable multi-agent systems?

The Limits of Prediction: Why Scaling Alone Isn’t Enough

Large Language Models demonstrate remarkable proficiency in areas such as text generation and pattern recognition, in some cases matching human performance on specific benchmarks. However, their capabilities begin to fray on problems that demand intricate, multi-step reasoning. These models are adept at identifying correlations within vast datasets, yet they often struggle with causal inference, abstract thought, or the application of external knowledge not present in their training data. Scaling up model size does not remove this limitation: even the most powerful LLMs do not consistently reach logically sound conclusions on complex problems, which suggests a bottleneck in genuine reasoning as opposed to statistical prediction. In short, the models excel at appearing intelligent but falter when a complex problem requires them to demonstrate it.

Despite the impressive capabilities of Large Language Models (LLMs) and sophisticated prompting techniques such as zero-shot prompting, complex reasoning remains a significant challenge. The models frequently stumble on problems that require several layers of inference, where information must be synthesized across multiple steps to reach a solution. They also struggle when external knowledge is crucial: despite the vast amount of information absorbed during training, integrating and applying knowledge beyond that data proves difficult. Even the largest models fail to reliably connect disparate pieces of information or perform the nuanced reasoning that humans handle with ease, pointing to a bottleneck in both current architectures and prompting methodologies.

The limitations of single, expansive LLMs on intricate reasoning challenges are driving an architectural shift. Researchers are increasingly building modular systems: networks of specialized models that collaborate to decompose and solve complex problems. Instead of an all-in-one approach, these scalable architectures distribute the cognitive load across components, each optimized for a specific sub-task such as knowledge retrieval, logical inference, or planning. This orchestration makes the reasoning process more transparent and controllable and lets the system use external tools and data sources more effectively. The move toward modularity promises reasoning capabilities beyond the constraints of monolithic models.

OPACA: Dividing and Conquering the Reasoning Problem

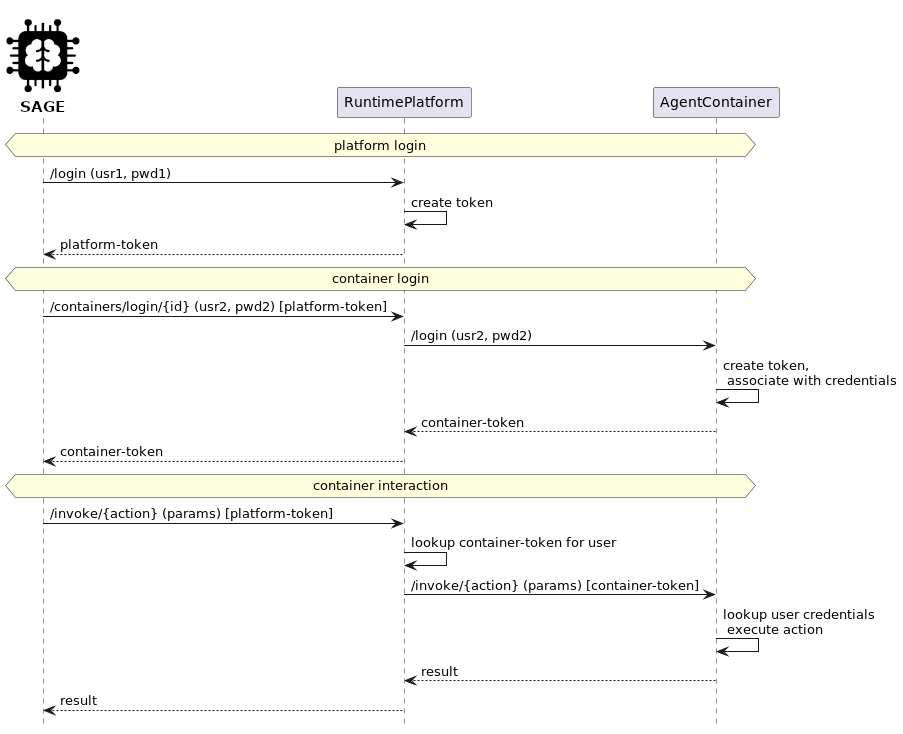

The OPACA framework organizes a system as a set of agents, each providing specialized actions that together form a larger problem-solving process. This decomposition makes the system modular: each agent is an independent unit responsible for a specific task within the overall pipeline. Instead of a monolithic design, OPACA distributes the computational load and allows actions to run in parallel. Isolating functionality in this way simplifies the development and maintenance of complex systems and promotes code reuse, since agents can be updated or replaced independently without affecting the rest of the framework.

Agent Containers within the OPACA framework provide a self-contained execution environment for each Agent, packaging it with its own dependencies and isolating it from conflicts with other Agents. This encapsulation ensures that changes or failures within one Agent do not affect the others or the overall system. Containerization also enables scalable deployment through the OPACA Runtime Platform: Agents can be instantiated independently and distributed across available resources, enabling parallel processing and efficient resource use. The Runtime Platform manages the container lifecycle, including provisioning, monitoring, and termination, which allows dynamic scaling with workload and supports both local and distributed execution.
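To make the container idea concrete, here is a minimal in-process Python sketch. `AgentContainer`, `RuntimePlatform`, `GetTemperature`, and every other name are hypothetical illustrations, not OPACA's API, and a real deployment would run each container as a separate service rather than as objects in one process. The sketch shows only the two properties described above: each agent owns its actions, and removing one container does not affect the others.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class AgentContainer:
    """Hypothetical in-process stand-in for an agent container: one agent plus its actions."""
    agent_id: str
    actions: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def action(self, name: str) -> Callable:
        """Decorator that registers a function as a named action of this agent."""
        def register(fn: Callable[..., Any]) -> Callable[..., Any]:
            self.actions[name] = fn
            return fn
        return register


class RuntimePlatform:
    """Hypothetical platform that deploys containers and routes action calls to them."""

    def __init__(self) -> None:
        self.containers: Dict[str, AgentContainer] = {}

    def deploy(self, container: AgentContainer) -> None:
        self.containers[container.agent_id] = container

    def undeploy(self, agent_id: str) -> None:
        # Removing one container leaves every other agent untouched.
        self.containers.pop(agent_id, None)

    def list_actions(self) -> Dict[str, List[str]]:
        return {cid: sorted(c.actions) for cid, c in self.containers.items()}

    def invoke(self, agent_id: str, action: str, **params: Any) -> Any:
        container = self.containers.get(agent_id)
        if container is None or action not in container.actions:
            raise LookupError(f"{agent_id}.{action} is not deployed")
        return container.actions[action](**params)


weather = AgentContainer("weather-agent")


@weather.action("GetTemperature")
def get_temperature(city: str) -> float:
    return {"Berlin": 4.5}.get(city, 20.0)  # canned data instead of a real service


platform = RuntimePlatform()
platform.deploy(weather)
print(platform.list_actions())  # {'weather-agent': ['GetTemperature']}
print(platform.invoke("weather-agent", "GetTemperature", city="Berlin"))  # 4.5
```

Routing every call through the platform by agent and action name is what lets containers be added, replaced, or removed without callers holding direct references to them.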

OPACA’s orchestration capabilities support the dynamic composition of Agents by analyzing task requirements and selecting a suitable sequence of actions. A central orchestrator receives incoming tasks, decomposes them into sub-tasks, and assigns each sub-task to a specialized Agent. It also manages inter-Agent communication and data flow so that the overall pipeline executes smoothly. By adapting the Agent composition to task characteristics such as data type, complexity, or resource constraints, OPACA aims to reduce processing time and resource use compared with static, predefined pipelines.
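The orchestration loop can be sketched as follows, under stated assumptions: `decompose`, `Orchestrator`, and the capability strings are hypothetical, and the keyword split in `decompose` stands in for whatever planner (presumably an LLM) performs decomposition in practice. Agents are matched to sub-tasks by capability at run time, and each agent's output is passed to the next.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Set


@dataclass
class Agent:
    name: str
    capabilities: Set[str]
    run: Callable[[Any], Any]


def decompose(task: str) -> List[str]:
    # Stand-in for an LLM-based planner: one sub-task per "then"-separated clause.
    return [step.strip() for step in task.split(" then ")]


class Orchestrator:
    """Hypothetical central orchestrator: assigns sub-tasks to agents and pipes data between them."""

    def __init__(self, agents: List[Agent]) -> None:
        self.agents = agents

    def assign(self, capability: str) -> Agent:
        # Agents are chosen at run time by capability, not fixed in a static pipeline.
        for agent in self.agents:
            if capability in agent.capabilities:
                return agent
        raise LookupError(f"no agent offers {capability!r}")

    def execute(self, task: str, data: Any = None) -> Any:
        for capability in decompose(task):
            data = self.assign(capability).run(data)  # output feeds the next sub-task
        return data


fetcher = Agent("fetcher", {"fetch"}, lambda _: "OPACA agents expose actions. They run in containers.")
summarizer = Agent("summarizer", {"summarize"}, lambda text: text.split(".")[0])

orchestrator = Orchestrator([fetcher, summarizer])
print(orchestrator.execute("fetch then summarize"))  # OPACA agents expose actions
```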

From Simple to Orchestrated: A Spectrum of Prompting Strategies

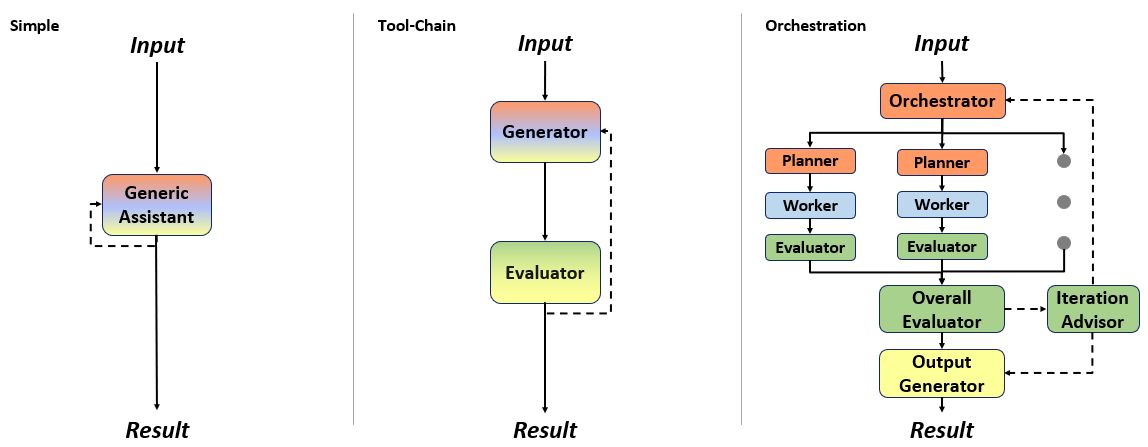

OPACA accommodates varying levels of prompting complexity, beginning with the ‘Simple Method’ which is designed for tasks requiring minimal instruction. For scenarios benefiting from external functionality, the ‘Simple-Tools Method’ is implemented; this leverages the Large Language Model’s (LLM) function calling capabilities, allowing it to interact with and utilize tools as defined by the system. This method provides a pathway for the LLM to extend its inherent knowledge with dynamically accessed information or perform actions beyond its native capabilities, while remaining relatively straightforward in its overall prompting structure.
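A minimal sketch of a Simple-Tools style loop, assuming a generic function-calling protocol: the model either returns a final answer or requests a tool call, the system runs the tool, and the result is appended to the conversation. `scripted_model` is a hypothetical stand-in for a real LLM, and the message and tool-description shapes are simplified; they are not any particular vendor's API.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical tool registry: name -> simplified description plus implementation.
TOOLS: Dict[str, Dict[str, Any]] = {
    "add": {
        "description": "Add two integers",
        "parameters": {"a": "integer", "b": "integer"},
        "fn": lambda a, b: a + b,
    },
}


def scripted_model(messages: List[Dict[str, Any]], tools: Dict[str, Any]) -> Dict[str, Any]:
    """Fake LLM: requests the 'add' tool once, then answers using the tool result."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        return {"tool_call": {"name": "add", "arguments": json.dumps({"a": 2, "b": 3})}}
    return {"content": f"The sum is {tool_results[-1]['content']}."}


def simple_tools(question: str, model: Callable = scripted_model, max_turns: int = 5) -> str:
    messages: List[Dict[str, Any]] = [{"role": "user", "content": question}]
    # Only the descriptions are shown to the model; implementations stay on the system side.
    schemas = {name: {k: v for k, v in tool.items() if k != "fn"} for name, tool in TOOLS.items()}
    for _ in range(max_turns):
        reply = model(messages, schemas)
        if "tool_call" not in reply:
            return reply["content"]  # the model answered directly: done
        call = reply["tool_call"]
        result = TOOLS[call["name"]]["fn"](**json.loads(call["arguments"]))
        messages.append({"role": "assistant", "tool_call": call})
        messages.append({"role": "tool", "name": call["name"], "content": str(result)})
    raise RuntimeError("no final answer within max_turns")


print(simple_tools("What is 2 + 3?"))  # The sum is 5.
```

Assuming a real client's responses are translated into this shape, swapping `scripted_model` for it changes only how `reply` is produced; the loop itself stays the same.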

The Tool-Chain Method within OPACA addresses complex tasks through a structured, multi-stage process focused on tool utilization. This method begins with meticulous planning to define the necessary tool calls, followed by the construction of a sequence of these calls designed to achieve the task objective. Crucially, the Tool-Chain Method incorporates a dedicated evaluation phase after each tool call, assessing the result and informing subsequent actions. This iterative process of planning, execution, and evaluation ensures a controlled and deliberate approach to complex problem-solving, aiming to maximize accuracy and minimize unnecessary computations.
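The plan, execute, evaluate cycle can be sketched like this. The planner, tools, and evaluator are hypothetical placeholders (in the described method an LLM would presumably produce the plan and judge each result), and the `"$i"` convention for feeding an earlier step's output into a later call is an assumption made for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class Step:
    tool: str
    args: Dict[str, Any] = field(default_factory=dict)


def resolve(args: Dict[str, Any], results: List[Any]) -> Dict[str, Any]:
    # A string "$i" refers to the output of step i, so later calls can consume earlier results.
    return {
        k: results[int(v[1:])] if isinstance(v, str) and v.startswith("$") else v
        for k, v in args.items()
    }


def tool_chain(
    task: str,
    plan: Callable[[str], List[Step]],
    tools: Dict[str, Callable[..., Any]],
    evaluate: Callable[[Step, Any], bool],
) -> Tuple[List[Any], bool]:
    results: List[Any] = []
    for step in plan(task):  # 1. plan the whole chain up front
        result = tools[step.tool](**resolve(step.args, results))  # 2. execute one call
        if not evaluate(step, result):  # 3. evaluate before continuing
            return results, False  # stop rather than feed a bad result forward
        results.append(result)
    return results, True


def toy_plan(task: str) -> List[Step]:
    # Hypothetical fixed plan; a real planner would derive it from the task text.
    return [
        Step("get_rate", {"src": "EUR", "dst": "USD"}),
        Step("multiply", {"x": 100, "y": "$0"}),
        Step("round2", {"x": "$1"}),
    ]


TOOLS = {
    "get_rate": lambda src, dst: 1.1,  # canned exchange rate, not real data
    "multiply": lambda x, y: x * y,
    "round2": lambda x: round(x, 2),
}


def is_number(step: Step, result: Any) -> bool:
    return isinstance(result, (int, float))


results, ok = tool_chain("Convert 100 EUR to USD", toy_plan, TOOLS, is_number)
print(results[-1], ok)  # 110.0 True
```

Stopping at the first failed evaluation is one design choice; an alternative would be to hand the failing step back to the planner for a revised chain.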

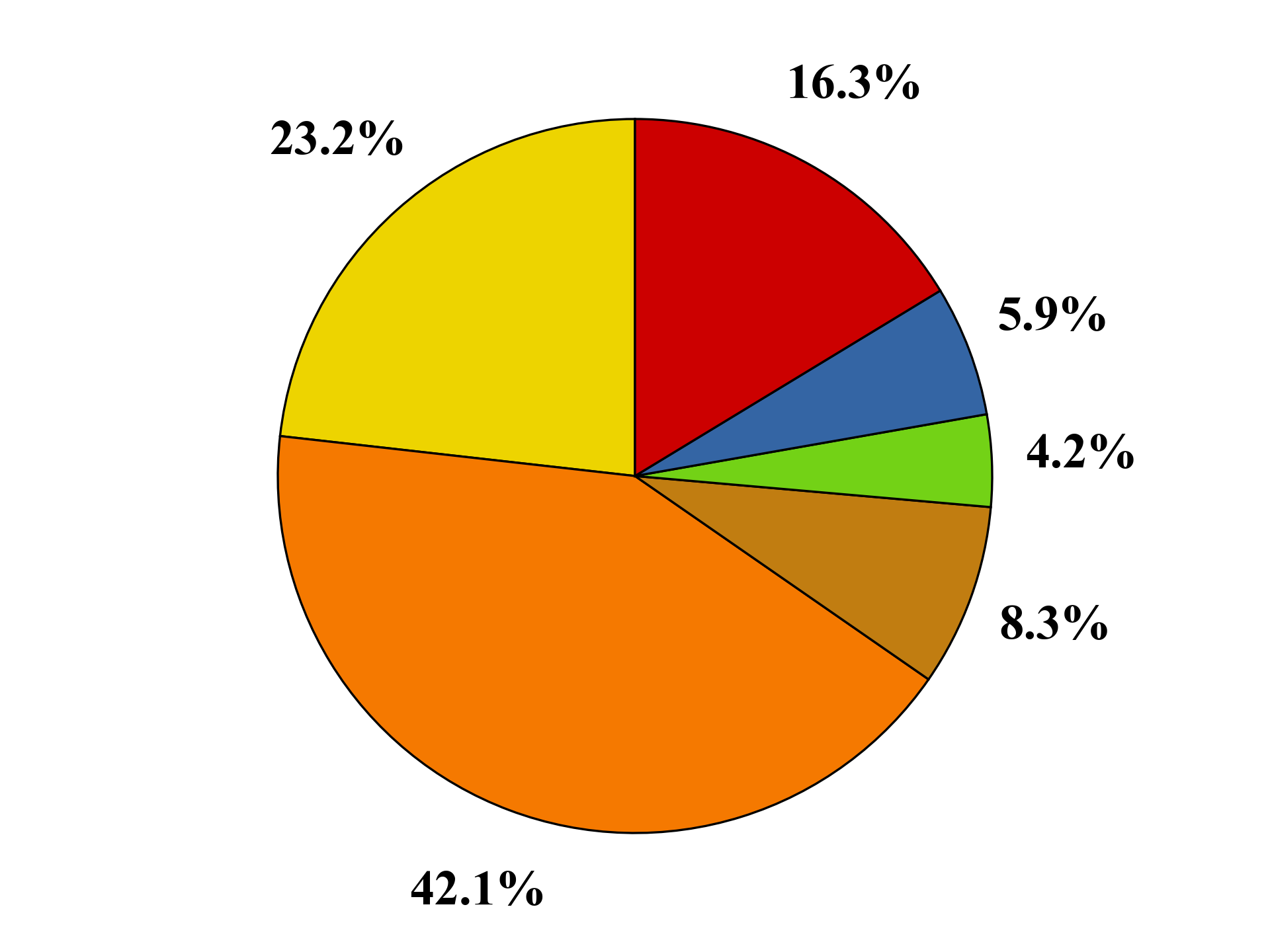

The Orchestration Method within OPACA builds on tool-chaining by dynamically assembling multiple autonomous Agents to address complex, multi-faceted problems. This approach prioritizes modularity and adaptability, allowing the system to reconfigure its problem-solving strategy as needed. Responses from all four prompting methods (Simple, Simple-Tools, Tool-Chain, and Orchestration) received scores ranging from 1.0 to 5.0, a spread of response quality that allows the methods’ efficacy to be compared quantitatively.
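As a small illustration of comparing methods on that scale, the following sketch checks that every score lies within 1.0 to 5.0 and averages the scores per method. The scores are invented for the example, and how responses are actually graded is not specified here.

```python
from statistics import mean
from typing import Dict, List


def summarize_scores(
    scores: Dict[str, List[float]], low: float = 1.0, high: float = 5.0
) -> Dict[str, float]:
    """Validate that every score lies on the [low, high] scale, then average per method."""
    for method, values in scores.items():
        out_of_range = [v for v in values if not low <= v <= high]
        if out_of_range:
            raise ValueError(f"{method}: scores outside [{low}, {high}]: {out_of_range}")
    return {method: round(mean(values), 2) for method, values in scores.items()}


# Made-up per-response scores, for illustration only.
example = {"Simple": [3.0, 4.0, 2.0], "Orchestration": [5.0, 4.0, 4.5]}
print(summarize_scores(example))  # {'Simple': 3.0, 'Orchestration': 4.5}
```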

Evaluation of the prompting methods within OPACA shows that Simple-Tools and Tool-Chain both achieve high rates of correct tool usage, whereas the Orchestration method shows a larger gap between correct and perfect tool usage. This suggests that dynamic agent composition, while increasing modularity, can lead to unnecessary or redundant tool calls even when the task is ultimately solved. In other words, a greater share of Orchestration’s tool usages reach the desired outcome without being the most efficient or direct solution compared with the other methods tested.
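One way to make the distinction between correct and perfect tool usage concrete, assuming that *correct* means every required call was made and *perfect* additionally means no extra or repeated calls; these definitions and the tool names are illustrative, not taken from the paper:

```python
from collections import Counter
from typing import Iterable, List, Sequence, Tuple


def classify(expected: Iterable[str], actual: Iterable[str]) -> Tuple[bool, bool]:
    exp, act = Counter(expected), Counter(actual)
    correct = not (exp - act)  # every required call happened (extras allowed)
    perfect = correct and exp == act  # ...and nothing extra or repeated was called
    return correct, perfect


def usage_rates(runs: Sequence[Tuple[List[str], List[str]]]) -> Tuple[float, float]:
    flags = [classify(expected, actual) for expected, actual in runs]
    n = len(flags)
    return sum(c for c, _ in flags) / n, sum(p for _, p in flags) / n


# Hypothetical (expected, actual) tool-call logs for four tasks.
runs = [
    (["GetWeather"], ["GetWeather"]),  # correct and perfect
    (["GetWeather"], ["GetWeather", "GetWeather"]),  # correct, redundant repeat
    (["BookRoom"], ["ListRooms", "BookRoom"]),  # correct, unnecessary extra call
    (["GetWeather", "BookRoom"], ["GetWeather"]),  # incorrect: BookRoom missing
]
print(usage_rates(runs))  # (0.75, 0.25)
```

The gap between the two rates (here 0.75 versus 0.25) is the kind of drop the paragraph above attributes to redundant calls.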

Beyond the Hype: Why Open-Source LLMs Matter

The OPACA framework distinguishes itself through its deliberate design, eschewing reliance on any single large language model (LLM). This architectural flexibility permits seamless integration with established proprietary models, such as those from OpenAI, alongside a growing ecosystem of high-performing open-source alternatives including Mistral and Qwen models. This adaptability isn’t merely a technical detail; it actively promotes innovation by allowing researchers and developers to experiment with and tailor solutions to precise requirements, circumventing vendor lock-in and fostering a more diverse and accessible AI landscape. The system’s ability to function effectively across different LLMs signifies a departure from rigid AI infrastructure and towards a modular, adaptable approach to complex problem-solving.
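Model independence of this kind usually comes down to coding against a narrow interface. The sketch below is a hypothetical illustration: `ChatBackend`, `EchoBackend`, and `Assistant` are invented names, and `EchoBackend` is an offline placeholder rather than a client for OpenAI, Mistral, or Qwen models.

```python
from typing import Dict, List, Protocol


class ChatBackend(Protocol):
    """Anything that turns a list of chat messages into a reply string."""

    def complete(self, messages: List[Dict[str, str]]) -> str: ...


class EchoBackend:
    """Offline placeholder; a real adapter would wrap a hosted or self-hosted model."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, messages: List[Dict[str, str]]) -> str:
        return f"[{self.name}] {messages[-1]['content']}"


class Assistant:
    """Application logic depends only on the ChatBackend interface, not on a vendor."""

    def __init__(self, backend: ChatBackend) -> None:
        self.backend = backend

    def ask(self, question: str) -> str:
        return self.backend.complete([{"role": "user", "content": question}])


# Hypothetical registry: swapping models is a configuration change, not a redesign.
BACKENDS: Dict[str, ChatBackend] = {
    name: EchoBackend(name) for name in ("openai", "mistral", "qwen")
}

assistant = Assistant(BACKENDS["qwen"])
print(assistant.ask("List the available tools."))  # [qwen] List the available tools.
```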

The shift towards open-source large language models (LLMs) is actively cultivating a more innovative and adaptable artificial intelligence landscape. By diminishing dependence on a limited number of proprietary vendors, researchers and developers gain the freedom to customize and refine LLMs for highly specific applications. This flexibility is particularly crucial for specialized tasks where off-the-shelf solutions prove inadequate, allowing for the creation of AI tools precisely tailored to unique challenges and datasets. Consequently, the open-source approach not only accelerates the pace of development but also democratizes access to advanced AI capabilities, fostering a broader range of participation and ultimately driving more impactful solutions across diverse fields.

The flexibility to interchange large language models (LLMs) without substantial system redesign is fundamentally broadening access to sophisticated artificial intelligence. Recent evaluations demonstrate that different integration methods impact resource utilization in measurable ways; the ‘Simple’ approach, while quickest in execution, demands the highest token consumption. Conversely, ‘Orchestration’ minimizes token usage, though at the cost of increased processing time. This trade-off highlights a crucial point: decoupling the underlying LLM from the application’s architecture empowers developers to optimize for cost, speed, or efficiency, selecting the model best suited to their specific needs and budgetary constraints – a capability previously limited by vendor lock-in and inflexible systems.
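Choosing among methods on that trade-off can be expressed as a weighted cost. In the sketch below, `pick_method` and the profile numbers are hypothetical; only the direction of the trade-off (Simple faster but more token-hungry, Orchestration leaner but slower) comes from the text above.

```python
from typing import Dict, Tuple


def pick_method(
    profiles: Dict[str, Tuple[float, float]],
    token_weight: float,
    time_weight: float,
) -> str:
    """Return the method with the lowest weighted cost.

    profiles maps method -> (mean tokens, mean seconds). Each metric is divided by
    its maximum across methods so the two units become comparable.
    """
    max_tokens = max(t for t, _ in profiles.values())
    max_seconds = max(s for _, s in profiles.values())

    def cost(name: str) -> float:
        tokens, seconds = profiles[name]
        return token_weight * tokens / max_tokens + time_weight * seconds / max_seconds

    return min(profiles, key=cost)


# Invented numbers that only mirror the reported direction of the trade-off.
profiles = {"Simple": (12_000, 4.0), "Orchestration": (6_000, 15.0)}
print(pick_method(profiles, token_weight=1.0, time_weight=0.0))  # Orchestration
print(pick_method(profiles, token_weight=0.0, time_weight=1.0))  # Simple
```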

SAGE: Scaling Towards Truly Intelligent Systems

SAGE represents an evolution of the OPACA framework, specifically designed to tackle intricate challenges requiring tool use within a multi-agent system. This new architecture doesn’t simply apply large language models (LLMs) to problems; it orchestrates a collective of agents, each potentially leveraging specialized tools to break down complex tasks into manageable components. By building upon OPACA’s foundations, SAGE achieves scalability, allowing for the deployment of numerous agents to address problems beyond the capacity of a single LLM. This distributed approach fosters robustness and adaptability, enabling the system to handle a wider range of tool-augmented LLM tasks, from data analysis and code generation to complex reasoning and decision-making, all within a dynamically configurable environment.

SAGE distinguishes itself through a deliberate architectural design centered on modularity, orchestration, and the utilization of open-source large language models. This combination fosters a system capable of exceeding the limitations of single, monolithic AI models; individual components – each responsible for a specific task – can be independently developed, updated, and reused. The orchestration layer then intelligently directs these modules, dynamically assembling workflows to address complex challenges. By embracing open-source LLMs, SAGE avoids vendor lock-in and facilitates community-driven improvements, ultimately leading to more resilient, transparent, and adaptable AI systems poised to tackle an ever-evolving landscape of tasks and data.

Ongoing development of SAGE prioritizes refining the system’s ability to dynamically assemble effective agent teams for specific tasks. This includes investigating algorithms that automatically determine optimal agent compositions based on task requirements and agent capabilities, moving beyond static configurations. Simultaneously, research is directed toward improving tool selection, allowing agents to more intelligently identify and utilize relevant tools from a growing library to enhance performance. Crucially, efforts are underway to explore innovative approaches to knowledge integration, enabling seamless sharing and combination of information between agents and external knowledge sources, ultimately fostering a more cohesive and adaptable reasoning system.
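As a baseline for the tool-selection problem, a system could rank tools by word overlap between the request and each tool's description. This Jaccard-similarity sketch is a deliberately simple swapped-in technique, not SAGE's approach, and the tool library is hypothetical; embedding-based retrieval would be a natural upgrade.

```python
import re
from typing import Dict, List, Set


def words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tools(query: str, library: Dict[str, str], k: int = 2) -> List[str]:
    """Rank tools by Jaccard overlap between the query and each tool description."""
    q = words(query)

    def score(name: str) -> float:
        d = words(library[name])
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    # Highest score first; ties broken alphabetically; tools with no overlap dropped.
    ranked = sorted(library, key=lambda name: (-score(name), name))
    return [name for name in ranked if score(name) > 0][:k]


# Hypothetical tool library: name -> natural-language description.
LIBRARY = {
    "GetWeather": "get the current weather forecast for a city",
    "BookRoom": "book a meeting room for a time slot",
    "SendMail": "send an email message to a recipient",
}
print(select_tools("book a room for the meeting", LIBRARY))  # ['BookRoom', 'GetWeather']
```

Narrowing the library to a few candidates before prompting also keeps the tool list in the prompt short as the library grows.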

The pursuit of elegant agentic AI, as detailed in this paper with SAGE and the OPACA framework, feels… familiar. It’s all very neat – dynamic tool access, scalable multi-agent environments, zero-shot prompting – but one suspects it will inevitably resemble a Rube Goldberg machine built from hastily patched APIs. Barbara Liskov observed that “It’s one of the really hard things about programming, to make sure your abstractions don’t leak.” That’s precisely the issue here. These systems start as controlled experiments, demonstrating clever orchestration, but production environments have a way of exposing the cracks. They’ll call it AI and raise funding, but the underlying reality is always more brittle than the diagrams suggest. The elegant abstractions will leak, the tools will fail in unexpected ways, and someone will be debugging a runaway process at 3 AM, muttering about how it all started as a simple bash script.

The Road Ahead

The presented work, while demonstrating a degree of orchestration within the OPACA framework, merely shifts the locus of failure. The elegance of dynamic tool access will inevitably encounter the brutal realities of production: inconsistent APIs, rate limits, and tools returning data formats no one anticipated. The question isn’t whether these multi-agent systems can solve tasks, but rather what percentage of human effort will be devoted to debugging the inevitable tool-interaction failures. If all evaluation metrics appear promising, it is likely because those metrics fail to adequately capture the cost of maintenance.
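Rate limits, at least, have a well-known mitigation: retry with exponential backoff and jitter. The sketch assumes a hypothetical `RateLimited` exception raised by a tool wrapper and a hypothetical `flaky_tool` for demonstration; any other exception propagates immediately instead of being retried.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error a tool wrapper would raise on, e.g., an HTTP 429 response."""


def call_with_backoff(
    fn: Callable[[], T],
    retries: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry fn on RateLimited with exponential backoff plus jitter; other errors propagate."""
    if retries < 0:
        raise ValueError("retries must be >= 0")
    for attempt in range(retries + 1):
        try:
            return fn()
        except RateLimited:
            if attempt == retries:
                raise  # out of retries: surface the failure instead of hiding it
            delay = base_delay * 2 ** attempt  # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, 0.1 * delay))  # up to 10% jitter
    raise AssertionError("unreachable")


def flaky_tool(failures: int) -> Callable[[], str]:
    """Hypothetical tool that is rate-limited `failures` times before succeeding."""
    state = {"calls": 0}

    def call() -> str:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RateLimited()
        return "ok"

    return call


delays = []
print(call_with_backoff(flaky_tool(2), sleep=delays.append))  # ok
```

Injecting `sleep` keeps the function testable without real waiting; the jitter spreads out retries from many agents that hit the same limit at once.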

Future work will undoubtedly explore scaling these systems to ever-larger agent populations. But history suggests that “infinite scalability” is often a temporary illusion. The complexity of inter-agent communication grows quickly (n agents already have n(n-1)/2 potential pairwise channels), and the benefits of additional agents will diminish rapidly. A more pressing concern is the development of robust error handling and explainability. Knowing that a system failed is trivial; understanding why, given a tangled web of tool calls and agent interactions, remains a significant challenge.
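On the explainability point, a modest first step is recording every tool call as a nested trace so a failure can be walked back to its origin. The `Tracer` class, agent names, and tools below are hypothetical; production systems would more likely use an existing tracing library.

```python
import functools
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class Span:
    agent: str
    tool: str
    args: Dict[str, Any]
    ok: bool = True
    error: Optional[str] = None
    children: List["Span"] = field(default_factory=list)


class Tracer:
    """Hypothetical tracer that records nested tool calls across agents."""

    def __init__(self) -> None:
        self.roots: List[Span] = []
        self._stack: List[Span] = []

    def traced(self, agent: str, tool: str) -> Callable:
        def decorate(fn: Callable[..., Any]) -> Callable[..., Any]:
            @functools.wraps(fn)
            def wrapper(**kwargs: Any) -> Any:
                span = Span(agent, tool, kwargs)
                # Attach to the currently running call, or start a new top-level trace.
                (self._stack[-1].children if self._stack else self.roots).append(span)
                self._stack.append(span)
                try:
                    return fn(**kwargs)
                except Exception as exc:
                    span.ok, span.error = False, repr(exc)
                    raise
                finally:
                    self._stack.pop()
            return wrapper
        return decorate

    def failure_path(self) -> List[str]:
        """Follow failing calls from the top-level trace down to the deepest one."""
        path: List[str] = []
        spans = self.roots
        while True:
            failed = next((s for s in spans if not s.ok), None)
            if failed is None:
                return path
            path.append(f"{failed.agent}.{failed.tool}")
            spans = failed.children


tracer = Tracer()


@tracer.traced("calendar-agent", "CheckCalendar")
def check_calendar(day: str) -> bool:
    raise ValueError(f"unexpected date format: {day!r}")


@tracer.traced("booking-agent", "BookRoom")
def book_room(day: str) -> bool:
    return check_calendar(day=day)


@tracer.traced("orchestrator", "Plan")
def plan(request: str) -> bool:
    return book_room(day=request)


try:
    plan(request="next Tuesday")
except ValueError:
    pass
print(tracer.failure_path())
# ['orchestrator.Plan', 'booking-agent.BookRoom', 'calendar-agent.CheckCalendar']
```

The failure path answers "why" at the level of which agent's call broke and with what arguments, which is exactly the information lost when only the final error reaches the user.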

Ultimately, the field will need to confront a fundamental truth: intelligence isn’t simply about solving problems; it’s about solving them efficiently. The cost of orchestrating these complex systems – both computational and human – must be carefully considered. The pursuit of ever-more-complex architectures should not eclipse the value of simpler, more reliable solutions.

Original article: https://arxiv.org/pdf/2601.09750.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-18 08:08