Author: Denis Avetisyan

A new study of developer activity reveals that the integration of AI tools isn’t simply boosting productivity, but fundamentally reshaping how code is written and reused.

Longitudinal analysis of IDE telemetry data demonstrates nuanced changes in coding patterns, context switching, and code reuse practices following the adoption of AI-assisted development tools.

Despite the growing prevalence of AI-powered coding assistants, a comprehensive understanding of their long-term impact on software development practices remains elusive. This research, presented in ‘Evolving with AI: A Longitudinal Analysis of Developer Logs’, addresses this gap through a two-year study combining telemetry data from 800 developers with survey responses from 62 professionals. Our findings reveal that while developers report productivity gains from AI assistance, detailed analysis of their IDE activity demonstrates a more complex picture of increased coding and editing alongside shifts in code reuse and context switching patterns. How will these evolving workflows reshape the future of software engineering and the design of intelligent development tools?

Deconstructing the Software Lifecycle: A Necessary Disassembly

Conventional software development typically proceeds through cycles of planning, coding, testing, and deployment, a process that repeats as requirements evolve or issues emerge. This iterative approach allows for adaptation, but it also demands substantial time and manual effort. Developers spend a large share of their time debugging, refactoring, and verifying that code works as intended, rather than building new functionality. The complexity of building and maintaining software, combined with the need for thorough quality assurance, means projects easily slip past their initial schedules and estimates, exposing the limits of purely manual workflows when requirements change quickly.

Sustaining high code quality represents a persistent obstacle throughout the software development lifecycle, directly influencing both project completion speed and the dependability of the final product. Errors and vulnerabilities, if left unchecked, accumulate technical debt, necessitating costly rework and potentially leading to system failures. This challenge isn’t merely about eliminating bugs; it encompasses factors like code complexity, maintainability, and adherence to established coding standards. Rigorous testing, code reviews, and the implementation of automated quality assurance tools are essential, yet often prove insufficient without a proactive commitment to best practices and a culture of continuous improvement. Ultimately, prioritizing code quality isn’t simply a matter of preventing issues, but of building software that is robust, scalable, and trustworthy over the long term.

Contemporary applications, built on intricate architectures and large codebases, strain traditional software development methods. Their scale and interdependence call for approaches that prioritize modularity, automation, and continuous integration. Teams decompose monolithic applications into manageable components with microservices and serverless computing, and turn to low-code/no-code platforms to speed up routine development, shortening development cycles and improving resilience. Advanced testing frameworks, static analysis tools, and robust version control systems are also essential for maintaining code quality and supporting collaboration, which limits technical debt and keeps systems maintainable as requirements evolve.

The Algorithmic Revolution: Are We Witnessing a Paradigm Shift?

AI coding assistants use Large Language Models (LLMs) to automate parts of the software development lifecycle that previously required significant manual effort. They are well suited to generating boilerplate code, completing repetitive code blocks, and translating code between languages. Because LLMs are trained on vast datasets of existing code, they can predict and suggest completions with increasing accuracy and identify and fix common coding errors. By cutting the time spent on routine coding, these tools can shorten development cycles and free developers to focus on complex problem-solving and architectural design.

AI coding assistants function as integrated development environment (IDE) extensions or standalone applications designed to operate within the developer’s existing workflow. These tools leverage large language models to analyze code as it is being written, providing real-time suggestions for code completion, identifying potential errors, and proposing alternative implementations. Integration is commonly achieved through plugins for popular IDEs such as Visual Studio Code, IntelliJ IDEA, and others, allowing developers to receive suggestions directly within their coding environment. The suggestions are typically displayed as inline code snippets or through dedicated panels, enabling developers to accept, reject, or modify them with minimal disruption to their coding process. This direct integration minimizes context switching and allows for a more fluid and interactive coding experience.
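To make the accept/reject/modify loop concrete, here is a minimal sketch of how suggestion outcomes could be tallied into an acceptance rate. The `SuggestionEvent` type, its outcome labels, and the choice to count modified suggestions as kept are hypothetical assumptions for illustration, not the schema of any real assistant or of the study.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical outcome labels for an inline suggestion; real assistants
# log their own, vendor-specific event names.
OUTCOMES = {"accepted", "rejected", "modified"}


@dataclass(frozen=True)
class SuggestionEvent:
    developer: str
    outcome: str  # must be one of OUTCOMES

    def __post_init__(self) -> None:
        if self.outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {self.outcome!r}")


def acceptance_rate(events: list[SuggestionEvent], count_modified: bool = True) -> float:
    """Fraction of shown suggestions the developer kept.

    If count_modified is True, a suggestion that was accepted and then
    edited ("modified") also counts as kept.
    """
    if not events:
        return 0.0
    tally = Counter(e.outcome for e in events)
    kept = tally["accepted"] + (tally["modified"] if count_modified else 0)
    return kept / len(events)


events = [
    SuggestionEvent("dev-a", "accepted"),
    SuggestionEvent("dev-a", "rejected"),
    SuggestionEvent("dev-a", "modified"),
    SuggestionEvent("dev-a", "rejected"),
]
print(acceptance_rate(events))                        # 0.5
print(acceptance_rate(events, count_modified=False))  # 0.25
```

Whether a modified suggestion counts as "accepted" is a design choice; reporting both rates makes that choice visible.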

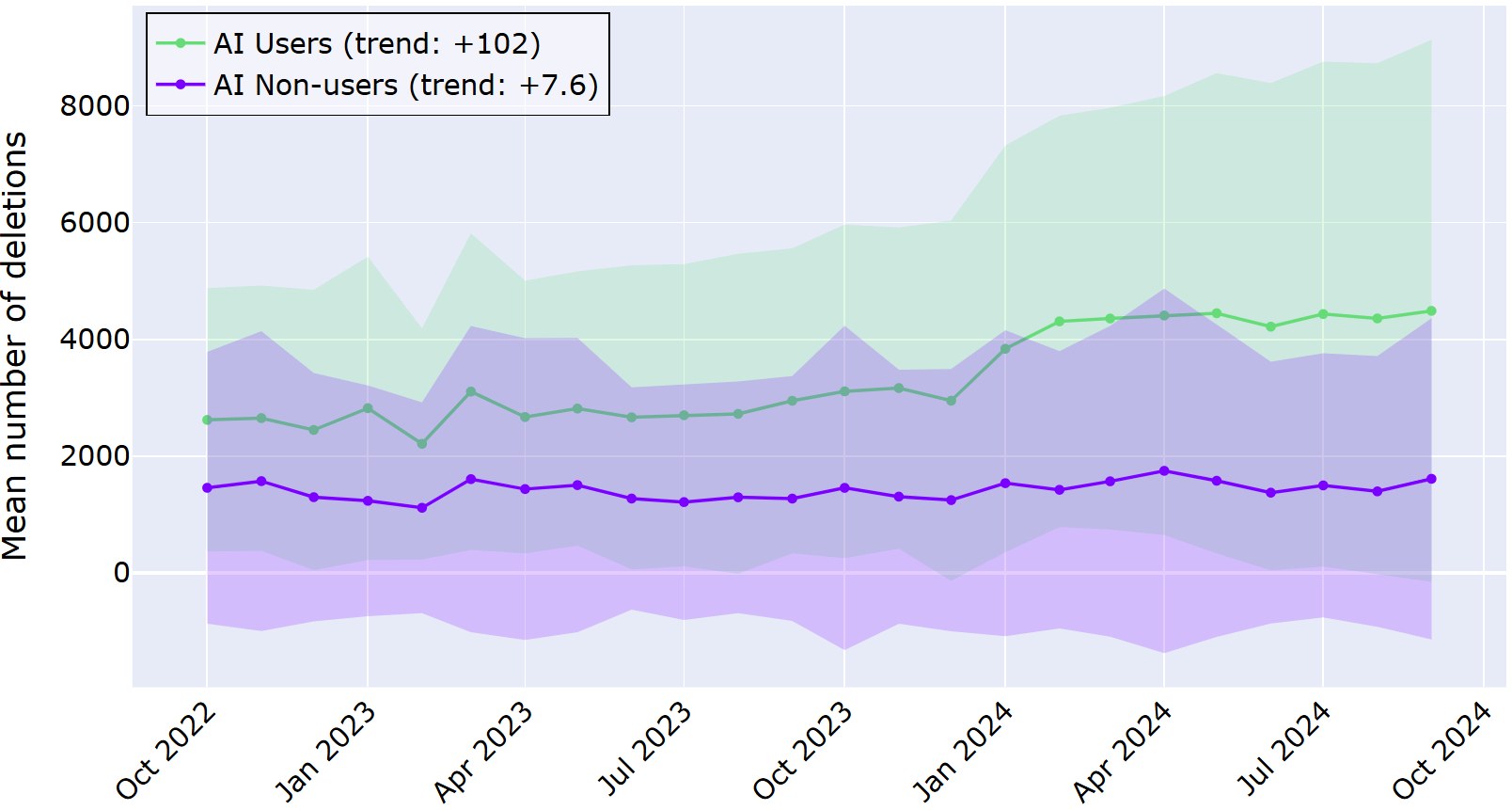

Analysis of developer activity data reveals a substantial difference in editing behavior between users of AI coding assistants and those who do not use such tools. AI users showed an average increase of 587 characters per month in combined typing and deleting activity, compared with an increase of 75 characters per month for non-AI users, a trend roughly 7.8 times steeper. This statistically significant disparity suggests that AI assistance does not simply speed up coding but changes the coding process itself, possibly through more frequent experimentation, refinement, and iterative adjustment prompted by real-time suggestions and automated code completion.
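An "increase of N characters per month" can be read as the slope of a trend line through monthly totals. The sketch below assumes that reading and fits an ordinary least-squares slope for one developer; the function name and sample numbers are hypothetical, and the study may have used a different model (for example, one that also accounts for differences between developers).

```python
def monthly_slope(counts: list[float]) -> float:
    """Ordinary least-squares slope of monthly totals against month index.

    counts[i] is the activity total (e.g. characters typed plus deleted)
    in month i; the result is the average change per month.
    """
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two months of data")
    mean_x = (n - 1) / 2  # mean of month indices 0..n-1
    mean_y = sum(counts) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    return sxy / sxx


# Hypothetical monthly totals for one developer over four months.
print(monthly_slope([100, 130, 150, 190]))  # 29.0
```

Averaging such slopes within the AI and non-AI groups yields the kind of per-month contrast described above.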

Dissecting the Workflow: Methodologies for Measuring Impact

IDE telemetry captures granular data on developer actions inside the integrated development environment, providing objective measurements of coding behavior. Typical metrics include keystroke frequency, code completion acceptance rates, debugging session durations, and how often specific refactoring operations are used. Aggregating and analyzing these metrics across a user base makes it possible to identify common coding patterns, pinpoint workflow inefficiencies, and quantify how tooling changes or new features affect developer productivity. Because telemetry is recorded automatically rather than self-reported, it supports statistical comparisons between user groups or time periods and gives data-driven improvements to the development process a reliable basis.
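As a minimal sketch of the aggregation step, assume each telemetry record is a hypothetical `(developer, ISO timestamp, event_type)` tuple. Raw events can then be bucketed into per-developer monthly counts, the kind of series that month-over-month comparisons need. The event names below are invented; real IDE telemetry schemas differ by vendor.

```python
from collections import defaultdict
from datetime import datetime
from typing import Iterable

Event = tuple[str, str, str]  # hypothetical: (developer, ISO timestamp, event_type)


def monthly_counts(events: Iterable[Event]) -> dict[tuple[str, str, str], int]:
    """Count events per (developer, "YYYY-MM" month, event_type)."""
    counts: defaultdict[tuple[str, str, str], int] = defaultdict(int)
    for developer, timestamp, event_type in events:
        month = datetime.fromisoformat(timestamp).strftime("%Y-%m")
        counts[(developer, month, event_type)] += 1
    return dict(counts)


log = [
    ("dev-a", "2024-03-02T10:15:00", "delete"),
    ("dev-a", "2024-03-20T09:00:00", "delete"),
    ("dev-a", "2024-04-01T08:30:00", "paste"),
    ("dev-b", "2024-03-05T14:00:00", "debug_start"),
]
for key, n in sorted(monthly_counts(log).items()):
    print(key, n)
```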

Developer surveys gather self-reported data on user experience and satisfaction with a given tool or workflow. They typically combine closed-ended questions, such as rating scales, with open-ended ones to assess perceived usability, identify pain points, and gauge overall satisfaction. This subjective data complements objective metrics by supplying context and rationale for observed behavioral patterns. Analyzing survey responses helps identify areas for product improvement and prioritize development effort according to user-reported needs and preferences. Well-designed surveys with sufficient participation offer insights that are difficult to obtain through telemetry alone.
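Closed-ended survey items are often answered on a 5-point agreement scale. A common way to summarize such an item, assumed here rather than taken from the study, is the median together with the "top-two-box" share (responses of 4 or 5). The `summarize_likert` helper and the sample answers are hypothetical.

```python
from statistics import median
from typing import NamedTuple


class LikertSummary(NamedTuple):
    n: int
    median_score: float
    top_two_box: float  # share of responses in the two highest categories


def summarize_likert(responses: list[int], scale_max: int = 5) -> LikertSummary:
    """Summarize answers to one closed-ended item on a 1..scale_max scale."""
    if not responses:
        raise ValueError("no responses")
    if any(not 1 <= r <= scale_max for r in responses):
        raise ValueError("response outside the 1..scale_max range")
    top_two = sum(1 for r in responses if r >= scale_max - 1)
    return LikertSummary(
        n=len(responses),
        median_score=float(median(responses)),
        top_two_box=top_two / len(responses),
    )


# Hypothetical answers to "The AI assistant makes me more productive."
print(summarize_likert([5, 4, 4, 3, 2, 5, 4, 1]))
```

The median is used instead of the mean because Likert categories are ordered but not evenly spaced.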

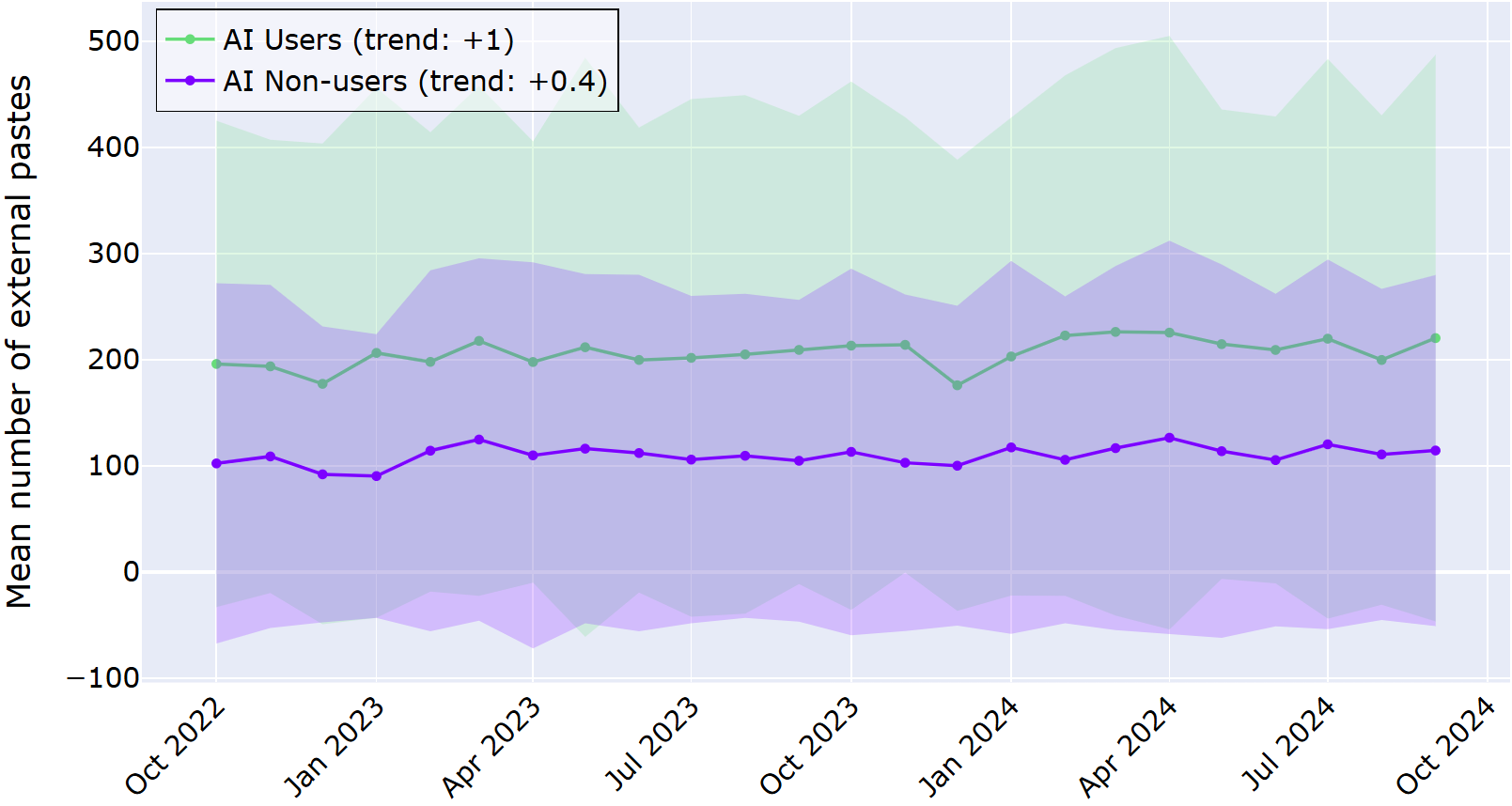

Analysis of IDE telemetry data indicates a statistically significant difference in editing behavior between developers using AI assistance and those who do not. Code deletions by AI users rose by an average of 102 per month, compared with 7.6 for non-AI users. AI users also averaged 1 external code paste per month, against 0.4 for non-AI users. Together, these metrics suggest that developers using AI tools revise their code more often and draw more heavily on external code during development.
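Whether a gap such as 102 versus 7.6 deletions per month is statistically significant depends on the spread across developers, not only on the group means. The study's exact test is not described here; as one common option, the sketch below computes Welch's t statistic and the Welch-Satterthwaite degrees of freedom for two groups of per-developer values. The sample values are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    Does not assume equal variances in the two groups.
    """
    if len(a) < 2 or len(b) < 2:
        raise ValueError("each group needs at least two values")
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Invented per-developer monthly changes in deletions for two small groups.
ai_users = [90, 110, 100, 105, 95]
non_ai_users = [5, 10, 8, 6, 11]
t, df = welch_t(ai_users, non_ai_users)
print(f"t = {t:.2f}, df = {df:.1f}")
```

With SciPy installed, `scipy.stats.ttest_ind(ai_users, non_ai_users, equal_var=False)` runs the same Welch test and also returns a p-value.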

Beyond Automation: Envisioning a Future of Autonomous Codecraft

Agentic coding systems signify a substantial leap forward in software development, moving beyond simple code completion to encompass the autonomous generation of extensive code blocks with reduced human oversight. These systems aren’t merely tools that assist developers line-by-line; instead, they function as collaborators capable of independently tackling larger programming tasks. This capability stems from advanced AI models trained on vast code repositories, enabling them to understand complex requirements and translate them into functional code. The implication is a shift in the development process, where developers can focus on high-level design, architectural considerations, and rigorous testing, while the AI handles the more granular and repetitive aspects of code creation. Consequently, this evolution promises increased development speed, reduced error rates, and the potential to address the growing demand for software solutions across diverse industries.

The increasing capabilities of agentic coding systems are poised to redefine the developer’s role, moving beyond the traditional task of writing code line-by-line. As artificial intelligence assumes greater responsibility for code generation, human developers are likely to transition into higher-level functions focused on system architecture and quality assurance. This shift necessitates a focus on skills such as code review, design thinking, and the ability to define complex system requirements. Rather than being solely responsible for implementation, developers will increasingly oversee the AI’s output, ensuring it aligns with project goals and maintains necessary standards of robustness and security – essentially becoming curators and strategists of code, rather than its primary authors.

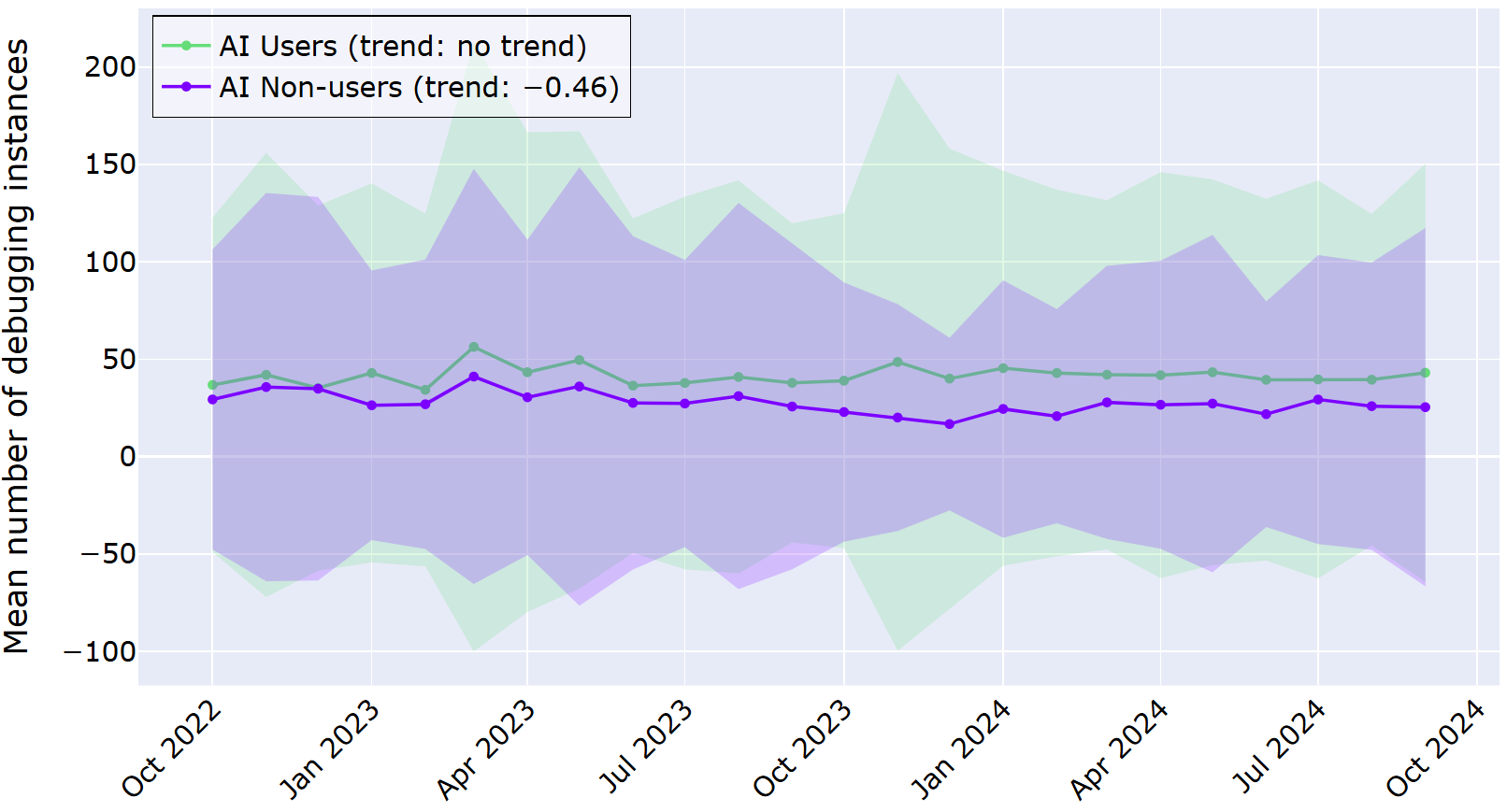

Analysis of coding workflows shows that developers using AI assistance record an average of 0.46 fewer debugging events per month. One interpretation is that AI helps prevent some bugs from being introduced rather than only helping to fix them, although fewer debugging sessions do not by themselves demonstrate higher code quality. AI users also switch between tasks 6.4 times more frequently per month than developers without assistance, which may point to more complex projects or a greater capacity for parallel work supported by AI-driven tools.
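How a "context switch" is detected is a definitional choice. Assuming, for illustration, that each telemetry event carries a timestamp and the active file (or task), and that a switch is any change of that context between consecutive events, a count can be computed as follows. The definition and the function name are hypothetical, not necessarily what the study used.

```python
from typing import Iterable


def count_context_switches(events: Iterable[tuple[str, str]]) -> int:
    """Count changes of context between consecutive events.

    Each event is a hypothetical (ISO timestamp, context) pair, where the
    context might be the active file or task. ISO timestamps in a single
    format sort chronologically as strings, so events are ordered by that
    string; a switch is any change from one event's context to the next.
    """
    switches = 0
    previous = None
    for _, context in sorted(events, key=lambda event: event[0]):
        if previous is not None and context != previous:
            switches += 1
        previous = context
    return switches


session = [
    ("2024-03-02T09:00", "parser.py"),
    ("2024-03-02T09:05", "parser.py"),
    ("2024-03-02T09:10", "test_parser.py"),
    ("2024-03-02T09:20", "parser.py"),
    ("2024-03-02T09:30", "README.md"),
]
print(count_context_switches(session))  # 3
```

A production pipeline would likely also ignore idle gaps or very brief visits, which this sketch does not do.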

The study’s findings on altered context switching and coding activity present a fascinating paradox. Developers believe AI streamlines their workflow, yet telemetry data reveals a more complex reality of increased editing and code manipulation. This echoes Carl Friedrich Gauss’s sentiment: “If others would think as hard as I do, they would not think so differently.” The perceived efficiency gain may mask a deeper cognitive load, as developers spend more time refining AI-generated suggestions or integrating them into existing projects. The research doesn’t invalidate the potential of AI-assisted development; rather, it suggests that observing a change in productivity isn’t enough. Truly understanding its impact requires a deeper analysis of the underlying mechanisms, the ‘how’ and ‘why’ of developer interaction.

What’s Next?

The productivity gains developers perceive from AI-assisted development look less straightforward when examined through detailed IDE telemetry. This isn’t a refutation of their benefit, but a call for more precise accounting. The observed increase in coding and editing activity suggests a shift in developer labor, not necessarily a reduction of it. The question isn’t whether AI tools help, but how they restructure the work itself. Future research must move beyond simple metrics such as lines of code or commit frequency and investigate the cognitive load of integrating these tools into established workflows.

The shifts in code reuse and context switching are particularly intriguing. Are developers simply delegating more trivial tasks to AI, freeing themselves for more complex problem-solving, or are they perpetually validating, refining, and correcting AI-generated code? The rise in deletions and editing activity hints at the latter. Every patch is a philosophical confession of imperfection. Longitudinal studies tracking developer frustration, measured perhaps through sentiment analysis of commit messages, could reveal the true cost of AI assistance.

Ultimately, the best hack is understanding why it worked. This requires moving beyond treating AI as a simple force multiplier and examining its impact on the fundamental processes of software creation. The goal shouldn’t be to automate programming entirely, but to understand how humans and machines can collaborate most effectively – even if that collaboration looks messier, more iterative, and ultimately, more human than initially anticipated.

Original article: https://arxiv.org/pdf/2601.10258.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-17 08:33