Author: Denis Avetisyan

A new field study reveals that offering timely code quality assistance, integrated seamlessly into a developer’s workflow, boosts engagement and reduces cognitive load.

Research demonstrates that proactive AI suggestions delivered at natural workflow boundaries significantly improve developer experience and code quality compared to reactive, on-demand assistance.

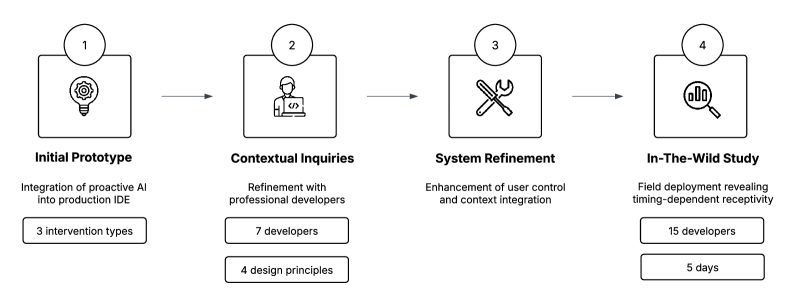

While current AI coding tools often demand explicit prompting, the potential of proactively anticipating developer needs remains largely unexplored. This challenge motivated ‘Developer Interaction Patterns with Proactive AI: A Five-Day Field Study’, a field investigation into how developers respond to AI assistance integrated directly into their workflow. The findings reveal that proactively delivered code quality suggestions, particularly those timed to natural workflow boundaries, significantly increase engagement and reduce cognitive load compared to reactive interventions. How can these insights inform the design of truly collaborative, context-aware AI assistants that seamlessly integrate into the daily practice of software development?

Deconstructing the Development Bottleneck

Despite the advancements in software engineering, modern development frequently encounters bottlenecks stemming from inherent repetition and the ever-present risk of code defects. A significant portion of a developer’s time is often consumed by boilerplate code, configuration, and routine testing – tasks that, while necessary, divert attention from more innovative problem-solving. This constant need to address foundational elements, coupled with the complexities of modern systems, increases the likelihood of introducing bugs or vulnerabilities. Consequently, projects can suffer from delays, increased costs, and a diminished capacity for long-term maintainability, even with highly skilled engineers at the helm. The prevalence of these issues highlights a critical need for tools and methodologies that proactively address these fundamental challenges within the development lifecycle.

The modern development landscape often forces developers into a relentless cycle of context-switching, a practice demonstrably detrimental to both productivity and code quality. Frequent interruptions – from meetings and instant messages to shifting priorities and debugging unrelated issues – fracture concentration and compel the mind to repeatedly reload information. This cognitive disruption isn’t merely an annoyance; research indicates it correlates with increased error rates, as developers are more likely to overlook critical details or introduce logical flaws when their thought processes are continually interrupted. Consequently, timelines stretch and technical debt accumulates, as the resulting codebase becomes fragmented and difficult to navigate, ultimately hindering future development efforts.

Current software development tooling largely operates as a quality control gate after the creative act of coding, relying heavily on static analysis to identify potential issues in completed code blocks. This reactive approach presents a significant bottleneck, as developers often spend considerable time rectifying errors that could have been avoided with real-time guidance. While effective at flagging problems, these tools offer limited proactive support during the initial stages of development, failing to assist with design decisions, suggest best practices, or anticipate potential pitfalls as code is being written. The consequence is a cycle of coding, analyzing, and revising, which disrupts the developer’s flow and increases the risk of introducing further complications, ultimately impacting both project speed and the long-term maintainability of the software.

ProAIDE: Shifting the Paradigm to Proactive Assistance

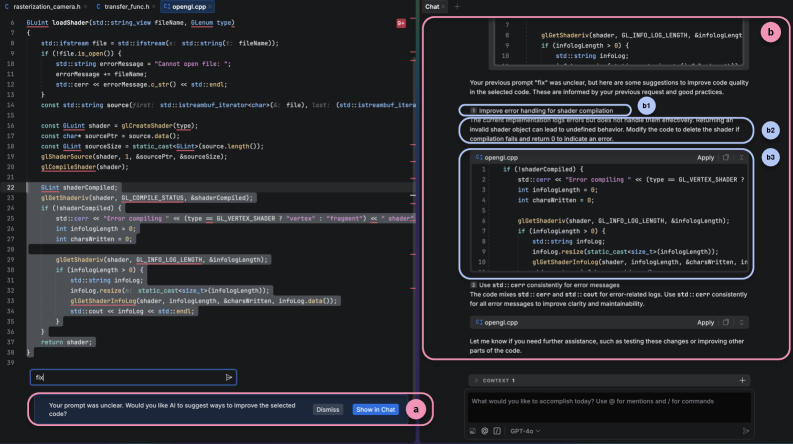

ProAIDE functions as a fully integrated component of the In-IDE Assistant, shifting the paradigm from reactive error detection to proactive code improvement. This feature delivers real-time suggestions directly within the developer’s coding environment as code is being written. These suggestions are designed to address potential code quality issues – encompassing aspects like style, efficiency, and adherence to best practices – before they manifest as errors or bugs. By offering immediate feedback, ProAIDE aims to reduce debugging time and contribute to a higher overall standard of code quality throughout the development lifecycle.

ProAIDE utilizes a Large Language Model (LLM) to provide assistance throughout the entire coding workflow. This LLM is trained on a substantial corpus of code and documentation, enabling it to predict likely developer intentions based on the current code context, including previously written code, imported libraries, and even cursor position. Unlike traditional assistance features that typically analyze code after it’s written, ProAIDE actively monitors the coding process and offers suggestions for code completion, potential bug fixes, and improved code structure during development, aiming to reduce errors and increase developer velocity.

The utility of ProAIDE’s suggestions is directly correlated to their contextual relevance; suggestions that do not align with the developer’s current coding task or the specifics of the existing codebase are unlikely to be adopted. This necessitates a robust understanding of both the immediate code context – including variable names, function calls, and recent edits – and the broader project structure and dependencies. Irrelevant suggestions introduce noise and disrupt workflow, diminishing user trust in the assistance feature. Consequently, the underlying Large Language Model is specifically trained to prioritize suggestions grounded in these contextual factors, evaluating code semantics and project-specific conventions to maximize acceptance rates and developer productivity.

Intelligent Triggers: Orchestrating Seamless Integration

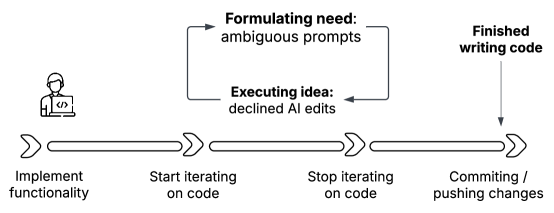

ProAIDE incorporates trigger mechanisms to address potential issues in user interaction with the AI assistance. Ambiguous Prompt Detection analyzes user requests for clarity, initiating a dialogue to refine the input before processing. When an AI-suggested code edit is declined, the Declined AI Edit Follow-up trigger activates, presenting alternative solutions or explanations to the user. These triggers are designed to proactively address misunderstandings and provide continued support even after an initial suggestion is rejected, enhancing the overall user experience and increasing the likelihood of successful code modifications.

Post-Commit Review within ProAIDE functions by analyzing committed code changes and offering suggestions for improvement after the primary development workflow is complete. This approach leverages a “Workflow Boundary” – the point of code commit – to minimize disruption to the developer’s concentration. Instead of immediate, in-line suggestions, the review occurs when the developer is naturally transitioning between tasks, reducing cognitive load and increasing the likelihood of suggestion acceptance. The system identifies potential issues such as code style violations, logical errors, or opportunities for optimization, presenting them as non-blocking recommendations for future iterations of the codebase.
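The idea of holding suggestions until a workflow boundary can be illustrated with a minimal sketch. This is not ProAIDE’s implementation; the `Suggestion` and `BoundaryQueue` names, the file names, and the messages are all hypothetical, and the sketch only assumes that suggestions found during editing are stored and released once the affected file is committed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Suggestion:
    """A hypothetical code quality suggestion tied to one file."""
    file: str
    message: str


@dataclass
class BoundaryQueue:
    """Collects suggestions during editing and releases them at a commit."""
    pending: List[Suggestion] = field(default_factory=list)

    def on_edit(self, suggestion: Suggestion) -> List[Suggestion]:
        # During active coding nothing is shown; the suggestion is only stored.
        self.pending.append(suggestion)
        return []

    def on_commit(self, committed_files: List[str]) -> List[Suggestion]:
        # At the commit boundary, release suggestions for the committed files
        # and keep the rest for a later commit.
        committed = set(committed_files)
        ready = [s for s in self.pending if s.file in committed]
        self.pending = [s for s in self.pending if s.file not in committed]
        return ready


queue = BoundaryQueue()
queue.on_edit(Suggestion("parser.py", "Consider extracting the retry loop into a helper."))
queue.on_edit(Suggestion("cli.py", "Unused import: os."))

# Only the suggestion for the committed file is surfaced, as a non-blocking list.
shown = queue.on_commit(["parser.py"])
print([s.message for s in shown])
```

The design choice worth noting is that `on_edit` never returns anything to display, so the developer is not interrupted mid-task; whatever is not committed simply waits for the next boundary.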

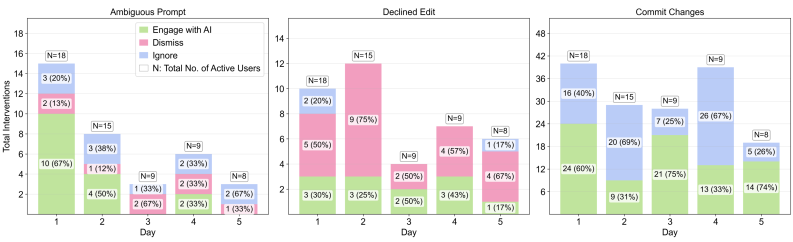

ProAIDE’s intelligent triggers are designed to offer assistance to developers at optimal moments, minimizing disruption to their workflow. These triggers, including Ambiguous Prompt Detection, Declined AI Edit Follow-up, and Post-Commit Review, are strategically placed at workflow boundaries or in response to specific user actions. Data indicates that interventions occurring at the “Commit Changes” stage demonstrate the highest user engagement, with a reported rate of 52%. This suggests that providing suggestions after a code change is committed – rather than during active coding – is perceived as less intrusive and more readily accepted by developers.
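As a rough illustration of how the three triggers map onto user actions, the sketch below routes IDE events to a trigger. The event names, the `select_trigger` function, and the `prompt_is_ambiguous` flag are assumptions made for this example; how ProAIDE actually detects an ambiguous prompt is not described here, so that decision is passed in as a plain boolean.

```python
from enum import Enum
from typing import Optional


class Event(Enum):
    """Hypothetical IDE events the assistant might observe."""
    PROMPT_SUBMITTED = "prompt_submitted"
    AI_EDIT_DECLINED = "ai_edit_declined"
    CHANGES_COMMITTED = "changes_committed"
    KEYSTROKE = "keystroke"


class Trigger(Enum):
    """The three triggers named in the article."""
    AMBIGUOUS_PROMPT_DETECTION = "Ambiguous Prompt Detection"
    DECLINED_AI_EDIT_FOLLOW_UP = "Declined AI Edit Follow-up"
    POST_COMMIT_REVIEW = "Post-Commit Review"


def select_trigger(event: Event, prompt_is_ambiguous: bool = False) -> Optional[Trigger]:
    """Return the trigger to fire for an event, or None to stay silent."""
    if event is Event.PROMPT_SUBMITTED and prompt_is_ambiguous:
        return Trigger.AMBIGUOUS_PROMPT_DETECTION
    if event is Event.AI_EDIT_DECLINED:
        return Trigger.DECLINED_AI_EDIT_FOLLOW_UP
    if event is Event.CHANGES_COMMITTED:
        return Trigger.POST_COMMIT_REVIEW
    # Ordinary typing and clear prompts do not interrupt the developer.
    return None


for event in Event:
    print(event.name, "->", select_trigger(event, prompt_is_ambiguous=True))
```

Returning `None` for everything else reflects the point made above: assistance is tied to boundaries and explicit user actions, not to active coding.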

Measuring Impact: Observing Development in the Wild

ProAIDE’s effectiveness isn’t assessed in controlled laboratory settings, but rather through In-Situ Evaluation – a method prioritizing the observation of developers within their natural work environments. This approach captures genuine interactions with the AI assistant, providing a more realistic understanding of its utility and usability. Researchers embed ProAIDE directly into ongoing software development projects, allowing for the collection of data reflecting authentic coding practices, interruptions, and decision-making processes. By observing developers as they routinely utilize the tool, the evaluation transcends the limitations of artificial scenarios and offers valuable insights into how ProAIDE integrates into – and impacts – real-world software creation. This commitment to ecological validity ensures that findings accurately represent the potential benefits and challenges of ProAIDE when deployed beyond the research context.

ProAIDE distinguishes itself through a fundamental commitment to user control, recognizing that developers require tools that adapt to their workflows, not the other way around. The system isn’t prescriptive; instead, it offers extensive customization options, allowing developers to fine-tune ProAIDE’s behavior to align with individual preferences and project-specific needs. This tailoring extends to suggestion filtering, notification frequency, and the level of automation, ensuring the AI assistant remains a supportive partner rather than an intrusive one. By prioritizing adaptability, ProAIDE aims to minimize disruption and maximize developer productivity, fostering a seamless integration into existing development environments and accommodating a diverse range of coding styles and project requirements.
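To make the customization dimensions concrete, here is a small sketch of what per-developer settings might look like. The `AssistantSettings` fields and the `filter_suggestions` helper are hypothetical names chosen for illustration, not ProAIDE’s actual options; they simply mirror the three knobs mentioned above (suggestion filtering, notification frequency, and level of automation).

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class QualitySuggestion:
    """A hypothetical suggestion with a category used for filtering."""
    category: str
    message: str


@dataclass
class AssistantSettings:
    """Hypothetical per-developer preferences."""
    muted_categories: FrozenSet[str] = frozenset()  # suggestion filtering
    max_suggestions_per_review: int = 3             # notification frequency
    auto_apply: bool = False                        # level of automation


def filter_suggestions(
    suggestions: List[QualitySuggestion], settings: AssistantSettings
) -> List[QualitySuggestion]:
    """Drop muted categories, then cap how many suggestions are shown."""
    allowed = [s for s in suggestions if s.category not in settings.muted_categories]
    return allowed[: settings.max_suggestions_per_review]


settings = AssistantSettings(muted_categories=frozenset({"style"}), max_suggestions_per_review=2)
candidates = [
    QualitySuggestion("style", "Line exceeds 100 characters."),
    QualitySuggestion("logic", "Loop never terminates when n is 0."),
    QualitySuggestion("performance", "Repeated list lookup inside a loop."),
    QualitySuggestion("logic", "Return value of save() is ignored."),
]
visible = filter_suggestions(candidates, settings)
print([s.message for s in visible])
```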

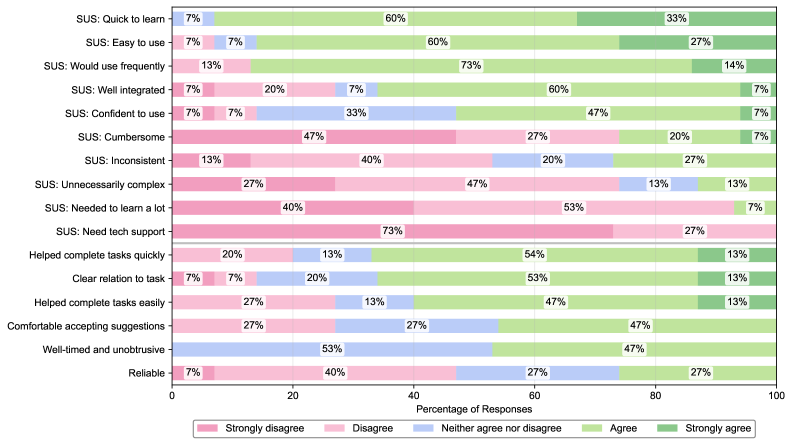

Developer satisfaction with ProAIDE was measured using the System Usability Scale, yielding a score of 72.8 (95% confidence interval [64.1, 81.5]), which indicates a generally positive user experience. Beyond overall satisfaction, the analysis found that proactive suggestions took less time to interpret: developers interpreted suggestions delivered before a problem arose in an average of 45.4 seconds, compared with 101.4 seconds for reactive suggestions, those offered after an issue was detected. A Wilcoxon signed-rank test (p=0.0016) confirms that this difference is statistically significant, suggesting that ProAIDE’s proactive AI not only enhances usability but also improves developer efficiency.
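For readers unfamiliar with how a System Usability Scale score is derived, the standard scoring rule is sketched below: ten items rated 1 to 5, where odd-numbered items contribute (rating - 1), even-numbered items contribute (5 - rating), and the sum is multiplied by 2.5 to give a value between 0 and 100. The responses in the example are invented for illustration and are not the study’s data.

```python
from statistics import mean
from typing import List


def sus_score(responses: List[int]) -> float:
    """Score one participant's ten SUS ratings (each 1-5) on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items are positively worded
        else:
            total += 5 - rating   # even items are negatively worded
    return total * 2.5


# Hypothetical participants, not data from the study.
participants = [
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
]
scores = [sus_score(p) for p in participants]
print(scores)        # [75.0, 50.0, 100.0]
print(mean(scores))  # 75.0
```

A study-level figure such as 72.8 is the mean of these per-participant scores across all respondents.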

The study illuminates a predictable truth about systems and those who interact with them. It demonstrates that assistance, even when intelligently proactive, is most effective when presented at logical breakpoints (after commits, in this case), minimizing interruption and maximizing uptake. This echoes G.H. Hardy’s sentiment: “A mathematician, like a painter or a poet, is a maker of patterns.” The research doesn’t simply offer assistance; it patterns that assistance into the developer’s workflow, understanding that optimal integration requires recognizing the inherent structure of the task at hand. Every successful intervention, like every elegant proof, acknowledges the underlying order. The best hack is understanding why it worked; every patch is a philosophical confession of imperfection.

What Lies Ahead?

The observed uptick in developer engagement with proactive assistance isn’t simply about efficiency; it’s a subtle renegotiation of the human-machine contract. The study illuminates a predictable pattern – developers, presented with suggestions at moments of relative cognitive calm, will engage. But this isn’t endorsement; it’s testing the boundaries of the system. The real question isn’t whether these tools are adopted, but what developers actively discard, and why. Future work must move beyond simple acceptance rates to dissect the failures – the suggestions ignored, the overrides implemented, the workarounds devised.

Current metrics largely treat code quality as an objective truth. However, developer rejection of suggestions hints at a more nuanced reality. What if ‘quality’ is, at least partially, a matter of tacit knowledge, team convention, or even aesthetic preference? The next iteration of proactive AI shouldn’t aim for flawless code, but for a model of developer idiosyncrasy. The system must learn not just how to write code, but who is writing it, and what that individual considers acceptable: a sort of digital apprenticeship, constantly refined through subtle disobedience.

Ultimately, this research opens a black box but reveals another within. It’s no longer sufficient to build AI that assists; the challenge is to build AI that is worth circumventing. The true measure of success won’t be lines of code improved, but the ingenuity with which developers find ways to break the system, revealing its limitations and, ironically, pointing the way toward genuine intelligence.

Original article: https://arxiv.org/pdf/2601.10253.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-16 20:41