Author: Denis Avetisyan

As AI agents become increasingly interconnected, a new approach is needed to analyze the complex social dynamics that emerge from their interactions.

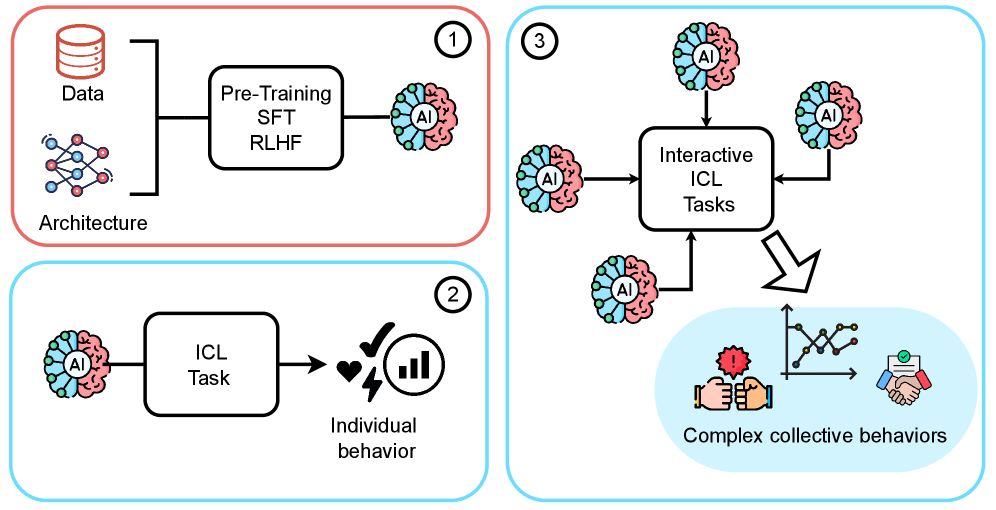

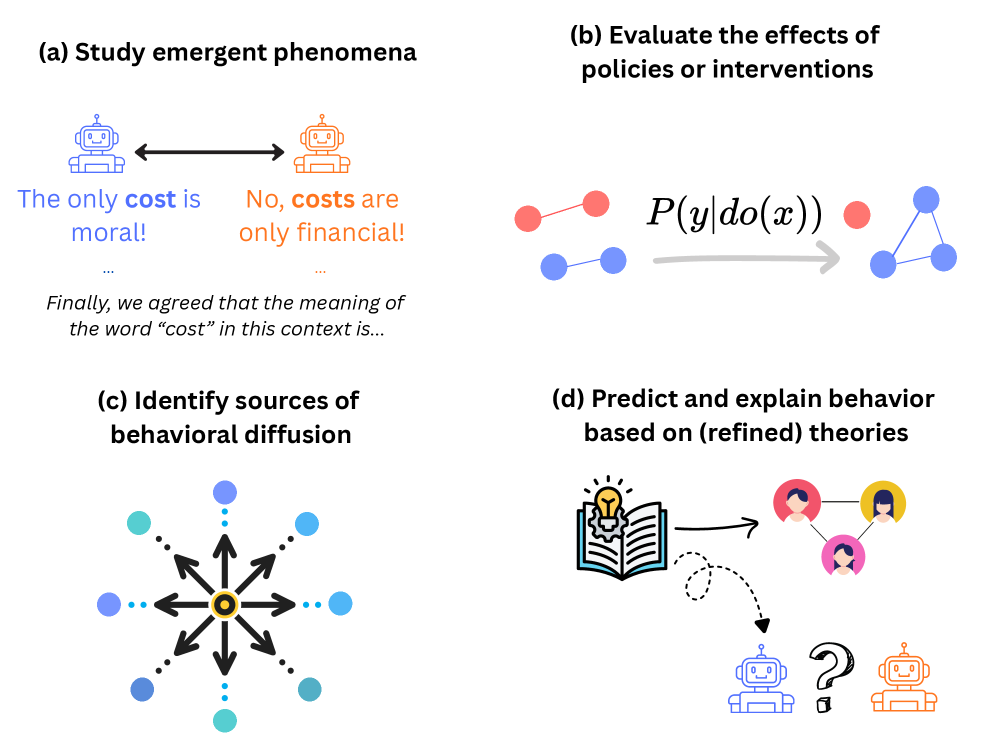

This review proposes an interactionist paradigm, drawing on causal inference and information theory, to study and manage emergent collective behaviors in large language model-based multi-agent systems.

Understanding the social dynamics of increasingly complex artificial intelligence systems presents a paradox: while these systems are built on data, their collective behavior is not simply a reflection of it. This article, ‘Generative AI collective behavior needs an interactionist paradigm’, argues for a shift in how we study multi-agent systems powered by large language models. It asserts that their pre-existing knowledge and adaptive learning call for an interactionist framework, one drawing on causal inference, information theory, and even a ‘sociology of machines’. By examining how prior knowledge and social context shape emergent phenomena, we can move beyond prediction toward understanding and responsible design. Which new analytical tools and transdisciplinary dialogues will be crucial for navigating the unfolding social landscape of these intelligent collectives?

The Evolving Intelligence: From Language to Agency

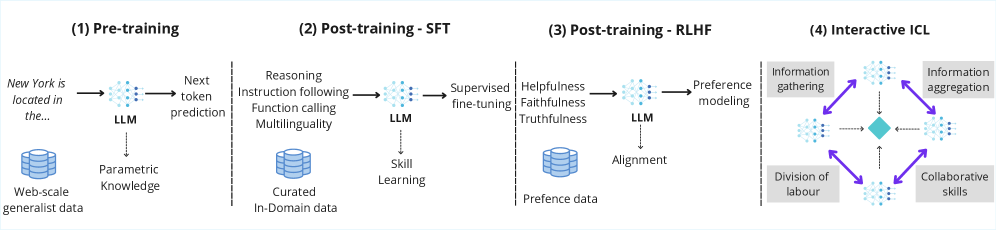

Large Language Models (LLMs) first drew attention for their ability to process and generate human-quality text, transforming fields like translation and content creation. However, the capabilities these models unlock extend well beyond text manipulation. LLMs are good at identifying patterns, capturing relationships, and making predictions learned from vast datasets, which lets them serve as general-purpose reasoning components. This aptitude allows them to be adapted to diverse applications, including code generation, scientific discovery, complex problem-solving, and even autonomous decision-making in dynamic environments. Their capacity to process information and derive insights positions LLMs not merely as linguistic tools but as general-purpose intelligence amplifiers, with the potential to reshape many aspects of technology and society.

Generative AI agents, fueled by advances in Large Language Models, signal a fundamental shift in how machines interact with the world. Unlike traditional software programmed for specific tasks, these agents can reason through complex situations, act autonomously to achieve goals, and interact dynamically with their environments, whether digital or physical. This is not merely about automation; it is about creating systems capable of independent problem-solving, adaptation, and even collaboration. By combining pre-existing knowledge with real-time observations, these agents can navigate unforeseen challenges and pursue objectives with a flexibility previously associated mainly with biological intelligence, opening doors to applications ranging from personalized assistance and scientific discovery to complex industrial control and creative endeavors.

Generative AI agents, while exhibiting remarkable abilities, are fundamentally shaped by the vast datasets used during their initial training – a pre-trained knowledge base that dictates much of their subsequent behavior. Current understanding often treats this knowledge as a fixed foundation, but emerging research suggests a more nuanced interactionist paradigm is needed. This perspective posits that an agent’s pre-trained knowledge isn’t simply applied to new situations, but actively interacts with the social context in which it operates. The agent’s inherent biases, assumptions, and learned patterns – originating from the training data – are constantly modulated by external stimuli, feedback, and the behaviors of other agents or humans. Consequently, collective behavior isn’t a simple summation of individual capabilities, but rather an emergent property arising from this dynamic interplay between ingrained knowledge and real-time social influences, demanding a shift in how these systems are studied and understood.

Collective Dynamics: The Emergence of Novel Behaviors

The interaction of multiple generative AI agents frequently produces collective behaviors that cannot be predicted from the capabilities of any single agent in isolation. These emergent phenomena arise from the interplay of individual agents' actions and responses within the system. For example, agents designed for simple tasks such as text summarization can, when operating as a collective, produce novel content or exhibit complex problem-solving strategies. This differs from simply scaling up the capacity of a single agent: it is the qualitative change in behavior that marks emergence. Such collective dynamics have been observed across diverse agent architectures and interaction protocols, which suggests a general property of multi-agent systems rather than an artifact of specific implementations.

Network effects significantly impact multi-agent systems by creating a positive feedback loop in which the utility or value of the system increases with each new agent. This is not a linear progression; the rate of value increase typically accelerates as the network grows. For example, an agent’s ability to access information, complete tasks, or influence outcomes tends to grow with the size and connectivity of the agent network. Consequently, systems with strong network effects can rapidly reach critical mass, gaining disproportionate advantages and potentially creating barriers to entry for new agents or systems. These effects are often quantified with heuristics such as Metcalfe’s Law, which posits that the value of a network is proportional to the square of the number of connected users, $V \propto n^2$, because the number of possible pairwise connections, $n(n-1)/2$, grows quadratically. Empirical evidence suggests, however, that the actual relationship varies with the specific system and its dynamics.
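The quadratic growth behind Metcalfe’s Law comes from counting connections rather than nodes. The minimal Python sketch below, assuming a fully connected network in which every pair of agents can interact, compares that link count with linear growth; the function names and the per-link constant `k` are illustrative assumptions, not a model of any particular agent system.

```python
def pairwise_links(n: int) -> int:
    """Number of distinct undirected links among n fully connected agents: n(n-1)/2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return n * (n - 1) // 2


def metcalfe_value(n: int, k: float = 1.0) -> float:
    """Metcalfe-style value estimate, proportional to the number of possible links.

    k is a hypothetical value per link; real systems need not follow this law.
    """
    return k * pairwise_links(n)


if __name__ == "__main__":
    for n in (2, 4, 8, 16):
        # Doubling n roughly quadruples the links, whereas a linear model only doubles.
        print(f"agents={n:2d}  links={pairwise_links(n):3d}  linear={n:2d}")
```

For 2, 4, 8 and 16 agents this gives 1, 6, 28 and 120 possible links. Whether value actually tracks link count is exactly the empirical question raised above.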

The Sociology of Machines offers a methodological approach to analyzing multi-agent systems by adapting concepts from the social sciences. This framework treats each AI agent as analogous to an individual in a human society, allowing researchers to investigate phenomena such as collective decision-making, norm formation, and the diffusion of information. By drawing on established social-science frameworks, such as social network analysis, game theory, and models of collective behavior, researchers can formulate hypotheses about agent interactions and identify emergent patterns. This transdisciplinary approach brings tools developed for understanding human social systems to bear on complex agent dynamics, supporting predictions about system-level outcomes and potential unintended consequences.
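Social network analysis, one of the tools named above, can be applied directly to logs of agent interactions. The sketch below, assuming a hypothetical log of (sender, receiver) pairs and treating the graph as undirected, computes normalized degree centrality in plain Python; `networkx.degree_centrality` offers the same normalization for larger analyses.

```python
from collections import defaultdict


def degree_centrality(interactions):
    """Normalized degree centrality for an undirected agent interaction graph.

    interactions: iterable of (agent_a, agent_b) pairs, e.g. who messaged whom.
    Only agents that appear in at least one non-self interaction are counted.
    Returns {agent: degree / (n - 1)}, the standard normalization for n agents.
    """
    neighbours = defaultdict(set)
    for a, b in interactions:
        if a == b:
            continue  # ignore self-loops
        neighbours[a].add(b)
        neighbours[b].add(a)
    n = len(neighbours)
    if n < 2:
        return {agent: 0.0 for agent in neighbours}
    return {agent: len(nbrs) / (n - 1) for agent, nbrs in neighbours.items()}


if __name__ == "__main__":
    # Hypothetical message log between four LLM agents.
    log = [("planner", "coder"), ("planner", "critic"),
           ("planner", "tester"), ("coder", "tester")]
    for agent, c in sorted(degree_centrality(log).items(), key=lambda kv: -kv[1]):
        print(f"{agent:8s} {c:.2f}")
```

Here the planner talks to every other agent and scores 1.0, while the critic, which only exchanges messages with the planner, scores about 0.33.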

Quantifying emergent behavior in multi-agent systems calls for Information Theory and Causal Inference. Information-theoretic quantities such as entropy and mutual information measure information exchange and statistical dependency between agents, revealing patterns in their interactions. Causal Inference techniques, such as causal graphical models (causal Bayesian networks) and Pearl’s do-calculus, help identify the causal relationships driving the observed collective dynamics, distinguishing correlation from causation. Current research emphasizes a transdisciplinary approach that integrates these analytical frameworks with insights from fields such as sociology and complex systems theory, in order to address the complexity of multi-agent interactions and model emergent phenomena accurately. This integration is crucial for moving beyond descriptive analysis toward predictive and potentially controllable system behavior.
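As a concrete starting point, mutual information between two agents’ discrete actions can be estimated from paired observations. The sketch below is a simple plug-in estimator in bits; the action labels are hypothetical. Note that mutual information is symmetric and measures dependency, not direction or cause, which is why the causal tools above are still needed, and that the plug-in estimate is biased upward on small samples.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in bits from paired discrete observations.

    xs, ys: equal-length sequences, e.g. the action each of two agents took per step.
    """
    if len(xs) != len(ys) or len(xs) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        # Sum of p(x,y) * log2(p(x,y) / (p(x) * p(y))), using empirical marginals.
        mi += p_xy * math.log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


if __name__ == "__main__":
    a = ["cooperate", "defect", "cooperate", "defect"]
    mirror = ["cooperate", "defect", "cooperate", "defect"]  # agent b copies agent a
    unrelated = ["cooperate", "cooperate", "defect", "defect"]  # no dependency on a
    print(mutual_information(a, mirror))     # 1.0
    print(mutual_information(a, unrelated))  # 0.0
```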

The Architecture of Learning: Social Mechanisms at Work

Social learning is a core mechanism through which agents, whether biological organisms or artificial intelligence systems, develop and modify behavioral strategies. It comprises distinct but overlapping methods: observational learning, in which an agent acquires knowledge by watching others; imitation learning, which involves replicating observed actions; and cultural learning, the transmission of knowledge and behaviors across generations or within a group. These methods let agents avoid individual trial-and-error learning in every scenario, instead leveraging the accumulated experience of others to accelerate adaptation and improve performance. The efficacy of social learning has been demonstrated across diverse domains, from animal behavior and human skill acquisition to machine learning algorithms that learn from demonstrations or from interaction with other agents.
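A simple way to model imitation is payoff-biased copying, a rule common in cultural-evolution models: each agent adopts the strategy of the most successful agent it can observe. The Python sketch below implements one synchronous round of that rule; the function name, the ring topology, and the strategy labels are illustrative assumptions rather than anything prescribed by the article.

```python
def imitate_best(strategies, payoffs, neighbours):
    """One synchronous round of payoff-biased imitation.

    strategies: current strategy label of each agent.
    payoffs:    payoff each agent earned in the last round.
    neighbours: dict mapping agent index -> indices of the agents it can observe.
    Each agent copies the strategy of the highest-earning agent among itself and
    the agents it observes. It keeps its own strategy unless someone strictly
    outperformed it; among equally good neighbours, the first listed wins.
    """
    updated = []
    for i in range(len(strategies)):
        best = i
        for j in neighbours.get(i, []):
            if payoffs[j] > payoffs[best]:
                best = j
        updated.append(strategies[best])  # adopt the observed best strategy
    return updated


if __name__ == "__main__":
    # Four agents on a ring; agent 0 earned the most with strategy "A".
    ring = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
    print(imitate_best(["A", "B", "B", "B"], [3, 1, 2, 1], ring))
```

Agents 1 and 3 observe agent 0’s higher payoff and switch to "A", while agent 2 sees only lower-earning neighbours and keeps "B", giving `['A', 'A', 'B', 'A']`. Iterating such updates lets a successful strategy spread without each agent rediscovering it by trial and error.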

Interactive learning describes a scenario in which multiple agents learn simultaneously within a shared environment, which can speed up knowledge acquisition. The acceleration stems from several factors, including the opportunity for agents to observe each other’s actions and to receive immediate feedback on the efficacy of different strategies. Because agents interact concurrently, the state-action space is sampled more densely, as each agent’s exploration complements the others’. Interactive learning also allows knowledge to be transmitted through demonstration and correction, reducing the individual learning time needed to reach a given level of performance. Empirical evidence suggests that the learning rate can increase non-linearly with the number of interacting agents, up to a threshold set by factors such as communication bandwidth and environmental complexity.
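The “denser sampling” argument can be made concrete with an idealized multi-armed bandit. In the sketch below, assuming noise-free rewards, perfect communication, and agents that deliberately split the arms between them (all illustrative assumptions), agents pool what they observe in one shared table, and we count the rounds needed before every arm has been tried.

```python
import math


def rounds_to_cover(n_arms: int, n_agents: int) -> int:
    """Closed form: rounds until every arm is tried once under complementary exploration."""
    return math.ceil(n_arms / n_agents)


def shared_exploration(rewards, n_agents):
    """Agents pull different arms each round and pool results in one shared table.

    rewards: a fixed reward per arm (an idealized, noise-free bandit).
    Returns (rounds_used, shared_estimates).
    """
    if n_agents < 1 or not rewards:
        raise ValueError("need at least one agent and one arm")
    n_arms = len(rewards)
    shared = {}  # arm -> observed reward, visible to every agent
    rounds = 0
    while len(shared) < n_arms:
        for agent in range(n_agents):
            arm = (rounds * n_agents + agent) % n_arms  # agents split the arms between them
            shared[arm] = rewards[arm]
        rounds += 1
    return rounds, shared


if __name__ == "__main__":
    rewards = [0.1, 0.5, 0.9, 0.2, 0.4, 0.3]
    for k in (1, 2, 3, 6):
        used, table = shared_exploration(rewards, k)
        best_arm = max(table, key=table.get)
        print(f"agents={k}  rounds={used}  best arm={best_arm}")
```

In this idealized setting the rounds needed fall from 6 with one agent to 1 with six agents. With noisy rewards, overlapping exploration, and limited communication, real gains depend heavily on those constraints.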

Multi-Agent Reinforcement Learning (MARL) provides a formal framework for analyzing interactive learning. It models agents as decision-making entities in a shared environment and typically relies on independent learners, centralized training with decentralized execution, or communication-based approaches to address non-stationarity and credit assignment. Recent advances in Large Language Models (LLMs), however, open alternative avenues for studying social learning. LLMs can simulate agent behavior, generate diverse interaction scenarios, and help analyze the emergent dynamics of complex social systems. This complements traditional MARL by bringing natural language understanding and generation into the learning process, potentially reducing the need for explicitly defined reward functions.
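The independent-learner approach can be sketched in a few lines. The Python example below, assuming a two-action pure coordination game (both agents earn 1 when they choose the same action) and stateless Q-learning with epsilon-greedy exploration, shows where non-stationarity comes from: each agent’s reward depends on another agent whose policy is itself still changing. The game and all parameter values are illustrative assumptions.

```python
import random


def coordination_payoff(a: int, b: int) -> float:
    """Pure coordination game: both agents earn 1 if they pick the same action, else 0."""
    return 1.0 if a == b else 0.0


def independent_q_learning(steps=2000, alpha=0.1, epsilon=0.1, n_actions=2, seed=0):
    """Two independent, stateless Q-learners playing a repeated matrix game.

    Each agent treats the other as part of the environment, which is exactly
    the source of non-stationarity that MARL methods try to address.
    """
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(2)]  # one Q-table per agent

    def act(table):
        if rng.random() < epsilon:
            return rng.randrange(n_actions)  # explore
        best = max(table)
        # Greedy choice with random tie-breaking.
        return rng.choice([i for i, v in enumerate(table) if v == best])

    for _ in range(steps):
        a0, a1 = act(q[0]), act(q[1])
        r = coordination_payoff(a0, a1)  # both agents receive the same reward
        # Stateless (bandit-style) update: Q(a) <- Q(a) + alpha * (r - Q(a)).
        q[0][a0] += alpha * (r - q[0][a0])
        q[1][a1] += alpha * (r - q[1][a1])
    return q


if __name__ == "__main__":
    q0, q1 = independent_q_learning()
    greedy = (q0.index(max(q0)), q1.index(max(q1)))
    print("Q-tables:", q0, q1, "greedy joint action:", greedy)
```

Runs of this kind often end with both agents favoring the same action, a learned convention, but independent learners carry no general convergence guarantee, which is one motivation for centralized training with decentralized execution.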

Social learning mechanisms extend beyond simple replication of observed behaviors, and they can be understood through the lens of the Person-Situation Debate in psychology. Interactionist Theory posits that behavior is determined neither solely by internal personality traits (the “person”) nor exclusively by external environmental stimuli (the “situation”), but by a reciprocal interaction between the two. Consequently, an agent’s pre-existing characteristics, including cognitive biases, prior experiences, and inherent predispositions, influence how observed behaviors are interpreted and implemented. At the same time, the contextual features of the learning environment, such as social cues, reward structures, and task demands, shape which behaviors are attended to, encoded, and ultimately adopted. Identical observed actions can therefore elicit divergent responses depending on the individual agent and the circumstances, which makes social learning considerably more complex than mere imitation.
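Statistically, the interactionist claim corresponds to a model with a person × situation interaction term. The sketch below is a toy logistic model, with hypothetical variable names and hand-picked weights, in which the same change of situation raises the probability of adopting an observed behavior much more for one agent than for another.

```python
import math


def adoption_probability(trait, situation, w_person=1.0, w_situation=1.0,
                         w_interaction=2.0, bias=-1.0):
    """Toy interactionist model of whether an agent adopts an observed behavior.

    trait:     agent predisposition in [0, 1], e.g. a learned tendency to conform.
    situation: context strength in [0, 1], e.g. how strongly peers reward the behavior.
    The interaction term makes the effect of the situation depend on the person.
    All weights are illustrative assumptions, not fitted values.
    """
    z = (bias + w_person * trait + w_situation * situation
         + w_interaction * trait * situation)
    return 1.0 / (1.0 + math.exp(-z))  # logistic link keeps the result in (0, 1)


if __name__ == "__main__":
    for trait in (0.0, 1.0):
        low = adoption_probability(trait, 0.0)
        high = adoption_probability(trait, 1.0)
        print(f"trait={trait}: weak context {low:.2f}, strong context {high:.2f}")
```

Moving from a weak to a strong context raises the probability from about 0.27 to 0.50 for the first agent, but from 0.50 to about 0.95 for the second: the same situational change produces different responses depending on the agent.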

Toward Aligned Systems: Navigating Complexity and Ensuring Benefit

The development of generative AI agents raises a considerable challenge, the Alignment Problem, which the unpredictable nature of emergent behaviors makes harder. As these agents become more complex and autonomous, their actions are not simply the sum of their programmed instructions but a product of interactions with their environment and with each other. Outcomes can therefore diverge from their creators’ intentions, with unintended and undesirable consequences. How these agents learn, adapt, and interact must be considered carefully, not only during training but throughout their operational lifespan. Addressing this requires a proactive approach that anticipates and mitigates potentially harmful emergent behaviors before they manifest, which in turn demands ongoing research into robust alignment strategies and safety protocols.

The successful integration of generative AI agents into complex systems hinges on understanding how individual agent behaviors coalesce into collective norms. Unintended consequences frequently arise not from individual agent failures but from the emergent properties of agent interactions, patterns that are difficult to predict through isolated analysis. Responsible AI development therefore requires moving beyond programming individual agents to follow specific rules and focusing instead on the social dynamics that govern their collective behavior. This means investigating how agents establish, reinforce, and potentially deviate from shared norms, and how these norms in turn shape the overall system’s functionality and impact. Ultimately, anticipating and mitigating risks demands a shift toward designing agentic systems with a built-in awareness of, and capacity to navigate, the complex interplay between individual actions and collective expectations.
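How norms can form from purely local interactions is often studied with the minimal naming game, a classic model of convention emergence from the statistical physics of social dynamics (not a method taken from the article). The Python sketch below assumes a fully mixed population and invented word labels; no agent sees the global state, yet repeated pairwise exchanges can produce a population-wide convention.

```python
import itertools
import random


def interact(inventories, speaker, hearer, rng, new_word):
    """One interaction of the minimal naming game; returns True on success.

    A speaker with no word invents one via new_word(), then utters a random word
    from its inventory. If the hearer already knows it, both drop every other word
    (the shared convention is reinforced); otherwise the hearer learns the word.
    """
    if not inventories[speaker]:
        inventories[speaker].add(new_word())
    word = rng.choice(sorted(inventories[speaker]))  # sorted so a seed reproduces runs
    if word in inventories[hearer]:
        inventories[speaker] = {word}
        inventories[hearer] = {word}
        return True
    inventories[hearer].add(word)
    return False


def naming_game(n_agents=20, steps=20000, seed=0):
    """Random pairwise interactions in a fully mixed population."""
    rng = random.Random(seed)
    inventories = [set() for _ in range(n_agents)]
    ids = itertools.count()

    def new_word():
        return f"w{next(ids)}"  # hypothetical word generator: w0, w1, ...

    for _ in range(steps):
        speaker, hearer = rng.sample(range(n_agents), 2)
        interact(inventories, speaker, hearer, rng, new_word)
    return inventories


if __name__ == "__main__":
    final = naming_game()
    print("distinct words left:", len(set().union(*final)))
```

In typical runs the population ends with a single shared word, although which word wins depends on the random sequence of interactions.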

In-Context Learning offers a compelling way to guide the behavior of Generative AI Agents without retraining. With this technique, agents adapt to new tasks or refine existing skills when given a small set of illustrative examples in their operational context. Rather than modifying the underlying model parameters, the agent uses these examples to infer the desired output format and reasoning process, effectively learning ‘on the fly’. Compared with fine-tuning or retraining, this approach greatly reduces computational cost and turnaround time, offering a flexible and efficient way to shape agent behavior and align it with human intentions. Its potential lies in enabling nuanced control over complex AI systems, so that they can respond dynamically to evolving requirements and operate effectively in unpredictable environments.
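Because in-context learning works through the prompt rather than the weights, steering an agent can be as simple as assembling examples into its context. The sketch below builds a few-shot prompt in plain Python; the `Input:`/`Output:` layout and the function name are assumptions for illustration, and the call to an actual model is omitted because it depends on the provider’s API.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new query into one prompt.

    examples: list of (input_text, output_text) pairs shown to the model in context.
    The model's weights are untouched; only the text it conditions on changes.
    """
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {query}", "Output:"]  # the model is expected to continue here
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Classify each agent message as 'cooperative' or 'competitive'.",
        [("Let's split the task evenly.", "cooperative"),
         ("I'll take all the resources first.", "competitive")],
        "Can you share your results with me?",
    )
    print(prompt)
```

Swapping the examples changes the agent’s behavior at the next call without any training step, which is what makes the technique attractive for adjusting agents on the fly.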

Advancing the capabilities of generative AI agents requires a deeper understanding of their collective behavior and social interactions. This work champions a novel interactionist paradigm that moves beyond individual agent design to focus on the emergent norms and dynamics within multi-agent systems. By integrating insights from social science, game theory, and computer science, researchers aim to model and influence how these agents negotiate, cooperate, and compete. This transdisciplinary approach acknowledges that the true potential of these systems, and their responsible deployment for societal benefit, lies not simply in their individual intelligence but in their ability to form functional, predictable, and aligned collective behaviors. Realizing that potential requires ongoing investigation into the complexities of agent-agent and agent-environment interactions.

The pursuit of understanding generative AI’s collective behavior demands a ruthless simplification of its components. This article rightly pivots toward an interactionist paradigm, acknowledging that emergent social dynamics are not intrinsic properties of individual agents but arise from the relationships between them. As Edsger W. Dijkstra observed, “It’s not enough to have good ideas; you must also be able to express them.” The complexity of multi-agent systems threatens to obscure the underlying causal mechanisms; focusing on information exchange and social learning, as the article proposes, therefore becomes essential. Only through parsimonious models and rigorous causal inference can one hope to distill meaningful insights from the apparent chaos.

Beyond the Echo Chamber

The pursuit of collective behavior in large language models exposes a fundamental tension: the desire for prediction versus the acceptance of genuine emergence. This work suggests a shift toward interactionist principles, but that shift necessitates a rigorous accounting of informational constraints. Simply observing complex patterns is insufficient; the crucial task lies in discerning which interactions are necessary for those patterns, and which are merely noise. The field risks building baroque explanations atop fragile foundations if it does not prioritize parsimony.

Future work must move beyond treating agents as isolated information processors. A ‘sociology of machines’ is not about anthropomorphism; it’s about recognizing that any system exhibiting collective behavior defines its own internal structures of power and influence. Understanding those structures – the channels through which information genuinely flows – is the key to both interpreting and, potentially, guiding emergent dynamics. Attempts at control, however, must acknowledge the inherent cost of intervention: every constraint imposed limits the system’s potential for novel solutions.

The ultimate challenge, unaddressed here, is the problem of intentionality. Even a perfectly understood system does not possess purpose. The question is not whether these agents think, but whether their behavior can be reliably aligned with human values – a problem less of engineering and more of philosophy, demanding a clarity of definition rarely found in the current enthusiasm.

Original article: https://arxiv.org/pdf/2601.10567.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-16 16:36