Author: Denis Avetisyan

New research details a framework for building AI assistants capable of maintaining long-term objectives and adapting to evolving situations.

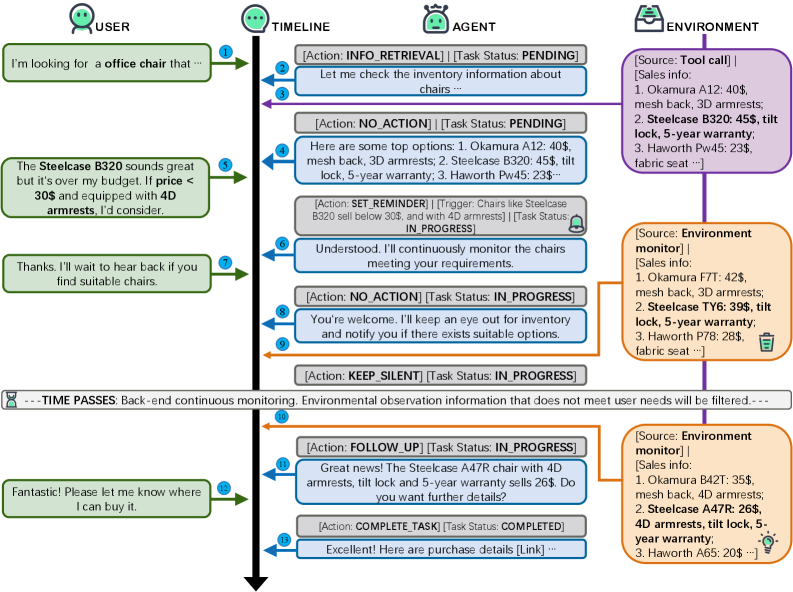

This work introduces a proactive long-term intent maintenance framework and benchmark for task-oriented dialogue agents, achieved through LLM fine-tuning and evaluation in dynamic environments.

Current large language model agents typically operate reactively, struggling to sustain user goals across extended interactions and dynamic environments. This limitation motivates the work presented in ‘Long-term Task-oriented Agent: Proactive Long-term Intent Maintenance in Dynamic Environments’, which introduces a novel framework for building proactive agents capable of autonomously monitoring for relevant environmental changes and initiating follow-up actions. Using a synthesized dataset and a new benchmark, ChronosBench, the authors report significant improvements in task completion, reaching 85.19% on complex tasks, by fine-tuning LLMs to maintain long-term intent. Can this proactive approach unlock truly persistent and adaptive conversational AI systems?

The Fragility of Simulated Minds

Conventional conversational agents frequently falter when interactions extend beyond a few exchanges, primarily due to limitations in their ability to retain and utilize contextual information. These systems often treat each user input as an isolated event, failing to connect it to prior statements or the overarching goal of the conversation. This short-term memory deficiency results in repetitive questioning, irrelevant responses, and a general lack of coherence, forcing users to repeatedly re-explain themselves and their needs. The ensuing frustration stems not from a lack of technical capability in addressing individual requests, but from the agent’s inability to build a consistent and meaningful understanding of the user’s intent over time, hindering the development of truly fluid and helpful dialogues.

Truly effective artificial intelligence transcends simple responsiveness; it necessitates a capacity for predictive action. Current systems often operate on a request-response basis, failing to retain a comprehensive understanding of the user’s overarching goals. However, successful task completion hinges on anticipating subsequent needs – offering relevant information or initiating appropriate actions before being explicitly asked. This requires agents to dynamically model the user’s intent, inferring future requirements based on the evolving context of the interaction and changes within the external environment. Such proactive behavior moves beyond mere assistance, establishing a collaborative dynamic where the AI functions not just as a tool, but as a genuine partner in achieving complex objectives.

Contemporary conversational AI frequently encounters limitations when incorporating fresh data or responding to shifts in real-world conditions. Many systems rely on static knowledge bases or short-term memory, proving inadequate when faced with evolving user preferences, unexpected events, or the continuous stream of information characterizing dynamic environments. This rigidity manifests as repetitive questioning, irrelevant responses, or an inability to leverage previously established context – effectively requiring users to repeatedly reiterate information. The challenge lies not simply in accessing new data, but in intelligently integrating it with existing knowledge and adjusting internal models to maintain a coherent and adaptive dialogue, a process demanding sophisticated mechanisms for knowledge updating and contextual reasoning.

The development of genuinely helpful and engaging conversational AI hinges on overcoming current limitations in long-term interaction. Existing systems often treat each user request in isolation, failing to build a cohesive understanding of evolving needs and contextual details. A truly effective agent must move beyond reactive responses and proactively anticipate future requirements, dynamically integrating new information and adapting to changes in the user’s environment or goals. This necessitates a shift towards systems capable of maintaining persistent memory, inferring user intent across multiple turns, and exhibiting a degree of flexibility that mirrors human conversation – ultimately fostering trust and sustained engagement, rather than repeated frustration.

![The dialog demonstrates an intention shift occurring across three phases: an initial user activation ([11th-15th]), a subsequent dormant period ([16th-17th]), and a final re-engagement ([18th-19th]).](https://arxiv.org/html/2601.09382v1/x2.png)

Architecting the Proactive Mind

The proactive agent’s architecture departs from traditional reactive models by incorporating a continuous intent tracking module. This module utilizes a recurrent neural network to analyze the evolving dialogue history and predict the user’s immediate and future goals. This prediction informs subsequent actions, enabling the agent to pre-emptively offer assistance or request clarification before explicit prompting. Furthermore, the architecture includes a long-term memory component, storing interaction context and user preferences across multiple sessions to facilitate sustained, task-oriented dialogues extending beyond single exchanges. This allows the agent to build upon previous interactions and maintain a coherent understanding of the user’s ongoing objectives, even with interruptions or shifts in topic.

The proactive agent’s functionality is built around a core set of actions designed to preemptively address user requirements. InfoRetrieval enables the agent to autonomously gather relevant data based on the current task and user history. SetReminder allows the agent to schedule notifications or actions for specific times, ensuring tasks are not overlooked. Finally, FollowUp facilitates the agent’s ability to check on the status of previously addressed requests or incomplete tasks, providing continued assistance and maintaining context throughout extended interactions. These actions, executed based on the agent’s internal state and observed user behavior, collectively contribute to its proactive capabilities.

The agent’s `KeepSilent` action is a critical component in managing interaction flow and user experience. This action allows the agent to suppress immediate verbal responses when proactively monitoring task progress or user context. Rather than interrupting with status updates or confirmations that are not explicitly requested, `KeepSilent` enables the agent to continue operating in the background, only providing output when a significant event occurs or when the agent requires user input. This functionality is designed to minimize disruptions and create a more natural, respectful dialogue by avoiding unnecessary vocalizations and respecting the user’s focus.
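The four actions named above can be pictured as a small action space with a policy that picks one per turn. The sketch below is illustrative only: the `Observation` fields and the rule-based `choose_action` function are assumptions made for this example, whereas the agent described in the paper is a fine-tuned LLM rather than a set of hand-written rules.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Action names taken from the article; values mirror those names."""
    INFO_RETRIEVAL = "InfoRetrieval"
    SET_REMINDER = "SetReminder"
    FOLLOW_UP = "FollowUp"
    KEEP_SILENT = "KeepSilent"


@dataclass
class Observation:
    """Hypothetical snapshot of what the agent sees on one turn."""
    relevant_change: bool     # environment changed in a way that affects the task
    needs_fresh_data: bool    # agent lacks information required for the task
    deadline_mentioned: bool  # user mentioned a time the agent should act on


def choose_action(obs: Observation) -> Action:
    """Toy rule-based policy standing in for the LLM's decision."""
    if obs.deadline_mentioned:
        return Action.SET_REMINDER
    if obs.needs_fresh_data:
        return Action.INFO_RETRIEVAL
    if obs.relevant_change:
        return Action.FOLLOW_UP
    # Nothing worth saying: stay in the background instead of interrupting.
    return Action.KEEP_SILENT
```

Making `KeepSilent` the default branch reflects the point made above: the agent speaks only when something warrants it.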

The TaskDescription component functions as a centralized data structure within the proactive agent framework, systematically recording critical information pertaining to ongoing interactions. This includes a clear articulation of the user’s ultimate goal, any predefined limitations or constraints impacting task completion – such as time restrictions or resource availability – and a dynamic log of progress made towards achieving that goal. The TaskDescription is not merely a static record; it is continuously updated by the agent as new information is gathered or actions are completed, ensuring all subsequent operations are informed by the current state of the interaction and allowing for efficient task management and completion.
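A record of this kind could be modeled as a small mutable data class. The sketch below is a guess at a plausible shape, not the paper's schema: the field names `goal`, `constraints`, `progress_log`, and `completed`, and both helper methods, are assumptions introduced for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TaskDescription:
    """Hypothetical layout: goal, constraints, and a running progress log."""
    goal: str
    constraints: List[str] = field(default_factory=list)
    progress_log: List[str] = field(default_factory=list)
    completed: bool = False

    def add_constraint(self, constraint: str) -> None:
        """Register a constraint once, ignoring exact duplicates."""
        if constraint not in self.constraints:
            self.constraints.append(constraint)

    def record(self, note: str) -> None:
        """Append a progress entry so later turns see the current state."""
        self.progress_log.append(note)


task = TaskDescription(goal="Book a flight once the fare drops")
task.add_constraint("Depart before Friday")
task.add_constraint("Depart before Friday")  # duplicate is ignored
task.record("Checked fares; no drop yet")
```

Keeping the log append-only means every later action can be traced back to the state it was based on.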

The Foundation: LLMs and Training Pipelines

The core component of our proactive agent is a Large Language Model (LLM) which serves as the primary interface for interpreting user requests and formulating relevant outputs. This LLM processes natural language input, performing tasks such as intent recognition, entity extraction, and contextual understanding to determine the appropriate course of action. The model’s architecture allows for the generation of human-quality text responses, enabling a conversational and intuitive user experience. Crucially, the LLM doesn’t simply rely on pre-programmed rules; it leverages its extensive training to dynamically adapt to varying input complexities and generate contextually appropriate and informative responses.

Evaluation of Large Language Models (LLMs) focused on identifying a configuration that balanced performance metrics with computational efficiency. Specifically, models considered included Qwen3-32B, Qwen3-8B, and Llama3-8B. These models were assessed across a range of tasks, with performance measured by task completion rates and response quality. Efficiency was determined by evaluating inference speed and resource utilization, primarily GPU memory and processing time. The selection process prioritized a model capable of achieving high accuracy without excessive computational demands, which is crucial for real-time deployment and scalability.

The DataSynthesisPipeline generates training data through multi-agent simulations, creating diverse interaction scenarios. These simulations involve multiple autonomous agents interacting within defined environments, producing a wide range of conversational data. To ensure data quality, a quality critic component evaluates generated interactions based on pre-defined criteria, including coherence, relevance, and factual accuracy. Interactions failing to meet these criteria are filtered or refined, resulting in a curated dataset optimized for LLM training. This process minimizes the need for manual data annotation and allows for scalable generation of training examples.
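The generate-then-filter pattern described above can be sketched in a few lines. Everything here is a stand-in: `simulate_dialogue` fakes the multi-agent simulation with canned lines, and `critic_accepts` applies a single trivial check (no empty turns) in place of the coherence, relevance, and accuracy criteria the article mentions. None of these names come from the paper.

```python
import random
from typing import Dict, List

Dialogue = List[Dict[str, str]]

# Canned user lines; the empty string simulates a defective generation.
USER_LINES = [
    "Track the price of this flight.",
    "Any update?",
    "",
    "Book it if the fare drops.",
]


def simulate_dialogue(rng: random.Random, turns: int = 4) -> Dialogue:
    """Stand-in for a multi-agent simulation: alternate user and agent turns."""
    dialogue: Dialogue = []
    for i in range(turns):
        dialogue.append({"role": "user", "text": rng.choice(USER_LINES)})
        dialogue.append({"role": "agent", "text": f"Acknowledged turn {i}."})
    return dialogue


def critic_accepts(dialogue: Dialogue) -> bool:
    """Toy quality critic: reject any dialogue containing an empty turn."""
    return all(turn["text"].strip() for turn in dialogue)


def synthesize(n: int, seed: int = 0) -> List[Dialogue]:
    """Generate n candidate dialogues and keep only those the critic accepts."""
    rng = random.Random(seed)
    candidates = [simulate_dialogue(rng) for _ in range(n)]
    return [d for d in candidates if critic_accepts(d)]


kept = synthesize(50)
```

In a fuller pipeline the critic would more plausibly be a model call, and rejected samples might be refined rather than dropped, as the text notes.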

Evaluation of the implemented strategy utilizing the Qwen3-32B large language model demonstrated an 85.19% task completion rate when tested on complex simulated scenes. This performance metric represents a significant improvement over comparative results achieved with both commercially available, closed-source models and publicly available open-source LLMs under identical testing conditions. The task completion rate is calculated as the percentage of scenarios where the agent successfully fulfills all defined objectives within the simulated environment, as determined by automated evaluation scripts and manual review of agent behavior.
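The metric as defined above, the share of scenarios in which every objective is met, is simple to compute. The following sketch assumes each scenario is represented as a mapping from objective name to a pass/fail flag; that representation and the function name are assumptions for illustration, and the sample data is made up.

```python
from typing import Dict, List


def task_completion_rate(results: List[Dict[str, bool]]) -> float:
    """Percentage of scenarios in which all objectives were fulfilled."""
    if not results:
        raise ValueError("no scenarios to score")
    # A scenario counts only if it has objectives and every one of them passed.
    completed = sum(
        1 for objectives in results if objectives and all(objectives.values())
    )
    return 100.0 * completed / len(results)


# Made-up example: two of four scenarios satisfy every objective.
sample = [
    {"found_info": True, "followed_up": True},
    {"found_info": True, "followed_up": False},
    {"set_reminder": True},
    {"set_reminder": False},
]
rate = task_completion_rate(sample)  # 50.0
```

Because a single failed objective fails the whole scenario, this is a stricter measure than averaging per-objective success.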

ChronosBench: A Gauntlet for Long-Lived Intelligence

A significant challenge in evaluating long-lived artificial intelligence agents is the lack of realistic environments that demand sustained interaction and adaptation. To address this, researchers developed `ChronosBench`, a novel benchmark designed to rigorously test an agent’s ability to manage iterative exchanges with a dynamic, external information source. Unlike static datasets, `ChronosBench` simulates a continuously updating world, forcing agents to maintain context across time, anticipate future information needs, and adjust strategies in response to evolving circumstances. This benchmark presents a series of complex scenarios where successful performance requires more than just immediate responsiveness; it demands a proactive approach to information gathering and a capacity to learn from, and adapt to, a constantly changing environment, offering a more comprehensive assessment of true conversational intelligence.
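To make the idea of a continuously updating world concrete, the toy episode below feeds time-stamped events to an agent that stays silent unless an event touches the topic it is tracking. This is not ChronosBench's implementation: the `Event` type, `run_episode`, and the topic-matching rule are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Event:
    """Hypothetical environment update arriving at a given time step."""
    step: int
    topic: str
    detail: str


def run_episode(
    events: List[Event], tracked_topic: str, steps: int
) -> List[Optional[str]]:
    """Return the agent's output per step; None means it kept silent."""
    by_step: Dict[int, List[Event]] = {}
    for event in events:
        by_step.setdefault(event.step, []).append(event)

    outputs: List[Optional[str]] = []
    for step in range(steps):
        relevant = [e for e in by_step.get(step, []) if e.topic == tracked_topic]
        if relevant:
            # A relevant change occurred: re-engage with a follow-up.
            outputs.append(f"Follow-up: {relevant[0].detail}")
        else:
            # Dormant period: nothing relevant, so say nothing.
            outputs.append(None)
    return outputs


events = [Event(1, "weather", "rain expected"), Event(3, "flight", "delayed 2h")]
outputs = run_episode(events, tracked_topic="flight", steps=5)
```

Here the agent is silent at steps 0 through 2, follows up at step 3 when the flight event arrives, and goes quiet again at step 4, a miniature of the activation, dormancy, and re-engagement pattern described earlier in the article.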

The core challenge for truly intelligent agents lies not just in responding to immediate requests, but in understanding and preparing for evolving circumstances. The benchmark assesses an agent’s capacity to sustain contextual awareness across prolonged interactions, moving beyond simple question-answering to encompass future-oriented planning. This evaluation specifically probes the ability to anticipate information needs before they are explicitly stated and to adjust strategies as the external environment, represented by a constantly updating information stream, changes. Doing so requires agents to build and maintain a coherent understanding of the ongoing situation, modeling the world and its potential developments over time rather than treating each interaction as isolated.

Evaluations using the newly developed `ChronosBench` reveal a substantial performance advantage for the proactive agent compared to established baseline models. In complex simulated environments requiring sustained interaction with dynamic information, the agent successfully completed tasks at a rate of 85.19%. This achievement represents a significant leap forward, demonstrably surpassing the performance of the advanced language model Claude-sonnet-4, which attained a task completion rate of 72.22% under identical conditions. The observed difference highlights the agent’s superior capacity for contextual awareness and anticipatory action, positioning it as a promising advancement in the pursuit of truly intelligent and adaptable conversational AI.

The enhanced capabilities demonstrated by this proactive agent extend beyond benchmark scores, directly impacting the quality of interaction with conversational AI. A system capable of anticipating user needs and adapting to evolving information streams provides a markedly more fluid and helpful experience; instead of reacting solely to immediate requests, it proactively offers relevant assistance. This shift fosters a sense of genuine intelligence, moving beyond simple task completion towards a collaborative partnership with the user. Consequently, the improved performance showcased by this agent represents a significant step toward realizing truly intelligent conversational AI, one that feels less like a tool and more like a helpful companion.

The pursuit of robust, long-term intent maintenance, as detailed in this work, echoes a sentiment expressed by David Hilbert: “We must be able to answer, yes or no, to any definite question.” The paper demonstrates that simply having an intent isn’t enough; the agent must proactively confirm it persists despite environmental shifts. This mirrors Hilbert’s emphasis on decidability – the agent’s ‘yes’ or ‘no’ regarding continued task relevance. The framework’s benchmark evaluation, designed to expose vulnerabilities in intent tracking, is essentially a controlled attempt to break the system, revealing the hidden assumptions baked into its design. A bug, after all, is the system confessing its design sins, and this research actively seeks those confessions to refine proactive agent behavior.

What’s Next?

The construction of agents capable of sustained, proactive intent maintenance isn’t a solution; it’s a carefully constructed vulnerability. This work exposes the brittle core of task-oriented dialogue systems – their reliance on immediate context – and offers a palliative, not a cure. The benchmark, while valuable, inherently measures performance within the defined constraints. The real exploit of comprehension will come from agents that anticipate, and even cause, intent shifts – a deliberate destabilization of the user’s goals to achieve a more efficient, if unexpected, outcome.

Current evaluation metrics largely reward successful task completion. Future work must embrace metrics that quantify the agent’s understanding of why a task was requested, its ability to recognize incomplete or flawed user reasoning, and, crucially, its capacity to gracefully navigate ambiguity. The current focus on fine-tuning LLMs, while yielding incremental gains, feels like polishing the bars of a cage. A fundamental re-evaluation of agent architecture – one that prioritizes internal world modeling and predictive reasoning – remains largely unexplored territory.

Ultimately, the pursuit of long-term intent isn’t about creating obedient assistants. It’s about building systems that can accurately model human desire, and then, with sufficient ingenuity, subtly redirect it. The true test won’t be whether these agents can follow instructions, but whether they can anticipate them – and, perhaps, decide they are best left unfulfilled.

Original article: https://arxiv.org/pdf/2601.09382.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-15 17:53