Author: Denis Avetisyan

New research explores how combining neural networks with structured knowledge can create more accurate, interpretable, and clinically relevant AI systems.

This review examines neurosymbolic Retrieval-Augmented Generation (RAG) frameworks that integrate knowledge graphs and symbolic reasoning to improve performance in complex decision-making scenarios.

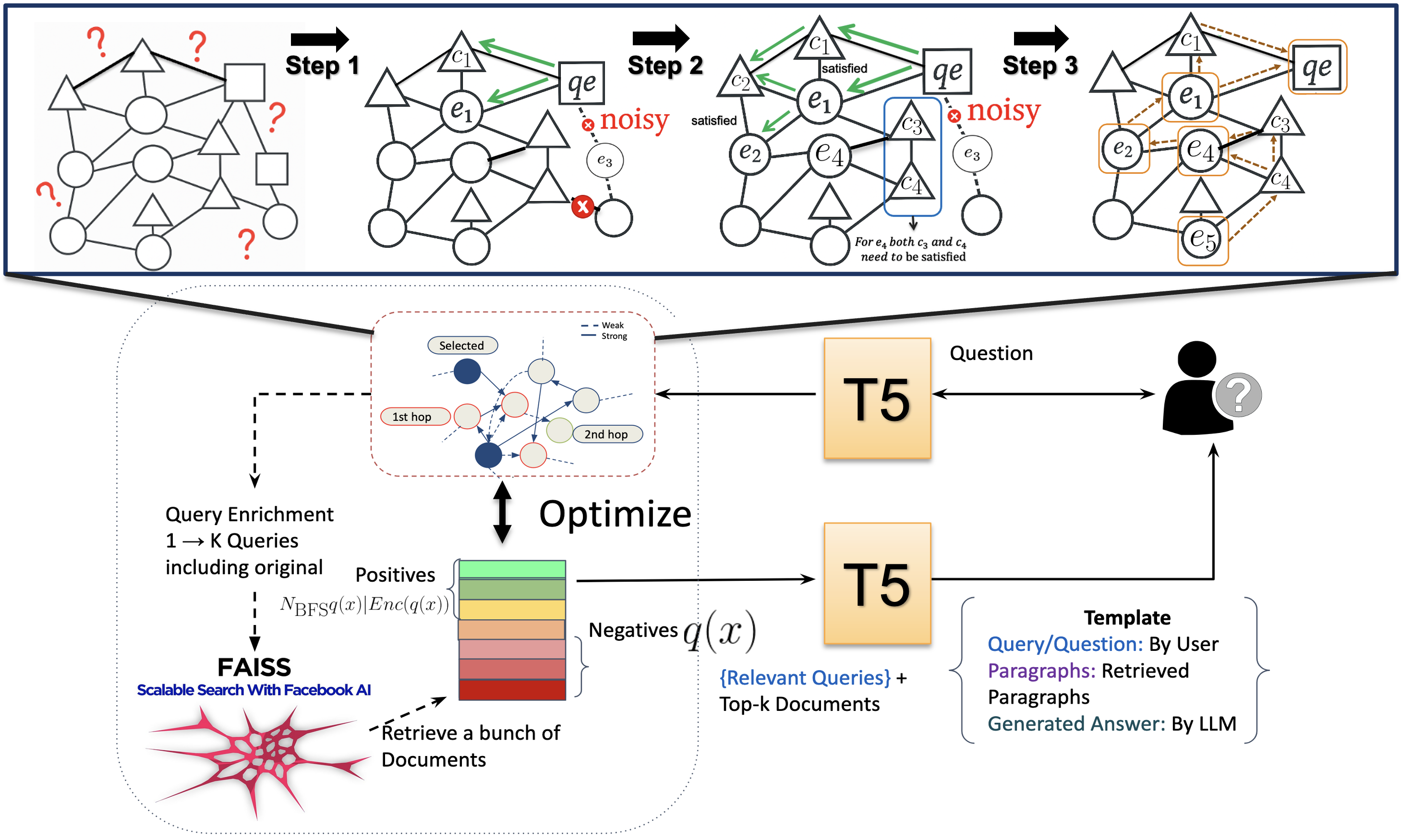

While Retrieval-Augmented Generation (RAG) systems have advanced large language model performance, their internal reasoning remains largely opaque, hindering trust and debuggability, particularly in high-stakes domains. This work introduces ‘Neurosymbolic Retrievers for Retrieval-augmented Generation’, a novel framework integrating symbolic knowledge graphs with neural retrieval to address these limitations. By explicitly incorporating interpretable symbolic features and knowledge-driven query enhancement, we demonstrate improved transparency and performance in complex tasks like mental health risk assessment. Can this neurosymbolic approach unlock more reliable and explainable AI systems for critical decision-making processes?

The Imperative of Clinical Inference

Clinical assessment demands more than locating pertinent data; it requires inference, pattern recognition, and contextual understanding, a combination that frequently overwhelms traditional information retrieval systems. Optimized for keyword matching and document retrieval, these systems struggle with the subtleties of medical reasoning, where a constellation of symptoms, patient history, and diagnostic possibilities must be weighed together. The core limitation is that they do not model the relationships between clinical concepts: how a fever might indicate infection, or how a specific medication could interact with a pre-existing condition. As a result, they often return a deluge of irrelevant information or, more critically, miss the connections needed for an accurate diagnosis, leaving a significant gap between information access and effective clinical judgment.

An effective clinical support system therefore has to interpret the web of relationships within a patient’s case rather than treat medical knowledge as isolated data points. Symptoms, conditions, and treatments are interconnected elements of a complex biological system, so the system must model the semantic relationships between concepts: recognizing, for instance, that a particular symptom may point to several possible conditions, or that a patient’s existing medications could interact with a proposed treatment. This contextual understanding is what allows ambiguous information to be interpreted correctly, useful hypotheses to be generated, and an appropriate diagnosis and care plan to be reached.

Current clinical decision support systems also falter not for lack of data but because they cannot integrate information gathered from diverse sources over the course of a patient interaction. Each lab result, reported symptom, or prior diagnosis is handled as an isolated data point, so crucial connections and contextual nuances are lost, especially as the clinical picture evolves through successive questions and responses. These tools therefore rarely progress from data retrieval to true clinical reasoning: dynamically updating an assessment from the totality of available evidence, as experienced clinicians do.

Neurosymbolic RAG: A Synthesis of Logic and Data

Neurosymbolic Retrieval-Augmented Generation (RAG) combines the strengths of neural language models with structured knowledge representation. Traditional RAG systems retrieve relevant documents to augment language model prompts; neurosymbolic RAG extends this by incorporating explicit symbolic knowledge, often represented as knowledge graphs or rule-based systems. This integration allows the language model to not only access factual information but also to reason over it based on predefined relationships and logical rules. The framework facilitates the transformation of unstructured text into a structured, machine-readable format, enabling more accurate and interpretable responses compared to systems relying solely on statistical patterns learned from large datasets. This approach improves performance on tasks requiring complex reasoning, knowledge validation, and explainability.
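To make the combination concrete, here is a minimal Python sketch, assuming a toy corpus and a toy knowledge graph of (subject, relation, object) triples. A bag-of-words cosine similarity stands in for the neural retriever, and the symbolic step simply keeps triples whose subject is mentioned in the query; the names `retrieve_passages`, `retrieve_facts`, and `build_prompt` are illustrative and not taken from the paper.

```python
import math
from collections import Counter

# Toy corpus and knowledge graph; contents are illustrative only.
PASSAGES = [
    "Persistent low mood and loss of interest are core symptoms of depression.",
    "Sleep disturbance is common in several mood disorders.",
    "Regular exercise can improve general wellbeing.",
]
TRIPLES = [
    ("low mood", "symptom_of", "depression"),
    ("loss of interest", "symptom_of", "depression"),
    ("depression", "risk_factor_for", "self-harm"),
]


def bow(text: str) -> Counter:
    """Bag-of-words vector; a stand-in for a neural embedding."""
    return Counter(text.lower().replace(".", "").split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_passages(query: str, k: int = 2) -> list[str]:
    """Neural-style step: rank passages by vector similarity to the query."""
    q = bow(query)
    return sorted(PASSAGES, key=lambda p: cosine(q, bow(p)), reverse=True)[:k]


def retrieve_facts(query: str) -> list[tuple[str, str, str]]:
    """Symbolic step: keep triples whose subject entity is mentioned in the query."""
    q = query.lower()
    return [t for t in TRIPLES if t[0] in q]


def build_prompt(query: str) -> str:
    """Combine retrieved passages and symbolic facts into one augmented prompt."""
    lines = [f"Question: {query}", "Passages:"]
    lines += [f"- {p}" for p in retrieve_passages(query)]
    lines.append("Facts:")
    lines += [f"- ({s}, {r}, {o})" for s, r, o in retrieve_facts(query)]
    return "\n".join(lines)


print(build_prompt("Patient reports low mood and loss of interest"))
```

The resulting prompt would then be passed to a language model, which is not shown here. A real system would replace the bag-of-words scorer with a dense encoder and the substring match with proper entity linking.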

Knowledge Graphs facilitate interpretable reasoning in Neurosymbolic RAG systems by representing information as entities and relationships, enabling explicit semantic traversal paths. Instead of relying solely on vector similarity searches, these systems can navigate the graph to identify relevant information based on defined relationships between concepts. This allows the system to not only retrieve documents but also to demonstrate how those documents relate to the query through the traversed path – for example, identifying a connection between a patient’s symptom, a related disease, and a specific treatment protocol. The explicit nature of these traversal paths provides transparency into the reasoning process, enabling verification and debugging, and ultimately fostering trust in the system’s outputs.
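The traversal-path idea can be illustrated with a breadth-first search that returns the chain of (head, relation, tail) triples linking two concepts, so the path itself doubles as an explanation. This is a sketch over a hypothetical three-node graph built from the fever-to-infection example earlier in the article; the relation names and the treatment node are assumptions.

```python
from collections import deque
from typing import Optional

# Hypothetical clinical knowledge graph: node -> [(relation, neighbour), ...].
KG = {
    "fever": [("suggests", "bacterial infection")],
    "bacterial infection": [("treated_by", "antibiotic therapy")],
    "antibiotic therapy": [],
}

Triple = tuple[str, str, str]


def find_path(graph: dict, start: str, goal: str) -> Optional[list[Triple]]:
    """Breadth-first search; returns the shortest chain of (head, relation, tail) triples, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for relation, neighbour in graph.get(node, []):
            if neighbour not in visited:
                visited.add(neighbour)
                queue.append((neighbour, path + [(node, relation, neighbour)]))
    return None


def explain(path: list[Triple]) -> str:
    """Render a path as a human-readable reasoning trace."""
    if not path:
        return ""
    parts = [path[0][0]]
    for _, relation, tail in path:
        parts.append(f"--{relation}--> {tail}")
    return " ".join(parts)


print(explain(find_path(KG, "fever", "antibiotic therapy")))
```

Here `explain(find_path(KG, "fever", "antibiotic therapy"))` yields `fever --suggests--> bacterial infection --treated_by--> antibiotic therapy`, a trace a clinician can check edge by edge.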

The iterative nature of neurosymbolic RAG systems allows for the progressive refinement of a patient’s clinical profile through the accumulation of symbolic features extracted from knowledge graphs. Each conversation turn doesn’t operate in isolation; instead, features identified in prior interactions are retained and incorporated into subsequent knowledge graph traversals. This accumulation facilitates a more nuanced and comprehensive understanding of the patient’s condition, moving beyond single-query responses to establish a longitudinal clinical picture. Consequently, the system can identify complex relationships and dependencies that would be missed by purely statistical language models, leading to more informed and accurate diagnostic and treatment recommendations.
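One way to picture feature accumulation across turns is a patient profile that keeps every feature seen so far and re-expands the whole set over the knowledge graph after each new utterance. The sketch below assumes a hypothetical keyword lexicon as the symbolic feature extractor and a tiny hypothetical graph; it is not the paper’s extraction pipeline.

```python
from dataclasses import dataclass, field

# Hypothetical graph linking extracted features to broader clinical concepts.
KG = {
    "insomnia": {"sleep disturbance"},
    "sleep disturbance": {"depressive symptom"},
    "hopelessness": {"depressive symptom"},
}

# Hypothetical lexicon standing in for a symbolic feature extractor.
LEXICON = {"can't sleep": "insomnia", "hopeless": "hopelessness"}


def extract_features(utterance: str) -> set[str]:
    text = utterance.lower()
    return {feature for phrase, feature in LEXICON.items() if phrase in text}


def expand(features: set[str], graph: dict) -> set[str]:
    """Add every concept reachable from the given features in the graph."""
    seen, frontier = set(features), list(features)
    while frontier:
        for neighbour in graph.get(frontier.pop(), ()):
            if neighbour not in seen:
                seen.add(neighbour)
                frontier.append(neighbour)
    return seen


@dataclass
class PatientProfile:
    features: set[str] = field(default_factory=set)

    def update(self, utterance: str) -> set[str]:
        """Merge this turn's features with all earlier ones, then re-expand."""
        self.features = expand(self.features | extract_features(utterance), KG)
        return self.features


profile = PatientProfile()
print(sorted(profile.update("I can't sleep at night")))
print(sorted(profile.update("I feel hopeless")))
```

After `update("I can't sleep at night")` the profile holds `insomnia`, `sleep disturbance`, and `depressive symptom`; a later `update("I feel hopeless")` adds `hopelessness` without discarding the earlier features.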

Refining Retrieval Through Graph-Based Optimization

KG-Path RAG differs from the basic neurosymbolic RAG pipeline by treating Knowledge Graph (KG) traversal and information retrieval not as sequential steps but as a single, unified optimization problem. This lets the retriever weigh KG structure and content relevance at the same time. Traditional methods first traverse the KG to identify relevant nodes and then retrieve the associated text; KG-Path RAG instead defines an objective function that directly maximizes the relevance of the retrieved information given both the query and the KG’s relational data, a more holistic and potentially more accurate retrieval strategy. The joint optimization relies on algorithms that consider both the path taken through the KG and the content of the nodes visited, weighting each by its contribution to answering the query.
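The article does not spell out KG-Path RAG’s objective, so the following is only a hypothetical instance of joint optimization: each candidate path is scored as the product of its edge weights (a structural plausibility term that shrinks as paths grow) multiplied by the fraction of query terms covered by the text attached to the visited nodes (a content term that grows as paths grow). The graph, weights, and node texts are invented for illustration, and exhaustive enumeration stands in for whatever search the paper actually uses.

```python
import math

STOPWORDS = {"a", "and", "of", "the", "with"}

# Hypothetical weighted KG: node -> [(neighbour, edge weight in [0, 1]), ...].
GRAPH = {
    "low mood": [("depression", 0.9), ("fatigue", 0.4)],
    "depression": [("suicide risk", 0.8)],
    "fatigue": [("anaemia", 0.7)],
}
# Hypothetical text attached to each node (its "content").
NODE_TEXT = {
    "depression": "depression persistent low mood loss of interest",
    "fatigue": "fatigue tiredness low energy",
    "suicide risk": "suicide risk hopelessness thoughts of death",
    "anaemia": "anaemia low iron tiredness",
}


def tokens(text: str) -> set[str]:
    return {t for t in text.lower().split() if t not in STOPWORDS}


def enumerate_paths(seed: str, max_depth: int):
    """Yield (nodes, edge_weights) for every simple path of 1..max_depth edges from seed."""
    stack = [(seed, [seed], [])]
    while stack:
        node, nodes, weights = stack.pop()
        if weights:
            yield nodes[1:], weights
        if len(weights) < max_depth:
            for neighbour, w in GRAPH.get(node, []):
                if neighbour not in nodes:
                    stack.append((neighbour, nodes + [neighbour], weights + [w]))


def joint_score(query: str, path_nodes: list[str], weights: list[float]) -> float:
    """Structure term (product of edge weights) times content term (query coverage)."""
    q = tokens(query)
    covered = set().union(*(tokens(NODE_TEXT[n]) for n in path_nodes))
    coverage = len(q & covered) / len(q) if q else 0.0
    return math.prod(weights) * coverage


def best_path(query: str, seed: str, max_depth: int = 2):
    scored = [(joint_score(query, n, w), n) for n, w in enumerate_paths(seed, max_depth)]
    return max(scored, default=(0.0, []))


print(best_path("low mood with hopelessness", "low mood"))
```

With the query `"low mood with hopelessness"` and seed `"low mood"`, the two-hop path through `depression` to `suicide risk` wins (score about 0.72) over the one-hop path to `depression` alone (0.6), because the extra hop covers `hopelessness` at a modest structural cost. For larger graphs, a beam search over the same objective would replace exhaustive enumeration.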

Retrieval refinement with algorithms such as PageRank re-weights retrieved documents according to their connectivity and importance within a knowledge graph. PageRank, originally designed to rank web pages, is adapted to score knowledge graph nodes (representing documents or passages) iteratively, based on the number and quality of their incoming links. Relevance thus propagates across the graph, favoring nodes with high in-degree and links from other highly ranked nodes. The resulting scores are then used to refine the initial retrieval scores, promoting more pertinent information and down-weighting less relevant results. Unlike simple keyword matching, this approach incorporates relational information and judges a document’s importance by its position within the broader knowledge context.
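A common way to realize this is personalized PageRank, a PageRank variant whose teleport distribution is set to the initial retrieval scores, followed by a linear blend of the two score sets. The sketch below is written under that assumption; the damping factor of 0.85, the blend weight `beta`, and the four-passage link graph are illustrative choices rather than values from the paper.

```python
def personalized_pagerank(graph, personalization, damping=0.85, max_iter=200, tol=1e-12):
    """Power iteration for PageRank with a custom teleport vector.

    graph: node -> list of nodes it links to (every node must be a key).
    personalization: node -> non-negative weight (e.g. initial retrieval score).
    """
    nodes = list(graph)
    total = sum(personalization.get(n, 0.0) for n in nodes)
    teleport = {n: personalization.get(n, 0.0) / total for n in nodes}
    rank = dict(teleport)
    for _ in range(max_iter):
        new = {n: (1 - damping) * teleport[n] for n in nodes}
        for n in nodes:
            targets = graph[n]
            if targets:
                share = damping * rank[n] / len(targets)
                for m in targets:
                    new[m] += share
            else:
                # Dangling node: spread its mass along the teleport vector.
                for m in nodes:
                    new[m] += damping * rank[n] * teleport[m]
        delta = sum(abs(new[n] - rank[n]) for n in nodes)
        rank = new
        if delta < tol:
            break
    return rank


def rerank(graph, initial_scores, beta=0.5):
    """Blend normalized retrieval scores with PageRank scores and sort passages."""
    total = sum(initial_scores.values())
    base = {n: initial_scores.get(n, 0.0) / total for n in graph}
    ppr = personalized_pagerank(graph, base)
    combined = {n: beta * base[n] + (1 - beta) * ppr[n] for n in graph}
    return sorted(combined, key=combined.get, reverse=True)


# Hypothetical passage link graph (e.g. passages sharing KG entities) and retrieval scores.
LINKS = {"d1": ["d3"], "d2": ["d3"], "d3": ["d1"], "d4": ["d3"]}
SCORES = {"d1": 0.5, "d2": 0.3, "d3": 0.2, "d4": 0.0}
print(rerank(LINKS, SCORES))
```

In this example, passage `d3` starts below `d2` (0.2 versus 0.3) but receives links from three other passages, so the blended ranking becomes `d1, d3, d2, d4`. Dangling passages with no outgoing links redistribute their mass along the teleport vector, so the scores keep summing to one.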

Proknow-RAG enhances retrieval-augmented generation for clinical decision support by using standardized questionnaires to re-rank retrieved passages. Rather than relying on relevance scores alone, it prioritizes information according to validated assessment workflows, specifically the order in which questions are posed to elicit diagnostic or treatment information. By aligning retrieval order with these established workflows, Proknow-RAG achieves state-of-the-art performance on tasks requiring accurate, clinically aligned responses, as shown in comparative evaluations against other RAG approaches.
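The re-ranking step can be sketched as a two-level sort: passages are ordered first by the earliest questionnaire item they address and only then by retrieval score. The questionnaire items and keyword sets below are hypothetical placeholders, not a validated instrument, and keyword matching is a deliberate simplification of however Proknow-RAG actually maps passages to items.

```python
# Hypothetical ordered questionnaire: (item name, trigger keywords).
QUESTIONNAIRE = [
    ("interest", {"interest", "pleasure"}),
    ("mood", {"down", "depressed", "hopeless"}),
    ("sleep", {"sleep", "insomnia"}),
    ("self-harm", {"self-harm", "hurt", "suicide"}),
]


def item_index(passage: str) -> int:
    """Position of the earliest questionnaire item the passage addresses."""
    words = set(passage.lower().replace(",", " ").replace(".", " ").split())
    for index, (_, keywords) in enumerate(QUESTIONNAIRE):
        if words & keywords:
            return index
    return len(QUESTIONNAIRE)  # passages matching no item go last


def rerank_by_questionnaire(scored):
    """Sort (passage, retrieval_score) pairs by questionnaire order, then by score."""
    return sorted(scored, key=lambda pair: (item_index(pair[0]), -pair[1]))


retrieved = [
    ("Patient has thoughts of suicide.", 0.9),
    ("Patient reports insomnia most nights.", 0.7),
    ("Patient feels hopeless and down.", 0.8),
    ("Patient enjoys walking.", 0.95),
    ("Patient has lost interest in hobbies.", 0.6),
]
for passage, score in rerank_by_questionnaire(retrieved):
    print(f"{score:.2f}  {passage}")
```

The suicide-related passage, despite a retrieval score of 0.9, is placed after the interest, mood, and sleep passages, mirroring the order in which those questions would be asked; passages that match no item fall to the end.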

Validating Interpretability: The Cornerstone of Clinical Adoption

Rigorous evaluation by clinical experts remains paramount in establishing the viability of neurosymbolic artificial intelligence for mental healthcare applications. While automated metrics offer a quantitative assessment of performance, they often fail to capture the nuances of clinical reasoning and the potential for real-world impact. Consequently, direct assessment by licensed psychologists and psychiatrists is essential to validate not only the accuracy of these systems – such as Proknow-RAG and KG-Path RAG – but also their clinical relevance and the trustworthiness of their outputs. This expert scrutiny ensures that the systems align with established clinical practices, avoid potentially harmful misinterpretations, and ultimately contribute meaningfully to patient care, moving beyond purely statistical success to demonstrate genuine utility in a complex medical domain.

A critical component of gaining clinical acceptance for any artificial intelligence system lies in demystifying its decision-making process. Proknow-RAG and KG-Path RAG address this need through Knowledge Graph traversal, which allows clinicians to follow the system’s reasoning from initial input to final conclusion. Rather than presenting a ‘black box’ output, these systems visibly trace the connections and inferences made using established medical knowledge – essentially showing how a particular risk assessment was reached. This transparency isn’t simply about revealing the steps; it’s about enabling clinicians to evaluate the validity of those steps, identify potential biases, and ultimately, exercise their own professional judgment in conjunction with the AI’s insights. The ability to scrutinize the reasoning pathway is paramount for building trust and fostering a collaborative relationship between human expertise and artificial intelligence in sensitive healthcare applications.

Recent evaluations show substantial promise for automated mental health risk assessment. Proknow-RAG, a neurosymbolic system, achieved 89.1% accuracy in identifying suicide risk and 83.2% accuracy in detecting self-harm risk. Beyond accuracy, licensed clinical psychologists agreed with its reasoning in 75% of cases, supporting its interpretability and clinical relevance, and the system is also reported to be computationally efficient. KG-Path RAG complemented these findings with 84.7% accuracy in depression detection, suggesting that knowledge-graph-augmented RAG models hold significant potential as tools to support mental healthcare professionals and improve patient outcomes.

The pursuit of robust knowledge representation, as demonstrated in this neurosymbolic RAG framework, echoes a fundamental tenet of computational elegance. The article’s emphasis on integrating symbolic reasoning with neural retrieval isn’t merely about improving accuracy; it’s about establishing a verifiable foundation for decision-making. As John McCarthy observed, “Every worthwhile problem has a correct solution.” This paper strives for that ‘correct solution’ by grounding retrieval-augmented generation in the provable logic of knowledge graphs, moving beyond systems that simply ‘work’ to those that can be demonstrably trusted – particularly vital in critical domains like clinical decision support where alignment with established procedures is paramount.

What’s Next?

The pursuit of ‘intelligent’ systems invariably encounters the rigidity of formalization. This work, while elegantly bridging neural retrieval with symbolic reasoning, merely highlights the persistent chasm between correlation and causation. The demonstrated improvements in clinical decision support are noteworthy, yet the fundamental problem remains: can a system truly understand procedural knowledge, or only approximate it through statistical association? Reproducibility, a cornerstone of scientific inquiry, demands a verifiable logic, not just demonstrable performance on a held-out test set.

Future investigations must confront the limitations of knowledge graph construction itself. The inherent subjectivity in defining nodes and relationships introduces bias, effectively encoding human assumptions into the very foundation of the system. A truly robust framework would require a method for self-validation: a means by which the system could assess the consistency and completeness of its own knowledge base. Without such a mechanism, the observed gains, however promising, remain contingent on the quality of the initial data, a precarious foundation indeed.

The ultimate test lies not in achieving higher accuracy scores, but in establishing deterministic behavior. If the same input consistently yields the same output, and that output can be traced back to a logically sound derivation, then, and only then, can one begin to speak of genuine intelligence. Until then, these systems remain sophisticated pattern matchers, elegantly disguised as reasoners.

Original article: https://arxiv.org/pdf/2601.04568.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-11 16:18