Author: Denis Avetisyan

A new framework translates the complex obligations of the EU AI Act into practical, testable components for developers and organizations deploying high-risk artificial intelligence.

This paper proposes a structured approach to verify compliance with the EU AI Act’s requirements for high-risk AI systems, focusing on risk management and data governance.

Despite growing momentum toward responsible AI, translating the broad legal mandates of emerging regulations into practical, verifiable components remains a significant challenge. This paper, ‘Assessing High-Risk Systems: An EU AI Act Verification Framework’, addresses this gap by proposing a structured approach to systematically verify compliance with the EU AI Act. The framework organizes assessment along two key dimensions (method type and assessment target) and maps legal requirements to concrete verification activities, bridging the gap between policy and practice. Will this approach foster consistent, trustworthy AI systems and streamline the path to regulatory alignment across Europe?

The Inevitable Architecture of Regulation

The European Union AI Act, which entered into force in August 2024 and whose obligations are being phased in over the following years, represents a pivotal moment in the governance of artificial intelligence, with implications extending far beyond the EU’s borders. This landmark legislation specifically targets ‘High-Risk AI Systems’ – those deployed in critical infrastructure, education, employment, essential private and public services, law enforcement, and border control – subjecting them to stringent requirements before deployment. Unlike previous, largely self-regulatory approaches, the AI Act establishes legally binding obligations concerning data quality, transparency, human oversight, and cybersecurity. Consequently, developers and deployers of these systems are compelled to conduct thorough risk assessments, implement robust mitigation strategies, and maintain comprehensive documentation. This proactive, legally backed framework isn’t simply about compliance; it aims to foster innovation grounded in safety, ethical considerations, and public trust, potentially setting a global standard for responsible AI development and deployment.

The evolving regulatory environment, particularly concerning artificial intelligence, necessitates a fundamental shift towards preventative strategies. Rather than addressing issues after they arise, organizations are now compelled to integrate risk management, quality assurance, and ethical considerations directly into the design, development, and deployment phases of AI systems. This proactive stance involves rigorous testing, continuous monitoring for unintended biases or harms, and the establishment of clear accountability measures. It extends beyond mere technical functionality, demanding a holistic evaluation of societal impact and adherence to principles of fairness, transparency, and respect for fundamental rights. Ultimately, this approach aims to foster responsible innovation and build public trust in the increasingly pervasive role of AI technologies.

To navigate the evolving demands of artificial intelligence regulation, organizations are increasingly compelled to establish comprehensive governance frameworks. These frameworks extend beyond simple compliance checklists, demanding a holistic approach to AI lifecycle management, from data sourcing and model development to deployment and ongoing monitoring. A robust system necessitates clearly defined roles and responsibilities, rigorous documentation practices, and transparent audit trails. Prioritizing such governance isn’t merely about avoiding penalties associated with non-compliance, such as the significant fines outlined in the EU AI Act, but is fundamentally about fostering stakeholder trust. Demonstrating a commitment to responsible AI through proactive governance builds confidence with customers, partners, and the public, ultimately enabling wider adoption and realizing the full potential of these powerful technologies.

Non-compliance with evolving AI regulations, such as the EU AI Act, carries significant repercussions for organizations. Under the Act, fines for the most serious infringements can reach EUR 35 million or 7% of worldwide annual turnover, with the applicable ceiling depending on the severity of the infraction and the size of the company. Beyond monetary fines, however, lies the potential for restricted market access. AI systems deemed non-compliant may be barred from operating within the European Economic Area, effectively limiting a product’s reach and hindering commercial viability. This creates a substantial barrier to entry for developers and a considerable risk for established businesses, emphasizing the critical need for proactive adherence to the new legal standards and the implementation of robust risk mitigation strategies.

Charting a Course Through Complexity: Key Methodologies

A comprehensive Risk Management Process, as mandated by the EU AI Act, necessitates a systematic approach to identifying, analyzing, and mitigating potential harms associated with AI systems. This process requires organizations to define the scope of potential risks – including those related to fundamental rights, safety, and societal impact – and assess their likelihood and severity. Mitigation strategies must then be implemented, documented, and continuously monitored throughout the AI system’s lifecycle. This includes establishing clear risk acceptance criteria, implementing technical and organizational measures to reduce risks, and developing contingency plans for addressing residual risks. Documentation of this process, including risk assessments, mitigation plans, and monitoring results, is a core requirement for demonstrating compliance and facilitating regulatory audits.
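
As an illustration of the likelihood-and-severity assessment described above, here is a minimal sketch of a risk register in Python. The 1-to-5 scales, the multiplicative score, the `ACCEPTANCE_THRESHOLD` value, and the example risks are all assumptions made for this example; neither the EU AI Act nor the paper prescribes them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    severity: int    # assumed scale: 1 (negligible) to 5 (critical)

    @property
    def score(self) -> int:
        # Simple likelihood x severity product; other scoring schemes exist.
        return self.likelihood * self.severity


# Hypothetical risk acceptance criterion: scores above this need mitigation.
ACCEPTANCE_THRESHOLD = 6


def triage(risks, threshold=ACCEPTANCE_THRESHOLD):
    """Return (needs_mitigation, accepted), each sorted by descending score."""
    ordered = sorted(risks, key=lambda r: r.score, reverse=True)
    needs_mitigation = [r for r in ordered if r.score > threshold]
    accepted = [r for r in ordered if r.score <= threshold]
    return needs_mitigation, accepted


# Illustrative entries only.
register = [
    Risk("biased outcomes for a protected group", likelihood=3, severity=5),
    Risk("service outage under peak load", likelihood=2, severity=3),
    Risk("stale training data", likelihood=4, severity=2),
]
needs_mitigation, accepted = triage(register)
for risk in needs_mitigation:
    print(f"mitigate: {risk.name} (score {risk.score})")
```

Keeping the acceptance threshold as an explicit, named value means the criterion itself can be documented and reviewed alongside the assessments it governs.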

A Quality Management System (QMS) applied to AI development establishes a structured framework for consistent performance throughout the entire AI lifecycle. This includes defined procedures for requirements gathering, data management, model design, implementation, testing, and deployment. Crucially, a QMS incorporates ongoing monitoring of model performance in production environments, with mechanisms for identifying and addressing drift, bias, or unexpected behavior. Documentation within the QMS details processes, validation results, and any corrective actions taken, providing a verifiable record of adherence to established quality standards and facilitating continuous improvement of AI systems.
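
One common way to watch for drift in production is the Population Stability Index (PSI), which compares the distribution of a feature or score at training time with its distribution in live traffic. The sketch below is an assumption about how such a check might look, not a method taken from the paper; the bin count, the smoothing constant, and the 0.25 alert level (a widely used rule of thumb) are illustrative choices.

```python
import math


def psi(reference, live, bins=10):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if reference is constant

    def fractions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Additive smoothing avoids log(0) for empty bins.
        return [(c + 0.5) / (len(values) + 0.5 * bins) for c in counts]

    ref_frac, live_frac = fractions(reference), fractions(live)
    return sum((a - e) * math.log(a / e) for e, a in zip(ref_frac, live_frac))


reference = [i / 100 for i in range(100)]   # stand-in for training-time scores
shifted = [v + 0.5 for v in reference]      # stand-in for drifted live scores

print(f"no drift: {psi(reference, reference):.3f}")
print(f"drifted:  {psi(reference, shifted):.3f}")
if psi(reference, shifted) > 0.25:          # hypothetical alert level
    print("drift alert: open a corrective action in the QMS")
```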

Detailed technical documentation serves as a primary mechanism for demonstrating compliance with AI regulations, such as the EU AI Act. This documentation must encompass a comprehensive description of the AI system’s design, development, and intended purpose, alongside details regarding the training datasets utilized, algorithms employed, and performance metrics achieved. Furthermore, it should outline the system’s limitations, known risks, and mitigation strategies. Crucially, this documentation facilitates a clear audit trail, enabling regulators and internal stakeholders to verify the system’s adherence to established standards and principles, and to trace the rationale behind design choices and operational parameters. The documentation should be regularly updated to reflect any modifications or improvements to the AI system throughout its lifecycle.

Meticulous record-keeping within AI systems necessitates the comprehensive documentation of data provenance, model development processes, validation results, and deployment details. These records must include information pertaining to data acquisition, pre-processing steps, feature engineering, model architecture, training parameters, performance metrics, and any modifications made throughout the AI lifecycle. Furthermore, records should detail access controls, security measures, and incident reports. Maintaining this level of detail enables a clear audit trail for regulatory compliance, facilitates root cause analysis of failures, and supports ongoing monitoring and improvement of AI system performance, demonstrably fulfilling due diligence obligations and establishing accountability.
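
To make the idea of an audit trail concrete, the following sketch stores lifecycle events in an append-only log in which each record carries a SHA-256 hash of its content and of the previous record. Hash chaining is an assumption added here to make tampering detectable; the text above does not require it, and the event names and fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash preceding the first record


def _digest(body):
    # Canonical JSON (sorted keys) so the same content always hashes the same.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(log, event, details):
    """Append a record that is linked to the previous one by its hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"event": event, "details": details, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})
    return log


def verify_chain(log):
    """Return True if no record was altered, removed, or reordered."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: record[k] for k in ("event", "details", "prev_hash")}
        if record["prev_hash"] != prev_hash or record["hash"] != _digest(body):
            return False
        prev_hash = record["hash"]
    return True


audit_log = []
append_record(audit_log, "data_acquired", {"source": "example-dataset", "rows": 1000})
append_record(audit_log, "model_trained", {"learning_rate": 0.01, "accuracy": 0.93})
print(verify_chain(audit_log))
```

A real deployment would also record timestamps and the acting user, and would store the log where the system under audit cannot rewrite it.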

Beyond the Checklist: Cultivating Trustworthy Systems

Human agency and oversight in AI systems necessitate the implementation of mechanisms for meaningful human control throughout the AI lifecycle, from development and deployment to ongoing operation and decommissioning. This includes establishing clear lines of responsibility for AI-driven decisions, enabling human intervention to override or correct system outputs when necessary, and providing comprehensive audit trails to track system behavior and identify potential issues. Effective oversight requires not only technical interfaces for human interaction, but also clearly defined protocols and training for personnel responsible for managing and monitoring AI systems, ensuring they can appropriately respond to unexpected behavior and prevent unintended consequences. The goal is to maintain human accountability and prevent the automation of decisions that require ethical or contextual judgment.
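
A simple way to picture human intervention is a gate that lets the model decide only when it is confident and otherwise hands the case to a reviewer, recording who made the final call. This is a sketch under assumptions: the 0.9 threshold, the `Decision` fields, and the `review` callback are hypothetical, and confidence-based routing is only one of several possible oversight designs.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class Decision:
    model_output: str   # what the model proposed
    confidence: float   # the model's self-reported confidence
    final_output: str   # what was actually decided
    decided_by: str     # "model" or a reviewer identifier


def route(
    model_output: str,
    confidence: float,
    review: Callable[[str, float], Tuple[str, str]],
    threshold: float = 0.9,  # hypothetical cut-off for automatic decisions
) -> Decision:
    """Accept confident outputs; send the rest to a human who may override."""
    if confidence >= threshold:
        return Decision(model_output, confidence, model_output, "model")
    reviewer_id, final_output = review(model_output, confidence)
    return Decision(model_output, confidence, final_output, reviewer_id)


def example_reviewer(model_output, confidence):
    # Stand-in for a real review interface; here the reviewer always rejects.
    return "reviewer-01", "reject"


print(route("approve", 0.97, example_reviewer))
print(route("approve", 0.55, example_reviewer))
```

Because each `Decision` keeps both the model's proposal and the final outcome, overrides remain visible in the audit trail rather than silently replacing the original output.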

Technical robustness and safety in AI systems necessitate consistent and predictable performance across a defined operational domain. This includes resilience to variations in input data, including noisy, incomplete, or adversarial examples, and maintenance of acceptable performance levels under stress conditions such as high load or resource constraints. System validation requires comprehensive testing, encompassing both nominal and edge-case scenarios, alongside the implementation of fault tolerance mechanisms and fail-safe procedures. Furthermore, robustness extends to the system’s ability to gracefully degrade in performance rather than exhibiting catastrophic failures, and to maintain security against malicious attacks aimed at compromising data integrity, model accuracy, or overall system functionality.
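
Resilience to noisy inputs can be checked with a small perturbation test: add random noise to each input and measure how often the prediction stays the same. The sketch below assumes Gaussian noise, a fixed seed for reproducibility, and a toy threshold classifier; all three are illustrative, and the test says nothing about adversarial inputs, which need dedicated methods.

```python
import random


def stability_rate(predict, inputs, noise_std=0.05, trials=20, seed=0):
    """Fraction of noisy copies whose prediction matches the clean prediction."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    stable = 0
    total = 0
    for x in inputs:
        baseline = predict(x)
        for _ in range(trials):
            noisy = [v + rng.gauss(0.0, noise_std) for v in x]
            stable += predict(noisy) == baseline
            total += 1
    return stable / total


def toy_classifier(x):
    # Hypothetical model: class 1 if the feature sum exceeds 1.0, else class 0.
    return int(sum(x) > 1.0)


far_from_boundary = [[0.0, 0.0], [2.0, 2.0]]
near_boundary = [[0.5, 0.5]]

print(stability_rate(toy_classifier, far_from_boundary))
print(stability_rate(toy_classifier, near_boundary))
```

Inputs close to the decision boundary are expected to score lower than inputs far from it, which is the kind of edge-case behavior the validation described above is meant to surface.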

Effective data governance for AI systems requires a multi-faceted approach to data management. This includes establishing clear data lineage, ensuring data accuracy through validation and error detection, and maintaining data relevance by regularly updating and removing obsolete information. Critically, representativeness must be addressed by actively identifying and mitigating biases present in training data, potentially through techniques like data augmentation or re-weighting. Data governance frameworks should also define clear roles and responsibilities for data quality control, access management, and compliance with relevant regulations, ultimately improving model performance and fostering trustworthy AI applications.
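
Re-weighting, mentioned above as one way to address representativeness, can be sketched in a few lines: each sample receives a weight inversely proportional to the size of its group, so every group contributes equally to training in aggregate. The group labels below are hypothetical, and inverse-frequency weighting is only one of several re-weighting schemes.

```python
from collections import Counter


def group_weights(groups):
    """Per-sample weights that give every group the same total weight.

    Weight for a sample in group g is n / (k * count[g]), where n is the
    number of samples and k the number of distinct groups. The weights
    sum to n, so the overall scale of the training loss is unchanged.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


# Illustrative labels: group "a" is over-represented three to one.
groups = ["a", "a", "a", "b"]
weights = group_weights(groups)
for g, w in zip(groups, weights):
    print(g, round(w, 3))
```

Many training APIs accept such values as per-sample weights, but the exact parameter name depends on the library in use.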

Transparency in AI systems, facilitated by methods such as Explainable AI (XAI), is crucial for establishing user trust and enabling effective oversight. XAI techniques aim to make AI decision-making processes understandable to humans, moving beyond “black box” models. This involves providing insights into the factors influencing predictions, the reasoning behind conclusions, and the system’s confidence levels. Increased transparency allows stakeholders – including developers, auditors, and end-users – to identify potential biases, errors, or unintended consequences, and to validate the system’s behavior. Furthermore, it supports accountability by enabling a clear understanding of why a particular decision was made, which is essential for regulatory compliance and responsible AI deployment.
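
As one concrete example of an explanation technique, permutation importance estimates how much a model relies on a feature by shuffling that feature's values and measuring the drop in accuracy. The article does not name a specific XAI method, so this choice, the toy model, and the data are assumptions made for illustration.

```python
import random


def permutation_importance(predict, X, y, seed=0):
    """Accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(row) == target for row, target in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        column = [row[j] for row in X]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
        importances.append(baseline - accuracy(shuffled))
    return importances


def toy_model(row):
    # Hypothetical model that looks only at the first feature.
    return row[0]


X = [[0, 5], [1, 3], [0, 8], [1, 1], [0, 2], [1, 9]]
y = [0, 1, 0, 1, 0, 1]
print(permutation_importance(toy_model, X, y))
```

The second feature gets an importance of zero because the toy model never reads it; a reviewer seeing a large importance for an unexpected feature would have a concrete lead for investigating bias.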

The Convergence of Standards and Reality

The implementation of standards such as ISO/IEC 42001 offers organizations a robust, systematic approach to managing the risks inherent in artificial intelligence systems. This framework isn’t merely a checklist of best practices; it establishes a comprehensive management system that integrates risk assessment and governance into the entire AI lifecycle. Critically, ISO/IEC 42001 is designed to align with emerging regulatory landscapes, most notably the European Union’s AI Act. By adopting this standard, organizations demonstrate a proactive commitment to responsible AI development and deployment, facilitating compliance with legal requirements and building stakeholder trust. The standard provides a structured methodology for identifying, analyzing, and mitigating potential harms, ensuring that AI systems are not only innovative but also safe, ethical, and accountable.

The standard ISO/IEC 23894 provides a systematic framework for managing risk throughout the lifecycle of an AI system. It moves beyond simply identifying potential harms – encompassing biases, inaccuracies, and unintended consequences – to offer a structured process for their mitigation. This involves establishing a risk management process, assessing the severity and likelihood of potential harms, and implementing appropriate controls to reduce these risks to an acceptable level. Notably, ISO/IEC 23894 advocates for a continuous monitoring and improvement cycle, ensuring that risk management remains adaptive to evolving AI technologies and changing societal expectations. By detailing concrete steps for risk assessment, treatment, and communication, the standard empowers organizations to proactively address potential harms, fostering responsible AI development and deployment.

To fortify the dependability of artificial intelligence systems, proactive security measures are essential, and intrusion detection systems represent a key component of this defense. These systems continuously monitor AI infrastructure for anomalous activity, identifying potential malicious attacks that could compromise data integrity, model accuracy, or overall system functionality. By establishing a baseline of normal operation, intrusion detection systems can flag deviations indicative of unauthorized access, data breaches, or adversarial manipulations. This heightened vigilance directly supports ‘Technical Robustness and Safety’ by enabling a rapid response to threats, minimizing potential harm, and maintaining the reliable performance of AI applications – crucial for sectors where safety and security are paramount.
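
The baseline-and-deviation idea can be illustrated with a very small anomaly check: learn the mean and standard deviation of a metric during normal operation, then flag values that fall too many standard deviations away. Production intrusion detection systems are far more elaborate; the metric (requests per minute), the sample values, and the z-score threshold of 3 are assumptions for this sketch.

```python
import statistics


def fit_baseline(samples):
    """Summarize normal operation as (mean, sample standard deviation)."""
    return statistics.mean(samples), statistics.stdev(samples)


def is_anomalous(value, baseline, z_threshold=3.0):
    """Flag values more than z_threshold standard deviations from the mean."""
    mean, std = baseline
    if std == 0:
        # A perfectly constant baseline: any different value is a deviation.
        return value != mean
    return abs(value - mean) / std > z_threshold


# Hypothetical requests-per-minute readings against a model endpoint.
normal_traffic = [100, 102, 98, 101, 99]
baseline = fit_baseline(normal_traffic)

for observed in (101, 150):
    status = "ALERT" if is_anomalous(observed, baseline) else "ok"
    print(observed, status)
```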

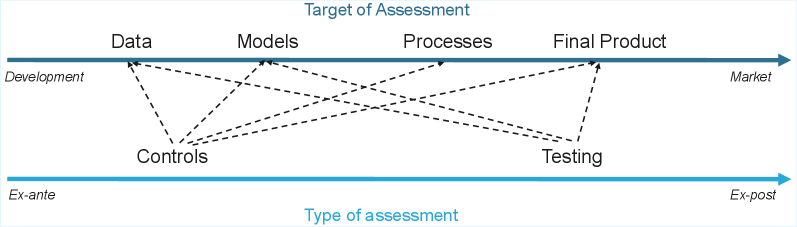

The adoption of international standards for AI governance isn’t merely a procedural step, but a demonstrable commitment to responsible innovation that cultivates stakeholder confidence. This work details a framework connecting the obligations outlined in the EU AI Act to concrete technical and organizational measures, structured across four key dimensions: data management, model development, operational processes, and the characteristics of final AI products. By mapping legal requirements to these practical elements, the research facilitates verifiable compliance, moving beyond aspirational principles to establish a pathway for operationalizing trustworthiness in AI systems and ensuring accountability throughout their lifecycle.

The pursuit of compliance, as detailed in the proposed framework, isn’t about achieving a static state of ‘safe’ AI, but rather acknowledging the inherent unpredictability of complex systems. This resonates deeply with the notion that monitoring is the art of fearing consciously. As David Hilbert observed, “We must be able to answer the question: can a finite description completely determine an infinite object?” The framework attempts to map legal obligations onto testable components, but it implicitly recognizes that complete determination is an illusion. True resilience begins where certainty ends, and the value lies not in eliminating risk, but in developing the capacity to detect and respond to the surprises that inevitably emerge within these growing ecosystems.

What’s Next?

This framework, attempting to solidify the ephemeral concepts of ‘trustworthy AI’ into testable assertions, is not a solution; it is a meticulously crafted invitation to future failures. The very act of defining compliance creates a local optimum, a brittle point around which unforeseen risks will inevitably coalesce. Long stability is the sign of a hidden disaster; a system declared ‘compliant’ is simply one where the edges of its vulnerabilities have not yet been discovered.

The true challenge lies not in checking boxes, but in cultivating resilience. The field must shift from verification – proving a system is safe at a single moment – to monitoring its evolution. Data governance, rightly highlighted, is not merely about present control, but about anticipating the subtle drifts in data distributions that will warp a system’s behavior over time. These systems don’t fail; they transform, becoming something other than what was intended.

Future work should abandon the search for absolute ‘trust’ and embrace the study of graceful degradation. The focus must move beyond the individual system and toward the ecosystem it inhabits – the complex interplay of data, users, and unintended consequences. The EU AI Act, and frameworks like this one, are not endpoints, but the first hesitant steps in a long, uncertain journey.

Original article: https://arxiv.org/pdf/2512.13907.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-12-17 13:37