Author: Denis Avetisyan

New research reveals that students aren’t just using ChatGPT; they’re actively learning how to interact with it, developing sophisticated strategies for leveraging its strengths and navigating its limitations.

This study demonstrates that naturalistic ChatGPT interaction fosters AI literacy through the emergence of distinct ‘use genres’ reflecting self-regulated learning and strategic tool integration.

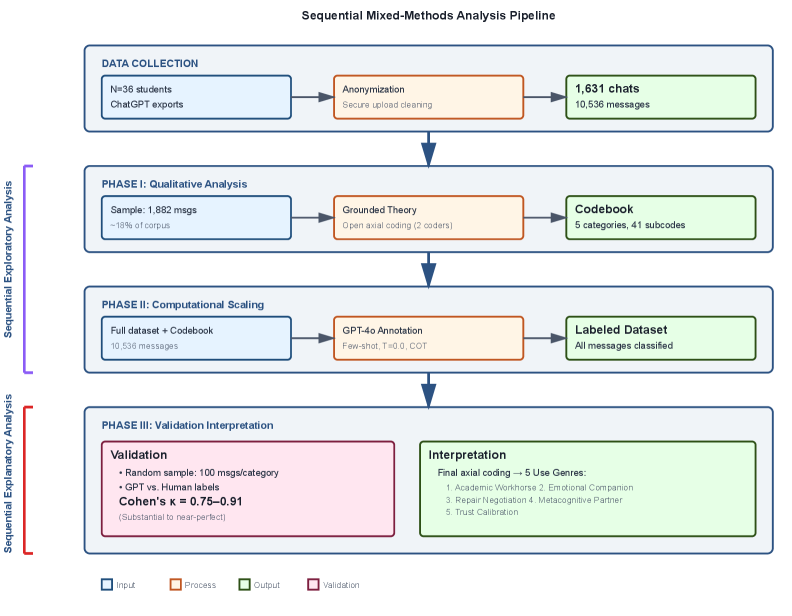

While frameworks for AI literacy abound, empirical understanding of how students actually learn to integrate generative AI remains limited. This research, ‘Learning to Live with AI: How Students Develop AI Literacy Through Naturalistic ChatGPT Interaction’, analyzes more than 10,000 student messages to ChatGPT and identifies five distinct ‘use genres’, ranging from academic assistance to emotional support, that characterize evolving AI competence. The findings indicate that functional AI literacy emerges not through simple adoption but through ongoing negotiation with, and critical appraisal of, AI capabilities, including a crucial skill set the authors term ‘repair literacy’. How can educators build on these naturally occurring interaction patterns to foster responsible AI integration and strengthen student agency in an increasingly AI-mediated world?

The Illusion of Progress: Mapping Student Interaction with AI

The arrival of ChatGPT challenges established methods of teaching and learning. It is not merely a new way to access information; its availability forces educators to set aside assumptions about student behavior and examine how students actually integrate the tool into their academic lives. Traditional educational models center on independent work and direct knowledge acquisition, and ready access to sophisticated AI assistance calls those norms into question. Understanding how students use ChatGPT (for clarification, brainstorming, or more complex tasks) is therefore essential for adapting pedagogy so that learning stays meaningful and effective. The simple question of ‘use’ quickly expands into an investigation of evolving student behavior and of how knowledge itself is produced.

An analysis of 10,536 student messages reveals a relationship with ChatGPT that is far more intricate than simple academic dishonesty. Students are not solely seeking answers; they establish distinct ‘Use Genres’, repeatable patterns of interaction with the AI. Beyond requesting help with assignments, students use ChatGPT to brainstorm, clarify concepts through iterative questioning, and get feedback on their own work. This points to proactive engagement in which students shape the AI’s responses to fit their individual learning needs, a more collaborative and complex dynamic than is often assumed. The data challenge the view of ChatGPT as merely a tool for ‘cheating’ and highlight its evolving role as a versatile learning companion.

The interactions also go beyond assignment completion in another way: students frequently treat the AI as a conversational partner for emotional support and self-reflection. Their messages seek encouragement, work through anxieties about academic performance, and ask for feedback on their own understanding of concepts. Traditional frameworks built around plagiarism or academic dishonesty cannot account for these exchanges. The researchers argue that understanding the ‘Use Genres’ requires treating the AI as a socio-emotional resource, which in turn calls for qualitative methods able to interpret the subtle cues and relational dynamics of these digital dialogues, and that this reframing should change how educators perceive the role of AI in student learning.

Integrating AI into education effectively depends on understanding how students adapt to and interact with these tools. Acknowledging that AI is present is not enough; educators need to look past concerns about academic dishonesty to the actual patterns of student engagement. Tools like ChatGPT are entering students’ learning processes in ways that extend beyond task completion, into emotional support, self-reflection, and personalized learning. By studying these interactions, educators can adapt their pedagogy, design assignments that exploit AI’s strengths while mitigating its weaknesses, and foster genuine understanding and critical thinking rather than rote memorization or superficial completion.

The Allure of the Algorithm: Trust and Emotional Connection

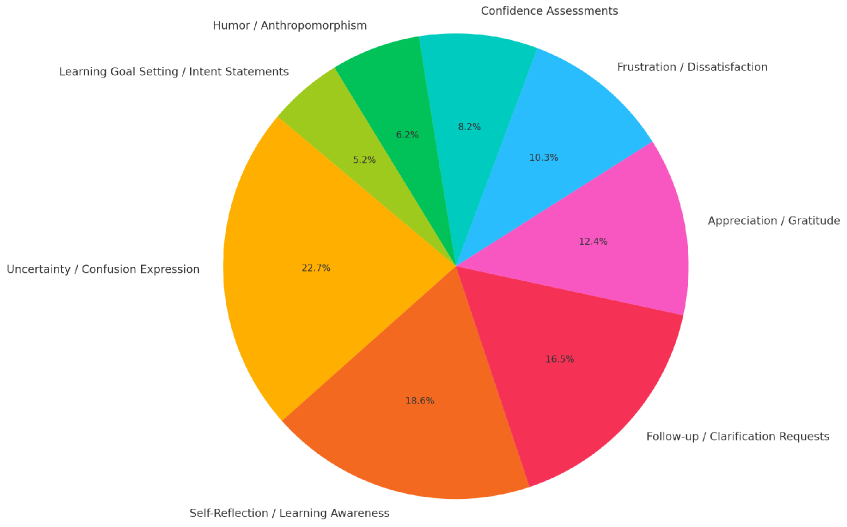

Students increasingly use ChatGPT not only as an information resource but as a conversational partner, seeking emotional support and validation. This pattern, which the study labels the ‘Emotional Companion’ genre, reflects a demand for interaction that goes beyond factual answers. It makes ‘Relational AI Literacy’ essential: understanding the limits of AI as a source of genuine emotional connection, recognizing the risk of reading AI output as empathy, and critically evaluating the socio-emotional effects of these interactions. This literacy extends beyond technical proficiency to include navigating the affective side of human-AI relationships and setting healthy boundaries.

Trust Calibration, in the context of student interaction with ChatGPT, refers to the iterative process by which users evaluate the AI’s responses and adjust how much they rely on its output. The assessment is not static: students continually weigh the perceived accuracy, relevance, and completeness of ChatGPT’s contributions against their existing knowledge and expectations. Early interactions often involve close scrutiny, with students verifying information against other sources. With experience, students may increase their trust and verify less when performance is consistent, or reduce their trust when they detect inconsistencies or errors. This ongoing adjustment matters because it determines how students integrate ChatGPT’s output into their learning and decision-making.

The spread of large language models like ChatGPT is shifting students’ evaluative focus from content accuracy alone to interactional qualities. Students care not only whether the generated text is factually correct but also how the AI responds: its tone, style, and perceived empathy. Evaluation moves from what ChatGPT says to how it communicates, producing a socio-emotional exchange marked by perceptions of rapport and responsiveness. Users thus implicitly judge the AI not just on cognitive tasks but on how well it simulates social interaction, which makes their relationships with these systems more complex.

Because students’ exchanges with AI like ChatGPT carry emotional and relational weight, pedagogy must broaden accordingly. A traditional focus on factual accuracy and cognitive skill development is insufficient; educators also need to address how students form attachments to, seek validation from, and calibrate trust in AI systems. That includes building critical awareness of the risk of emotional dependence, the limits of AI ‘empathy’, and the importance of healthy boundaries in human-AI interaction. Ignoring these dimensions risks hindering students’ socio-emotional development and encouraging unhealthy reliance on AI for emotional support.

The Burden of Correction: Algorithmic Labor and Repair Genres

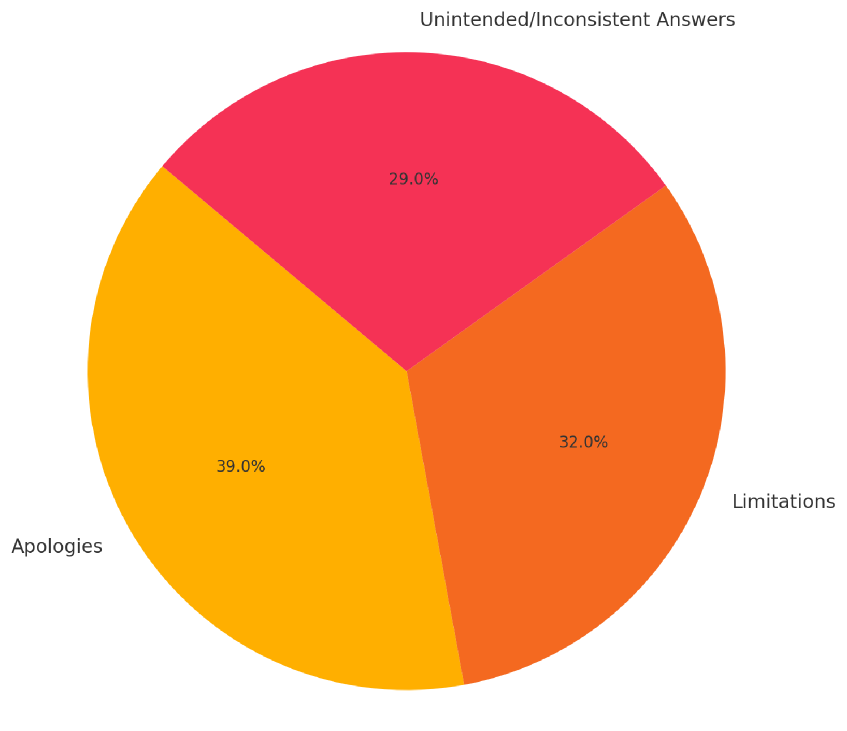

Student interactions with ChatGPT show a prevalent pattern of ‘Repair and Negotiation’, in which users identify and correct inaccuracies, ambiguities, or gaps in the AI’s generated text. This goes beyond simple error reporting: students rephrase prompts, add context, or suggest alternative wording to steer the model toward the output they want. The study frames the cumulative effect of these corrections and refinements as ‘Algorithmic Labor’, a measurable contribution of human effort toward the ongoing training and improvement of the language model, and reports that a substantial proportion of user interactions fall into this category, making it a key mode of engagement with the technology.

This labor is not limited to flagging factual inaccuracies or stylistic problems. Through repeated prompting and evaluation of responses, users give the model both implicit and explicit instruction; they are, in effect, training it, since their feedback shapes its subsequent outputs. In the study dataset, this kind of iterative refinement accounts for a large share of observed interactions, representing considerable user input into the AI’s cycle of improvement.

Student interaction with ChatGPT often goes beyond simple prompt and response: students actively modify and refine the AI’s output. Identifying inadequacies, supplying corrective input, and evaluating revised responses is a clear exercise of user agency. It also builds problem-solving skills, since students must analyze the AI’s reasoning, diagnose errors, and devise strategies to guide the model toward the desired outcome. These skills develop in the novel context of human-AI collaboration and call for a different approach than traditional problem sets.

Iterative refinement of ChatGPT’s outputs also creates opportunities to develop metacognitive skills. Identifying inaccuracies, writing corrective prompts, and evaluating revised responses all require users to monitor their own thinking and their understanding of the task at hand. This ‘repair and negotiation’ forces users to articulate their expectations, analyze the AI’s reasoning, and adapt their strategies, heightening awareness of both their own cognitive processes and the inherent limitations of current AI models, such as susceptibility to bias and factual error.

The Illusion of Partnership: AI as a Metacognitive Tool and Fostering Agency

Another emerging pattern is the ‘Metacognitive Partner’ genre, in which students use the AI not simply for answers but to improve the learning process itself. Students prompt ChatGPT to evaluate their understanding, identify knowledge gaps, and suggest tailored learning strategies, using it as a personalized thinking tool. They request feedback on their reasoning, ask for alternative explanations, and even solicit help planning study sessions. Through this cycle of self-assessment and strategic adaptation, students practice ‘Self-Regulated Learning’ skills, becoming more aware of their own cognitive processes and taking greater control of their education. In this role the AI supports a move from passive reception of information to active construction of knowledge, helping students become more effective and autonomous learners.

Used this way, ChatGPT can act as a personalized learning coach: when students ask it to probe their understanding, it responds with questions and tailored feedback. The interaction encourages self-assessment, prompting learners to identify knowledge gaps and refine their strategies. Through repeated questioning and response, students move toward greater autonomy, learning to monitor their own progress and take ownership of their learning. In principle the AI can serve as a scaffold that students draw on less as their self-regulation improves, fostering a sense of agency and helping them become independent, strategic learners.

This proactive engagement marks a change in how students approach learning. Rather than only receiving information from an instructor or textbook, students use AI tools like ChatGPT to guide their exploration and refine their understanding. The point is augmentation, not automation: students pose questions, evaluate responses, and iterate on their thinking with the AI as a partner. Knowledge acquisition shifts from passive reception to active construction, fostering ownership of the learning process and cultivating self-directed learning skills. Students become active architects of their own education rather than consumers of information, building a deeper and more personalized understanding of complex topics.

Effective integration of ChatGPT depends not simply on access but on cultivating student agency, the ability to direct one’s own learning. The study identifies five distinct ways students currently use the tool: ‘Academic Workhorse’ for straightforward assignments, ‘Repair/Negotiation’ for correcting and steering flawed outputs, ‘Emotional Companion’ for support, ‘Metacognitive Partner’ for self-assessment, and, crucially, ‘Trust Calibration’ for learning to critically evaluate AI outputs. Successful implementation therefore requires equipping students to move beyond passive acceptance and use AI deliberately as a tool for self-directed learning, so that they take genuine ownership of their educational journey.

The study details how students carve out ‘use genres’ with ChatGPT, in effect building workarounds for a tool that’s fundamentally unpredictable. It’s a beautiful, messy process: students aren’t passively accepting the AI’s output; they’re actively negotiating with its limitations. This reminds one of G. H. Hardy’s assertion: “Mathematics may be compared to a box of tools.” Students, much like mathematicians, are selecting and adapting tools (in this case, an imperfect AI) to solve learning problems. The research confirms what experience suggests: elegant theory rarely survives contact with production, and students will always find a way to make even the most flawed system ‘just work’ for them. One suspects they’re leaving excellent notes for future digital archaeologists, documenting every hack and bypass.

What’s Next?

The observation that students carve out ‘use genres’ with these large language models feels…inevitable. It’s always the users who end up doing the real engineering. One suspects this ‘AI literacy’ isn’t some transferable skill, but a highly contextual awareness of what this particular black box will hallucinate today. It used to be a simple bash script, really, and now it’s a probabilistic parrot. The next step isn’t more literacy training; it’s understanding the economic forces that will inevitably standardize those genres. They’ll call it AI-powered learning and raise funding.

The long-term effect of outsourcing cognitive friction remains a genuinely interesting problem. Students aren’t just using ChatGPT; they’re offloading the messy work of synthesis, the uncomfortable process of being wrong. This research hints at a workaround, the development of metacognitive strategies, but doesn’t address whether those strategies scale. Or, frankly, whether anyone will bother studying the inevitable atrophy of baseline skills.

Ultimately, this field will be defined not by elegant frameworks for ‘AI literacy’, but by the predictable accumulation of tech debt. Each new model, each ‘improvement,’ will invalidate the carefully constructed genres documented here. The documentation lied again. The real work will be endlessly patching the interface between human cognition and whatever probabilistic text generator is fashionable next year.

Original article: https://arxiv.org/pdf/2601.20749.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-29 21:45